This past January, the Azure Monitor team announced the stable release of the Azure Monitor Metrics data plane API. This API grants the ability to query metrics for up to 50 Azure resources in a single call, providing a faster and more efficient way to retrieve metrics data for multiple resources.
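Because one call is capped at 50 resources, larger fleets still need several calls. The following is a minimal sketch of splitting resource IDs into batches that respect that limit. It assumes the IDs are plain strings. `batch_resource_ids` and `MAX_RESOURCES_PER_CALL` are hypothetical names and are not part of the Azure SDK.

```python
from typing import Iterator, List, Sequence

# Assumed limit, taken from the announcement: up to 50 resources per call.
MAX_RESOURCES_PER_CALL = 50


def batch_resource_ids(
    resource_ids: Sequence[str], batch_size: int = MAX_RESOURCES_PER_CALL
) -> Iterator[List[str]]:
    """Yield consecutive slices of resource_ids, each holding at most batch_size IDs."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(resource_ids), batch_size):
        yield list(resource_ids[start:start + batch_size])


if __name__ == "__main__":
    ids = [
        "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup"
        f"/providers/Microsoft.Storage/storageAccounts/account{i}"
        for i in range(120)
    ]
    for batch in batch_resource_ids(ids):
        # Each batch would become one multi-resource query (hypothetical usage).
        print(len(batch))
```

With 120 storage accounts, this produces batches of 50, 50, and 20 IDs. That means three calls instead of 120 per-resource calls.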

To allow developers to seamlessly integrate multi-resource metrics queries into their applications, the Azure SDK team is excited to announce support in the latest stable releases of our Azure Monitor Query client libraries.

Sovereign cloud support

At the time of writing, the Azure Monitor Query libraries’ multi-resource metrics query feature is only available to Azure Public Cloud customers. Support for the Azure US Government and Azure China sovereign clouds is planned for later in the calendar year. For more information, see the language-specific GitHub issues:

You can access multi-resource metrics query APIs from the following package versions:

| Ecosystem | Minimum package version |
|---|---|
| .NET | 1.3.0 |
| Go | 1.0.0 |
| Java | 1.3.0 |
| JavaScript | 1.2.0 |
| Python | 1.3.0 |

A new home for multi-resource metrics queries

Earlier versions of the Azure Monitor Query client libraries required sending calls to the Azure Resource Manager APIs to query metrics on a per-resource basis. However, with the introduction of the Azure Monitor Metrics data plane API, a new client, MetricsClient, was added to facilitate data plane metrics operations in the .NET, Java, JavaScript, and Python libraries. For Go, instead of introducing a new client in the existing azquery module, we released the new azmetrics module.

Presently, a multi-resource metrics query is the sole supported operation in MetricsClient and azmetrics. However, we anticipate expanding the clients’ functionality to support other metrics-related query operations in the future.

Developer usage

Developers can now provide a list of resource IDs to the new client operation, eliminating the need for individual calls for each resource. However, to use this API, the following criteria must be satisfied:

- Each resource must be in the same Azure region denoted by the endpoint specified when instantiating the client.

- Each resource must belong to the same Azure subscription.

- The user must have authorization to read monitoring data at the subscription level, for example, the Monitoring Reader role on the subscription to be queried.
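You can check the subscription criterion before sending any request. The following minimal sketch assumes resource IDs in the standard `/subscriptions/{id}/resourceGroups/...` form used in the samples. It confirms that all the IDs share one subscription, and it builds a regional endpoint that follows the `https://{region}.metrics.monitor.azure.com` pattern from the samples. That pattern is assumed to apply only to Azure Public Cloud. `subscription_of`, `common_subscription`, and `metrics_endpoint` are hypothetical helpers, not SDK APIs. A resource ID doesn't encode the resource's region, so you still need to verify region membership separately.

```python
from typing import Sequence

# Hypothetical helpers (not part of the Azure SDK) for pre-checking query criteria.


def subscription_of(resource_id: str) -> str:
    """Return the subscription ID segment of an ARM resource ID."""
    segments = [s for s in resource_id.split("/") if s]
    # Subscription-scoped IDs start with "subscriptions/{subscriptionId}/..."
    if len(segments) < 2 or segments[0].lower() != "subscriptions":
        raise ValueError(f"not a subscription-scoped resource ID: {resource_id}")
    return segments[1]


def common_subscription(resource_ids: Sequence[str]) -> str:
    """Return the shared (lowercased) subscription ID, or raise if there isn't exactly one."""
    subscriptions = {subscription_of(rid).lower() for rid in resource_ids}
    if len(subscriptions) != 1:
        raise ValueError(f"expected one subscription, found {sorted(subscriptions)}")
    return subscriptions.pop()


def metrics_endpoint(region: str) -> str:
    """Build a regional endpoint from a programmatic region name such as "eastus"."""
    # Pattern copied from the samples; assumed valid for Azure Public Cloud only.
    return f"https://{region.lower()}.metrics.monitor.azure.com"
```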

The metric namespace that contains the metrics to be queried must also be specified. You can find a list of metric namespaces in this list of supported metrics by resource type.
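In the samples that follow, the namespace `Microsoft.Storage/storageAccounts` is also the storage account's resource type. The following minimal sketch derives that type path from a resource ID, which can serve as a starting point when looking up the namespace. That the two match is an assumption that often holds for platform metrics but not always, so confirm the namespace against the supported-metrics list. `resource_type_of` is a hypothetical helper, not an SDK API.

```python
def resource_type_of(resource_id: str) -> str:
    """Return the provider namespace and type path of an ARM resource ID (hypothetical helper).

    "/subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.Storage/storageAccounts/acct"
    -> "Microsoft.Storage/storageAccounts"
    """
    segments = [s for s in resource_id.split("/") if s]
    lowered = [s.lower() for s in segments]
    if "providers" not in lowered:
        raise ValueError(f"no providers segment in {resource_id}")
    # Use the last "providers" segment so extension resources resolve to the extension's type.
    idx = len(lowered) - 1 - lowered[::-1].index("providers")
    rest = segments[idx + 1:]
    if len(rest) < 3:
        raise ValueError(f"incomplete resource ID: {resource_id}")
    namespace, types_and_names = rest[0], rest[1:]
    # After the namespace, segments alternate type, name, type, name, ...
    return "/".join([namespace, *types_and_names[0::2]])
```

For a child resource such as `.../storageAccounts/acct/blobServices/default`, the sketch returns `Microsoft.Storage/storageAccounts/blobServices`.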

The following code snippets demonstrate how to query the “Ingress” metric for multiple storage account resources in various languages.

Python

```python
from azure.identity import DefaultAzureCredential
from azure.monitor.query import MetricsClient

# All resources must share one subscription and the region named in the endpoint.
resource_ids = [
    "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount",
    "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount2",
]

credential = DefaultAzureCredential()
# Regional Azure Monitor Metrics data plane endpoint
endpoint = "https://eastus.metrics.monitor.azure.com"
client = MetricsClient(endpoint, credential)

# A single call returns one MetricsQueryResult per resource.
metrics_query_results = client.query_resources(
    resource_ids=resource_ids,
    metric_namespace="Microsoft.Storage/storageAccounts",
    metric_names=["Ingress"],
)

for metrics_query_result in metrics_query_results:
    for metric in metrics_query_result.metrics:
        print(metric.name)
```

Check out the Azure SDK for Python repository for more sample usage.

JavaScript/TypeScript

```typescript
import { DefaultAzureCredential } from "@azure/identity";
import { MetricsClient, MetricsQueryResult } from "@azure/monitor-query";

export async function main(): Promise<void> {
  // All resources must share one subscription and the region named in the endpoint.
  const resourceIds: string[] = [
    "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount",
    "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount2",
  ];
  const metricsNamespace: string = "Microsoft.Storage/storageAccounts";
  const metricNames: string[] = ["Ingress"];
  const endpoint: string = "https://eastus.metrics.monitor.azure.com";

  const credential = new DefaultAzureCredential();
  const metricsClient: MetricsClient = new MetricsClient(endpoint, credential);

  // A single call returns one MetricsQueryResult per resource.
  const result: MetricsQueryResult[] = await metricsClient.queryResources(
    resourceIds,
    metricNames,
    metricsNamespace
  );
  console.log(`Received ${result.length} results`);
}

main().catch((err) => {
  console.error(err);
  process.exit(1);
});
```

Check out the Azure SDK for JavaScript repository for more sample usage.

Java

```java
import com.azure.identity.DefaultAzureCredentialBuilder;
import com.azure.monitor.query.MetricsClient;
import com.azure.monitor.query.MetricsClientBuilder;
import com.azure.monitor.query.models.MetricResult;
import com.azure.monitor.query.models.MetricsQueryResourcesResult;
import com.azure.monitor.query.models.MetricsQueryResult;

import java.util.Arrays;
import java.util.List;

public class MetricsSample {
    public static void main(String[] args) {
        // The endpoint must name the region that all the resources are in.
        MetricsClient metricsClient = new MetricsClientBuilder()
            .credential(new DefaultAzureCredentialBuilder().build())
            .endpoint("https://eastus.metrics.monitor.azure.com")
            .buildClient();

        List<String> resourceIds = Arrays.asList(
            "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount",
            "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount2");

        // Query the "Ingress" metric for every resource in a single call.
        MetricsQueryResourcesResult metricsQueryResourcesResult = metricsClient.queryResources(
            resourceIds,
            Arrays.asList("Ingress"),
            "Microsoft.Storage/storageAccounts");

        for (MetricsQueryResult result : metricsQueryResourcesResult.getMetricsQueryResults()) {
            List<MetricResult> metrics = result.getMetrics();
            for (MetricResult metric : metrics) {
                System.out.println(metric.getMetricName());
            }
        }
    }
}
```

Check out the Azure SDK for Java repository for more sample usage.

.NET

```csharp
using System;
using System.Collections.Generic;
using Azure;
using Azure.Core;
using Azure.Identity;
using Azure.Monitor.Query;
using Azure.Monitor.Query.Models;

// All resources must share one subscription and the region named in the endpoint.
List<ResourceIdentifier> resourceIds = new List<ResourceIdentifier>
{
    new ResourceIdentifier("/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount"),
    new ResourceIdentifier("/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount2")
};

var client = new MetricsClient(
    new Uri("https://eastus.metrics.monitor.azure.com"),
    new DefaultAzureCredential());

// Query the "Ingress" metric for every resource in a single call.
Response<MetricsQueryResourcesResult> result = await client.QueryResourcesAsync(
    resourceIds: resourceIds,
    metricNames: new List<string> { "Ingress" },
    metricNamespace: "Microsoft.Storage/storageAccounts").ConfigureAwait(false);

MetricsQueryResourcesResult metricsQueryResults = result.Value;
```

Check out the Azure SDK for .NET repository for more sample usage.

Go

```go
package main

import (
	"context"
	"log"

	"github.com/Azure/azure-sdk-for-go/sdk/azidentity"
	"github.com/Azure/azure-sdk-for-go/sdk/monitor/query/azmetrics"
)

func main() {
	// The endpoint must name the region that all the resources are in.
	endpoint := "https://eastus.metrics.monitor.azure.com"
	subscriptionID := "00000000-0000-0000-0000-000000000000"
	resourceIDs := []string{
		"/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount",
		"/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/myResourceGroup/providers/Microsoft.Storage/storageAccounts/myStorageAccount2",
	}

	cred, err := azidentity.NewDefaultAzureCredential(nil)
	if err != nil {
		log.Fatalf("failed to create credential: %v", err)
	}

	client, err := azmetrics.NewClient(endpoint, cred, nil)
	if err != nil {
		log.Fatalf("failed to create client: %v", err)
	}

	// Query the "Ingress" metric for every resource in a single call.
	res, err := client.QueryResources(
		context.Background(),
		subscriptionID,
		"Microsoft.Storage/storageAccounts",
		[]string{"Ingress"},
		azmetrics.ResourceIDList{ResourceIDs: resourceIDs},
		nil,
	)
	if err != nil {
		log.Fatalf("failed to query metrics: %v", err)
	}
	_ = res // Process the per-resource results in res here.
}
```

Check out the Azure SDK for Go repository for more sample usage.

Summary

The introduction of the Azure Monitor Metrics data plane API, and support for it in our client libraries, marks a significant advancement in how developers query metrics for Azure resources. Because a single call can cover up to 50 resources that share a subscription and region, this feature simplifies querying multiple resources and greatly reduces the number of HTTP requests that need to be processed. As we continue to enhance these features and expand their capabilities, we look forward to seeing the innovative ways developers use them to optimize their applications and workflows.

Any feedback you have on how we can improve the libraries is greatly appreciated. Let’s have those conversations on GitHub at these locations:

The post Multi-resource metrics query support in the Azure Monitor Query libraries appeared first on Azure SDK Blog.

We are pleased to announce the GA release of enhanced patching capabilities for SQL Server on Azure VMs using Azure Update Manager. When you register your SQL Server on Azure VM with the SQL IaaS Agent extension, you unlock a number of feature benefits, including patch management at scale with Azure Update Manager.

Overview

Azure Update Manager is a unified service to help manage and govern updates for all your machines. You can monitor Windows and Linux update compliance across your deployments in Azure, on-premises, and on other cloud platforms from a single dashboard. By enabling Azure Update Manager, customers will now be able to:

- Perform one-time updates (patch on demand): install updates manually, on demand

- Update management at scale: patch multiple VMs at the same time

- Configure schedules: define robust schedules that patch groups of VMs according to your business needs

- Periodic assessments: automatically check for new updates every 24 hours and identify machines that may be out of compliance

Azure Update Manager can include more update categories, including SQL Server Cumulative Updates (CUs), which it can install automatically. By contrast, the existing Automated Patching feature can install only updates marked Critical or Important.

To get started with Azure Update Manager, go to the SQL virtual machine resource in the Azure portal and choose Updates under Settings.

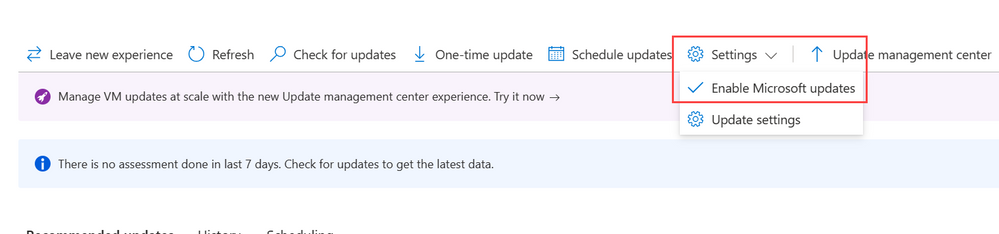

To allow your SQL VM to receive SQL Server updates, you need to enable Microsoft Update.

Migrate from Automated Patching to Azure Update Manager

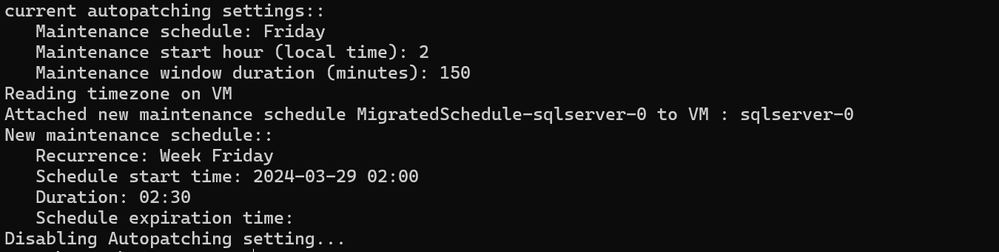

If you currently use the Automated Patching feature offered by the SQL Server IaaS Agent extension and want to migrate to Azure Update Manager, you can use the MigrateSQLVMPatchingSchedule PowerShell module to perform the following steps:

- Disable Automated Patching

- Enable Microsoft Update on the virtual machine

- Create a new maintenance configuration in Azure Update Manager with a similar schedule to Automated Patching

- Assign the virtual machine to the maintenance configuration

To migrate to Azure Update Manager by using PowerShell, use the following sample script:

```powershell
$rgname = 'YourResourceGroup'
$vmname = 'YourVM'

# Install latest migration module
Install-Module -Name MigrateSQLVMPatchingSchedule-Module -Force -AllowClobber

# Import the module
Import-Module MigrateSQLVMPatchingSchedule-Module

Convert-SQLVMPatchingSchedule -ResourceGroupName $rgname -VmName $vmname
```

The output of the script includes details about the old schedule in Automated Patching and the new schedule in Azure Update Manager. For example, if the Automated Patching schedule was every Friday, with a start hour of 2 AM and a duration of 150 minutes, the output describes that schedule alongside the similar schedule created in Azure Update Manager.
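The example schedule maps to a concrete weekly window. A 150-minute window that starts at 2:00 AM on Friday ends at 4:30 AM. The following minimal sketch computes the next such window from a given time. `next_weekly_window` is a hypothetical helper for reasoning about schedules. It is not part of the MigrateSQLVMPatchingSchedule module or Azure Update Manager, it ignores time zones, and it doesn't model the maintenance configuration's own recurrence format.

```python
import calendar
from datetime import datetime, timedelta
from typing import Tuple


def next_weekly_window(
    now: datetime, weekday: int, start_hour: int, duration_minutes: int
) -> Tuple[datetime, datetime]:
    """Return (start, end) of the next weekly window that starts after `now`.

    Hypothetical helper. weekday uses Python's convention (Monday == 0),
    so calendar.FRIDAY == 4. Times are naive; time zones are ignored.
    """
    start = now.replace(hour=start_hour, minute=0, second=0, microsecond=0)
    start += timedelta(days=(weekday - now.weekday()) % 7)
    if start <= now:
        # This week's window has already started or passed; use next week's.
        start += timedelta(days=7)
    return start, start + timedelta(minutes=duration_minutes)


if __name__ == "__main__":
    # Example schedule from the text: every Friday at 2 AM for 150 minutes.
    start, end = next_weekly_window(datetime(2024, 5, 1, 12, 0), calendar.FRIDAY, 2, 150)
    print(start, end)
```

Starting from Wednesday, May 1, 2024 at noon, the next window runs from 2:00 AM to 4:30 AM on Friday, May 3.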

Additional Considerations

If you currently use the SQL IaaS Agent extension for patching, watch for conflicting schedules. Alternatively, consider disabling Automated Patching and migrating to Azure Update Manager to take advantage of its more robust features.

Currently, neither Azure Update Manager nor Automated Patching through the SQL IaaS Agent extension knows whether a SQL Server instance on an Azure VM is part of an Always On availability group. Keep this in mind when you schedule updates with an automated process.

You can always go back to Automated Patching by selecting Leave new experience from the new Updates page.

Learn More

- About Azure Update Manager

- Review the following article about how to check and install on-demand updates

- Schedule patching configuration on Azure VMs

- Managing update configuration settings in Azure Update Manager

- Azure Update Manager for SQL Server on Azure VMs

Glenn is a Principal Product Manager for the App Platform team within the Developer Division at Microsoft, focusing on .NET. Before joining Microsoft, Glenn was a developer in Australia where he worked on software for various government departments.

Topics of Discussion:

[2:47] Glenn’s career path.

[6:33] The old .NET vs the new .NET.

[8:09] .NET was initially Windows-only but is now being rebuilt as open-source, cross-platform software.

[9:40] The evolution of .NET.

[9:53] .NET Core.

[14:04] New features and ideas presented at .NET Conf.

[16:26] Aspire.

[18:58] Every piece of an Aspire solution uses OpenTelemetry as a standard.

[19:26] Redis.

[27:15] Aspire knows the “what” and “how” of deploying to the cloud, without requiring explicit cloud knowledge.

[32:36] The intent of AZD.

[36:57] Handling the components of Aspire.

[40:21] How to add custom resources to Aspire.

[41:00] Opinionated vs non-opinionated development in the .NET ecosystem.

Mentioned in this Episode:

Programming with Palermo — New Video Podcast! Email us at programming@palermo.net.

Clear Measure, Inc. (Sponsor)

.NET DevOps for Azure: A Developer’s Guide to DevOps Architecture the Right Way, by Jeffrey Palermo — Available on Amazon!

Jeffrey Palermo’s Twitter — Follow to stay informed about future events!

Glenn Condron on New Capabilities on .NET - Ep 58

Building Cloud Native Apps with .NET 8

Want to Learn More?

Visit AzureDevOps.Show for show notes and additional episodes.

Download audio: https://traffic.libsyn.com/secure/azuredevops/ADP_293-00-05-48.mp3?dest-id=768873