
Microsoft Copilot Studio and Microsoft Foundry (often referred to as Azure AI Foundry) are two key platforms in Microsoft’s AI ecosystem that allow organizations to create custom AI agents and AI-enabled applications. While both share the goal of enabling businesses to build intelligent, task-oriented “copilot” solutions, they are designed for different audiences and use cases.

To help you decide which path suits your organization, this post provides an educational comparison of Copilot Studio and Azure AI Foundry. It focuses on their unique strengths, where their features overlap and differ, and key criteria such as control requirements, team preferences, and integration needs. By understanding these factors, technical decision-makers, developers, IT admins, and business leaders can confidently select the right platform, or even a hybrid approach, for their AI agent projects.

Copilot Studio and Azure AI Foundry: At a Glance

- Copilot Studio is designed for business teams, pro‑makers, and IT admins who want a managed, low‑code SaaS environment with plug‑and‑play integrations.

- Microsoft Foundry is built for professional developers who need fine‑grained control, customization, and integration into their existing application and cloud infrastructure.

And the good news? Organizations often use both, and the two work well together.

Feature Parity and Key Differences

While both platforms can achieve similar outcomes, they do so via different means. Here’s a high-level comparison of Copilot Studio and Azure AI Foundry:

| Factor | Copilot Studio (SaaS, Low-Code) | Microsoft Foundry, formerly Azure AI Foundry (PaaS, Pro-Code) |
|---|---|---|
| Target Users & Skills | Business domain experts, IT pros, and "pro-makers" comfortable with low-code tools. Little to no coding is required to build agents. Ideal for quick solutions within business units. | Professional developers, software engineers, and data scientists with coding and DevOps expertise. Strong programming skills are needed for custom code, DevOps, and advanced AI scenarios. Suited to complex, large-scale AI projects. |
| Platform Model | Software-as-a-Service, fully managed by Microsoft. Agents and tools are built and run in Microsoft's cloud (the Microsoft 365/Copilot service) with no infrastructure to manage. Provisioning is simplified, updates are automatic, and compliance is built in with the Microsoft 365 environment. | Platform-as-a-Service that runs in your Azure subscription. You deploy and manage the agent's infrastructure (e.g. Azure compute, networking, storage) in your own cloud, which gives you full control over the environment, updates, and data residency. |
| Integration & Data | Out-of-the-box connectors and data integrations for Microsoft 365 (SharePoint, Outlook, Teams) and third-party SaaS via Power Platform connectors. Integrates with business systems without coding, which is ideal for reusing existing Microsoft 365 and Power Platform assets. By default, data remains in Microsoft's cloud, under Microsoft 365 compliance and Purview governance. | Deep custom integration with any system or data source via code. Works natively with Azure services (Azure SQL, Cosmos DB, Functions, Kubernetes, Service Bus, etc.) and can connect to on-premises or multi-cloud resources via custom connectors. Suitable when data or code must stay in your network or cloud for compliance or performance reasons. |
| Development Experience | Low-code, UI-driven development: build agents with visual designers and prompt editors. No-code orchestration through Topics (conversational flows) and Agent Flows (Power Automate). A rich library of pre-built components (tools and capabilities) that Microsoft manages and continuously improves (e.g. Copilot connectors for Microsoft 365, built-in tool evaluations). Emphasizes speed and simplicity over granular control. | Code-first development: a web-based studio plus extensive SDKs, a CLI, and VS Code integration for coding agents and custom tools. Supports full DevOps: GitHub or Azure DevOps for CI/CD, custom testing, version control, and integration with your existing development toolchain. Provides maximum flexibility for bespoke logic, but requires more time and skill, trading immediate simplicity for long-term extensibility. |
| Control & Governance | Managed environment with minimal configuration. Governance is handled through Microsoft's standard Microsoft 365 admin tools (the Microsoft 365 admin center, Entra ID, Microsoft Purview, and Microsoft Defender), which cover identity, access, auditing, and compliance across copilots. Updates and performance optimizations (e.g. tool improvements) are applied automatically by Microsoft. There is limited need (or ability) to tweak infrastructure or model behavior under the hood, which fits organizations that want Microsoft to do the heavy lifting. | A pro-code, Azure-native environment for teams that need full control over the agent runtime, integrations, and development workflow. Full-stack control: you manage how and where agents run. Governance is customizable with Azure's security and monitoring tools: Microsoft Entra ID (formerly Azure AD) for identity and RBAC, Key Vault, network security (private endpoints, VNets), and integrated logging and telemetry via Azure Monitor, Application Insights, etc. Foundry includes a developer control plane for observing, debugging, and evaluating agents during development and at runtime. Ideal for organizations that require fine-grained control, custom compliance configurations, and rigorous LLMOps practices. |
| Deployment Channels | One-click publishing to Microsoft 365 experiences (Teams, Outlook), web chat, SharePoint, email, and more, thanks to Copilot Studio's native multi-channel support. Everything runs in the cloud, so you don't need to host the bot. | Flexible deployment options. Foundry agents can be exposed via APIs or the Activity Protocol and integrated into apps or custom channels using the Microsoft 365 Agents SDK. Agents can also be deployed as web apps, containers, Azure Functions, or behind private endpoints for internal use, so teams can run agents wherever needed (with more setup). |
| Control and Customization | Trades fine-grained control for simplicity and speed. It abstracts away infrastructure and handles many optimizations for you, which accelerates development but limits how deeply you can tweak the agent's behavior. | Gives you extensive control over the agent's architecture, tools, and environment, at the cost of more complex setup and effort. Consider your project's needs: does it demand custom code, specialized model tuning, or on-premises data? If so, Foundry provides the necessary flexibility. |
| Common Scenarios | HR or finance teams building departmental AI assistants; sales operations automating workflows and knowledge retrieval; fusion teams starting quickly without developer-heavy resources. Copilot Studio lets teams build agents quickly without setting up compute, networking, identity, or DevOps pipelines. | Embedding agents in production SaaS apps; teams using professional developer frameworks (Semantic Kernel, LangChain, AutoGen, etc.); multi-agent architectures with complex toolchains; integration with existing application code or multi-cloud architectures; full observability, versioning, instrumentation, or custom DevOps. Foundry is ideal for software engineering teams that need configurability, extensibility, and industrial-grade DevOps. |

Benefits of Combined Use: Embracing a Hybrid Approach

One important insight is that Copilot Studio and Foundry are not mutually exclusive. In fact, Microsoft designed them to be interoperable so that organizations can use both in tandem for different parts of a solution. This is especially relevant for large projects or “fusion teams” that include both low-code creators and pro developers. The pattern many enterprises land on:

- Developers build specialized tools / agents in Foundry

- Makers assemble user-facing workflow experience in Copilot Studio

- Agents can collaborate via agent-to-agent patterns (including A2A, where applicable)

Using both platforms together unlocks the best of both worlds:

- Seamless User Experience: Copilot Studio provides a polished, user-friendly interface for end-users, while Azure AI Foundry handles complex backend logic and data processing.

- Advanced AI Capabilities: Leverage Azure AI Foundry’s extensive model library and orchestration features to build sophisticated agents that can reason, learn, and adapt.

- Scalability & Flexibility: Azure AI Foundry’s cloud-native architecture ensures scalability for high-demand scenarios, while Copilot Studio’s low-code approach accelerates development cycles.

For customers who don't want to decide up front, Microsoft introduced the Microsoft Agent Pre-Purchase Plan (P3), a unified approach to scaling agent initiatives that is part of the broader Agent Factory story and is designed to reduce procurement friction across both platforms.

Security & Compliance using Microsoft Purview

Microsoft Copilot Studio: Microsoft Purview extends enterprise-grade security and compliance to agents built with Microsoft Copilot Studio by bringing AI interaction governance into the same control plane you use for the rest of Microsoft 365. With Purview, you can apply DSPM for AI insights, auditing, and data classification to Copilot Studio prompts and responses, and use familiar compliance capabilities like sensitivity labels, DLP, Insider Risk Management, Communication Compliance, eDiscovery, and Data Lifecycle Management to reduce oversharing risk and support investigations. For agents published to non-Microsoft channels, Purview management can require pay-as-you-go billing, while still using the same Purview policies and reporting workflows teams already rely on.

Microsoft Foundry: Microsoft Purview integrates with Microsoft Foundry to help organizations secure and govern AI interactions (prompts, responses, and related metadata) using Microsoft’s unified data security and compliance capabilities. Once enabled through the Foundry Control Plane or through Microsoft Defender for Cloud in Microsoft Azure Portal, Purview can provide DSPM for AI posture insights plus auditing, data classification, sensitivity labels, and enforcement-oriented controls like DLP, along with downstream compliance workflows such as Insider Risk, Communication Compliance, eDiscovery, and Data Lifecycle Management. This lets security and compliance teams apply consistent policies across AI apps and agents in Foundry, while gaining visibility and governance through the same Purview portal and reports used across the enterprise.

Conclusion

When it comes to Copilot Studio vs. Azure AI Foundry, there is no universally “best” choice – the ideal platform depends on your team’s composition and project requirements. Copilot Studio excels at enabling functional business teams and IT pros to build AI assistants quickly in a managed, compliant environment with minimal coding. Azure AI Foundry shines for developer-centric projects that need maximal flexibility, custom code, and deep integration with enterprise systems. The key is to identify what level of control, speed, and skill your scenario calls for. Use both together to build end-to-end intelligent systems that combine ease of use with powerful backend intelligence.

By thoughtfully aligning the platform with your team's strengths and needs, you can minimize friction and maximize momentum on your AI agent journey, delivering custom copilot solutions that are both quick to market and built for the long haul.

Resources to explore

Use Microsoft Purview to manage data security & compliance for Microsoft Copilot Studio

Use Microsoft Purview to manage data security & compliance for Microsoft Foundry

Optimize Microsoft Foundry and Copilot Credit costs with Microsoft Agent pre-purchase plan

Johan shows how to use code metrics to help identify risk in your codebase.

⌚ Chapters:

00:00 Welcome

03:50 Overview of demo app

04:30 Generating code metrics for the app

06:00 Using GitHub Copilot to help understand code metrics

08:00 Measuring class coupling

11:50 Measuring depth of inheritance

13:50 Measuring cyclomatic complexity

19:25 Measuring maintainability

21:30 Discussion

24:20 Wrap-up

🎙️ Featuring: Robert Green and Johan Smarius

Today in this short episode of developer news we cover #Gemini and #GitHub news!

00:00 Intro

00:12 Google

00:36 GitHub

-----

Links

Google

• You can now verify Google AI-generated videos in the Gemini app - https://blog.google/technology/ai/verify-google-ai-videos-gemini-app/

GitHub

• Reduced pricing for GitHub-hosted runners usage - https://github.blog/changelog/2026-01-01-reduced-pricing-for-github-hosted-runners-usage/

-----

🐦X: https://x.com/theredcuber

🐙Github: https://github.com/noraa-junker

📃My website: https://noraajunker.ch

- ty: An extremely fast Python type checker and LSP

- Python Supply Chain Security Made Easy

- typing_extensions

- MI6 chief: We'll be as fluent in Python as we are in Russian

- Extras

- Joke

About the show

Connect with the hosts

- Michael: @mkennedy@fosstodon.org / @mkennedy.codes (bsky)

- Brian: @brianokken@fosstodon.org / @brianokken.bsky.social

- Show: @pythonbytes@fosstodon.org / @pythonbytes.fm (bsky)

Join us on YouTube at pythonbytes.fm/live to be part of the audience. Usually Monday at 10am PT. Older video versions available there too.

Finally, if you want an artisanal, hand-crafted digest of every week of the show notes in email form? Add your name and email to our friends of the show list, we'll never share it.

Brian #1: ty: An extremely fast Python type checker and LSP

- Charlie Marsh announced the Beta release of ty on Dec 16, “designed as an alternative to tools like mypy, Pyright, and Pylance.”

- Extremely fast, even on the first run

- Successive runs are incremental, only rerunning necessary computations as a user edits a file or function. This allows live updates.

- Includes nice visual diagnostics much like color enhanced tracebacks

- Extensive configuration control

- Handy if you want to gradually fix ty's warnings in a project

- Also released a nice VSCode (or Cursor) extension

- Check the docs. There are lots of features.

- Also a note about disabling the default language server (or disabling ty’s language server) so you don’t have 2 running

Michael #2: Python Supply Chain Security Made Easy

- We know about supply chain security issues, but what can you do?

- Typosquatting (not great)

- Github/PyPI account take-overs (very bad)

- Enter pip-audit.

- Run it in two ways:

- Against your installed dependencies in current venv

- As a proper unit test (so when running pytest or CI/CD).

- Let others find out first, wait a week on all dependency updates:

uv pip compile requirements.piptools --upgrade --output-file requirements.txt --exclude-newer "1 week"

- Follow up article: DevOps Python Supply Chain Security

- Create a dedicated Docker image for testing dependencies with pip-audit in isolation before installing them into your venv.

- Run pip-compile / uv lock --upgrade to generate the new lock file

- Test in an ephemeral, pip-audit-optimized Docker container

- Only then if things pass, uv pip install / uv sync

- Add a dedicated Docker image build step that fails the docker build if a vulnerable package is found.


Brian #3: typing_extensions

- Kind of a followup on the deprecation warning topic we were talking about in December.

- prioinv on Mastodon notified us that the project typing-extensions includes it as part of the backport set.

- The warnings.deprecated decorator is new to Python 3.13, but with typing-extensions, you can use it in previous versions.

- But typing_extensions is way cooler than just that.

- The module serves 2 purposes:

- Enable use of new type system features on older Python versions.

- Enable experimentation with type system features proposed in new PEPs before they are accepted and added to the typing module.

- So cool.

- There’s a lot of features here. I’m hoping it allows someone to use the latest typing syntax across multiple Python versions.

- I’m “tentatively” excited. But I’m bracing for someone to tell me why it’s not a silver bullet.

Michael #4: MI6 chief: We'll be as fluent in Python as we are in Russian

- "Advances in artificial intelligence, biotechnology and quantum computing are not only revolutionizing economies but rewriting the reality of conflict, as they 'converge' to create science fiction-like tools,” said new MI6 chief Blaise Metreweli.

- She focused mainly on threats from Russia, which she said is "testing us in the grey zone with tactics that are just below the threshold of war."

- This demands what she called "mastery of technology" across the service, with officers required to become "as comfortable with lines of code as we are with human sources, as fluent in Python as we are in multiple other languages."

- Recruitment will target linguists, data scientists, engineers, and technologists alike.

Extras

Brian:

- Next chapter of Lean TDD being released today, Finding Waste in TDD

- Still going to attempt a Jan 31 deadline for first draft of book.

- That really doesn’t seem like enough time, but I’m optimistic.

- SteamDeck is not helping me find time to write

- But I very much appreciate the gift from my fam

- Send me game suggestions on Mastodon or Bluesky. I’d love to hear what you all are playing.

Michael:

- Astral has announced the Beta release of ty, which they say they are "ready to recommend to motivated users for production use."

- Reuven Lerner has a video series on Pandas 3

Joke: Error Handling in the age of AI

- Play on the inversion of JavaScript the Good Parts

Download audio: https://pythonbytes.fm/episodes/download/464/malicious-package-no-build-for-you.mp3

In this post I describe some of the recent updates added in version 1.0.0-beta19 of my source generator NuGet package NetEscapades.EnumGenerators, which you can use to add fast methods for working with enums. I start by briefly describing why the package exists and what you can use it for, then I walk through some of the changes in the latest release.

Why should you use an enum source generator?

NetEscapades.EnumGenerators provides a source generator that is designed to work around an annoying characteristic of working with enums: some operations are surprisingly slow.

As an example, let's say you have the following enum:

```csharp
public enum Colour
{
    Red = 0,
    Blue = 1,
}
```

At some point, you want to print the name of a Colour variable, so you create this helper method:

```csharp
public void PrintColour(Colour colour)
{
    Console.WriteLine("You chose " + colour.ToString()); // You chose Red
}
```

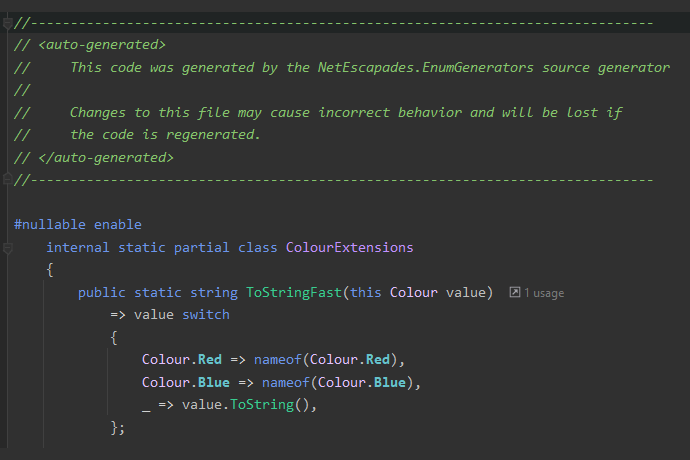

While this looks like it should be fast, it's really not. NetEscapades.EnumGenerators works by automatically generating an implementation that is fast. It generates a ToStringFast() method that looks something like this:

```csharp
public static class ColourExtensions
{
    // Extension methods must be static
    public static string ToStringFast(this Colour colour)
        => colour switch
        {
            Colour.Red => nameof(Colour.Red),
            Colour.Blue => nameof(Colour.Blue),
            _ => colour.ToString(),
        };
}
```

This simple switch expression checks for each of the known values of Colour and uses nameof to return the textual representation of the enum. If it's an unknown value, it falls back to the built-in ToString() implementation, which keeps the handling of unknown values simple (for example, this is valid C#: PrintColour((Colour)123)).
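To see that fallback in action, here is a minimal, self-contained sketch that hand-writes the same pattern for Colour. The class name ColourExtensionsSketch is hypothetical, and the real generated code may differ in detail: known members return compile-time constants, and anything else defers to the built-in ToString().

```csharp
public enum Colour
{
    Red = 0,
    Blue = 1,
}

// Hypothetical hand-written equivalent of the generated ToStringFast();
// not the generator's actual output.
public static class ColourExtensionsSketch
{
    public static string ToStringFast(this Colour colour) => colour switch
    {
        // Known members: nameof() is a compile-time constant, so no allocation
        Colour.Red => nameof(Colour.Red),
        Colour.Blue => nameof(Colour.Blue),
        // Unknown values such as (Colour)123 use the built-in behaviour
        _ => colour.ToString(),
    };
}
```

With this in place, ((Colour)123).ToStringFast() produces "123", exactly what ((Colour)123).ToString() produces, so callers see no behavioural difference for undefined values.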

If we compare these two implementations using BenchmarkDotNet for a known colour, you can see how much faster the ToStringFast() implementation is, even in .NET 10:

| Method | Mean | Error | StdDev | Median | Gen0 | Allocated |
|---|---|---|---|---|---|---|
| ToString | 6.4389 ns | 0.1038 ns | 0.0971 ns | 6.4567 ns | 0.0038 | 24 B |
| ToStringFast | 0.0050 ns | 0.0202 ns | 0.0189 ns | 0.0000 ns | - | - |

Obviously your mileage may vary and the results will depend on the specific enum and which of the generated methods you're using, but in general, using the source generator should give you a free performance boost!

If you want to learn more about all the features the package provides, check my previous blog posts or see the project README.

That's the basics of why I think you should take a look at the source generator. Now let's take a look at the latest features added.

Updates in 1.0.0-beta19

Version 1.0.0-beta19 of NetEscapades.EnumGenerators was released to nuget.org recently and includes a number of new features. I'll describe each of the updates in more detail below, covering the following:

- Support for disabling number parsing.

- Support for automatically calling ToLowerInvariant() or ToUpperInvariant() on the serialized enum.

- Support for ReadOnlySpan<T> APIs when using the System.Memory NuGet package.

There are other fixes and features in 1.0.0-beta19, but these are the ones I'm focusing on in this post. I'll show some of the other features in the next post!

Support for disabling number parsing and additional options

The first feature addresses a long-standing request: disabling the fallback "number parsing" implemented in Parse() and TryParse(). For clarity I'll provide a brief example. Let's take the Colour example again:

```csharp
[EnumExtensions]
public enum Colour
{
    Red = 0,
    Blue = 1,
}
```

As well as ToStringFast(), we generate similar "fast" Parse(), TryParse(), and most of the other System.Enum static methods that you might expect. The TryParse() method for the above code looks something like this:

```csharp
public static bool TryParse(string? name, out Colour value)
{
    switch (name)
    {
        case nameof(Colour.Red):
            value = Colour.Red;
            return true;
        case nameof(Colour.Blue):
            value = Colour.Blue;
            return true;
        case string s when int.TryParse(name, out var val):
            value = (Colour)val;
            return true;
        default:
            value = default;
            return false;
    }
}
```

The first two branches in this example are what you might expect; the source generator generates an explicit switch statement for the Colour enum. However, the third case may look a little odd. The problem is that enums in C# are not a closed list of values; you can always do something like this:

```csharp
Colour valid = (Colour)123;
string stillValid = valid.ToString(); // "123"
Colour parsed = Enum.Parse<Colour>(stillValid); // 123
Console.WriteLine(valid == parsed); // true
```

Essentially, you can pretty much parse any integer that has been ToString()ed as any enum. That's why the source generated code includes the case statement to try parsing as an integer—it's to ensure compatibility with the "built-in" Enum.Parse() and TryParse() behaviour.

However, that behaviour isn't always what you want. Arguably, it's rarely what you want, hence the request to allow disabling it.

Given it makes so much sense to allow disabling it, it's perhaps a little embarrassing that it took so long to address. I dragged my feet on it for several reasons:

- The built-in System.Enum works like this, and I want to be as drop-in compatible as possible.

- There's a trivial "fix": check that the first character is not a digit before calling TryParse, e.g. if (!char.IsDigit(text[0]) && ColourExtensions.TryParse(text, out var value))

- It's another configuration switch that would need to be added to Parse and TryParse.
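The "trivial fix" above only inspects the first character, so it still lets through inputs such as "-1" or " 1" that int.TryParse() accepts, and text[0] throws on an empty string. A slightly more defensive sketch, assuming a hand-written TryParse that mirrors the generated one shown earlier (the class ColourParsingSketch and the method TryParseNameOnly are hypothetical), rejects anything that parses as an integer before doing the name lookup:

```csharp
#nullable enable

public enum Colour
{
    Red = 0,
    Blue = 1,
}

public static class ColourParsingSketch
{
    // Hypothetical stand-in for the generated TryParse(): names first, then the
    // int.TryParse() fallback that mirrors System.Enum's behaviour.
    public static bool TryParse(string? name, out Colour value)
    {
        switch (name)
        {
            case nameof(Colour.Red):
                value = Colour.Red;
                return true;
            case nameof(Colour.Blue):
                value = Colour.Blue;
                return true;
            case string s when int.TryParse(s, out var number):
                value = (Colour)number;
                return true;
            default:
                value = default;
                return false;
        }
    }

    // Hypothetical guard: reject null/empty input and anything int.TryParse()
    // would accept (digits, a leading sign, surrounding whitespace) up front.
    public static bool TryParseNameOnly(string? text, out Colour value)
    {
        if (string.IsNullOrEmpty(text) || int.TryParse(text, out _))
        {
            value = default;
            return false;
        }

        return TryParse(text, out value);
    }
}
```

Reusing int.TryParse() as the guard means the check rejects exactly the inputs the numeric fallback would have accepted, rather than approximating them with a first-character test.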

Of all the reasons, the extra configuration switch is the one that vexed me. There were already two configuration knobs for Parse and TryParse, as well as versions that accept both string and ReadOnlySpan<char>:

```csharp
public static partial class ColourExtensions
{
    public static Colour Parse(string? name);
    public static Colour Parse(string? name, bool ignoreCase);
    public static Colour Parse(string? name, bool ignoreCase, bool allowMatchingMetadataAttribute);
    public static Colour Parse(ReadOnlySpan<char> name);
    public static Colour Parse(ReadOnlySpan<char> name, bool ignoreCase);
    public static Colour Parse(ReadOnlySpan<char> name, bool ignoreCase, bool allowMatchingMetadataAttribute);
}
```

My concern was adding more and more parameters or overloads would make the generated API harder to understand. The simple answer was to take the classic approach of introducing an "options" object. This encapsulates all the available options in a single parameter, and can be extended without increasing the complexity of the generated APIs:

```csharp
public static partial class ColourExtensions
{
    public static Colour Parse(string? name, EnumParseOptions options);
    public static Colour Parse(ReadOnlySpan<char> name, EnumParseOptions options);
}
```

The EnumParseOptions object is defined in the source generator dll that's referenced by your application, so it becomes part of the public API. It's defined as a readonly struct to avoid allocating an object on the heap just to call Parse (which would negate some of the benefits of these APIs).

```csharp
public readonly struct EnumParseOptions
{
    private readonly StringComparison? _comparisonType;
    private readonly bool _blockNumberParsing;

    public EnumParseOptions(
        StringComparison comparisonType = StringComparison.Ordinal,
        bool allowMatchingMetadataAttribute = false,
        bool enableNumberParsing = true)
    {
        _comparisonType = comparisonType;
        AllowMatchingMetadataAttribute = allowMatchingMetadataAttribute;
        _blockNumberParsing = !enableNumberParsing;
    }

    public StringComparison ComparisonType => _comparisonType ?? StringComparison.Ordinal;
    public bool AllowMatchingMetadataAttribute { get; }
    public bool EnableNumberParsing => !_blockNumberParsing;
}
```

The main difficulty in designing this object was that the default values (i.e. (EnumParseOptions)default) had to match the "default" values used in other APIs, i.e.

- Case-sensitive (ordinal) matching

- Number parsing enabled

- Don't match metadata attributes (see my previous post about recent changes to metadata attributes!).
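The reason for the nullable _comparisonType field and the inverted _blockNumberParsing flag is that default(T) for a struct zero-initializes every field and never runs a constructor or property initializer. The sketch below contrasts a naive design with the inverted one (both struct names are hypothetical); note that the zero value of StringComparison is CurrentCulture, not Ordinal.

```csharp
using System;

// Naive (hypothetical) design: the initializers only run when a constructor
// runs, so default(NaiveParseOptions) ends up with CurrentCulture and false.
public readonly struct NaiveParseOptions
{
    public NaiveParseOptions() { } // explicit parameterless constructor (C# 10+)

    public StringComparison ComparisonType { get; init; } = StringComparison.Ordinal;
    public bool EnableNumberParsing { get; init; } = true;
}

// Inverted (hypothetical) design using the same trick as EnumParseOptions:
// the all-zero state of each field maps onto the desired default.
public readonly struct InvertedParseOptions
{
    private readonly StringComparison? _comparisonType; // null => Ordinal
    private readonly bool _blockNumberParsing;           // false => parsing enabled

    public InvertedParseOptions(
        StringComparison comparisonType = StringComparison.Ordinal,
        bool enableNumberParsing = true)
    {
        _comparisonType = comparisonType;
        _blockNumberParsing = !enableNumberParsing;
    }

    public StringComparison ComparisonType => _comparisonType ?? StringComparison.Ordinal;
    public bool EnableNumberParsing => !_blockNumberParsing;
}
```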

The final object ticks all those boxes, and it means you can now disable number parsing, using code like this:

```csharp
string someNumber = "123";
ColourExtensions.Parse(someNumber, new EnumParseOptions(enableNumberParsing: false)); // throws ArgumentException
```

As well as letting you disable number parsing, this also provided a way to sneak in the ability to use any type of StringComparison in the Parse and TryParse methods, instead of only supporting Ordinal and OrdinalIgnoreCase.
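Supporting an arbitrary StringComparison means the name lookup can no longer be a plain switch on string constants, because that always matches ordinally. A minimal sketch of how a TryParse could honor both options, assuming a hypothetical ParseOptionsSketch struct shaped like EnumParseOptions (this is an illustration, not the generator's actual output):

```csharp
#nullable enable
using System;

public enum Colour
{
    Red = 0,
    Blue = 1,
}

// Hypothetical stand-in shaped like EnumParseOptions from the post.
public readonly struct ParseOptionsSketch
{
    private readonly StringComparison? _comparisonType;
    private readonly bool _blockNumberParsing;

    public ParseOptionsSketch(
        StringComparison comparisonType = StringComparison.Ordinal,
        bool enableNumberParsing = true)
    {
        _comparisonType = comparisonType;
        _blockNumberParsing = !enableNumberParsing;
    }

    public StringComparison ComparisonType => _comparisonType ?? StringComparison.Ordinal;
    public bool EnableNumberParsing => !_blockNumberParsing;
}

public static class ColourParsingWithOptionsSketch
{
    public static bool TryParse(string? name, ParseOptionsSketch options, out Colour value)
    {
        if (name is null)
        {
            value = default;
            return false;
        }

        // Explicit comparisons so that any StringComparison is honoured
        if (string.Equals(name, nameof(Colour.Red), options.ComparisonType))
        {
            value = Colour.Red;
            return true;
        }

        if (string.Equals(name, nameof(Colour.Blue), options.ComparisonType))
        {
            value = Colour.Blue;
            return true;
        }

        // Only fall back to System.Enum-style number parsing when enabled
        if (options.EnableNumberParsing && int.TryParse(name, out var number))
        {
            value = (Colour)number;
            return true;
        }

        value = default;
        return false;
    }
}
```

Passing default for the options gives the same behaviour as the original overloads: ordinal, case-sensitive matching with number parsing enabled.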

Support for automatic ToLowerInvariant() calls

The next feature was also a feature request that's been around for a long time, the ability to do the equivalent of ToString().ToLowerInvariant() but without the intermediate allocation, and with the transformation done at compile time, essentially generating something similar to these:

```csharp
public static class ColourExtensions
{
    public static string ToStringLowerInvariant(this Colour colour) => colour switch
    {
        Colour.Red => "red",
        Colour.Blue => "blue",
        _ => colour.ToString().ToLowerInvariant(),
    };

    public static string ToStringUpperInvariant(this Colour colour) => colour switch
    {
        Colour.Red => "RED",
        Colour.Blue => "BLUE",
        _ => colour.ToString().ToUpperInvariant(),
    };
}
```

There are various reasons you might want to do that, for example if third-party APIs require that you use upper/lower for your enums, but you want to keep your definitions as canonical C# naming. This was another case where I could see the value, but I didn't really want to add it, as it looked like it would be a headache. ToStringLower() is kind of ugly, and there would be a bunch of extra overloads required again.

Just as for the number parsing scenario, the solution I settled on was to add a SerializationOptions object that supports a SerializationTransform, which can potentially be extended in the future if required (though I'm not chomping at the bit to add more options right now!)

```csharp
public readonly struct SerializationOptions
{
    public SerializationOptions(
        bool useMetadataAttributes = false,
        SerializationTransform transform = SerializationTransform.None)
    {
        UseMetadataAttributes = useMetadataAttributes;
        Transform = transform;
    }

    public bool UseMetadataAttributes { get; }
    public SerializationTransform Transform { get; }
}

public enum SerializationTransform
{
    None,
    LowerInvariant,
    UpperInvariant,
}
```

You can then use the ToStringFast() overload that takes a SerializationOptions object, and it will output the lower-case version of your enum without you needing to call ToLowerInvariant() on the result:

```csharp
var colour = Colour.Red;
Console.WriteLine(colour.ToStringFast(new(transform: SerializationTransform.LowerInvariant))); // red
```

It's not the tersest of syntax, but there are ways to clean that up, and it means that adding additional options later, if required, should be less of an issue; we shall see. In the short term, it means that you can now use this feature if you find yourself needing to call ToLowerInvariant() or ToUpperInvariant() on your enums.
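Conceptually, the transform just selects between sets of precomputed string constants, so for known members no case conversion happens at runtime. A hand-written sketch of that idea, using a hypothetical CaseTransform enum in place of the package's SerializationTransform (the generated code may be structured differently):

```csharp
public enum Colour
{
    Red = 0,
    Blue = 1,
}

// Hypothetical stand-in for SerializationTransform.
public enum CaseTransform
{
    None,
    LowerInvariant,
    UpperInvariant,
}

public static class ColourTransformSketch
{
    // Known members map to compile-time constants; only unknown values
    // (e.g. (Colour)42) pay for ToString() plus a case conversion.
    public static string ToStringFast(this Colour colour, CaseTransform transform) => transform switch
    {
        CaseTransform.LowerInvariant => colour switch
        {
            Colour.Red => "red",
            Colour.Blue => "blue",
            _ => colour.ToString().ToLowerInvariant(),
        },
        CaseTransform.UpperInvariant => colour switch
        {
            Colour.Red => "RED",
            Colour.Blue => "BLUE",
            _ => colour.ToString().ToUpperInvariant(),
        },
        _ => colour switch
        {
            Colour.Red => nameof(Colour.Red),
            Colour.Blue => nameof(Colour.Blue),
            _ => colour.ToString(),
        },
    };
}
```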

Support for the System.Memory NuGet package

From the start, NetEscapades.EnumGenerators has had support for parsing values from ReadOnlySpan<char>, just like the modern APIs in System.Enum do (but faster 😉). However, these APIs have always been guarded by a pre-processor directive; if you're using .NET Core 2.1+ or .NET Standard 2.1 then they're available, but if you're using .NET Framework, or .NET Standard 2.0, then they're not available.

```csharp
#if NETCOREAPP2_1_OR_GREATER || NETSTANDARD2_1_OR_GREATER
public static bool IsDefined(in ReadOnlySpan<char> name);
public static bool IsDefined(in ReadOnlySpan<char> name, bool allowMatchingMetadataAttribute);
public static Colour Parse(in ReadOnlySpan<char> name);
public static bool TryParse(in ReadOnlySpan<char> name, out Colour colour);
// etc
#endif
```

In my experience, this isn't a massive problem these days. If you're targeting any version of .NET Core or modern .NET, then you have the APIs. If, on the other hand, you're on .NET Framework, then the speed of Enum.ToString() will really be the least of your performance worries, and the lack of the APIs probably doesn't matter that much.

Where it could still be an issue is .NET Standard 2.0. It's not recommended to use this target if you're creating libraries for .NET Core or modern .NET, but if you need to target both .NET Core and .NET Framework, then you don't necessarily have a lot of choice: you need to use .NET Standard.

What's more, there's a System.Memory NuGet package that provides polyfills for many of the APIs, in particular ReadOnlySpan<char>.

As I understand it, the polyfill implementation isn't generally as fast as the built-in version, but it's still something of an improvement!

So the new feature in 1.0.0-beta19 of NetEscapades.EnumGenerators is that you can define an MSBuild property, EnumGenerator_UseSystemMemory=true, and the ReadOnlySpan<char> APIs will be available where previously they wouldn't be. Note that you only need to define this if you're targeting .NET Framework or .NET Standard 2.0 and want the ReadOnlySpan<char> APIs.

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <TargetFrameworks>netstandard2.0</TargetFrameworks>
    <!-- 👇Setting this in a .NET Standard 2.0 project enables the ReadOnlySpan<char> APIs-->
    <EnumGenerator_UseSystemMemory>true</EnumGenerator_UseSystemMemory>
  </PropertyGroup>

  <ItemGroup>
    <PackageReference Include="NetEscapades.EnumGenerators" Version="1.0.0-beta19" />
    <PackageReference Include="System.Memory" Version="4.6.3" />
  </ItemGroup>

</Project>
```

Setting this property defines a constant, NETESCAPADES_ENUMGENERATORS_SYSTEM_MEMORY, which the updated generated code similarly predicates on:

```csharp
// 👇 New in 1.0.0-beta19
#if NETCOREAPP2_1_OR_GREATER || NETSTANDARD2_1_OR_GREATER || NETESCAPADES_ENUMGENERATORS_SYSTEM_MEMORY
public static bool IsDefined(in ReadOnlySpan<char> name);
public static bool IsDefined(in ReadOnlySpan<char> name, bool allowMatchingMetadataAttribute);
public static Colour Parse(in ReadOnlySpan<char> name);
public static bool TryParse(in ReadOnlySpan<char> name, out Colour colour);
// etc
#endif
```

There are some caveats:

- The System.Memory package doesn't provide an implementation of int.TryParse() that works with ReadOnlySpan<char>, so if:

  - you're attempting to parse a ReadOnlySpan<char>,

  - the ReadOnlySpan<char> doesn't match one of your enum's member names,

  - and you haven't disabled number parsing,

  then the APIs may allocate, so that they can call int.TryParse(string).

- The XML docs include additional warnings about the above scenario.

- If you set the property but haven't added a reference to System.Memory, you'll get compilation warnings.
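The allocation caveat comes down to which int.TryParse() overloads exist for the target framework. A hedged sketch of the kind of conditional code involved (SpanNumberParsingSketch and TryParseInt are hypothetical names, not the generated code): on .NET Core 2.1+ and .NET Standard 2.1 the span overload is built in, whereas with only the System.Memory polyfill the span has to be copied into a string first.

```csharp
using System;

public static class SpanNumberParsingSketch
{
    public static bool TryParseInt(ReadOnlySpan<char> text, out int value)
    {
#if NETCOREAPP2_1_OR_GREATER || NETSTANDARD2_1_OR_GREATER
        // Built-in span overload: parses in place, no allocation
        return int.TryParse(text, out value);
#else
        // System.Memory provides ReadOnlySpan<char>, but int.TryParse has no span
        // overload here, so ToString() copies the span into a new string
        return int.TryParse(text.ToString(), out value);
#endif
    }
}
```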

As part of shipping the feature, I've also tentatively added "detection" of referencing the System.Memory NuGet package in the package .targets file, so that EnumGenerator_UseSystemMemory=true should be automatically set, simply by referencing System.Memory. However, I consider this part somewhat experimental, as it's not something I've tried to do before, I'm not sure it's something you should do, and I'm a long way from thinking it'll work 100% of the time 😅 I'd be interested in feedback on how I should do this, and/or whether it works for you!

I also experimented with a variety of other approaches. Instead of using a defined constant, you could also detect the availability of ReadOnlySpan<char> in the generator itself, and emit different code entirely. But you'd still need to detect whether the int.TryParse() overloads are available (i.e. whether ReadOnlySpan<char> is "built in" or package-provided), and overall it seemed far more complex to handle than the approach I settled on. I'm still somewhat torn though, and may revert to this approach in the future. And I'm open to other suggestions!

So in summary, if you're using NetEscapades.EnumGenerators in a netstandard2.0 or .NET Framework package, and you're already referencing System.Memory, then in theory you should magically get additional ReadOnlySpan<char> APIs by updating to 1.0.0-beta19. If that's not the case, then I'd love it if you could raise an issue so we can understand why, but you can also simply set EnumGenerator_UseSystemMemory=true to get all that performance goodness guaranteed.

Before I close, I'd like to say a big thank you to everyone who has raised issues and PRs for the project, especially Paulo Morgado for his discussion and work around the System.Memory feature! All feedback is greatly appreciated, so do raise an issue if you have any problems.

When is a non-pre-release 1.0.0 release coming?

Soon. I promise 😅 So give the new version a try and flag any issues so they can be fixed before we settle on the final API for once and for all! 😀

Summary

In this post I walked through some of the recent updates to NetEscapades.EnumGenerators shipped in version 1.0.0-beta19. I showed how introducing options objects for both the Parse()/TryParse() and ToStringFast() methods allowed introducing new features such as disabling number parsing and serializing directly with ToLowerInvariant(). Finally, I showed the new support for ReadOnlySpan<char> APIs when using .NET Framework or .NET Standard 2.0 with the System.Memory NuGet package. If you haven't already, I recommend updating and giving it a try! If you run into any problems, please do log an issue on GitHub.🙂