Introduction

When you have an existing deployed REST API that you want to change, you generally need to be very careful. Why would you want to change it? There can be a number of reasons:

- Changes to the request contract (adding, removing or modifying request parameters)
- Changes to the response contract (same as above)
- Changes to the behaviour of the existing actions (they now do something slightly different)
- Changes to the HTTP methods that the existing actions accept (not so common)
- Changes to the HTTP return status codes
- …

In a controlled environment, you can change the server and the client implementations at the same time and update your clients accordingly. Unfortunately, this is not always the case. Enter versioning!

With versioned APIs, you can expose multiple versions of your API side by side: one that legacy clients still recognise and can talk to, and possibly one or more that better suit the new requirements. Each client requests the version it knows about; if it does not specify one (legacy clients may not know about versioning), a default version is used. Fortunately, ASP.NET Core MVC (and minimal APIs too, but that will be for another post) supports this quite nicely: it lets you have multiple endpoints that map to the same route, each responding to a different version. Let’s see how!

What is a Version?

In this context, a version can be one of three things:

- A floating-point number, which consists of a major and a minor version, such as 1.0, 2.5, 3.0, etc.
- A date, such as 2024-05-01
- A combination of both, such as 2024-05-01.1.0

A version can also have a status, which can be a freeform text that adds context to the version, and is usually one of “alpha”, “beta”, “pre”, etc. Versions are implemented by the ApiVersion class.

Throughout this post, for the sake of simplicity, I will always be referring to versions as floating point numbers.
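As a rough illustration of the three shapes described above, here is a minimal sketch that builds ApiVersion instances. It assumes the Asp.Versioning packages (version 6 or later) on a modern .NET target, where date-based versions are expressed with DateOnly; the variable names and the sample values are mine.

```csharp
using System;
using Asp.Versioning;

// Major and minor version only, as in 2.5
var numeric = new ApiVersion(2, 5);

// Date-based version, as in 2024-05-01
var dated = new ApiVersion(new DateOnly(2024, 5, 1));

// Date combined with a major and minor version, plus a status ("beta")
var combined = new ApiVersion(new DateOnly(2024, 5, 1), 1, 0, "beta");

// Versions can also be parsed from their text form
var parsed = ApiVersionParser.Default.Parse("1.0-alpha");

// Each part is exposed as a property
Console.WriteLine($"{numeric.MajorVersion}.{numeric.MinorVersion}");
Console.WriteLine(dated.GroupVersion);
Console.WriteLine(combined.Status);
Console.WriteLine(parsed.Status);
```

The date part is exposed through GroupVersion, while MajorVersion, MinorVersion and Status cover the rest.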

Setting Up

We will need to add the NuGet packages Asp.Versioning.Mvc, Asp.Versioning.Mvc.ApiExplorer, and Swashbuckle.AspNetCore (for Swagger support, more on this later) to our ASP.NET Core project. Then, in the Program class, we need to register a few things:

builder.Services.AddApiVersioning(options =>
{
    options.AssumeDefaultVersionWhenUnspecified = true;
    options.DefaultApiVersion = new ApiVersion(1, 0); //same as ApiVersion.Default
    options.ReportApiVersions = true;
    options.ApiVersionReader = ApiVersionReader.Combine(
        new UrlSegmentApiVersionReader(),
        new QueryStringApiVersionReader("api-version"),
        new HeaderApiVersionReader("X-Version"),
        new MediaTypeApiVersionReader("X-Version"));
})
.AddMvc(options => {})
.AddApiExplorer(options =>
{
    options.GroupNameFormat = "'v'VVV";
    options.SubstituteApiVersionInUrl = true;
});

Let’s start with AddApiVersioning. Here, we are telling it that the default API version (DefaultApiVersion) should be 1.0 and that it should be assumed when the request does not specify one (AssumeDefaultVersionWhenUnspecified). ASP.NET Core should also report all the API versions that we have (ReportApiVersions), something that you may consider turning off in production. So how does ASP.NET Core infer the version that we want? Well, this is the job of the ApiVersionReader. There are a number of possible strategies and, here, we are combining them all. They are:

- UrlSegmentApiVersionReader: extracts the API version from the URL path, for example, /api/v3/Product; this is one of the most common strategies
- QueryStringApiVersionReader: in this case, the version comes from a query string parameter, for example, ?api-version=3; good mainly for testing purposes, IMO
- HeaderApiVersionReader: headless clients can send the desired version as a request header, for example, X-Version: 2; also a must-have
- MediaTypeApiVersionReader: retrieves the version from a parameter of the Accept or Content-Type request header fields, as in Accept: application/json; X-Version=1.0; from my experience, it is rarely used, but, if you need more information, check out the guidelines

Of course, you can come up with your own clever way of extracting the version from the request: all you need is to roll out your own implementation of IApiVersionReader. Here, the choice of parameter names (api-version, X-Version) is obviously up to you, but I used the most common ones for each strategy. Usually, UrlSegmentApiVersionReader is what you want, together with the default version, for when you don’t specify a version on the URL.
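To make the four strategies more concrete from the client's point of view, here is a small sketch that builds one request per strategy using only the standard HttpClient types. The paths and version numbers are placeholders of mine, and the parameter names (api-version, X-Version) simply mirror the ones registered above; nothing is actually sent.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// URL segment: the version is part of the path
var byUrlSegment = new HttpRequestMessage(HttpMethod.Get, "api/v3/Product");

// Query string: the version travels in the api-version parameter
var byQueryString = new HttpRequestMessage(HttpMethod.Get, "api/Product?api-version=2.0");

// Request header: the version travels in the X-Version header
var byHeader = new HttpRequestMessage(HttpMethod.Get, "api/Product");
byHeader.Headers.Add("X-Version", "2.0");

// Media type: the version is a parameter of the Accept header
var byMediaType = new HttpRequestMessage(HttpMethod.Get, "api/Product");
var accept = new MediaTypeWithQualityHeaderValue("application/json");
accept.Parameters.Add(new NameValueHeaderValue("X-Version", "1.0"));
byMediaType.Headers.Accept.Add(accept);

// Print what each request looks like
foreach (var request in new[] { byUrlSegment, byQueryString, byHeader, byMediaType })
{
    Console.WriteLine($"{request.Method} {request.RequestUri}");
    Console.WriteLine(request.Headers.ToString());
}
```

To actually call the API, each request could be passed to SendAsync on an HttpClient whose BaseAddress points at wherever the application is hosted.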

Let’s now have a look at AddApiExplorer. Here, essentially, we are specifying how to name our versions (GroupNameFormat): in this case, starting with a v and consisting of the major version, optional minor version, and status (VVV). This is essentially for OpenAPI tools such as Swashbuckle, which we’ll talk about soon (see here for the full reference of the format string). We’re also telling it to substitute any {version} parameter it sees in route templates with the actual version (SubstituteApiVersionInUrl), for example, in api/v{version}/[controller].

Versioning Controller Actions

We have essentially three ways to version our actions:

- Using attributes
- Using code
- Using conventions

Using Attributes

Let’s first have a look at how to use attributes to version some controller actions. One decision that needs to be made is: should we have our different version action methods on the same controller or separate them? If we are to have them on the same controller, we can come up with something like this:

[ApiController]
[Route("api/[controller]")]
[Route("api/v{version:apiVersion}/[controller]")]
[ApiVersion("1.0")]
public class ProductController : ControllerBase
{
    [HttpGet]
    public Data Get()
    {
        //not important here
    }

    [HttpGet]
    [ApiVersion(2.0, Deprecated = true)]
    public ExtendedData GetV2()
    {
        //not important here
    }
}

In this particular example, we are declaring two route templates on our controller, api/[controller] and api/v{version:apiVersion}/[controller].

The first one just says: hey, if no version is specified and the Product controller is requested, here it is. The second one takes the version from the URL, as in /api/v2/Product. Because we are applying the [ApiVersion] attribute to the controller class, its Get method will default to the version specified in it, whereas GetV2 will honour the specific version attribute that is applied to it. It is worth noting that we can specify a version either as a string (“1.0”) or as a floating-point number (2.0). We are also marking GetV2 with the Deprecated flag, which is something that we can leverage later on to extract information from the version, but it does not affect in any way how the version is called, or whether it can be called. For completeness, if we apply the [Obsolete] attribute, we get exactly the same result, from a metadata point of view. The apiVersion constraint on {version} is worth keeping: it prevents us from entering something on the URL that doesn’t make sense as a version and, to my knowledge, the URL segment reader relies on it to locate the version in the path.

Yet another option would be to declare new versions on a new controller class, probably the most typical case:

[ApiController]
[ControllerName("Product")]
[Route("api/v{version:apiVersion}/[controller]")]
[ApiVersion("3.0")]
[ApiVersion("4.0")]
public class ProductVXController : ControllerBase
{
    [HttpGet]
    [MapToApiVersion("3.0")]
    public ExtendedData GetV3()
    {
        //not important here
    }

    [HttpGet]
    [MapToApiVersion("4.0")]
    public ExtendedData GetV4()
    {
        //not important here
    }
}

Notice that here we are skipping the default route template and only adding the one with the version. We applied a [ControllerName] attribute to the class because we want it to also be called Product, and not ProductVX. Also, each action method takes its own [MapToApiVersion] attribute; this is how ASP.NET Core knows which one to call when a version is specified. Just out of curiosity, you can also have multiple versions pointing to the same method:

[HttpGet]
[MapToApiVersion("3.0")]
[MapToApiVersion("4.0")]
public ExtendedData GetV34(ApiVersion version)
{
    //use version to detect the version that was requested
    if (version.MajorVersion == 3) { … }
    //not important here
}

Here, we are injecting a parameter of type ApiVersion, which is automatically supplied by ASP.NET Core, and it allows us to retrieve the currently-requested version (in this example, it could either be 3.0 or 4.0).

Finally, we can also declare a controller (or an action method) to be version-neutral by applying an [ApiVersionNeutral] attribute. Essentially, what it means is that the controller/action accepts any version, or no version at all. If you are using a version-neutral API, you can, however, have the API explorer still add the API version parameter to it (so that tools such as Swagger show it) by setting the AddApiVersionParametersWhenVersionNeutral option in the configuration:

.AddApiExplorer(options =>
{
    options.GroupNameFormat = "'v'VVV";
    options.SubstituteApiVersionInUrl = true;
    options.AddApiVersionParametersWhenVersionNeutral = true;
});

In my experience, I have never had to use version-neutral APIs, so I think it’s safe to ignore them.
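Still, for reference, a version-neutral controller might look like the sketch below. The HealthController name and its payload are hypothetical, picked only because a health check is a typical endpoint that does not change between versions.

```csharp
using Asp.Versioning;
using Microsoft.AspNetCore.Mvc;

[ApiController]
[Route("api/[controller]")]
[ApiVersionNeutral] // accepts any requested version, or none at all
public class HealthController : ControllerBase
{
    [HttpGet]
    public IActionResult Get()
    {
        // Same answer regardless of the version the client asked for
        return Ok(new { status = "healthy" });
    }
}
```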

Using Code

You can also use code to configure all of the aforementioned settings:

.AddMvc(options =>
{
    options.Conventions.Controller<ProductController>()
        .HasDeprecatedApiVersion(2.0)
        .HasApiVersion(1.0)
        .Action(c => c.GetV2()).MapToApiVersion(2.0)
        .Action(c => c.Get());

    options.Conventions.Controller<ProductVXController>()
        .HasApiVersion(3.0)
        .HasApiVersion(4.0)
        .Action(c => c.GetV3()).MapToApiVersion(3.0)
        .Action(c => c.GetV4()).MapToApiVersion(4.0);
})

As you can see, it’s very simple to understand, as the API is strongly typed.

Using Conventions

The last option we will explore for declaring API versions is conventions. Out of the box, ASP.NET Core versioning only offers one option: versioning by namespace name. This is implemented by the VersionByNamespaceConvention class: depending on the namespace where our API controller lives, it infers the controller’s version (nothing is known about individual action methods). So, for example (example adapted from the documentation here):

| Namespace | Version |
| --- | --- |
| Contoso.Api.Controllers | 1.0 (default) |
| Contoso.Api.v1_1.Controllers | 1.1 |
| Contoso.Api.v0_9_Beta.Controllers | 0.9-Beta |
| Contoso.Api.v20180401.Controllers | 2018-04-01 |
| Contoso.Api.v2018_04_01.Controllers | 2018-04-01 |
| Contoso.Api.v2018_04_01_Beta.Controllers | 2018-04-01-Beta |
| Contoso.Api.v2018_04_01_1_0_Beta.Controllers | 2018-04-01.1.0-Beta |

The way to register this convention is like this:

.AddMvc(options =>
{
    options.Conventions.Add(new VersionByNamespaceConvention());
})
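With that convention registered, a controller needs no version attribute at all. The sketch below reuses one of the namespaces from the table, so, going by that table, the controller would be treated as version 1.1; the controller body itself is just a placeholder of mine.

```csharp
using Microsoft.AspNetCore.Mvc;

// The v1_1 segment of the namespace is what the convention reads
namespace Contoso.Api.v1_1.Controllers
{
    [ApiController]
    [Route("api/v{version:apiVersion}/[controller]")]
    public class ProductController : ControllerBase
    {
        // No [ApiVersion] here: the version is inferred from the namespace
        [HttpGet]
        public string Get() => "Product, as served by the v1_1 namespace";
    }
}
```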

If you want, you can write your own convention by implementing IControllerConvention and registering it here. You would probably make assumptions from the namespace, the names of the action methods, and the like, and add attributes to the model. That is, however, outside the scope of this post.

Using Versioning

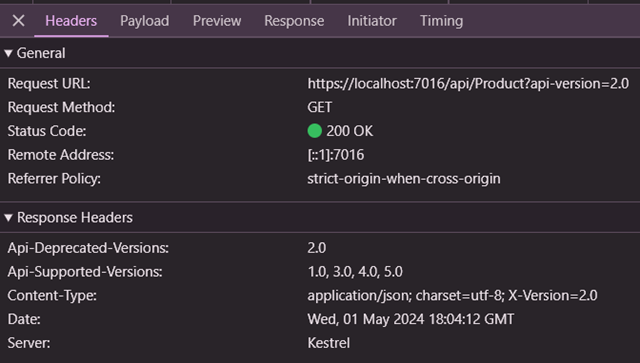

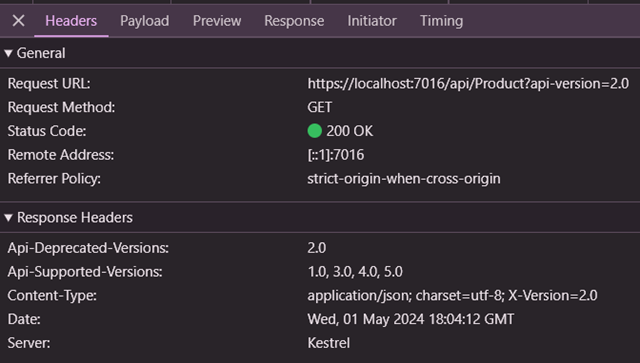

So, after everything is set up, we can call our versioned APIs and see that, depending on the version we request, different endpoints are reached. If we enabled the ReportApiVersions option, every API response also carries the list of available versions, together with the deprecated ones, in the Api-Supported-Versions and Api-Deprecated-Versions headers.

Again, this may be considered a security issue so you may want to disable it in production!
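One possible way of doing that, sketched here under the assumption that the application uses the standard ASP.NET Core environment names, is to tie the option to the hosting environment instead of hard-coding it:

```csharp
using Asp.Versioning;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();
builder.Services.AddApiVersioning(options =>
{
    options.DefaultApiVersion = new ApiVersion(1, 0);
    options.AssumeDefaultVersionWhenUnspecified = true;
    // Only advertise the supported and deprecated versions outside of production
    options.ReportApiVersions = !builder.Environment.IsProduction();
})
.AddMvc();

var app = builder.Build();
app.MapControllers();
app.Run();
```

This keeps the headers available while developing and testing, and drops them when the environment is Production.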

Using Swagger

Swagger is a very popular spec and framework for generating API documentation compliant with the OpenAPI standard. Swashbuckle is a .NET package that generates Swagger/OpenAPI interfaces.

In the Setting Up section, we already added the required package, Swashbuckle.AspNetCore; now we need to configure a few things. Add this to the Program class:

builder.Services.AddSwaggerGen();
builder.Services.ConfigureOptions<ConfigureSwaggerOptions>();

app.UseHttpsRedirection(); //should already be there
app.UseSwagger();

//rest goes here

app.MapDefaultControllerRoute(); //should already be there, or a similar line
app.UseSwaggerUI(options =>
{
    var provider = app.Services.GetRequiredService<IApiVersionDescriptionProvider>();
    foreach (var description in provider.ApiVersionDescriptions)
    {
        options.SwaggerEndpoint($"/swagger/{description.GroupName}/swagger.json", description.GroupName.ToUpperInvariant());
    }
});

We register the services and endpoints that Swagger requires, and also an options class, ConfigureSwaggerOptions. Here is the definition of the ConfigureSwaggerOptions class:

using System.Reflection;
using Asp.Versioning;
using Asp.Versioning.ApiExplorer;
using Microsoft.Extensions.Options;
using Microsoft.OpenApi.Models; //home of OpenApiInfo; may differ in newer OpenAPI package versions
using Swashbuckle.AspNetCore.SwaggerGen;

internal class ConfigureSwaggerOptions : IConfigureOptions<SwaggerGenOptions>
{
    private readonly IApiVersionDescriptionProvider _provider;
    private readonly IOptions<ApiVersioningOptions> _options;

    public ConfigureSwaggerOptions(IApiVersionDescriptionProvider provider, IOptions<ApiVersioningOptions> options)
    {
        _provider = provider;
        _options = options;
    }

    public void Configure(SwaggerGenOptions options)
    {
        //add the XML documentation once, if the file exists
        var xmlFile = $"{Assembly.GetExecutingAssembly().GetName().Name}.xml";
        var xmlPath = Path.Combine(AppContext.BaseDirectory, xmlFile);
        if (File.Exists(xmlPath))
        {
            options.IncludeXmlComments(xmlPath);
        }

        //register one Swagger document per API version
        foreach (var description in _provider.ApiVersionDescriptions)
        {
            options.SwaggerDoc(description.GroupName, CreateVersionInfo(description));
        }
    }

    private OpenApiInfo CreateVersionInfo(ApiVersionDescription description)
    {
        var info = new OpenApiInfo()
        {
            Title = $"API {description.GroupName}",
            Version = description.ApiVersion.ToString()
        };

        if (description.ApiVersion == _options.Value.DefaultApiVersion)
        {
            if (!string.IsNullOrWhiteSpace(info.Description))
            {
                info.Description += " ";
            }
            info.Description += "Default API version.";
        }

        if (description.IsDeprecated)
        {
            if (!string.IsNullOrWhiteSpace(info.Description))
            {
                info.Description += " ";
            }
            info.Description += "This API version is deprecated.";
        }

        return info;
    }
}

This IConfigureOptions<T> implementation is part of the Options Pattern; essentially, Swashbuckle uses the SwaggerGenOptions class to store its configuration and we use this class to modify it before it is actually used. What we do here is look at each API version and document it: we set the title of the API and the version, and we say whether it is deprecated or the default. We also add the XML documentation to the API, if it exists as an XML file. This is a bit outside the scope of this article, but you can output the code comments on your API to an XML file, which can then be used to document the REST API through Swagger.

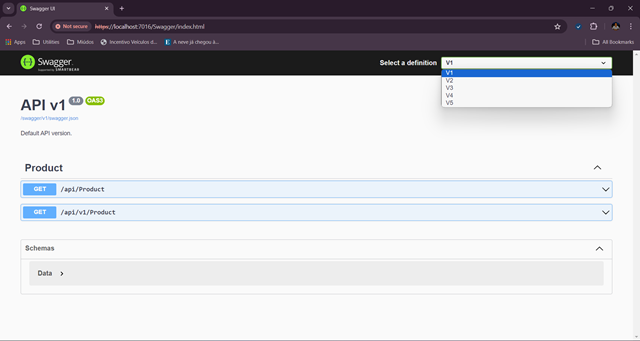

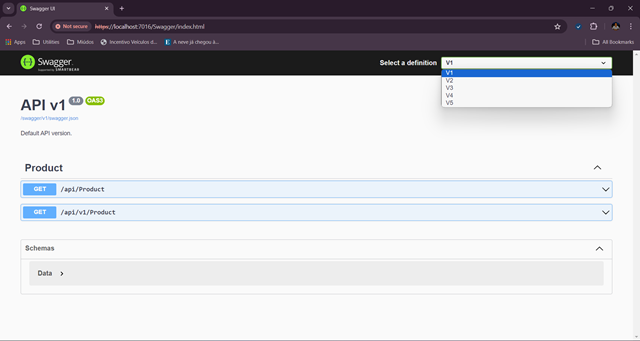

Once we do this, we can access the /swagger endpoint and see all the available API endpoints:

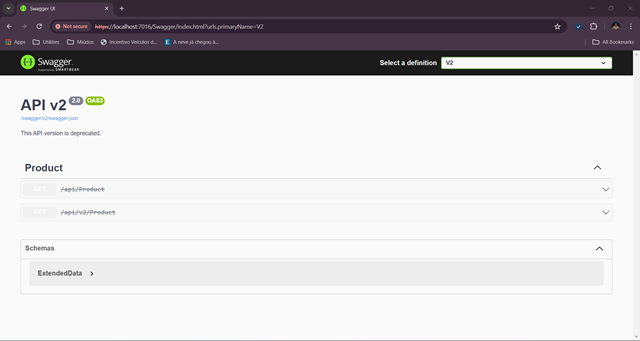

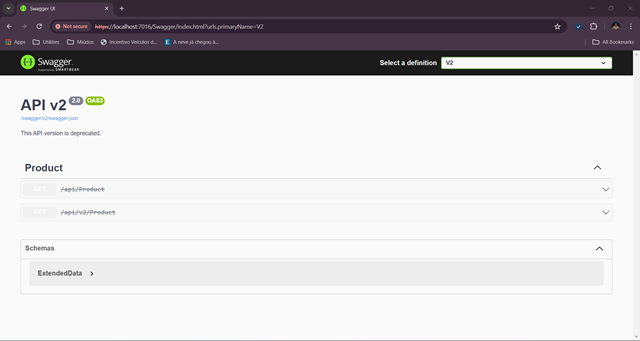

Notice on the top right the list of API versions that we can choose from. For example, if we pick V2:

Swashbuckle allows us to call an endpoint of a specific version without caring about the details of how the version is actually passed, which comes in very handy.

Conclusion

API versioning is a powerful tool that you should leverage to avoid compatibility issues between your APIs and your clients. It allows your systems to evolve while maintaining compatibility with legacy clients. As always, looking forward to your comments!

See https://github.com/dotnet/aspnet-api-versioning for examples and https://github.com/dotnet/aspnet-api-versioning/wiki for a discussion of these topics.