Three years ago, I published “Making GraphQL Work In WordPress,” where I compared the two leading GraphQL servers available for WordPress at the time: WPGraphQL and Gato GraphQL. In the article, I aimed to delineate the scenarios best suited for each.

Full disclosure: I created Gato GraphQL, originally known as GraphQL API for WordPress, as referenced in the article.

A lot of new developments have happened in this space since my article was published, and it’s a good time to consider what’s changed and how it impacts the way we work with GraphQL data in WordPress today.

This time, though, let’s focus less on when to choose one of the two available servers and more on the developments that have taken place and how both plugins and headless WordPress, in general, have been affected.

Headless Is The Future Of WordPress (And Shall Always Be)

There is no getting around it: Headless is the future of WordPress! At least, that is what we have been reading in posts and tutorials for the last eight or so years. Being Argentinian, this reminds me of an old joke that goes, “Brazil is the country of the future and shall always be!” The future is both imminent and far away.

Truth is, WordPress sites that actually make use of headless capabilities — via GraphQL or the WP REST API — represent no more than a small sliver of the overall WordPress market. WPEngine may have the most extensive research into headless usage in its “The State of Headless” report. Still, it’s already a few years old and focused more on both the general headless movement (not just WordPress) and the context of enterprise organizations. But the future of WordPress, according to the report, is written in the clouds:

“Headless is emphatically here, and with the rapid rise in enterprise adoption from 2019 (53%) to 2021 (64%), it’s likely to become the industry standard for large-scale organizations focused on building and maintaining a powerful, connected digital footprint. […] Because it’s already the most popular CMS in the world, used by many of the world’s largest sites, and because it’s highly compatible as a headless CMS, bringing flexibility, extensibility, and tons of features that content creators love, WordPress is a natural fit for headless configurations.”

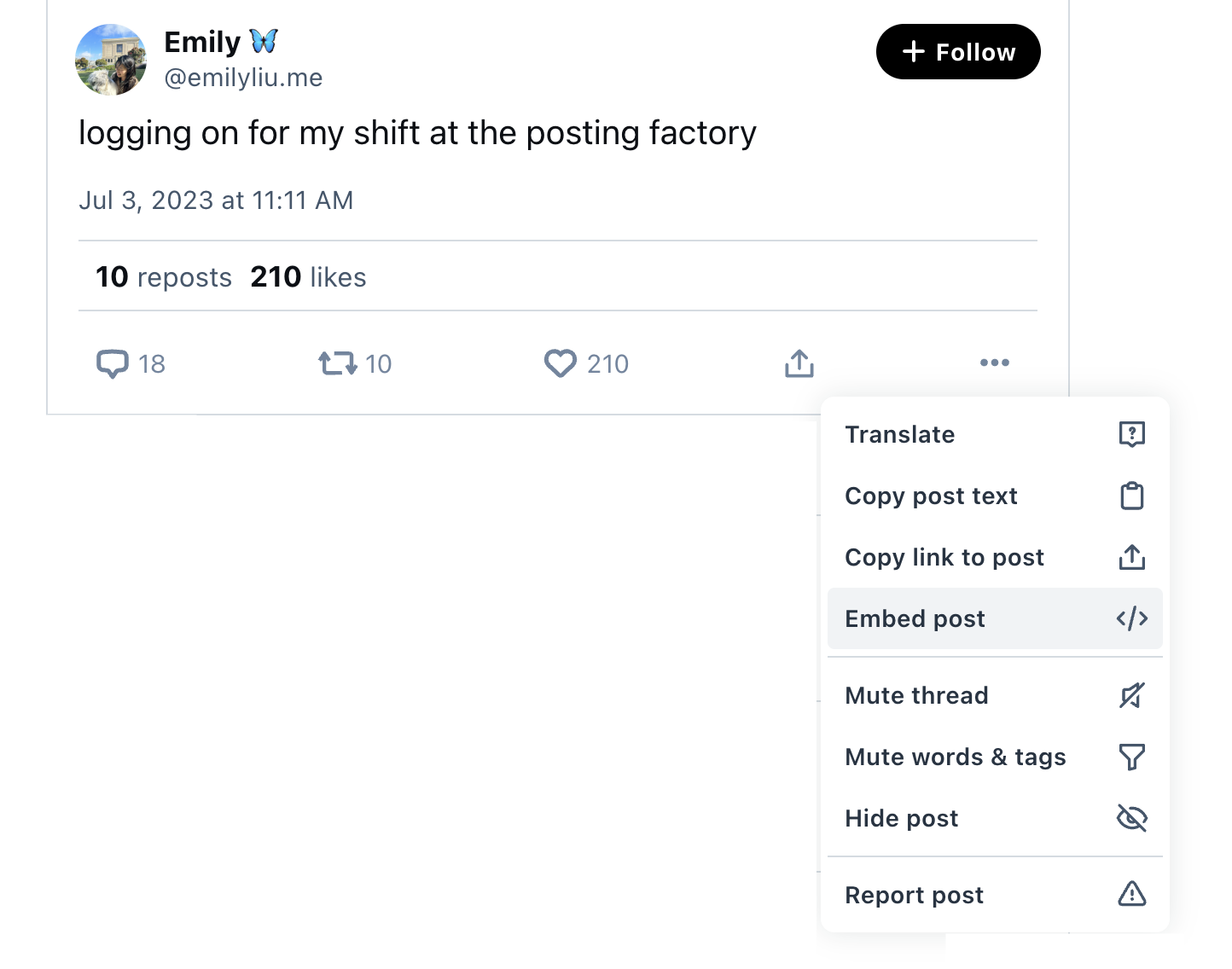

Just a year ago, a Reddit user informally polled people in r/WordPress, and while it’s far from scientific, the results are about as reliable as the conjecture before it:

Headless may very well be the future of WordPress, but the proof has yet to make its way into everyday developer stacks. It could very well be that general interest and curiosity are driving the future more than tangible work, as another of WPEngine’s articles from the same year as the aforementioned report suggests when identifying “Headless WordPress” as a hot search term. This could just as well be a lot more smoke than fire.

That’s why I believe that “headless” is not yet a true alternative to a traditional WordPress stack that relies on the WordPress front-end architecture. I see it more as another approach, or flavor, to building websites in general and a niche one at that.

That was all true merely three years ago and is still true today.

WPEngine “Owns” Headless WordPress

It’s no coincidence that we’re referencing WPEngine when discussing headless WordPress because the hosting company is heavily betting on it becoming the de facto approach to WordPress development.

Take, for instance, WPEngine’s launch of Faust.js, a headless framework with WPGraphQL as its foundation. Faust.js is an opinionated framework that allows developers to use WordPress as the back-end content management system and Next.js to render the front-end side of things. Among other features, Faust.js replicates the WordPress template system for Next.js, making the configuration to render posts and pages from WordPress data a lot easier out of the box.

WPEngine is well-suited for this task, as it can offer hosting for both Node.js and WordPress as a single solution via its Atlas platform. WPEngine also bought the popular Advanced Custom Fields (ACF) plugin that helps define relationships among entities in the WordPress data model. Add to that the fact that WPEngine has taken over the Headless WordPress Discord server, with discussions centered around WPGraphQL, Faust, Atlas, and ACF. It could very well be named the WPEngine-Powered Headless WordPress server instead.

But WPEngine’s agenda and dominance in the space is not the point; it’s more that they have a lot of skin in the game as far as anticipating a headless WordPress future. Even more so now than three years ago.

GraphQL API for WordPress → Gato GraphQL

I created a plugin several years ago called GraphQL API for WordPress to help support headless WordPress development. It converts data pulled from the WordPress REST API into structured GraphQL data for more efficient and flexible queries based on the content managed and stored in WordPress.

More recently, I released a significantly updated version of the plugin, so updated that I chose to rename it to Gato GraphQL, and it is now freely available in the WordPress Plugin Directory. It’s a freemium offering like many WordPress plugin pricing models. The free, open-source version in the plugin directory provides the GraphQL server, maps the WordPress data model into the GraphQL schema, and provides several useful features, including custom endpoints and persisted queries. The paid commercial add-on extends the plugin by supporting multiple query executions, automation, and an HTTP client to interact with external services, among other advanced features.

I know this sounds a lot like a product pitch, but stick with me because there’s a point to the decision I made to revamp my existing GraphQL plugin and introduce a slew of premium services as features. It fits with my belief that WordPress is becoming more and more open to giving WordPress developers and site owners a lot more room for innovation to work collaboratively and manage content in new and exciting ways, both in and out of WordPress.

JavaScript Frameworks & Headless WordPress

Gatsby was perhaps the most popular and leading JavaScript framework for creating headless WordPress sites at the time my first article was published in 2021. These days, though, Gatsby is in steep decline and its integration with WordPress is no longer maintained.

Next.js was also a leader back then and is still very popular today. The framework includes several starter templates designed specifically for headless WordPress instances.

SvelteKit and Nuxt are surging these days and are considered good choices for establishing headless WordPress, as was discussed during WordCamp Asia 2024.

Today, in 2024, we continue to see new JavaScript framework entrants in the space, notably Astro. Despite Gatsby’s recent troubles, the landscape of using JavaScript frameworks to create front-end experiences from the WordPress back-end is largely the same as it was a few years ago, if maybe a little easier, thanks to the availability of new templates that are integrated right out of the box.

GraphQL Transcends Headless WordPress

The biggest difference between the WPGraphQL and Gato GraphQL plugins is that, where WPGraphQL is designed to convert REST API data into GraphQL data in a single direction, Gato GraphQL uses GraphQL data in both directions in a way that can be used to manage non-headless WordPress sites as well. I say this not as a way to get you to use my plugin but to help describe how GraphQL has evolved to the point where it is useful for more cases than headless WordPress sites.

Managing a WordPress site via GraphQL is possible because GraphQL is an agnostic tool for interacting with data, whatever that interaction may be. GraphQL can fetch data from the server, modify it, store it back on the server, and invoke external services. These interactions can all be coded within a single query.
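To make “a single query” a little more concrete, here is a minimal TypeScript sketch of the client side of that exchange: building one request payload and unpacking the response. The `posts`/`nodes` field names are modeled on WPGraphQL’s schema and, like the function names, are assumptions made for illustration rather than something either plugin prescribes.

```typescript
// Shape of a GraphQL request body: a query string plus its variables.
interface GraphQLRequest {
  query: string;
  variables: Record<string, unknown>;
}

// Query modeled on WPGraphQL's `posts` connection (field names are assumptions).
const LATEST_POSTS_QUERY = `
  query LatestPosts($first: Int!) {
    posts(first: $first) {
      nodes {
        id
        title
      }
    }
  }
`;

// Build the payload that asks for the latest `count` posts.
function buildLatestPostsRequest(count: number): GraphQLRequest {
  if (Math.floor(count) !== count || count < 1) {
    throw new RangeError("count must be a positive integer");
  }
  return { query: LATEST_POSTS_QUERY, variables: { first: count } };
}

// Unpack a raw GraphQL response: return `data`, or throw if `errors` is present.
function extractData(rawResponse: string): unknown {
  const parsed = JSON.parse(rawResponse) as {
    data?: unknown;
    errors?: { message: string }[];
  };
  if (parsed.errors && parsed.errors.length > 0) {
    throw new Error(parsed.errors.map((e) => e.message).join("; "));
  }
  return parsed.data;
}
```

The object returned by `buildLatestPostsRequest` can be serialized with `JSON.stringify` and sent as a POST body to the site’s GraphQL endpoint with whatever HTTP client the project already uses. The response text then goes through `extractData`.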

GraphQL can then be used to regex search and replace a string in all posts, which is practical when doing site migrations. We can also import a post from another WordPress site or even from an RSS feed or CSV source.
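As a rough sketch of the search-and-replace case, the TypeScript below takes posts that have already been fetched, applies a regular expression to each one, and prepares one mutation payload per post that actually changed. The mutation is modeled on WPGraphQL’s `updatePost`; its exact field names, along with `planReplacements` and `toUpdateRequests`, are assumptions for illustration and not either plugin’s documented workflow.

```typescript
// Minimal post shape for this sketch; a real schema exposes many more fields.
interface PostContent {
  id: string;
  content: string;
}

// Mutation modeled on WPGraphQL's `updatePost` (treat the names as assumptions).
const UPDATE_POST_MUTATION = `
  mutation UpdatePostContent($id: ID!, $content: String!) {
    updatePost(input: { id: $id, content: $content }) {
      post {
        id
      }
    }
  }
`;

// Apply the regex to every post and return only the posts whose content changed.
// Pass a pattern with the `g` flag to replace every occurrence, not just the first.
function planReplacements(
  posts: PostContent[],
  pattern: RegExp,
  replacement: string
): PostContent[] {
  const changed: PostContent[] = [];
  for (const post of posts) {
    const updated = post.content.replace(pattern, replacement);
    if (updated !== post.content) {
      changed.push({ id: post.id, content: updated });
    }
  }
  return changed;
}

// Turn each planned change into a request payload for the mutation above.
function toUpdateRequests(
  changes: PostContent[]
): { query: string; variables: PostContent }[] {
  return changes.map((change) => ({
    query: UPDATE_POST_MUTATION,
    variables: change,
  }));
}
```

During a domain migration, for example, `planReplacements(posts, /http:\/\/old\.example\.com/g, "https://new.example.com")` would list only the posts that still point at the old domain, so untouched posts are never written back.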

And thanks to the likes of WordPress hooks and WP-Cron, executing a GraphQL query can be an automated task. For instance, whenever the publish_post hook is triggered — i.e., a new post on the site is published — we can execute certain actions, like an email notification to the site admin, or generate a featured image with AI if the post lacks one.

In short, GraphQL works both ways and opens up new possibilities for better developer and author experiences!

GraphQL Becomes A “Core” Feature In WordPress 6.5

I have gone on record saying that GraphQL should not be a core part of WordPress. There’s a lot of reasoning behind my opinion, but what it boils down to is that the WP REST API is perfectly capable of satisfying our needs for passing data around, and adding GraphQL to the mix could be a security risk in some conditions.

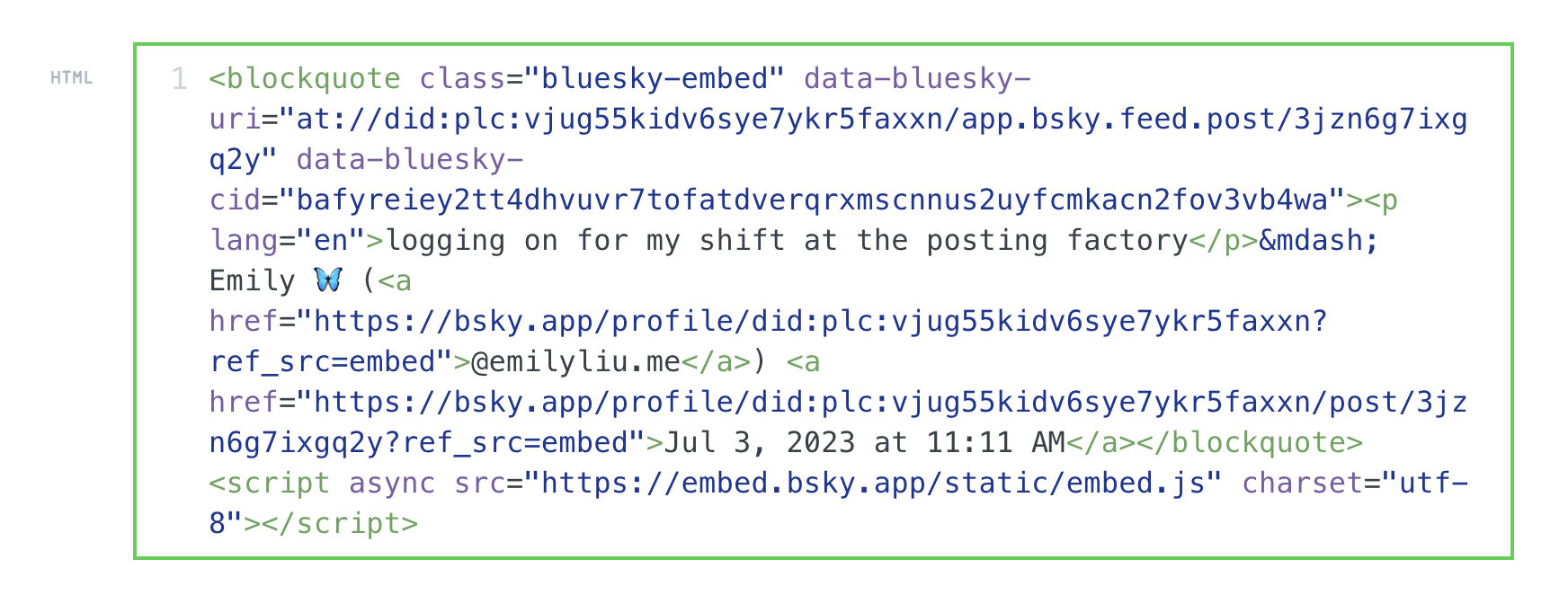

My concerns aside, GraphQL officially became a first-class citizen of WordPress when it was baked into WordPress 6.5 with the introduction of Plugin Dependencies, a feature that allows plugins to identify other plugins as dependencies. We see this in the form of a new “Requires Plugins” comment in a plugin’s header:

```php
/**
 * Plugin Name: My Ecommerce Payments for Gato GraphQL
 * Requires Plugins: gatographql
 */
```

WordPress sees which plugins are needed for the current plugin to function properly and installs everything together at the same time, assuming that the dependencies are readily available in the WordPress Plugin Directory.
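The header value is a comma-separated list of WordPress.org plugin slugs. To make the format concrete, the TypeScript sketch below pulls those slugs out of a plugin file’s header and reports which ones are missing from a list of active plugins. This is not how WordPress itself implements the feature; `parseRequiredPlugins` and `missingDependencies` are hypothetical helpers written only for this example.

```typescript
// Extract dependency slugs from the "Requires Plugins" line of a plugin header.
// Illustrative only: WordPress's own parsing is done in PHP and is more thorough.
function parseRequiredPlugins(pluginFileContents: string): string[] {
  const match = /^[ \t\/*#@]*Requires Plugins:(.*)$/im.exec(pluginFileContents);
  if (!match) {
    return [];
  }
  const slugs: string[] = [];
  for (const part of (match[1] || "").split(",")) {
    const slug = part.trim().toLowerCase();
    // Skip empty entries and duplicates.
    if (slug !== "" && slugs.indexOf(slug) === -1) {
      slugs.push(slug);
    }
  }
  return slugs;
}

// Report which required plugins are not among the currently active ones.
function missingDependencies(required: string[], active: string[]): string[] {
  return required.filter((slug) => active.indexOf(slug) === -1);
}
```

Given the header shown earlier, `parseRequiredPlugins` would return a single slug, `gatographql`, and `missingDependencies` would flag it until that plugin is installed and active.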

So, check this out. Since WPGraphQL and Gato GraphQL are both in the plugin directory, we can now create another plugin that uses GraphQL internally and distribute it via the plugin directory, or anywhere else, without having to explain how to install the GraphQL server it depends on. For instance, we can now use GraphQL to fetch data to render the plugin’s blocks.

In other words, plugins are now capable of more symbiotic relationships that open even more possibilities! Beyond that, every plugin in the WordPress Plugin Directory is now technically part of WordPress Core, including WPGraphQL and Gato GraphQL. So, yes, GraphQL is now technically a “core” feature that can be leveraged by other developers.

Helping WordPress Lead The CMS Market, Again

While delivering the keynote presentation at WordCamp Asia 2024, Human Made co-founder Noel Tock discussed the future of WordPress. He argued that WordPress growth has stagnated in recent years because a plethora of modern web services can now interact with one another, resulting in composable content management systems tailored to certain developers in a way that WordPress simply isn’t.

Tock went on to explain how WordPress can once again become a growth engine by cleaning up the WordPress plugin ecosystem and providing first-class integrations with external services.

Do you see where I am going with this? GraphQL could play an instrumental role in WordPress’s future success. It very well could be the link between WordPress and all the different services it interacts with, positioning WordPress at the center of the web. The recent Plugin Dependencies feature we noted earlier is a peek at what WordPress could look like as it adopts more composable approaches to content management that support its position as a market leader.

Conclusion

“Headless” WordPress is still “the future” of WordPress. But as we’ve discussed, developers have shown deep interest in headless architectures, with WordPress playing a purely back-end role, yet there has been very little actual movement toward that future.

There are new and solid frameworks that rely on GraphQL for querying data, and those won’t go away anytime soon. And those frameworks are the ones that rely on existing WordPress plugins that consume data from the WordPress REST API and convert it to structured GraphQL data.

Meanwhile, WordPress is making strides toward greater innovation as plugin developers are now able to leverage other plugins as dependencies for their plugins. Every plugin listed in the WordPress Plugin Directory is essentially a feature of WordPress Core, including WPGraphQL and Gato GraphQL. That means GraphQL is readily available for any plugin developer to tap into as of WordPress 6.5.

GraphQL can be used not only for headless but also to manage the WordPress site. Whenever data must be transformed, whether locally or by invoking an external service, GraphQL can be the tool to do it. That even means that data transforms can be triggered automatically to open up new and interesting ways to manage content, both inside and outside of WordPress. It works both ways!

So, yes, even though headless is the future of WordPress (and shall always be), GraphQL could indeed be a key component in making WordPress once again an innovative force that shapes the future of CMS.