In the promising and rapidly evolving field of genetic analysis, the ability to accurately interpret whole genome sequencing data is crucial for diagnosing and improving outcomes for people with rare genetic diseases. Yet despite technological advancements, genetic professionals face steep challenges in managing and synthesizing the vast amounts of data required for these analyses. Fewer than 50% of initial cases yield a diagnosis, and while reanalysis can lead to new findings, the process remains time-consuming and complex.

To better understand and address these challenges, Microsoft Research, in collaboration with Drexel University and the Broad Institute, conducted a comprehensive study titled AI-Enhanced Sensemaking: Exploring the Design of a Generative AI-Based Assistant to Support Genetic Professionals. The study was recently published in a special edition of the ACM Transactions on Interactive Intelligent Systems journal focused on generative AI.

The study focused on integrating generative AI to support the complex, time-intensive, and information-dense sensemaking tasks inherent in whole genome sequencing analysis. Through detailed empirical research and collaborative design sessions with experts in the field, we identified key obstacles genetic professionals face and proposed AI-driven solutions to enhance their workflows. We developed strategies for how generative AI can help synthesize biomedical data, enabling AI-expert collaboration to increase the diagnoses of previously unsolved rare diseases—ultimately aiming to improve patients’ quality of life and life expectancy.

Whole genome sequencing in rare disease diagnosis

Rare diseases affect up to half a billion people globally, and obtaining a diagnosis can take multiple years. These diagnoses often involve specialist consultations, laboratory tests, imaging studies, and invasive procedures. Whole genome sequencing is used to identify genetic variants responsible for these diseases by comparing a patient’s DNA sequence to reference genomes. Genetic professionals use bioinformatics tools such as seqr, an open-source, web-based tool for rare disease case analysis and project management, to help filter and prioritize more than 1 million variants and determine their potential role in disease. A critical component of their work is sensemaking: the process of searching, filtering, and synthesizing data to build, refine, and present models from complex sets of gene and variant information.

The multi-step sequencing process typically takes three to 12 weeks and requires extensive evidence and time to synthesize and aggregate the information needed to understand how a gene and its variants affect the patient. If a patient’s case goes unsolved, their whole genome sequencing data is set aside until enough time has passed to warrant a reanalysis. This creates a backlog of patient cases. The ability to easily identify when new scientific evidence emerges and when to reanalyze an unsolved patient case is key to shortening the time patients live with an undiagnosed rare disease.

The promise of AI systems to assist with complex human tasks

Approximately 87% of AI systems never reach deployment simply because they solve the wrong problems. Understanding the AI support desired by different types of professionals, their current workflows, and AI capabilities is critical to successful AI system deployment and use. Matching technology capabilities with user tasks is particularly challenging in AI design because AI models can generate numerous outputs, and their capabilities can be unclear. To design an effective AI-based system, one needs to identify tasks AI can support, determine the appropriate level of AI involvement, and design user-AI interactions. This necessitates considering how humans interact with technology and how AI can best be incorporated into workflows and tools.

Study objectives and co-designing a genetic AI assistant

Our study aimed to understand the current challenges and needs of genetic professionals performing whole genome sequencing analyses and explore the tasks where they want an AI assistant to support them in their work. The first phase of our study involved interviews with 17 genetic professionals to better understand their workflows, tools, and challenges. They included genetic analysts directly involved in interpreting data, as well as other roles participating in whole genome sequencing. In the second phase of our study, we conducted co-design sessions with study participants on how an AI assistant could support their workflows. We then developed a prototype of an AI assistant, which was further tested and refined with study participants in follow-up design walk-through sessions.

Identifying challenges in whole genome sequencing analysis

Through our in-depth interviews with genetic professionals, our study uncovered three critical challenges in whole genome sequencing analysis:

- Information Overload: Genetic analysts need to gather and synthesize vast amounts of data from multiple sources. This task is incredibly time-consuming and prone to human error.

- Collaborative Sharing: Sharing findings with others in the field can be cumbersome and inefficient, often relying on outdated methods that slow the collaborative analysis process.

- Prioritizing Reanalysis: Given the continuous influx of new scientific discoveries, prioritizing unsolved cases to reanalyze is a daunting challenge. Analysts need a systematic approach to identify cases that might benefit most from reanalysis.

Genetic professionals highlighted the time-consuming nature of gathering and synthesizing information about genes and variants from different data sources. Other genetic professionals may have insights into certain genes and variants, but sharing and interpreting information with others for collaborative sensemaking requires significant time and effort. Although new scientific findings could affect unsolved cases through reanalysis, prioritizing cases based on new findings was challenging given the number of unsolved cases and limited time of genetic professionals.

Co-designing with experts and AI-human sensemaking tasks

Our study participants prioritized two potential tasks of an AI assistant. The first task was flagging cases for reanalysis based on new scientific findings. The assistant would alert analysts to unsolved cases that could benefit from new research, providing relevant updates drawn from recent publications. The second task focused on aggregating and synthesizing information about genes and variants from the scientific literature. This feature would compile essential information from numerous scientific papers about genes and variants, presenting it in a user-friendly format and saving analysts significant time and effort. Participants emphasized the need to balance selectivity with comprehensiveness in the evidence they review. They also envisioned collaborating with other genetic professionals to interpret, edit, and verify artifacts generated by the AI assistant.
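
As a rough illustration of the first task, the sketch below ranks unsolved cases by how many of their candidate genes appear in newly published findings. It is not the prototype from the study: the function name, the data shapes, and the placeholder gene and publication identifiers are all assumptions made for this example.

```python
def flag_cases_for_reanalysis(unsolved_cases, new_findings):
    """Rank unsolved cases by overlap between candidate genes and new findings.

    unsolved_cases: dict of case id -> set of candidate gene symbols (hypothetical shape)
    new_findings:   dict of gene symbol -> list of publication identifiers (hypothetical shape)
    """
    flagged = []
    for case_id, genes in unsolved_cases.items():
        # Keep only the genes that have new evidence, with the supporting publications.
        matches = {g: new_findings[g] for g in genes if g in new_findings}
        if matches:
            flagged.append((case_id, matches))
    # Most overlapping genes first; ties broken by case id for stable output.
    flagged.sort(key=lambda item: (-len(item[1]), item[0]))
    return flagged


if __name__ == "__main__":
    # Placeholder identifiers only; no real genes or papers are implied.
    cases = {"case-1": {"GENE_A", "GENE_B"}, "case-2": {"GENE_C"}}
    findings = {"GENE_A": ["pub-1"], "GENE_B": ["pub-2"]}
    for case_id, evidence in flag_cases_for_reanalysis(cases, findings):
        print(case_id, sorted(evidence))
```

A real assistant would need far richer signals than gene-symbol overlap, and participants wanted to review and verify anything it surfaced, so the output here is best read as a shortlist for an analyst rather than a decision.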

Genetic professionals require both broad and focused evidence at different stages of their workflow. The AI assistant prototypes were designed to allow flexible filtering and thorough evidence aggregation, ensuring users can delve into comprehensive data or selectively focus on pertinent details. The prototypes included features for collaborative sensemaking, enabling users to interpret, edit, and verify AI-generated information collectively. This approach not only underscores the trustworthiness of AI outputs, but also facilitates shared understanding and decision-making among genetic professionals.

Design implications for expert-AI sensemaking

In the shifting frontiers of genome sequence analysis, leveraging generative AI to enhance sensemaking offers intriguing possibilities. The task of staying current, synthesizing information from diverse sources, and making informed decisions is challenging.

Our study participants emphasized the hurdles in integrating data from multiple sources without losing critical components, documenting decision rationales, and fostering collaborative environments. Generative AI models, with their advanced capabilities, have started to address these challenges by automatically generating interactive artifacts to support sensemaking. However, the effectiveness of such systems hinges on careful design considerations, particularly in how they facilitate distributed sensemaking, support both initial and ongoing sensemaking, and combine evidence from multiple modalities. We next discuss three design considerations for using generative AI models to support sensemaking.

Distributed expert-AI sensemaking design

Generative AI models can create artifacts that aid an individual user’s sensemaking process; however, the true potential lies in sharing these artifacts among users to foster collective understanding and efficiency. Participants in our study emphasized the importance of explainability, feedback, and trust when interacting with AI-generated content. Trust is gained by viewing portions of artifacts marked as correct by other users, or observing edits made to AI-generated information. Some users, however, cautioned against over-reliance on AI, which could obscure underlying inaccuracies. Thus, design strategies should ensure that any corrections are clearly marked and annotated. Furthermore, to enhance distributed sensemaking, visibility of others’ notes and context-specific synthesis through AI can streamline the process.

Initial expert-AI sensemaking and re-sensemaking design

In our fast-paced, information-driven world, it is essential to understand a situation both initially and again when new information arises. Sensemaking is inherently temporal, reflecting and shaping our understanding of time as we revisit tasks to reevaluate past decisions or incorporate new information. Generative AI plays a pivotal role here by transforming static data into dynamic artifacts that evolve, offering a comprehensive view of past rationales. Such AI-generated artifacts provide continuity, allowing users, whether original decision-makers or new individuals, to access the rationale behind decisions made in earlier task instances. By continuously editing and updating these artifacts, generative AI highlights new information since the last review, supporting ongoing understanding and decision-making. Moreover, AI systems enhance transparency by summarizing previous notes and questions, offering insights into earlier thought processes and facilitating a deeper understanding of how conclusions were drawn. This reflective capability can not only reinforce initial sensemaking efforts but also equip users with the clarity needed for informed re-sensemaking as new data emerges.

Combining evidence from multiple modalities to enhance AI-expert sensemaking

The ability to combine evidence from multiple modalities is essential for effective sensemaking. Users often need to integrate diverse types of data (text, images, spatial coordinates, and more) into a coherent narrative to make informed decisions. Consider the case of search and rescue operations, where workers must rapidly synthesize information from texts, photographs, and GPS data to strategize their efforts. Recent advancements in multimodal generative AI models have empowered users by incorporating and synthesizing these varied inputs into a unified, comprehensive view. For instance, a participant in our study illustrated this capability by using a generative AI model to merge text from scientific publications with a visual gene structure depiction. This integration could create an image that places an individual’s genetic variant in the context of documented variants. Such advanced synthesis enables users to capture complex relationships and insights at a glance, streamlining decision-making and expanding the potential for innovative solutions across diverse fields.

Figure: Sensemaking process with AI assistant

Conclusion

We explored the potential of generative AI to support genetic professionals in diagnosing rare diseases. By designing an AI-based assistant, we aim to streamline whole genome sequencing analysis, helping professionals diagnose rare genetic diseases more efficiently. Our study unfolded in two key phases: pinpointing existing challenges in analysis, and design ideation, where we crafted a prototype AI assistant. This tool is designed to boost diagnostic yield and cut down diagnosis time by flagging cases for reanalysis and synthesizing crucial gene and variant data. Despite valuable findings, more research is needed. Future research will involve testing the AI assistant in real-time, task-based user testing with genetic professionals to assess the AI’s impact on their workflow. The promise of AI advancements lies in solving the right user problems and building the appropriate solutions, achieved through collaboration among model developers, domain experts, system designers, and HCI researchers. By fostering these collaborations, we aim to develop robust, personalized AI assistants tailored to specific domains.

Join the conversation

Join us as we continue to explore the transformative potential of generative AI in genetic analysis, and please read the full-text publication. Follow us on social media, share this post with your network, and let us know your thoughts on how AI can transform genetic research. If you are interested in our related research, check out Evidence Aggregator: AI reasoning applied to rare disease diagnosis.

The post Using AI to assist in rare disease diagnosis appeared first on Microsoft Research.

Compose Multiplatform for web, powered by Wasm, is now in Beta! This major milestone shows that Compose Multiplatform for web is no longer just experimental, but ready for real-world use by early adopters.

This is more than a technical step forward. It’s a community achievement, made possible by feedback from early adopters of the Alpha version, the demos they built, and the contributions of open-source projects.

With the Beta release, you can now confidently bring your existing Compose skills and coding patterns to the web with minimal effort, creating new apps or extending ones from mobile and desktop.

Beyond Compose Multiplatform for web going Beta, the Compose Multiplatform 1.9.0 release also brings Android, iOS, and desktop improvements, showing that Compose Multiplatform is maturing into a truly unified UI framework.

Bringing your Compose code and skills to the web

With Compose Multiplatform, you can share most of your UI code and rely on the same Compose skills you already have from working on Android when building for the web – no need to learn a new UI toolkit. Out of the box, you get:

- Material 3 components for design fidelity, so your UI looks polished and modern.

- Adaptive layouts that resize seamlessly between different devices and screen sizes, with animations that make transitions feel smooth and natural.

- Browser navigation integration with forward and back buttons, deep links, and history.

- Support for system and browser preferences, like dark mode.

You can easily bring your Compose experience to the browser and start building web apps quickly.

Everything you need to build modern web apps

Compose Multiplatform for web now includes everything you need to build beautiful, reliable UIs for real-world apps in the browser:

- Core APIs that work on the web, available in common code.

- Interoperability with HTML for mixing Compose UI and native web elements.

- Type-safe navigation with deep linking.

- Fundamental accessibility support for assistive technologies.

- Cross-browser compatibility, including fallback for older browsers.

Compose Multiplatform 1.9.0 ensures the core API surface implementation works on the web and introduces targeted improvements to accessibility and navigation, alongside a wide range of bug fixes and developer experience enhancements (see the 1.9.0 What’s New page for details). With this Beta release of Compose Multiplatform for web, major APIs are stable enough for you to adopt them confidently, with minimal breaking changes expected in the future.

The foundation provided by this release is supported by a growing ecosystem of multiplatform libraries extending to the web. Many popular Kotlin libraries for networking, serialization, coroutines, and dependency injection already work across web platforms, and many community projects have already added support for Wasm. You can explore these in the official Kotlin Multiplatform catalog at klibs.io, where more and more libraries are being marked as web-ready all the time.

Development tools for better productivity

For developing web applications with Compose Multiplatform, you can use IntelliJ IDEA or Android Studio. You’ll get the most benefit with the new Kotlin Multiplatform plugin installed.

In addition to macOS, the Kotlin Multiplatform plugin for IntelliJ IDEA is now available on Linux and Windows, providing the full set of features for web, Android, and desktop development. The only exceptions are iOS and macOS targets, which require Apple hardware due to system limitations.

With the Kotlin Multiplatform plugin, you can:

- Create new projects with a web target, with shared or non-shared UIs, using the integrated project wizard.

- Run your apps in the browser right from the IDE, thanks to automatically created run configurations.

- Use gutter icons for web entry points to launch and debug your Compose apps instantly.

In addition, you can:

- Debug in the browser with custom formatters for a smoother experience in DevTools.

- Debug directly in IntelliJ IDEA Ultimate (2025.3 or later) with the JavaScript Debugger plugin. Note that currently only an EAP version of 2025.3 is available.

Together, these features make it easy to go from project setup to running and debugging your app – all without leaving the IDE.

Compose Multiplatform for web in action

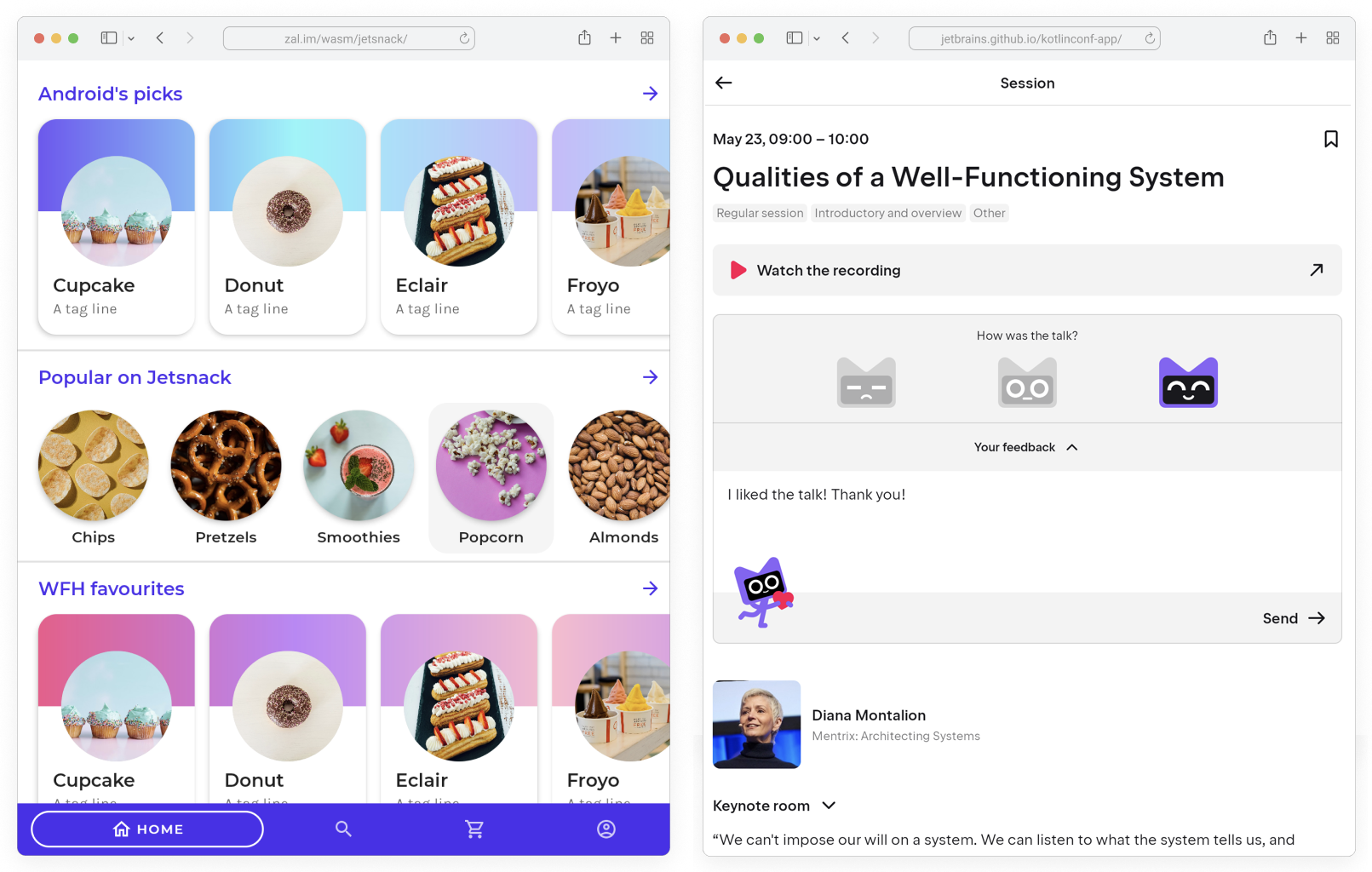

The Kotlin Playground and the KotlinConf app are powerful examples of Compose Multiplatform for web in action, showing how you can bring Compose UIs directly into the browser.

Other demos worth exploring include:

- Rijksmuseum Demo – Museum collections in a rich, interactive UI.

- Jetsnack Wasm Demo – A playful sample e-commerce experience.

- Compose Material 3 Gallery – A showcase of Material 3 components built with Compose.

- Storytale – A storybook-style gallery for exploring, previewing, and sharing UI components.

These projects highlight the flexibility of Compose Multiplatform for web in use cases ranging from interactive prototypes to production-quality apps.

Get started with Compose Multiplatform for web

The easiest way to try Compose Multiplatform for web is with the Kotlin Playground. It doesn’t require any installation or setup. Just open your browser and start writing UI code.

If you’d like to use Compose Multiplatform for web in your IDE, simply follow our step-by-step guide.

Looking for ideas about what to build?

- Quickly build demos and prototypes – Spin up interactive proof-of-concepts, internal tools, or small experiments. You can leverage your existing Compose skills for fast results, which makes this perfect for testing ideas with your team.

- Create UI component galleries – Build living UI libraries in a storybook-style format, showcase reusable components, preview design systems, or share prototypes directly in the browser with no extra setup.

Influence the future of Compose Multiplatform for web

Compose Multiplatform for web is now in Beta. We see this release as the foundation for broader adoption, and with your feedback we’ll keep improving it on the path to a stable release.

Tell us what works well, what needs refinement, and what you’d like to see next. We’ll work alongside the community to polish features, fix bugs, and make Compose Multiplatform for web more reliable and enjoyable to use.

Share your thoughts in the #compose-web and #compose channels of our Kotlin Slack workspace and help shape the future of multiplatform development.

Compose Multiplatform beyond the web

Compose Multiplatform 1.9.0 also brings improvements for iOS, desktop, and common code.

iOS

You now have more control over performance and output. You can configure the frame rate to better balance smoothness and battery life, and you can customize text input behavior, which makes Compose apps feel more natural on iOS.

Desktop

Desktop apps gain new window management features, including the ability to configure windows before they appear, making it easier to manage multiple windows or custom layouts.

All platforms

For every target, the design and preview experience is becoming more powerful. Variation testing has been simplified thanks to more configurable previews, while deeper shadow customization gives you finer control over UI depth and style.

See the full list of Compose Multiplatform 1.9.0 updates on our What’s new page.

Read more

- Compose Multiplatform 1.9.0 – release notes on GitHub

- What’s new in Compose Multiplatform 1.9.0 – detailed release notes on the documentation portal

- Get started with Compose Multiplatform – a tutorial

- Compose Multiplatform and Jetpack Compose

We built a telemetry pipeline that handles more than 5,400 data points per second with sub-10 millisecond query responses. The techniques we discovered while processing flight simulator data at 60FPS (frames per second) apply to any high-frequency telemetry system, from Internet of Things (IoT) sensors to application monitoring.

Here’s how we got our queries from 30 seconds down to sub-10ms, and why each technique works.

The Problem With Current Values

Everyone writes this query to get the latest telemetry value:

SELECT * FROM flight_data WHERE time >= now() - INTERVAL '1 minute' ORDER BY time DESC LIMIT 1

This scans recent data, sorts everything, then throws away all but one row. At high frequencies, this pattern completely breaks down. We were generating more than 90 fields at 60 updates per second from Microsoft Flight Simulator 2024 through FSUIPC, a utility that serves as a middleman for communication between the simulator and external apps or hardware controls. Our dashboards were taking 30 seconds or more to refresh.

Stop Querying, Start Caching

InfluxDB 3 Enterprise, a time series database with built-in compaction and caching features, offers something called Last Value Cache (LVC). Instead of searching through thousands of data points every time, it keeps the most recent value for each metric ready in memory.

Here’s how we query it:

SELECT * FROM last_cache('flight_data', 'flightsim_flight_data_lvc')

Setting it up:

influxdb3 create last_cache \
  --database flightsim \
  --table flight_data \
  --key-columns aircraft_tailnumber \
  --value-columns flight_altitude,speed_true_airspeed,flight_heading_magnetic,flight_latitude,flight_longitude \
  --count 1 \
  --ttl 10s \
  flightsim_flight_data_lvc

The query time dropped from 30+ seconds to less than 10ms. Dashboards update at 5FPS and feel pretty instantaneous if you are monitoring a pilot on a separate screen.
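
To make the idea concrete, here is a minimal Python sketch of what a last-value cache does conceptually. It is not how InfluxDB implements the feature: the `LastValueCache` class and its methods are hypothetical, and the only details borrowed from the setup above are a single key (the tail number), one retained value per key, and a 10-second TTL.

```python
import time


class LastValueCache:
    """Keeps only the newest row per key, with a time-to-live (illustrative only)."""

    def __init__(self, ttl_seconds=10.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested
        self._rows = {}              # key -> (stored_at, row)

    def write(self, key, row):
        # Overwrite on every write: constant time, no sorting, no scanning.
        self._rows[key] = (self._clock(), row)

    def read(self, key):
        entry = self._rows.get(key)
        if entry is None:
            return None
        stored_at, row = entry
        if self._clock() - stored_at > self._ttl:
            # Older than the TTL, so treat the entry as missing.
            del self._rows[key]
            return None
        return row


if __name__ == "__main__":
    cache = LastValueCache(ttl_seconds=10.0)
    cache.write("TAIL-1", {"flight_altitude": 3500})   # placeholder tail number
    cache.write("TAIL-1", {"flight_altitude": 3520})
    print(cache.read("TAIL-1"))
```

The point of the sketch is the cost profile: each read is a dictionary lookup, so its cost does not grow with the number of points written in the last minute, unlike the sort-and-limit query.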

Batch Everything

Writing individual telemetry points at high frequency creates thousands of network round trips. The fix is pretty simple:

// Batching configuration
MaxBatchSize: 100
MaxBatchAgeMs: 100 milliseconds

Buffer points and flush when you hit either limit. This captures about six complete telemetry snapshots per database write.

Measured performance:

- Write latency: 1.3ms per row.

- Sustained thousands of metrics per second.

- Zero data loss during 24-hour tests.
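
The size-or-age flush rule can be sketched in a few lines of Python. This is an assumption-laden illustration rather than the C# bridge's code: the `BatchWriter` class and the `flush` callback are hypothetical, and the defaults simply mirror the two limits quoted above.

```python
import time


class BatchWriter:
    """Buffers points and flushes on size or age, whichever comes first."""

    def __init__(self, flush, max_batch_size=100, max_batch_age_ms=100,
                 clock=time.monotonic):
        self._flush = flush                      # callable that receives a list of points
        self._max_size = max_batch_size
        self._max_age = max_batch_age_ms / 1000.0
        self._clock = clock
        self._buffer = []
        self._first_at = None                    # arrival time of the oldest buffered point

    def add(self, point):
        if not self._buffer:
            self._first_at = self._clock()
        self._buffer.append(point)
        too_big = len(self._buffer) >= self._max_size
        too_old = self._clock() - self._first_at >= self._max_age
        if too_big or too_old:
            self.flush()

    def flush(self):
        # Hand the whole buffer to the writer in one call, then start a new batch.
        if self._buffer:
            batch, self._buffer = self._buffer, []
            self._flush(batch)


if __name__ == "__main__":
    writer = BatchWriter(lambda batch: print(f"writing {len(batch)} points"),
                         max_batch_size=3)
    for value in range(7):
        writer.add(value)
    writer.flush()  # drain whatever is left at shutdown
```

In this sketch the age limit is only checked when a new point arrives. A production writer would normally add a timer so that a quiet stream still flushes on schedule.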

Aggressive Compaction

High-frequency telemetry creates hundreds of small files. We configured these environment variables in InfluxDB 3 Enterprise to use its compaction feature:

# COMPACTION OPTIMIZATION
INFLUXDB3_GEN1_DURATION=5m
INFLUXDB3_ENTERPRISE_COMPACTION_GEN2_DURATION=5m
INFLUXDB3_ENTERPRISE_COMPACTION_MAX_NUM_FILES_PER_PLAN=100
# REAL-TIME DATA ACCESS
INFLUXDB3_WAL_FLUSH_INTERVAL=100ms
INFLUXDB3_WAL_MAX_WRITE_BUFFER_SIZE=200000

Smaller time windows (five minutes vs. the default 10 minutes) and more frequent compaction prevent file accumulation, and it worked well enough for our scenario.

24-hour results:

- 142 automatic compaction events.

- From 127 files to 18 optimized files (Parquet).

- Storage from 500MB to 30MB (94% reduction).

Block Reading

We were making 90+ individual API calls through the FSUIPC Client DLL to collect telemetry. At 60FPS, that’s over 5,000 calls per second. The overhead was crushing performance.

Solution: Group related metrics into memory blocks.

_memoryBlocks = new Dictionary<string, MemoryBlock>
{
    // Position, attitude, altitude
    { "FlightData", new MemoryBlock(0x0560, 48) },
    { "Engine1", new MemoryBlock(0x088C, 64) },
    { "Engine2", new MemoryBlock(0x0924, 64) },
    // Flight controls, trim
    { "Controls", new MemoryBlock(0x0BC0, 44) },
    { "Autopilot", new MemoryBlock(0x07BC, 96) }
};

Each block fetches multiple related parameters in one operation.

This cut the call rate from a range of 2,700-5,400 calls per second to 240-480 calls per second (a reduction of more than 90%).
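
The following Python sketch shows the general shape of block reading: fetch one contiguous region, then unpack several values locally. The byte layout, the field names, and the `CountingMemory` stand-in are invented for illustration and are not real FSUIPC offsets or client calls.

```python
import struct

# Hypothetical layout for illustration; these are NOT real FSUIPC offsets.
# Three little-endian doubles packed back to back: latitude, longitude, altitude.
BLOCK_FORMAT = "<3d"
BLOCK_SIZE = struct.calcsize(BLOCK_FORMAT)
FIELD_NAMES = ("latitude", "longitude", "altitude")


class CountingMemory:
    """Stand-in for the simulator interface that counts read calls."""

    def __init__(self, data):
        self._data = data
        self.calls = 0

    def read(self, offset, size):
        self.calls += 1
        return self._data[offset:offset + size]


def read_fields_individually(memory, base):
    # One call per field: three round trips for three values.
    values = []
    for i in range(len(FIELD_NAMES)):
        raw = memory.read(base + 8 * i, 8)
        values.append(struct.unpack("<d", raw)[0])
    return dict(zip(FIELD_NAMES, values))


def read_fields_as_block(memory, base):
    # One call for the whole block, then unpack locally.
    raw = memory.read(base, BLOCK_SIZE)
    return dict(zip(FIELD_NAMES, struct.unpack(BLOCK_FORMAT, raw)))


if __name__ == "__main__":
    memory = CountingMemory(struct.pack(BLOCK_FORMAT, 47.5, -122.3, 3500.0))
    print(read_fields_as_block(memory, 0), "calls:", memory.calls)
```

Both functions return the same values; the difference is the number of round trips, which is the overhead that dominates at 60 updates per second.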

Separating Real-Time From Historical Queries

We built two distinct modes:

- Real time (using Last Value Cache): Current values only, sub-10ms response.

- Historical (traditional SQL): Trends and analysis, where slower reads are acceptable.

This separation seems obvious in hindsight, but most monitoring systems try to serve both needs with the same query patterns.
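
One way to keep the two modes apart is to route each dashboard request to a different query up front. The sketch below is an assumption about how that routing might look, not the dashboard's actual code: `build_query` is a hypothetical helper, the real-time statement is the cache query shown earlier, and the historical statement is modeled on the time-range query from the start of the article.

```python
def build_query(mode, minutes=60):
    """Return the SQL for the requested dashboard mode (illustrative routing)."""
    if mode == "realtime":
        # Served from the Last Value Cache: no scan, no sort.
        return "SELECT * FROM last_cache('flight_data', 'flightsim_flight_data_lvc')"
    if mode == "historical":
        # Validate the window so only a plain integer is interpolated into the SQL.
        if not isinstance(minutes, int) or minutes <= 0:
            raise ValueError("minutes must be a positive integer")
        return (
            "SELECT * FROM flight_data "
            f"WHERE time >= now() - INTERVAL '{minutes} minutes' "
            "ORDER BY time"
        )
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    print(build_query("realtime"))
    print(build_query("historical", minutes=30))
```

Making the mode an explicit argument means a live gauge can never accidentally trigger a range scan, and a trend chart never reads from a cache that only holds the latest value.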

Patterns and Practices for Real-Time Dashboarding

These aren’t exotic techniques. Whether you’re monitoring manufacturing equipment, tracking application metrics or processing market data feeds, the patterns are the same:

- Cache current values in memory.

- Batch writes at 100-200ms intervals.

- Configure aggressive compaction.

- Read related metrics together.

- Separate real-time from historical queries.

The Results

These techniques work anywhere you’re dealing with high-frequency data:

- IoT sensors: Manufacturing equipment, smart buildings, environmental monitoring.

- Application metrics: Application performance monitoring (APM) data, microservice telemetry, distributed tracing.

- Financial data: Market feeds, trading systems, risk monitoring.

- Gaming: Player telemetry, server metrics, performance monitoring.

After implementing these patterns:

- Query time: 30 seconds down to sub-10ms.

- Storage: 94% reduction.

- API calls: 90% fewer.

- Dashboard experience: Actually real time (well, as close as we could get!).
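
As a quick sanity check, the percentages above follow directly from the raw figures reported earlier in the article. The helper name below is just for this example.

```python
def percent_reduction(before, after):
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100


storage = percent_reduction(500, 30)        # MB, from the compaction results
calls_low = percent_reduction(2700, 240)    # calls/second, low end of the range
calls_high = percent_reduction(5400, 480)   # calls/second, high end of the range
speedup = 30 / 0.010                        # 30 s versus 10 ms

print(f"storage: {storage:.0f}%")
print(f"API calls: {calls_low:.1f}% to {calls_high:.1f}%")
print(f"query speedup: at least {speedup:.0f}x")
```

The speedup is a lower bound because the starting point was "30 seconds or more" and the end point was "sub-10ms".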

The difference isn’t about faster hardware; it’s about using the right patterns for high-frequency data. I hope you give these techniques a try!

The Code

Complete implementation on GitHub:

- C# data bridge: msfs2influxdb3-enterprise

- Next.js dashboard: FlightSim2024-InfluxDB3Enterprise

Whether you’re building for flight simulators or production monitoring systems, we found these patterns were fun to discover on the path to real-time data handling.

The post How We Cut Telemetry Queries to Under 10 Milliseconds appeared first on The New Stack.