In this post, we’ll build a real-time FAQ chatbot for a tax management SaaS using Gemini 2.5 Flash and NestJS, with Server-Sent Events (SSE) streaming replies to the user. You’ll learn how to set up Gemini, how context caching works (we’ll rely on implicit caching in our project), and how to work with SSE, async generators and observables in NestJS.

Prerequisites

To follow along and get the most from this post, you’ll need to have:

- Basic familiarity with cURL

- Google Gemini API key from AI Studio

- Basic understanding of NestJS and TypeScript

What Is Google Gemini?

Gemini is Google’s family of multimodal AI models, capable of handling text, images, audio, video, code and embeddings. It’s used in chatbots, summarization, embeddings for RAG (Retrieval-Augmented Generation), code analysis and other forms of reasoning and generation.

Gemini has diverse models that excel at different tasks, the most prominent being:

- 2.5 Pro: Best for complex reasoning, supports explicit caching

- 2.5 Flash: More balanced with less reasoning capacity, but improved speed and lower cost

- 2.5 Flash-Lite: Best for high-frequency tasks and the cheapest of the three

For our FAQ chatbot, we’ll use 2.5 Flash because of its speed. It is well-suited for streaming, and its balanced reasoning makes it great for answering user queries.

Understanding Context Caching in Gemini

Gemini models feature large context windows of up to 1 million tokens. This lets us supply Gemini with extensive data for a task, even if only a small portion is needed, and the model picks out the relevant parts on its own.

Context caching lets Gemini reuse input it has already processed, so repeated input tokens cost less and latency can sometimes drop. This is helpful when working with large prompts that first establish context for Gemini to use in generating responses, such as data for answering FAQ queries.

There are two types of context caching: implicit and explicit caching.

Implicit Caching

Implicit caching is enabled by default for Gemini 2.5 models. Gemini automatically caches repeated input, and when a request hits the cache, the cost savings are passed on without any configuration from us. Although implicit cache hits aren’t guaranteed, the feature is available on the Gemini free tier and doesn’t require any additional setup.

A basic requirement for context caching is that the input token count be at least 1,024 for 2.5 Flash and 4,096 for 2.5 Pro. To increase the chances of an implicit cache hit, Gemini recommends placing large and repeated content at the beginning of the prompt.
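To make the ordering advice concrete, here is a minimal sketch that compares how much leading text two prompts share when the FAQ comes first versus when the question comes first. The helper names (sharedPrefixLength, prefixFirstPrompt, questionFirstPrompt) are hypothetical, and the sketch assumes that implicit caching benefits from a long shared prefix, as the recommendation above suggests. It does not call Gemini or measure real cache hits.

```typescript
// Hypothetical helpers for illustration; only the ordering idea comes from the text.

/** Length of the leading run of characters that two prompts share. */
function sharedPrefixLength(a: string, b: string): number {
  const limit = Math.min(a.length, b.length);
  let i = 0;
  while (i < limit && a[i] === b[i]) i++;
  return i;
}

/** Stable content first, per-request content last. */
function prefixFirstPrompt(staticContext: string, question: string): string {
  return `${staticContext}\n\nUser question: ${question}`;
}

/** Per-request content first: the prompts diverge almost immediately. */
function questionFirstPrompt(staticContext: string, question: string): string {
  return `User question: ${question}\n\n${staticContext}`;
}

const staticContext =
  'Q1: What pricing plans are available?\nA1: Starter, Growth, and Team.';
const firstQuestion = 'Is my data secure?';
const secondQuestion = 'Do you integrate with Xero?';

const goodOverlap = sharedPrefixLength(
  prefixFirstPrompt(staticContext, firstQuestion),
  prefixFirstPrompt(staticContext, secondQuestion),
);
const poorOverlap = sharedPrefixLength(
  questionFirstPrompt(staticContext, firstQuestion),
  questionFirstPrompt(staticContext, secondQuestion),
);

// With the FAQ first, the whole static block is shared between requests.
console.log({ goodOverlap, poorOverlap });
```

With the static block first, the two prompts stay identical until the question begins, which is why our project places the system instruction and FAQ ahead of the user question.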

We can see how many tokens were cache hits in the usageMetadata field of the response (which we’ll log later in our project to verify implicit caching).

With implicit caching, we call the Gemini API as usual, with no caching-specific configuration.

Here is an example call, without streaming:

const response = await this.ai.models.generateContent({

model: "gemini-2.5-pro",

contents: fullPrompt,

});

For this call to benefit from implicit caching, the prompt needs to meet the minimum token count discussed earlier. If Gemini detects a cache hit, it automatically applies the cost savings using the cache it created on its own.

In practice, as we’ll see later in our project, cache hits typically start to appear on the second or third request sent within a short timeframe.

Explicit Caching

With explicit caching, we manually create and manage caches with IDs and expiry times. Unlike implicit caching, where hits aren’t guaranteed, explicit caching makes the savings predictable because we decide what is cached and reference that cache in each request.

const cache = await this.ai.caches.create({

model: 'gemini-2.5-pro',

config: {

expireTime: "2025-10-15T17:13:00Z", // RFC 3339 format (here, set to expire in 2 hours)

contents: largeFAQ ,

systemInstruction: "You are a helpful assistant that can answer questions about the FAQ.",

}

});

const response = await this.ai.models.generateContent({

model: 'gemini-2.5-pro',

contents: "What is TaxFlow and who is it for?",

config: {

cachedContent: cache.name,

}

});

By manually setting the expiry time for our cache and using it to generate our content, we can save costs through caching.
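Hardcoding a timestamp like the one above goes stale quickly, so the expiry is usually computed at request time. The sketch below shows one way to produce an RFC 3339 timestamp a given number of hours ahead. The helper name expireTimeFromNow is hypothetical and not part of the Gemini SDK.

```typescript
/**
 * Returns an RFC 3339 timestamp `hours` after `now`, in UTC.
 * Hypothetical helper for illustration; not part of @google/genai.
 */
function expireTimeFromNow(hours: number, now: Date = new Date()): string {
  if (!Number.isFinite(hours) || hours <= 0) {
    throw new RangeError('hours must be a positive, finite number');
  }
  const millisecondsAhead = hours * 60 * 60 * 1000;
  // toISOString() always emits UTC with a trailing "Z", which is valid RFC 3339.
  return new Date(now.getTime() + millisecondsAhead).toISOString();
}

// Fixed "now" so the result is reproducible.
const exampleExpiry = expireTimeFromNow(2, new Date('2025-10-15T15:13:00Z'));
console.log(exampleExpiry); // 2025-10-15T17:13:00.000Z
```

The returned string could then be passed as the expireTime value in the cache configuration shown earlier.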

For our FAQ chatbot, we will use implicit caching because it’s free and easy to set up.

Project Setup

Run the commands below in your terminal to create a NestJS project:

nest new faq-chatbot

cd faq-chatbot

Next, run the command below to install our dependencies:

npm i @google/genai @nestjs/config rxjs

Here, @google/genai is the Gemini SDK, @nestjs/config loads environment variables into our app, and rxjs handles reactive streams, which we’ll use later in the controller.

Next, create a .env file and paste your Google API key and the model we’ll be using:

GEMINI_API_KEY=your_api_key

GEMINI_MODEL=gemini-2.5-flash

Now, let’s create the chat module with the following structure:

src/

├── chat/

│ ├── chat.controller.spec.ts

│ ├── chat.controller.ts

│ ├── chat.module.ts

│ ├── chat.service.spec.ts

│ ├── chat.service.ts

│ └── faqs.json

For the faqs.json data, copy the entries below and add about 26 more. Aim for around 950 words in your faqs.json file so the prompt meets the minimum token count of 1,024 for context caching with Gemini 2.5 Flash.
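A quick way to check progress toward that word target is to count the words across all entries. The sketch below does this for an in-memory sample. The names FaqEntry, countWords, faqWordCount and TARGET_WORDS are hypothetical, and a word count is only a rough proxy: the authoritative number is the promptTokenCount that Gemini reports, which we log later.

```typescript
// Shape of one entry in faqs.json, matching the "q" and "a" keys used in this post.
interface FaqEntry {
  q: string;
  a: string;
}

/** Counts whitespace-separated words; an empty or blank string counts as zero. */
function countWords(text: string): number {
  const trimmed = text.trim();
  return trimmed === '' ? 0 : trimmed.split(/\s+/).length;
}

/** Total words across every question and answer. */
function faqWordCount(entries: FaqEntry[]): number {
  return entries.reduce(
    (sum, entry) => sum + countWords(entry.q) + countWords(entry.a),
    0,
  );
}

// Assumed target taken from the guidance above.
const TARGET_WORDS = 950;

const sampleFaq: FaqEntry[] = [
  { q: 'Is my data secure?', a: 'Yes. We follow SOC 2 aligned practices.' },
];

const sampleWords = faqWordCount(sampleFaq);
const status = sampleWords >= TARGET_WORDS ? 'meets' : 'is below';
console.log(`${sampleWords} words, which ${status} the ${TARGET_WORDS}-word target`);
```

In the project itself, the same functions could be pointed at the imported faqs.json array instead of sampleFaq.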

[

{ "q": "What pricing plans are available?",

"a": "Starter ($19/mo), Growth ($49/mo), and Team ($99/mo). Annual billing saves 20%. Team includes priority support and advanced permissions." },

{ "q": "Is my data secure?",

"a": "Yes. We follow SOC 2 aligned practices and conduct periodic third-party assessments." },

{ "q": "Do you integrate with accounting software like QuickBooks or Xero?",

"a": "Yes. We provide one-way and two-way sync with QuickBooks Online and Xero for accounts, transactions, and categories where supported." },

{ "q": "How does TaxFlow calculate estimated quarterly taxes?",

"a": "We apply safe-harbor rules using year-to-date income and expense data, factoring jurisdiction-specific rates, thresholds, and credits where applicable." }

]

In order to import the faqs.json file into our service, we’ll need to update our tsconfig.json file.

Update it with the following:

{

"compilerOptions": {

"module": "commonjs",

"target": "es2023",

"moduleResolution": "node",

"resolveJsonModule": true,

"esModuleInterop": true,

"strict": true,

"skipLibCheck": true,

"outDir": "./dist",

"declaration": true,

"removeComments": true,

"emitDecoratorMetadata": true,

"experimentalDecorators": true,

"allowSyntheticDefaultImports": true,

"sourceMap": true,

"baseUrl": "./",

"incremental": true,

"strictNullChecks": true,

"forceConsistentCasingInFileNames": true,

"noImplicitAny": false,

"strictBindCallApply": false,

"noFallthroughCasesInSwitch": false

}

}

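We’ve turned on JSON imports with resolveJsonModule, and esModuleInterop lets us use the default-import syntax for faqs.json, alongside other helpful configurations.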

Next, update the app module to import the ConfigModule so that we can load environment variables in our app:

import { Module } from '@nestjs/common';

import { ConfigModule } from '@nestjs/config';

import { ChatModule } from './chat/chat.module';

@Module({

imports: [ConfigModule.forRoot({ isGlobal: true }), ChatModule],

})

export class AppModule {}

Building the Chat Service

Our chat service takes the user query and builds a prompt with it, using the FAQ data as a prefix and providing Gemini instructions on how to reply. It then streams the reply and logs usage data metrics for tracking token usage and caching.

Update the chat.service.ts file with the following:

import { Injectable, Logger } from '@nestjs/common';

import { ConfigService } from '@nestjs/config';

import { GoogleGenAI, GenerateContentResponseUsageMetadata, GenerateContentResponse } from '@google/genai';

import FAQ from './faqs.json';

@Injectable()

export class ChatService {

private readonly ai: GoogleGenAI;

private readonly model: string;

private readonly logger = new Logger(ChatService.name);

constructor(private readonly cfg: ConfigService) {

this.ai = new GoogleGenAI({ apiKey: this.cfg.getOrThrow('GEMINI_API_KEY') });

this.model = this.cfg.getOrThrow('GEMINI_MODEL');

}

async *streamAnswer(userQuestion: string): AsyncGenerator<string> {

try {

const fullPrompt = this.buildFullPrompt(userQuestion);

const response = await this.callGeminiStream(fullPrompt);

yield* this.processStreamEvents(response);

} catch (error) {

this.logger.error('Stream error:', error);

yield `Error: Unable to process your question. Please try again or contact support.`;

}

}

private buildFullPrompt(userQuestion: string): string {

const systemInstruction = this.buildSystemInstruction();

const faqBlock = this.buildFaqBlock();

return `${systemInstruction}

# FAQ (ground truth)

${faqBlock}

User question: ${userQuestion}

Instructions:

- Answer strictly from the FAQ.

- If the answer is not in the FAQ, say: "I don't know--please contact support."

- Be concise and factual.`;

}

private buildSystemInstruction(): string {

return `You are "TaxFlow Assistant", a concise FAQ chatbot for a tax management SaaS.

Answer ONLY using the provided FAQ content.

If the answer is not present, respond exactly: "I don't know--please contact support."

FORMATTING REQUIREMENTS:

- Use clear headings with **bold text** for main topics

- Use simple bullet points with - for lists (format: - Item text)

- Use proper line breaks between sections

- Keep responses well-structured and easy to read

- Use short paragraphs or bullet points when appropriate.`;

}

private buildFaqBlock(): string {

return FAQ.map((item, i) => `Q${i + 1}: ${item.q}\nA${i + 1}: ${item.a}`).join('\n\n');

}

private async callGeminiStream(fullPrompt: string): Promise<AsyncGenerator<GenerateContentResponse>> {

return await this.ai.models.generateContentStream({

model: this.model,

contents: fullPrompt,

config: {

temperature: 0.2,

maxOutputTokens: 2048

}

});

}

private async *processStreamEvents(response: AsyncGenerator<GenerateContentResponse>): AsyncGenerator<string> {

let lastUsage: GenerateContentResponseUsageMetadata | null = null;

let sawText = false;

for await (const fragment of response) {

if (fragment.text) {

sawText = true;

yield fragment.text;

}

if (fragment.usageMetadata) lastUsage = fragment.usageMetadata;

}

this.logUsageMetadata(lastUsage);

if (!sawText) {

yield `I don't know--please contact support.`;

}

}

private logUsageMetadata(lastUsage: GenerateContentResponseUsageMetadata | null): void {

if (lastUsage) {

const { promptTokenCount, totalTokenCount, cacheTokensDetails } = lastUsage;

this.logger.log(`usage: prompt=${promptTokenCount}, total=${totalTokenCount}, cached Tokens=${cacheTokensDetails?.[0]?.tokenCount}`);

}

}

}

In the code above, streamAnswer() first builds the full prompt with the user’s question, incorporating our defined system instructions, formatting guidelines and converting the faqs.json file to a string to use as the prefix. Then, it calls Gemini with the prompt and passes the response to processStreamEvents().

When we call Gemini, we set the temperature to 0.2, which makes its responses less creative and more consistent, ideal for a FAQ chatbot. We also cap the output at 2,048 tokens, which bounds cost while leaving enough room that FAQ answers aren’t cut short.

In our ChatService, streamAnswer() is an async generator: a function declared with async function* (or, as a class method, async *name()) that yields values over time. Think of it as an ongoing live feed: fragments come one after another, but they might arrive at different intervals.

In our case, streamAnswer() yields each response fragment to the controller once it’s ready, which then converts it to SSE (Server-Sent Events).

The yield keyword emits a value from a generator, while yield* delegates to another generator, forwarding all of its yielded values. streamAnswer() uses yield* this.processStreamEvents(response), so every fragment that processStreamEvents() produces from Gemini’s stream is passed on to the controller as soon as it’s available. In other words, streamAnswer() delegates the job of producing the actual output to processStreamEvents().
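The difference between yield and yield* is easier to see in isolation. Here is a minimal, self-contained sketch with no Gemini involved. The generator names inner and outer and the collect helper are made up for illustration.

```typescript
/** Stands in for processStreamEvents(): produces the actual fragments. */
async function* inner(): AsyncGenerator<string> {
  yield 'Hello';
  yield ', ';
  yield 'world';
}

/** Stands in for streamAnswer(): emits its own values and delegates the rest. */
async function* outer(): AsyncGenerator<string> {
  yield '[start] '; // plain yield: emits one value
  yield* inner(); // yield*: forwards every value inner() yields, in order
  yield ' [end]';
}

/** Drains an async iterable into an array. */
async function collect(source: AsyncIterable<string>): Promise<string[]> {
  const values: string[] = [];
  for await (const value of source) values.push(value);
  return values;
}

collect(outer()).then((parts) => console.log(parts.join('')));
// prints: [start] Hello, world [end]
```

The consumer of outer() sees five values and cannot tell which ones came from inner(), which is exactly how the controller sees the fragments from streamAnswer().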

In processStreamEvents(), each fragment is processed once it is obtained from Gemini using:

for await (const fragment of response) {

if (fragment.text) {

sawText = true;

yield fragment.text;

}

if (fragment.usageMetadata) lastUsage = fragment.usageMetadata;

}

Here, for await (const fragment of response) {...} iterates over the async iterable called response: each time a chunk becomes available, it is bound to fragment and processed. For each fragment, we check whether it has text and yield it if so, and if it has usageMetadata, we save it.

Here, our loop is pull-based, meaning, we request each chunk one after another as they become available. We’ll contrast this later in the controller with observables, which are push-based.

The usageMetadata object includes metadata from Gemini, such as promptTokenCount, which represents the total input tokens for the prompt we sent; totalTokenCount, which includes both input and output tokens used for the request and response; and cacheTokensDetails, which contains the cached token count and is undefined unless Gemini finds a cache hit. We log all of these details.
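To turn those raw counts into something easier to read in logs, we could compute the share of the prompt that was served from cache. The sketch below uses a local UsageLike interface that mirrors only the two fields discussed above. It is a stand-in for the SDK type, and the helpers cachedTokens and cacheHitRatio are hypothetical.

```typescript
// Local stand-ins mirroring only the fields used here; not the SDK's own types.
interface TokenDetailLike {
  tokenCount?: number;
}

interface UsageLike {
  promptTokenCount?: number;
  cacheTokensDetails?: TokenDetailLike[];
}

/** Sums the cached token counts, treating missing details as zero. */
function cachedTokens(usage: UsageLike | null | undefined): number {
  return (usage?.cacheTokensDetails ?? []).reduce(
    (sum, detail) => sum + (detail.tokenCount ?? 0),
    0,
  );
}

/** Fraction of prompt tokens that were cache hits, between 0 and 1. */
function cacheHitRatio(usage: UsageLike | null | undefined): number {
  const promptTokens = usage?.promptTokenCount ?? 0;
  if (promptTokens <= 0) return 0;
  return Math.min(cachedTokens(usage) / promptTokens, 1);
}

// A request with a cache hit, and one without any cache details.
const withHit: UsageLike = {
  promptTokenCount: 1200,
  cacheTokensDetails: [{ tokenCount: 900 }],
};
const withoutHit: UsageLike = { promptTokenCount: 1200 };

console.log(cacheHitRatio(withHit)); // 0.75
console.log(cacheHitRatio(withoutHit)); // 0
```

Treating a missing cacheTokensDetails as zero keeps the log line stable on the first request, before any cache exists.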

Setting Up the Controller

Next, let’s set up our controller with SSE. Update your chat.controller.ts file with the following:

import { Controller, Query, Sse } from '@nestjs/common';

import { from, map, Observable, catchError, of } from 'rxjs';

import { ChatService } from './chat.service';

@Controller('chat')

export class ChatController {

constructor(private readonly chatService: ChatService) {}

@Sse('stream')

stream(@Query('q') q: string): Observable<MessageEvent<string>> {

const asyncGenerator = this.chatService.streamAnswer(q ?? '');

return from(asyncGenerator).pipe(

map(piece => ({ data: piece }) as MessageEvent<string>),

catchError(error => {

console.error('Controller stream error:', error);

return of({ data: 'Error: Unable to process your question. Please try again.' } as MessageEvent<string>);

})

);

}

}

SSE is a one-way HTTP stream from server to browser. In NestJS, our server pushes messages as they become available in the form of Observable<MessageEvent>, and we use @Sse() to decorate our route as a Server-Sent Events endpoint.

The async generator returned by streamAnswer is converted into an observable using the from() method from RxJS. An observable, like an async generator, represents a stream of values over time.

However, an async generator is pull-based, with the consumer requesting each value as it becomes available, whereas an observable is push-based, with the producer sending new values to all subscribers as they are emitted.
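The pull versus push distinction can be shown without RxJS. In the sketch below, pullAll() asks the generator for each value itself, while pushFrom() drains the generator and calls a listener whenever a value arrives. The names ticks, pullAll, pushFrom and Listener are hypothetical. pushFrom() is a simplified illustration of the idea and not how RxJS implements from().

```typescript
// Callbacks a push-based consumer registers, similar in spirit to an observer.
type Listener<T> = {
  next: (value: T) => void;
  complete: () => void;
  error: (err: unknown) => void;
};

async function* ticks(): AsyncGenerator<number> {
  yield 1;
  yield 2;
  yield 3;
}

/** Pull: the consumer explicitly requests each value with next(). */
async function pullAll(): Promise<number[]> {
  const iterator = ticks();
  const seen: number[] = [];
  let step = await iterator.next();
  while (!step.done) {
    seen.push(step.value);
    step = await iterator.next();
  }
  return seen;
}

/** Push: the adapter drives the source and notifies the listener. */
function pushFrom<T>(source: AsyncIterable<T>, listener: Listener<T>): void {
  void (async () => {
    try {
      for await (const value of source) listener.next(value);
      listener.complete();
    } catch (err) {
      listener.error(err);
    }
  })();
}

const pushed: number[] = [];
const pushFinished = new Promise<void>((resolve, reject) => {
  pushFrom(ticks(), {
    next: (value) => pushed.push(value),
    complete: resolve,
    error: reject,
  });
});

Promise.all([pullAll(), pushFinished]).then(([pulled]) => {
  console.log({ pulled, pushed });
});
```

Both consumers receive the same values. What differs is who is in control: the pulling loop decides when to ask, while the listener simply reacts when called.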

Next, map(piece => ({ data: piece })) wraps each chunk as a MessageEvent so NestJS can send it as a Server-Sent Event.
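For context, this is roughly what such a message looks like on the wire in the text/event-stream format: each line of the payload is prefixed with data: and a blank line ends the message. NestJS performs this serialization for us. The toSseFrame function and SseMessage interface below are hypothetical and only illustrate the format.

```typescript
// Minimal message shape for illustration; not the NestJS MessageEvent type.
interface SseMessage {
  data: string;
  event?: string;
  id?: string;
}

/** Serializes one message into text/event-stream format. */
function toSseFrame(message: SseMessage): string {
  const lines: string[] = [];
  if (message.event) lines.push(`event: ${message.event}`);
  if (message.id) lines.push(`id: ${message.id}`);
  // A payload containing newlines becomes several "data:" lines.
  for (const line of message.data.split(/\r\n|\r|\n/)) {
    lines.push(`data: ${line}`);
  }
  // A blank line terminates the message.
  return lines.join('\n') + '\n\n';
}

const singleLine = toSseFrame({ data: 'Starter ($19/mo)' });
const multiLine = toSseFrame({ data: 'first\nsecond', event: 'chunk' });

console.log(JSON.stringify(singleLine)); // "data: Starter ($19/mo)\n\n"
console.log(JSON.stringify(multiLine)); // "event: chunk\ndata: first\ndata: second\n\n"
```

This is also why the cURL output later in the post shows each chunk on its own data: line.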

Finally, catchError(...) handles errors by pushing a friendly message instead of breaking the stream if an error occurs.

Testing the Chatbot

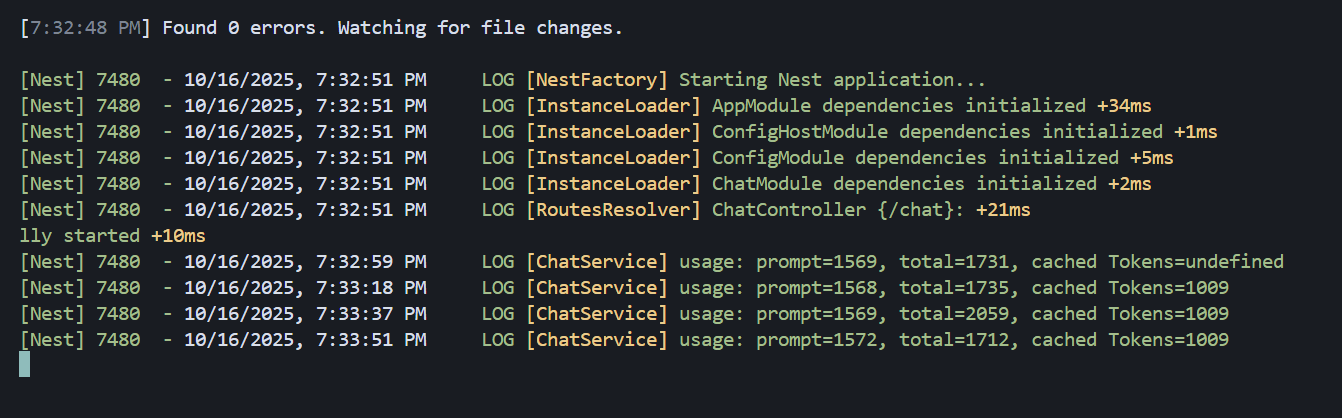

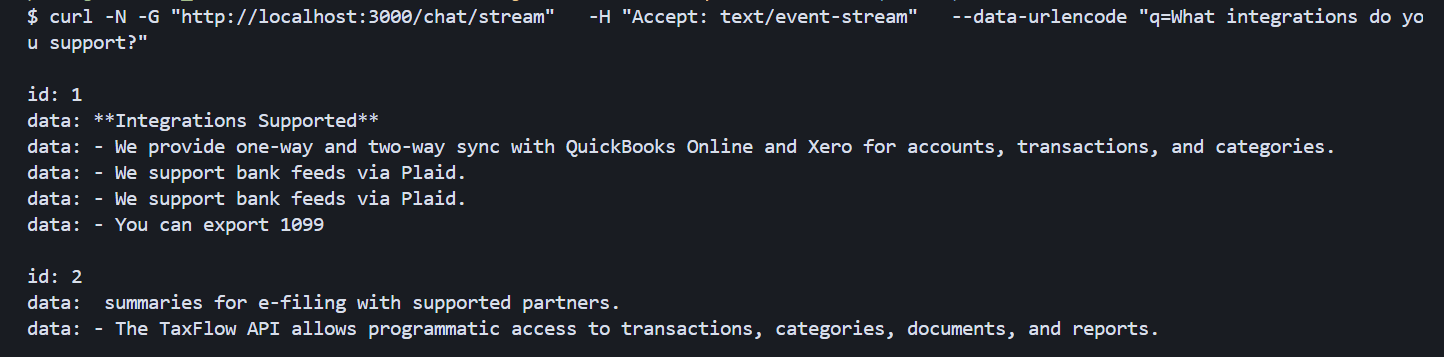

With everything set up, it’s time to start our server and test our chatbot. Try out the following cURL requests and observe the responses and the logs. You should notice that by the second or third request, the cached token count begins to appear in the logs, indicating that implicit caching has kicked in.

curl -N -G "http://localhost:3000/chat/stream" \

-H "Accept: text/event-stream" \

--data-urlencode "q=What pricing plans are available?"

curl -N -G "http://localhost:3000/chat/stream" \

-H "Accept: text/event-stream" \

--data-urlencode "q=Is my data secure?"

curl -N -G "http://localhost:3000/chat/stream" \

-H "Accept: text/event-stream" \

--data-urlencode "q=What integrations do you support?"

curl -N -G "http://localhost:3000/chat/stream" \

-H "Accept: text/event-stream" \

--data-urlencode "q=How does TaxFlow calculate estimated quarterly taxes?"

With everything set up correctly, your logs should look similar to this, showing implicit caching occurring.

Your responses should look like this, with chunks coming one after another, showing the nature of streaming.

Conclusion

In this post, we built a real-time FAQ chatbot for a tax management SaaS using Gemini 2.5 Flash and NestJS with SSE to stream replies to the user. Throughout this project, you’ve learned how to set up Gemini and now understand how context caching in Gemini works. Possible next steps include implementing explicit caching, implementing RAG with embeddings for larger FAQ datasets, and exploring Google’s Search grounding for live data.