Throughout 2025, the PVS-Studio team has been actively checking open-source C# projects. Over the year, we discovered plenty of defects. So, we picked the ten most interesting ones from this huge variety. We hope you find this roundup interesting and useful. Enjoy!

How did we compile the list?

There are several criteria the project code should meet to earn a place in our top list:

- it comes from an open-source project;

- the issues were detected by PVS-Studio;

- the code most likely contains errors;

- the code is interesting to check;

- each error is unique.

Since we regularly put together such lists, we've gathered an impressive collection of curious errors. You can read articles from previous years here:

- top 10 errors in 2024;

- top 10 errors in 2023;

- top 10 errors in 2022;

- top 10 errors in 2021;

- top 10 errors in 2020;

- top 10 errors in 2019.

Now, let's dive into the fascinating abyss of C# errors for 2025!

P. S. The article author selected and grouped the errors based on his subjective opinion. If you think this or that bug deserves another place, feel free to leave a comment :)

10th place. Try to find it

Today's list starts with an error from the article about checking .NET 9. It feels like .NET 9 was released only recently, yet .NET 10 replaced it a little over a month ago. We have covered the most significant changes in this article.

Let's get back to the analysis:

public static void SetAsIConvertible(this ref ComVariant variant,

IConvertible value)

{

TypeCode tc = value.GetTypeCode();

CultureInfo ci = CultureInfo.CurrentCulture;

switch (tc)

{

case TypeCode.Empty: break;

case TypeCode.Object:

variant = ComVariant.CreateRaw(....); break;

case TypeCode.DBNull:

variant = ComVariant.Null; break;

case TypeCode.Boolean:

variant = ComVariant.Create<bool>(....); break;

case TypeCode.Char:

variant = ComVariant.Create<ushort>(value.ToChar(ci)); break;

case TypeCode.SByte:

variant = ComVariant.Create<sbyte>(value.ToSByte(ci)); break;

case TypeCode.Byte:

variant = ComVariant.Create<byte>(value.ToByte(ci)); break;

case TypeCode.Int16:

variant = ComVariant.Create(value.ToInt16(ci)); break;

case TypeCode.UInt16:

variant = ComVariant.Create(value.ToUInt16(ci)); break;

case TypeCode.Int32:

variant = ComVariant.Create(value.ToInt32(ci)); break;

case TypeCode.UInt32:

variant = ComVariant.Create(value.ToUInt32(ci)); break;

case TypeCode.Int64:

variant = ComVariant.Create(value.ToInt64(ci)); break;

case TypeCode.UInt64:

variant = ComVariant.Create(value.ToInt64(ci)); break;

case TypeCode.Single:

variant = ComVariant.Create(value.ToSingle(ci)); break;

case TypeCode.Double:

variant = ComVariant.Create(value.ToDouble(ci)); break;

case TypeCode.Decimal:

variant = ComVariant.Create(value.ToDecimal(ci)); break;

case TypeCode.DateTime:

variant = ComVariant.Create(value.ToDateTime(ci)); break;

case TypeCode.String:

variant = ComVariant.Create(....); break;

default:

throw new NotSupportedException();

}

}

Can you see the issue? It's definitely there!

case TypeCode.Int64:

variant = ComVariant.Create(value.ToInt64(ci)); break;

case TypeCode.UInt64:

variant = ComVariant.Create(value.ToInt64(ci)); break; // <=

PVS-Studio warning: V3139 Two or more case-branches perform the same actions. DynamicVariantExtensions.cs 68

I hope you've given your eyes a good workout, and your keen eyesight hasn't let you down. In the TypeCode.UInt64 case, the developers should have called the existing ToUInt64() method instead of ToInt64(). This looks like a copy-paste error.
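To see why the wrong conversion matters, here is a minimal sketch that does not use ComVariant at all. The class and method names are hypothetical; the only real API involved is IConvertible on ulong. It shows that reading a large UInt64 value through ToInt64 throws an OverflowException, while ToUInt64 returns the value unchanged.

```csharp
using System;
using System.Globalization;

public static class UInt64ConversionDemo
{
    // Mirrors the flagged branch: a UInt64 value is read through ToInt64.
    // Returns true when the conversion overflows.
    public static bool ToInt64Overflows(ulong source)
    {
        IConvertible value = source;
        try
        {
            value.ToInt64(CultureInfo.InvariantCulture);
            return false;
        }
        catch (OverflowException)
        {
            return true;
        }
    }

    // The intended conversion: ToUInt64 preserves every ulong value.
    public static ulong ReadAsUInt64(ulong source)
    {
        IConvertible value = source;
        return value.ToUInt64(CultureInfo.InvariantCulture);
    }
}
```

Small values pass through both conversions, so a bug like this can stay hidden until a value above long.MaxValue shows up.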

9th place. Invalid format

The ninth place goes to an error described in the article about checking the Neo and NBitcoin projects:

public override string ToString()

{

var sb = new StringBuilder();

sb.AppendFormat("{1:X04} {2,-10}{3}{4}",

Position,

OpCode,

DecodeOperand());

return sb.ToString();

}

PVS-Studio warning: V3025 [CWE-685] Incorrect format. A different number of format items is expected while calling 'AppendFormat' function. Format items not used: {3}, {4}. Arguments not used: 1st. VMInstruction.cs 105

Calling the overridden ToString method inevitably causes an exception. This is due to an incorrect sb.AppendFormat call containing two errors.

- The format string contains more placeholders than there are arguments, which causes a FormatException.

- Even if we fix the first issue by matching the number of arguments and placeholders, the call will still throw. Placeholder indexing starts at 0, not 1, so the placeholder with index 4 requires a fifth argument, which is absent.
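Both points can be reproduced in isolation. The sketch below is an assumption about how a fix might look, not the project's actual patch: the class name, parameter types, and the corrected format string are all hypothetical. It keeps the original format string in one method and a zero-based, three-placeholder version in the other.

```csharp
using System;
using System.Text;

public static class FormatDemo
{
    // Original format string: indices 1..4 but only three arguments.
    // Returns true when AppendFormat rejects it.
    public static bool OriginalThrows(int position, string opCode, string operand)
    {
        try
        {
            new StringBuilder().AppendFormat(
                "{1:X04} {2,-10}{3}{4}", position, opCode, operand);
            return false;
        }
        catch (FormatException)
        {
            return true;
        }
    }

    // One possible fix (an assumption): zero-based indices, one per argument.
    public static string Fixed(int position, string opCode, string operand)
    {
        var sb = new StringBuilder();
        sb.AppendFormat("{0:X04} {1,-10}{2}", position, opCode, operand);
        return sb.ToString();
    }
}
```

With the zero-based indices, the hexadecimal format applies to the position again, which is presumably what the original author intended.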

8th place. Navel-gazing

The next error comes from the article about testing the Lean trading engine:

public override int GetHashCode()

{

unchecked

{

var hashCode = Definition.GetHashCode();

var arr = new int[Legs.Count];

for (int i = 0; i < Legs.Count; i++)

{

arr[i] = Legs[i].GetHashCode();

}

Array.Sort(arr);

for (int i = 0; i < arr.Length; i++)

{

hashCode = (hashCode * 397) ^ arr[i];

}

return hashCode;

}

}

public override bool Equals(object obj)

{

....

return Equals((OptionStrategyDefinitionMatch) obj);

}

PVS-Studio warning: V3192 The 'Legs' property is used in the 'GetHashCode' method but is missing from the 'Equals' method. OptionStrategyDefinitionMatch.cs 176

The analyzer ran an interprocedural check on the overridden Equals(object) method, which delegates to the typed Equals(OptionStrategyDefinitionMatch) overload, and found that the overload never reads the instance's own Legs property, even though GetHashCode relies on it.

Let's take a closer look at the Equals method:

public bool Equals(OptionStrategyDefinitionMatch other)

{

....

var positions = other.Legs

.ToDictionary(leg => leg.Position,

leg => leg.Multiplier);

foreach (var leg in other.Legs) // <=

{

int multiplier;

if (!positions.TryGetValue(leg.Position, out multiplier))

{

return false;

}

if (leg.Multiplier != multiplier)

{

return false;

}

}

return true;

}

Note that the method iterates over other.Legs. For each element in that collection, the code tries to find it in the positions dictionary—but that dictionary also comes from other.Legs. As a result, the code checks whether elements of a collection exist in the same collection.

We can fix the code by replacing other.Legs with Legs at the marked location.
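The difference between the two loops is easy to miss, so here is a reduced sketch of both shapes. The Leg record and the helper class are hypothetical stand-ins, and the code elided with "...." in the original is not reproduced; the fixed variant assumes any count comparison happens there.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the real leg type: only the two members used below.
public sealed record Leg(string Position, int Multiplier);

public static class LegComparison
{
    // Flagged shape: the dictionary and the loop both read from 'other',
    // so 'mine' is never consulted and the result is always true.
    public static bool BuggyEquals(IReadOnlyList<Leg> mine, IReadOnlyList<Leg> other)
    {
        var positions = other.ToDictionary(leg => leg.Position, leg => leg.Multiplier);
        foreach (var leg in other)
        {
            if (!positions.TryGetValue(leg.Position, out var multiplier)
                || leg.Multiplier != multiplier)
            {
                return false;
            }
        }
        return true;
    }

    // Suggested shape: iterate over this instance's legs instead.
    public static bool FixedEquals(IReadOnlyList<Leg> mine, IReadOnlyList<Leg> other)
    {
        var positions = other.ToDictionary(leg => leg.Position, leg => leg.Multiplier);
        foreach (var leg in mine)
        {
            if (!positions.TryGetValue(leg.Position, out var multiplier)
                || leg.Multiplier != multiplier)
            {
                return false;
            }
        }
        return true;
    }
}
```

With two completely different leg lists, the first method still reports equality, while the second one does not.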

7th place. Tricky Equals

The seventh place goes to an error from an article about checking ScottPlot:

public class CoordinateRangeMutable : IEquatable<CoordinateRangeMutable>

{

....

public bool Equals(CoordinateRangeMutable? other)

{

if (other is null)

return false;

return Equals(Min, other.Min) && Equals(Min, other.Min); // <=

}

public override bool Equals(object? obj)

{

if (obj is null)

return false;

if (obj is CoordinateRangeMutable other)

return Equals(other);

return false;

}

public override int GetHashCode()

{

return Min.GetHashCode() ^ Max.GetHashCode(); // <=

}

}

PVS-Studio warnings:

V3192 The 'Max' property is used in the 'GetHashCode' method but is missing from the 'Equals' method. ScottPlot CoordinateRangeMutable.cs 198

V3001 There are identical sub-expressions 'Equals(Min, other.Min)' to the left and to the right of the '&&' operator. ScottPlot CoordinateRangeMutable.cs 172

The analyzer issued two warnings for this code fragment. Let's see why this happened.

Let's start with V3192. The warning says that the Max property is used in the GetHashCode method but not in the Equals method. The overridden Equals method calls the typed Equals overload, and that overload contains the following expression: Equals(Min, other.Min) && Equals(Min, other.Min). The V3001 diagnostic rule highlighted this fragment.

Clearly, one of the && operands must have the Equals(Max, other.Max) form.

So, the analyzer is right—Max doesn't appear in the Equals method.
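For completeness, a corrected version could look like the sketch below. It is a reduced, hypothetical class (RangeSketch is not a ScottPlot type, and the double property types are an assumption) in which both Equals and GetHashCode use Min and Max.

```csharp
#nullable enable
using System;

// Hypothetical reduced class: only Min, Max and the equality members.
public class RangeSketch : IEquatable<RangeSketch>
{
    public double Min { get; set; }
    public double Max { get; set; }

    public RangeSketch(double min, double max)
    {
        Min = min;
        Max = max;
    }

    // Both properties take part in the comparison.
    public bool Equals(RangeSketch? other)
    {
        if (other is null)
            return false;

        return Min.Equals(other.Min) && Max.Equals(other.Max);
    }

    public override bool Equals(object? obj) =>
        obj is RangeSketch other && Equals(other);

    // The hash is built from the same two properties that Equals compares.
    public override int GetHashCode() => HashCode.Combine(Min, Max);
}
```

Keeping the two methods in sync on the same set of members is exactly what V3192 checks for.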

6th place. Bit tricks

The first half of the list closes with another error from the article on checking ScottPlot:

public static Interactivity.Key GetKey(this Keys keys)

{

Keys keyCode = keys & ~Keys.Modifiers; // <=

Interactivity.Key key = keyCode switch

{

Keys.Alt => Interactivity.StandardKeys.Alt, // <=

Keys.Menu => Interactivity.StandardKeys.Alt,

Keys.Shift => Interactivity.StandardKeys.Shift, // <=

Keys.ShiftKey => Interactivity.StandardKeys.Shift,

Keys.LShiftKey => Interactivity.StandardKeys.Shift,

Keys.RShiftKey => Interactivity.StandardKeys.Shift,

Keys.Control => Interactivity.StandardKeys.Control, // <=

Keys.ControlKey => Interactivity.StandardKeys.Control,

Keys.Down => Interactivity.StandardKeys.Down,

Keys.Up => Interactivity.StandardKeys.Up,

Keys.Left => Interactivity.StandardKeys.Left,

Keys.Right => Interactivity.StandardKeys.Right,

_ => Interactivity.StandardKeys.Unknown,

};

....

}

PVS-Studio warning: V3202 Unreachable code detected. The 'case' value is out of range of the match expression. ScottPlot.WinForms FormsPlotExtensions.cs 106

Several of the pattern values in this switch can never match in the current context. Let's see what's going on here.

First, we should look at the values that correspond to the erroneous elements of the enumeration.

[Flags]

[TypeConverter(typeof(KeysConverter))]

[Editor(....)]

public enum Keys

{

/// <summary>

/// The bit mask to extract modifiers from a key value.

/// </summary>

Modifiers = unchecked((int)0xFFFF0000),

....

/// <summary>

/// The SHIFT modifier key.

/// </summary>

Shift = 0x00010000,

/// <summary>

/// The CTRL modifier key.

/// </summary>

Control = 0x00020000,

/// <summary>

/// The ALT modifier key.

/// </summary>

Alt = 0x00040000

}

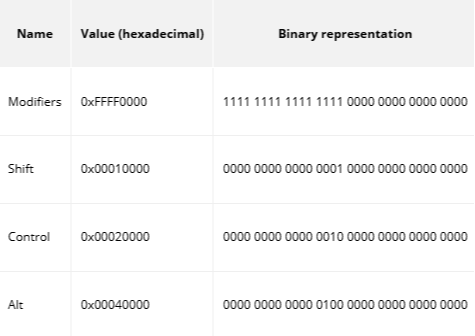

Next, let's convert them to binary:

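- Modifiers: 1111 1111 1111 1111 0000 0000 0000 0000;

- Shift: 0000 0000 0000 0001 0000 0000 0000 0000;

- Control: 0000 0000 0000 0010 0000 0000 0000 0000;

- Alt: 0000 0000 0000 0100 0000 0000 0000 0000.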

It's clear now that Modifiers covers every bit set in each of the erroneous enumeration elements.

The value passed to switch comes from the keys & ~Keys.Modifiers expression, which clears every bit of Keys.Modifiers in keys. Since the bits of Shift, Control, and Alt all lie inside Modifiers, they are cleared as well.

From this we can conclude that no value of keys can make keys & ~Keys.Modifiers equal to Shift, Control, or Alt.

The issue may lie in the switch implementation rather than the enumeration values.
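The masking can be checked without a WinForms reference. The sketch below uses a hypothetical DemoKeys enum that copies a few values from Keys; the helper names are also made up for illustration. It shows that the key-code mask drops modifier bits, and that the modifiers have to be read with the opposite mask.

```csharp
using System;

// Hypothetical local copy of a few Keys values, so no WinForms reference is needed.
[Flags]
public enum DemoKeys
{
    None = 0,
    A = 0x41,
    Shift = 0x00010000,
    Control = 0x00020000,
    Alt = 0x00040000,
    Modifiers = unchecked((int)0xFFFF0000),
}

public static class KeyMaskDemo
{
    // Same expression as in the flagged method: all modifier bits are cleared.
    public static DemoKeys KeyCodeOf(DemoKeys keys) => keys & ~DemoKeys.Modifiers;

    // The opposite mask keeps only the modifier bits.
    public static DemoKeys ModifiersOf(DemoKeys keys) => keys & DemoKeys.Modifiers;
}
```

For Shift combined with A, KeyCodeOf yields A and ModifiersOf yields Shift, so a switch over the key code can never reach a Shift, Control, or Alt arm.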

5th place. All boxed up

The top five starts with an error mentioned in an article about checking .NET 9:

struct StackValue

{

....

public override bool Equals(object obj)

{

if (Object.ReferenceEquals(this, obj))

return true;

if (!(obj is StackValue))

return false;

var value = (StackValue)obj;

return this.Kind == value.Kind

&& this.Flags == value.Flags

&& this.Type == value.Type;

}

}

PVS-Studio warning: V3161 Comparing value type variables with 'ReferenceEquals' is incorrect because 'this' will be boxed. ILImporter.StackValue.cs 164

The ReferenceEquals method takes parameters of the Object type. When a value type is passed, it gets boxed. The reference created on the heap won't match any other reference.

Since this is passed as the first argument, boxing will occur every time the Equals method is called. So, checking via ReferenceEquals always returns false.

Note that this issue doesn't affect how the method works. However, the check using the ReferenceEquals method was performed to avoid further comparisons if the references were equal. In other words, this is a kind of optimization. In reality, however, the situation is completely opposite:

- the code always executes after the check;

- each call to Equals results in a boxing operation.

It's funny that the analyzer built into .NET (rule CA2013) also detects this issue. However, the developers of .NET themselves couldn't avoid it :)

Perhaps this rule was disabled for the project, even though CA2013 is enabled by default starting with .NET 5.
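The boxing behavior is easy to verify with a tiny struct. Everything in the sketch below is hypothetical (PointValue and BoxingDemo are illustration names); only object.ReferenceEquals is the real API under discussion.

```csharp
using System;

public struct PointValue
{
    public int X;

    // Mirrors the flagged check: 'this' is boxed into a fresh object on every call.
    public bool ReferenceEqualsSelf(object obj) => object.ReferenceEquals(this, obj);
}

public static class BoxingDemo
{
    // The same struct variable is passed twice, but each argument is boxed separately.
    public static bool SameStructTwice()
    {
        var p = new PointValue { X = 1 };
        return object.ReferenceEquals(p, p);
    }

    // A single box compared with itself is reference-equal.
    public static bool SameBoxTwice()
    {
        object boxed = new PointValue { X = 1 };
        return object.ReferenceEquals(boxed, boxed);
    }

    // Even the box the value was copied from does not match a newly boxed 'this'.
    public static bool StructAgainstOwnBox()
    {
        object boxed = new PointValue { X = 1 };
        return ((PointValue)boxed).ReferenceEqualsSelf(boxed);
    }
}
```

Only SameBoxTwice returns true; the other two methods return false regardless of the field values.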

4th place. Unsubscribing

The fourth place goes to an error from an article about checking MSBuild:

private static void SubscribeImmutablePathsInitialized()

{

NotifyOnScopingReadiness?.Invoke();

FileClassifier.Shared.OnImmutablePathsInitialized -= () =>

NotifyOnScopingReadiness?.Invoke();

}

PVS-Studio warning: V3084. Anonymous function is used to unsubscribe from 'OnImmutablePathsInitialized' event. No handlers will be unsubscribed, as a separate delegate instance is created for each anonymous function declaration. CheckScopeClassifier.cs 67

Here, unsubscribing has no effect because every anonymous function declaration creates a new delegate instance. The -= operator therefore receives a delegate that was never added to OnImmutablePathsInitialized. If a handler was subscribed as delegate 1, this code tries to remove delegate 2, and the invocation list stays unchanged.
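A short sketch makes the difference visible. The Publisher class and its Ready event are hypothetical; storing the handler in a variable is a common way to make unsubscription work, not necessarily the fix the MSBuild team chose.

```csharp
using System;

public class Publisher
{
    public event Action Ready;

    public void Raise() => Ready?.Invoke();
}

public static class UnsubscribeDemo
{
    // Subscribes with one lambda and "unsubscribes" with another, textually identical one.
    public static int LambdaUnsubscribe()
    {
        int calls = 0;
        var publisher = new Publisher();
        publisher.Ready += () => calls++;
        publisher.Ready -= () => calls++; // a different delegate: nothing is removed
        publisher.Raise();
        return calls;
    }

    // Keeps the delegate in a variable, so += and -= see the same instance.
    public static int StoredHandlerUnsubscribe()
    {
        int calls = 0;
        var publisher = new Publisher();
        Action handler = () => calls++;
        publisher.Ready += handler;
        publisher.Ready -= handler;
        publisher.Raise();
        return calls;
    }
}
```

After raising the event, the first method reports one call and the second reports none.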

3rd place. Confusion over operator precedence

So, we've reached the top three. The error from an article about checking the Neo and NBitcoin projects takes the honorable third place:

public override int Size => base.Size

+ ChangeViewMessages?.Values.GetVarSize() ?? 0

+ 1 + PrepareRequestMessage?.Size ?? 0

+ PreparationHash?.Size ?? 0

+ PreparationMessages?.Values.GetVarSize() ?? 0

+ CommitMessages?.Values.GetVarSize() ?? 0;

PVS-Studio warning: V3123 [CWE-783] Perhaps the '??' operator works in a different way than it was expected. Its priority is lower than priority of other operators in its left part. RecoveryMessage.cs 35

The analyzer issued several V3123 warnings for this code, but I've included only one for brevity. The ?? operator has lower precedence than the + operator. However, the formatting of this expression suggests the developers expected the opposite.

Does the order of operations matter here? To answer the question, let's look at the first sub-expression when ChangeViewMessages is null:

base.Size + ChangeViewMessages?.Values.GetVarSize() ?? 0

Regardless of the base.Size value, the sub-expression result is always 0 because adding base.Size to null results in null.

If we place ChangeViewMessages?.Values.GetVarSize() ?? 0 in parentheses, changing the operation order, the result becomes base.Size.
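The same effect can be reproduced with plain integers. In this sketch, baseSize and optional are hypothetical stand-ins for base.Size and the nullable result of the ?. chain.

```csharp
public static class CoalesceDemo
{
    // Parsed as (baseSize + optional) ?? 0, just like the flagged expression.
    public static int WithoutParentheses(int baseSize, int? optional) =>
        baseSize + optional ?? 0;

    // Parentheses apply ?? to the nullable operand only.
    public static int WithParentheses(int baseSize, int? optional) =>
        baseSize + (optional ?? 0);
}
```

With baseSize equal to 10 and optional equal to null, the first method returns 0 and the second returns 10. When optional has a value, both return the same sum, which is why such a bug can go unnoticed.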

2nd place. The treacherous pattern

The second place goes to an error from an article about checking the Files file manager:

protected void ChangeMode(OmnibarMode? oldMode, OmnibarMode newMode)

{

....

var modeSeparatorWidth =

itemCount is not 0 or 1

? _modesHostGrid.Children[1] is FrameworkElement frameworkElement

? frameworkElement.ActualWidth

: 0

: 0;

....

}

PVS-Studio warning: V3207 [CWE-670] The 'not 0 or 1' logical pattern may not work as expected. The 'not' pattern is matched only to the first expression from the 'or' pattern. Files.App.Controls Omnibar.cs 149

Let's look closer at the itemCount is not 0 or 1 part. Have you already guessed what the issue is? This pattern is redundant. Its second part affects nothing.

When saying "x is not 0 or 1", people usually imply that x is neither 0 nor 1. However, in C#, operator precedence works differently—x is not 0 or 1 actually means x is (not 0) or 1. Such mistakes can lead not only to redundancy but also to errors like NullReferenceException: list is not null or list.Count == 0. This issue was even discussed in a meeting that explored potential solutions. Based on the discussion, either the compiler will likely catch this issue in the future, or the built-in static analysis will flag it as a warning.
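The two readings of the pattern differ only for the value 1, which the sketch below demonstrates. The class and method names are hypothetical, and recent compiler versions may report a redundancy warning for the first method.

```csharp
public static class PatternDemo
{
    // As written in the flagged code: parsed as (not 0) or 1.
    public static bool AsWritten(int itemCount) => itemCount is not 0 or 1;

    // As probably intended: neither 0 nor 1.
    public static bool AsIntended(int itemCount) => itemCount is not (0 or 1);
}
```

For 0 both methods return false and for 2 both return true, but for 1 the first returns true while the second returns false.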

1st place. How does LINQ work?

And the winner is an error from an article about checking the Lean trading engine. This error ranks first due to its subtlety. Some developers may not consider the effects of using deferred execution methods in combination with captured variables. All the details are below:

public void FutureMarginModel_MarginEntriesValid(string market)

{

....

var lineNumber = 0;

var errorMessageTemplate = $"Error encountered in file " +

$"{marginFile.Name} on line ";

var csv = File.ReadLines(marginFile.FullName)

.Where(x => !x.StartsWithInvariant("#")

&& !string.IsNullOrWhiteSpace(x))

.Skip(1)

.Select(x =>

{

lineNumber++; // <=

....

});

lineNumber = 0; // <=

foreach (var line in csv)

{

lineNumber++; // <=

....

}

}

PVS-Studio warning: V3219 The 'lineNumber' variable was changed after it was captured in a LINQ method with deferred execution. The original value will not be used when the method is executed. FutureMarginBuyingPowerModelTests.cs 720

The lineNumber variable is captured and incremented in the delegate that is passed to the LINQ method. Since Select is a deferred method, the delegate code runs while iterating over the resulting collection, not when Select gets called.

The lineNumber variable is also incremented while iterating over the csv collection. As a result, each iteration increases lineNumber by 2: once when the delegate runs and once inside the foreach body. That looks odd.

Note the lineNumber = 0 assignment before foreach. It's likely that developers expected that this variable could hold a non-zero value before the loop. However, that is impossible: lineNumber starts at zero, and the only place that changes it before the foreach sits in the delegate. As mentioned above, the delegate runs during iteration, not before it. Apparently, the developers expected the delegate to execute prior to entering the loop.
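The double increment can be reproduced with any sequence of strings. The sketch below is a reduced illustration with hypothetical names; materializing the query with ToList is just one way to make the reset meaningful and is not the project's actual fix.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class DeferredDemo
{
    // Same shape as the flagged test: the Select delegate runs lazily inside the foreach.
    public static int CountWithSharedCounter(IEnumerable<string> lines)
    {
        var lineNumber = 0;
        var parsed = lines.Select(x =>
        {
            lineNumber++; // executed once per element during the loop below
            return x.Trim();
        });

        lineNumber = 0; // no effect: the delegate has not run yet

        foreach (var line in parsed)
        {
            lineNumber++;
        }

        return lineNumber;
    }

    // ToList forces the delegate to run before the reset, so the loop counts once per line.
    public static int CountWithMaterializedQuery(IEnumerable<string> lines)
    {
        var lineNumber = 0;
        var parsed = lines.Select(x =>
        {
            lineNumber++;
            return x.Trim();
        }).ToList();

        lineNumber = 0; // now resets the increments made by the delegate

        foreach (var line in parsed)
        {
            lineNumber++;
        }

        return lineNumber;
    }
}
```

For three input lines, the first method returns 6 and the second returns 3.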

Conclusion

That's it! We've gone through the most interesting warnings from the author's point of view :)

I hope you found this collection interesting and thought-provoking in terms of code development.

If you'd like to check whether your project has similar issues, now's the time to use a static analyzer. Here's the download link.

Steve Martin: Making Scrum Master Success Visible with OKRs That Actually Work

Read the full Show Notes and search through the world's largest audio library on Agile and Scrum directly on the Scrum Master Toolbox Podcast website: http://bit.ly/SMTP_ShowNotes.

"It is not the retrospective that is the success of the retrospective. It is the ownership and accountability where you take improvements after the session." - Steve Martin

The biggest problem for Scrum Masters isn't just defining success—it's being able to shout it from the rooftops with tangible evidence. Steve champions OKRs as an amazing way to define and measure success, but with a critical caveat: they've historically been poorly written and implemented in dark rooms by executives, then cascaded down to teams who never bought in. Steve's approach is radically different. Create OKRs collectively with the team, stakeholders, and end users. Start by focusing on the pain—what problems or pain points do customers, users, and stakeholders actually experience? Make the objective the goal to solve that problem, then define how to measure progress with key results. When everyone is bought in—Scrum Master, engineers, Product Owner, stakeholders, leaders—all pulling in the same direction, magic happens. Make progress visible on the wall like a speedometer, showing exactly where you are at any moment. For an e-commerce checkout, the problem might be too many steps. The objective: reduce pain for users checking out quickly. The baseline: 15 steps today. The target: 5 clicks in three months. Everyone can see the dial moving. Everything should focus on the customer as the endpoint. The challenge is distinguishing between targets imposed from above ("increase sales by 10%") and objectives created collaboratively based on factors the team can actually control. Find what you can control first, work with customers to understand their pain, and start from there.

Self-reflection Question: Can you articulate your team's success with specific, measurable outcomes that everyone—from developers to executives—understands and owns?

Featured Retrospective Format for the Week: Post-Retro Actions and Ownership

The success of a retrospective isn't the retrospective itself—it's what happens after. Steve emphasizes that ownership and accountability matter more than the format of the session. Take improvements from the retrospective and bring them into the sprint as user stories with clear structure: this is the problem, how we'll solve it, and how we'll measure impact. Assign collective ownership—not just a single person, but the whole team owns the improvement. Then bring improvements into the demo so the team showcases what changed. This creates cultural transformation: the team themselves want to bring improvements, not just because the Scrum Master pushed them. For ongoing impediments, conduct root cause analysis. Create a system to escalate issues beyond the team's control—make these visible on another board or with the leadership team. Find peers in pain: teams with the same problems can work together collectively. The retrospective format matters less than this system of ownership, action, measurement, and visibility. Stop retrospective theatre—going through the motions without taking action. Make improvements real by treating them like any other work: visible, measured, owned, and demonstrated.

[The Scrum Master Toolbox Podcast Recommends]

🔥In the ruthless world of fintech, success isn't just about innovation—it's about coaching!🔥

Angela thought she was just there to coach a team. But now, she's caught in the middle of a corporate espionage drama that could make or break the future of digital banking. Can she help the team regain their mojo and outwit their rivals, or will the competition crush their ambitions? As alliances shift and the pressure builds, one thing becomes clear: this isn't just about the product—it's about the people.

🚨 Will Angela's coaching be enough? Find out in Shift: From Product to People—the gripping story of high-stakes innovation and corporate intrigue.

[The Scrum Master Toolbox Podcast Recommends]

About Steve Martin

You can link with Steve Martin on LinkedIn.

Steve is an Agile Coach, mentor, and founder of The Agile Master Academy. After over 14 years leading Agile transformation programmes, he's on a mission to elevate Scrum Masters—building high-performing teams, measurable impact, and influence—and raising industry standards of Agile mastery through practical, evidence-led coaching. You can also find Steve's insights on his YouTube channel: Agile Mastery Show.

Download audio: https://traffic.libsyn.com/secure/scrummastertoolbox/20260101_Steve_Martin_Thu.mp3?dest-id=246429

1147. In this bonus segment that originally ran in October, we look at the fascinating history of the "new letters" of the alphabet — V, W, X, Y, and Z. Danny Bate explains why T was the original end of the alphabet and how letters were added by the Greeks and Romans. We also look at the origin of the letter Y, which was originally a vowel, and the two historical reasons we call the final letter “zee” or “zed.”

Find Danny Bate on his website, Bluesky or on X.

Get the book, "Why Q Needs U."

Listen to Danny's podcast, "A Language I Love Is..."

Links to Get One Month Free of the Grammar Girl Patreon (different links for different levels)

- Order of the Snail ($1/month level): https://www.patreon.com/grammargirl/redeem/687E4

- Order of the Aardvark ($5/month level): https://www.patreon.com/grammargirl/redeem/07205

- Keeper of the Commas ($10/month level): https://www.patreon.com/grammargirl/redeem/50A0B

- Guardian of the Grammary ($25/month level): https://www.patreon.com/grammargirl/redeem/949F7

🔗 Share your familect recording in Speakpipe or by leaving a voicemail at 833-214-GIRL (833-214-4475)

🔗 Watch my LinkedIn Learning writing courses.

🔗 Subscribe to the newsletter.

🔗 Take our advertising survey.

🔗 Get the edited transcript.

🔗 Get Grammar Girl books.

🔗 Join Grammarpalooza. Get ad-free and bonus episodes at Apple Podcasts or Subtext. Learn more about the difference.

| HOST: Mignon Fogarty

| Grammar Girl is part of the Quick and Dirty Tips podcast network.

- Audio Engineer: Dan Feierabend

- Director of Podcast: Holly Hutchings

- Advertising Operations Specialist: Morgan Christianson

- Marketing and Video: Nat Hoopes, Rebekah Sebastian

| Theme music by Catherine Rannus.

| Grammar Girl Social Media: YouTube. TikTok. Facebook. Threads. Instagram. LinkedIn. Mastodon. Bluesky.

Hosted by Simplecast, an AdsWizz company. See pcm.adswizz.com for information about our collection and use of personal data for advertising.

Download audio: https://dts.podtrac.com/redirect.mp3/media.blubrry.com/grammargirl/stitcher.simplecastaudio.com/e7b2fc84-d82d-4b4d-980c-6414facd80c3/episodes/ef9aa383-0db9-4ae5-8524-8acaf27c3694/audio/128/default.mp3?aid=rss_feed&awCollectionId=e7b2fc84-d82d-4b4d-980c-6414facd80c3&awEpisodeId=ef9aa383-0db9-4ae5-8524-8acaf27c3694&feed=XcH2p3Ah

Observability is not living up to expectations. As prices continue to rise, the pain is increasingly felt.

This year brings a foreboding sense that infrastructure will become even more complex to manage at scale. In reaction to skyrocketing public cloud pricing, many organizations have shifted toward private cloud, often resulting in even more complexity.

At scale, organizations now increasingly face multi-cloud and heterogeneous environments spanning public cloud, private cloud and on-premises systems. In many cases, the cost buildup is seen as prohibitive.

At the same time, observability remains a necessity for organizations both large and small. In 2025, observability players and suppliers reflected a shift in how they help customers use their solutions, particularly by helping them avoid surprise observability bills of $130,000 per month or more. (Stay tuned about how this happens, since observability metrics generally scale much more than compute does.)

Up to 84% of current observability users struggle with the costs and complexity of their daily monitoring responsibilities, Gartner analysts Pankaj Prasad and Matt Crossley wrote in their annual hype cycle report on observability and monitoring, published in July. Still, they concluded, observability is uniquely positioned to address these technology challenges.

“The core issue is that the economics of observability have been upside-down for years. Costs grow linearly with telemetry volume, but the value doesn’t,” Tom Wilkie, CTO at Grafana Labs, told me. “What we need, and what we’re now finally seeing, is a model where cost scales with insight, not ingestion.”

Rethinking the ‘Big Data Lake’ Model

In 2025, the observability market was often seen as top-heavy and centralized, creating high levels of frustration and cost issues for customers while the value seemed to be declining.

Large vendors charge for indexing and retention by throwing everything into a big system or data lake, Bob Quillin, founder and CEO of ControlTheory, told me. A more contrarian model, he said, involves putting more intelligence at the edge and using a feedback system.

“By distilling data at the edge, companies only send up what the AI tools need, allowing the system to run next to existing tools instead of trying to sit on top of them,” Quillin said. “The way the market has developed over the last 20 years has been to throw everything in a big system and users pay for indexing, retention, all that. But now that we have more intelligent systems, you don’t need to put all the data up there.”

Indeed, many platforms still encourage teams to collect everything while providing limited or no transparency into which telemetry data is actually valuable.

Bill Hineline, field CTO for Chronosphere, told me: “The resulting surprise bills and increased operational overhead required to manage them have created the impression that observability itself was a bad investment, when in reality the investment was poorly governed.”

How AI (Plus Context) Can Help

AI, of course, came into play in 2025, forcing organizations and platform engineering teams to choose how to integrate it. At the very least, and especially in the tools I’ve tested, such as Grafana’s AI, it does no harm. In fact, it can help if used properly.

AI can lend particularly good support for interpreting log data. Logs are essentially a language, and “if you throw the right information into a large language model,” Quillin said, it can help explain what happened.

Users should be able to “throw a log into their favorite LLM via back-end APIs,” Quillin said. “This helps bubble up patterns, such as repeated critical metrics, and assists in pattern analysis and heat charts to separate the signal from the noise.

“As more code is created by AI, the complexity of logs is not getting simpler, necessitating a step back to look at the fundamentals of how data is analyzed. Since logs are language and if you throw the right information into an LLM, it can actually help explain what happened with the log – it looks across all your logs and bubbles that up and looks at a lot of pattern analysis.”

In many ways, Wilkie said, AI further “inflated and mis-set expectations” in 2025.

“AI can be truly helpful, but only when it’s grounded in context rather than treated as a magic layer sprinkled on top of observability,” he said. “The mistake many vendors make is assuming AI adds value simply because it exists, when in practice, AI only works if the underlying telemetry is high-quality, well-structured and connected across systems.”

The real opportunity is what was observed in 2025, Wilkie said: “AI that turns observability from a specialist-only discipline into something every engineer — and even non-engineers — can use.”

“And importantly, instead of burying teams under more data, AI helps them extract more value from the data they already have,” he said. “AI won’t replace operators in 2026, but it will finally help them keep up with the complexity curve.”

AI applied superficially can feel like a productivity boost without delivering real operational clarity, David Jones, vice president of NORAM solution engineering at Dynatrace, told me.

“Many tools layer generative AI on top of raw telemetry, which may assist with querying but doesn’t fundamentally improve understanding,” Jones said. “That’s where confusion creeps in: AI without context amplifies uncertainty rather than reducing it.”

OpenTelemetry: Poised to Make a Big Impact

With the added complexity at every level, and with environments becoming harder to manage through observability, the barrier to entry remains high. Teams must understand how to make use of logs, metrics and traces, which I would argue are not so-called pillars of observability but essential, intertwined components that, along with other metrics, must be used together.

This is where OpenTelemetry (OTel) made huge strides in 2025, becoming more accessible not only across different programming languages, but also across traces, metrics and now logs. Thanks to standardization, it has significantly lowered the entry threshold, which is why we are seeing a number of new and growing observability players offering their solutions.

Modern systems are becoming harder to operate, and that rising complexity is a big reason the barrier to entry has stayed high.

“OpenTelemetry has genuinely helped here: by standardizing instrumentation across languages and signal types, it’s removed a tremendous amount of friction,” Wilkie said. “Teams no longer have to piece together vendor-specific SDKs, and new observability players can enter the market more easily because instrumentation is no longer the moat.”

OpenTelemetry helps by giving organizations vendor-neutrality, Wilkie said, allowing orgs to switch more easily to vendors that actually listen to and act on their customers’ cost and value concerns.

Indeed, OpenTelemetry helps “break the old dependence on proprietary agents and opaque pricing,” he said. “It’s when you pair OTel with intelligent systems — which can reduce data by 80 to 90% while increasing the value of what remains — you start to flip the equation so that cost scales with value, not telemetry volume. Observability hasn’t failed; the business model did.”

However, standards are only useful if they gain wide adoption, while legacy systems “stick around in organizations for decades,” Wilkie said.

“The cost of re-instrumentation is prohibitive,” he said. “OTel is making greenfield work easier, but customers still need to weave together new and old systems into a coherent picture – and need observability systems that don’t lock customers into one way of doing things.”

My bet is that OpenTelemetry will play a significant role if its user experience can be improved. The complexity of the tools themselves also needs to be reduced so that observability is usable by more than just an organization’s star in-house observability expert.

Vendors are beginning to wake up to the fact that non-technical users should be able to make use of observability and telemetry data as well — but we are not there yet. Maybe, one day, AI agents will monitor observability metrics and troubleshoot and predict future failures with no input from humans. But that day is not going to come in 2026.

Closing the Usability Gap

In the meantime, non-technical stakeholders must also invest time and effort in learning to interpret telemetry data, no matter how simple observability tools become.

“OpenTelemetry is foundationally changing the potential for the future by democratizing how observability is done. It has become a de facto standard that lowers the barrier to entry, as organizations are now standardizing on it rather than just considering it,” Quillin said. “Using an open source tool built on these standards allows for a less commercial, more authoritative conversation about the broader problems in the industry.”

Wilkie echoed the notion that OpenTelemetry solves one part of the problem: standardizing and generating telemetry.

“But the usability gap, especially for non-experts, is still there,” he said. “Instrumentation today still feels too much like a tax: too many configuration knobs, too many semantic conventions to remember, too many edge cases when services behave in surprising ways. Standards solve interoperability, not usability. So yes, OTel will play a bigger role, but only if it becomes simpler.”

OpenTelemetry may not ultimately “save” observability, but it should go a long way by improving standardization for instrumentation and interoperability between different tools. It should also serve as a springboard for providers: by worrying less about integration, they can develop solutions that deliver what observability can and should do, not only for the in-house expert but for all stakeholders.

“When OpenTelemetry is treated as foundational plumbing across the enterprise, observability platforms can deliver more consistent, out-of-the-box insights without relying on heavily customized dashboards,” Hineline said. “Today, many dashboards are still built by experts for experts, which leads to dashboard sprawl and limits who can effectively use observability. When combined with a strong strategy, OpenTelemetry can materially improve usability by enabling more consistent, reusable views across teams.”

The post Can OpenTelemetry Save Observability in 2026? appeared first on The New Stack.

Aurelia 2 brings faster builds, modern tooling, and clearer composition patterns, but many teams still run production workloads on Aurelia 1. If your app has outlived three laptops, you are in good company. This guide is a practical roadmap you can revisit whenever your team is ready to upgrade. It mixes planning steps with technical checkpoints so you can move steadily without losing momentum.

Migration at a glance

- Discover: inventory routes, global resources, plugins, bundlers, and UX-critical flows.

- Decide: pick a migration strategy (strangler shell, vertical slice, or in-place upgrade).

- Prepare: scaffold Aurelia 2 with modern tooling, shared design tokens, and DI modules.

- Port: move features in thin slices, paying attention to routing, composition, and state.

- Validate: keep automated tests, visual diffs, and build checks green for both versions.

- Decommission: remove Aurelia 1 dependencies after traffic fully shifts to Aurelia 2.

Keep this loop visible in your project tracker so stakeholders always know the current phase.
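If you pick the strangler shell strategy, one way to keep the Port and Decommission phases honest is to record which app owns each route. The sketch below is only an illustration under stated assumptions: `RouteOwnership`, `resolveOwner`, `readyToDecommission`, and the sample paths are hypothetical names invented here, not part of the Aurelia 1 or Aurelia 2 APIs.

```typescript
// Hypothetical sketch: none of these names come from Aurelia 1 or Aurelia 2.
type AppVersion = "aurelia1" | "aurelia2";

interface RouteOwnership {
  prefix: string;    // URL path prefix, e.g. "/orders"
  owner: AppVersion; // which app currently serves this prefix
}

// Inventory produced during the Discover phase (example data only).
const routeTable: RouteOwnership[] = [
  { prefix: "/orders", owner: "aurelia2" },
  { prefix: "/orders/legacy-export", owner: "aurelia1" },
  { prefix: "/settings", owner: "aurelia1" },
];

// Pick the owner of the longest matching prefix.
// Paths that are not in the inventory stay on Aurelia 1.
function resolveOwner(path: string, table: RouteOwnership[]): AppVersion {
  let best: RouteOwnership | undefined;
  for (const entry of table) {
    const matches =
      path === entry.prefix || path.startsWith(entry.prefix + "/");
    if (matches && (!best || entry.prefix.length > best.prefix.length)) {
      best = entry;
    }
  }
  return best ? best.owner : "aurelia1";
}

// Decommissioning is only safe once every inventoried prefix has moved.
function readyToDecommission(table: RouteOwnership[]): boolean {
  return table.every((entry) => entry.owner === "aurelia2");
}
```

Defaulting unknown paths to Aurelia 1 is a deliberately conservative choice in this sketch: a route that was missed during discovery keeps working in the old app instead of breaking in the new one.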