Static analysis in Flutter (and Dart) is the process of examining code for errors and style issues without running the app. It allows you to catch bugs and enforce best practices early, before executing a single line of code.

Adopting static analysis represents a fundamental "shift left" in the software development lifecycle (SDLC). This philosophy advocates for moving quality assurance and testing activities to the earliest possible stages of development.

Linting is a subset of static analysis that focuses on style and best-practice rules. Flutter’s analysis tool (often called the Dart/Flutter analyzer) uses a set of rules (called lints) to ensure your code follows the Dart style guide and Effective Dart recommendations.

This guide will walk you through setting up and mastering Flutter's linting and static analysis capabilities, from the basics to advanced configurations.

The Anatomy of Flutter Static Analysis

Let's start by exploring different parts of static analysis in Flutter.

The Core Engine: The Dart Analyzer

Everything starts with the Dart Analyzer, the engine that analyzes your code. It’s not just a command-line tool; it’s the backbone behind the instant feedback you see in your IDE. When you mistype a variable or mix incompatible types, it’s the analyzer that notices.

It does this by turning your source code into an Abstract Syntax Tree (AST), a structured model of your code. From there, it scans and validates everything against a defined set of rules. This tight connection between the analyzer, your IDE, and your project setup creates an immediate feedback loop where that familiar wavy underline appears the moment something's off.
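As a small illustration of the mistakes described above, the following sketch shows two lines the analyzer would reject, kept as comments so the file still runs. The variable names are made up for this example; the names in backticks are the analyzer's diagnostic codes.

```dart
void main() {
  final itemCount = 3;

  // A mistyped variable name is reported as `undefined_identifier`:
  //   print(itemCout);

  // Mixing incompatible types is reported as `invalid_assignment`:
  //   int total = 'three';

  final total = itemCount * 2;
  print(total); // prints 6
}
```

Uncommenting either line in an IDE should make the underline appear without running anything.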

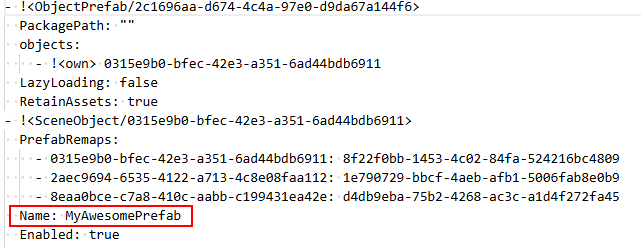

The Rulebook: analysis_options.yaml

The behavior of the analyzer is governed by one file: analysis_options.yaml. Sitting at the root of your Flutter project, it tells the analyzer what to enforce and what to ignore. You can enable or disable rules, adjust their severity, and even exclude certain files or directories.

In teams, this file becomes the single source of truth for maintaining consistent code quality and style across all contributors.

The Official Starting Point: flutter_lints

Beyond language-level checks, Flutter also encourages best practices through linter rules. The easiest way to get started is with the flutter_lints package, Google’s officially recommended rule set for Flutter apps.

Built on top of the general Dart lints, it gives you a strong foundation that matches the Dart Style Guide and Effective Dart recommendations. Instead of debating style or rule configurations, you can start coding with confidence, knowing your project already follows community standards for readability and maintainability.

Going Beyond Defaults to Build Incredible Applications

While flutter_lints provides an excellent foundation, production-grade applications and growing teams quickly hit its limits. You need more than just style checking; you need to enforce architecture, manage code complexity, and ensure long-term maintainability. This is where DCM (dcm.dev) comes in: a complete static analysis tool designed specifically for Dart and Flutter.

DCM is a productivity and efficiency tool. Instead of spending weeks developing rules or searching for rules that probably don't exist, you can plug DCM in and gain access to:

-

450+ Pre-Built Rules in Addition to Flutter Lint Rules: A massive, well-tested library of rules covering performance, style, leaks, and best practices for both Dart and Flutter. Many of the rules comes with auto-fix that can save hours of effort!

-

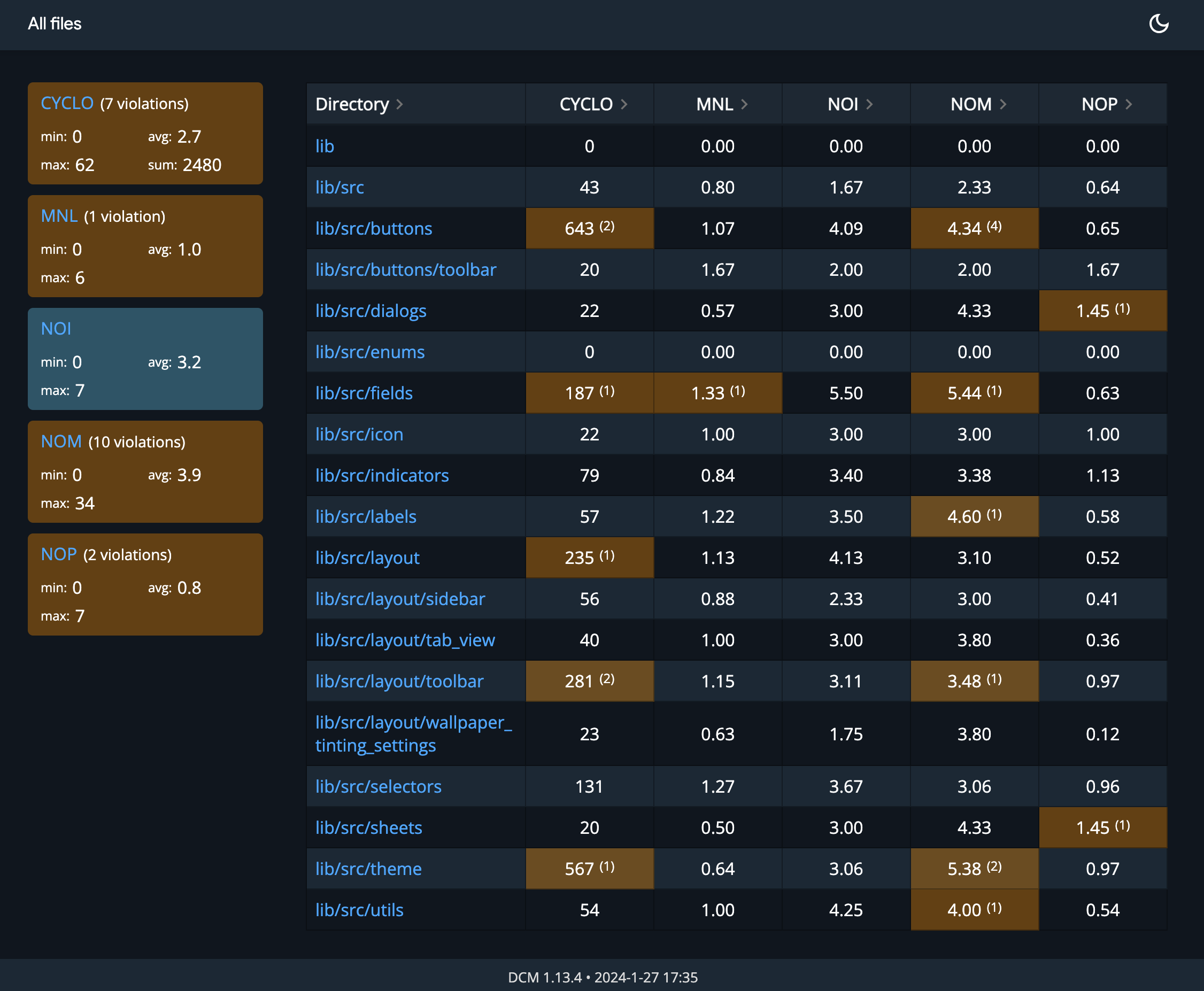

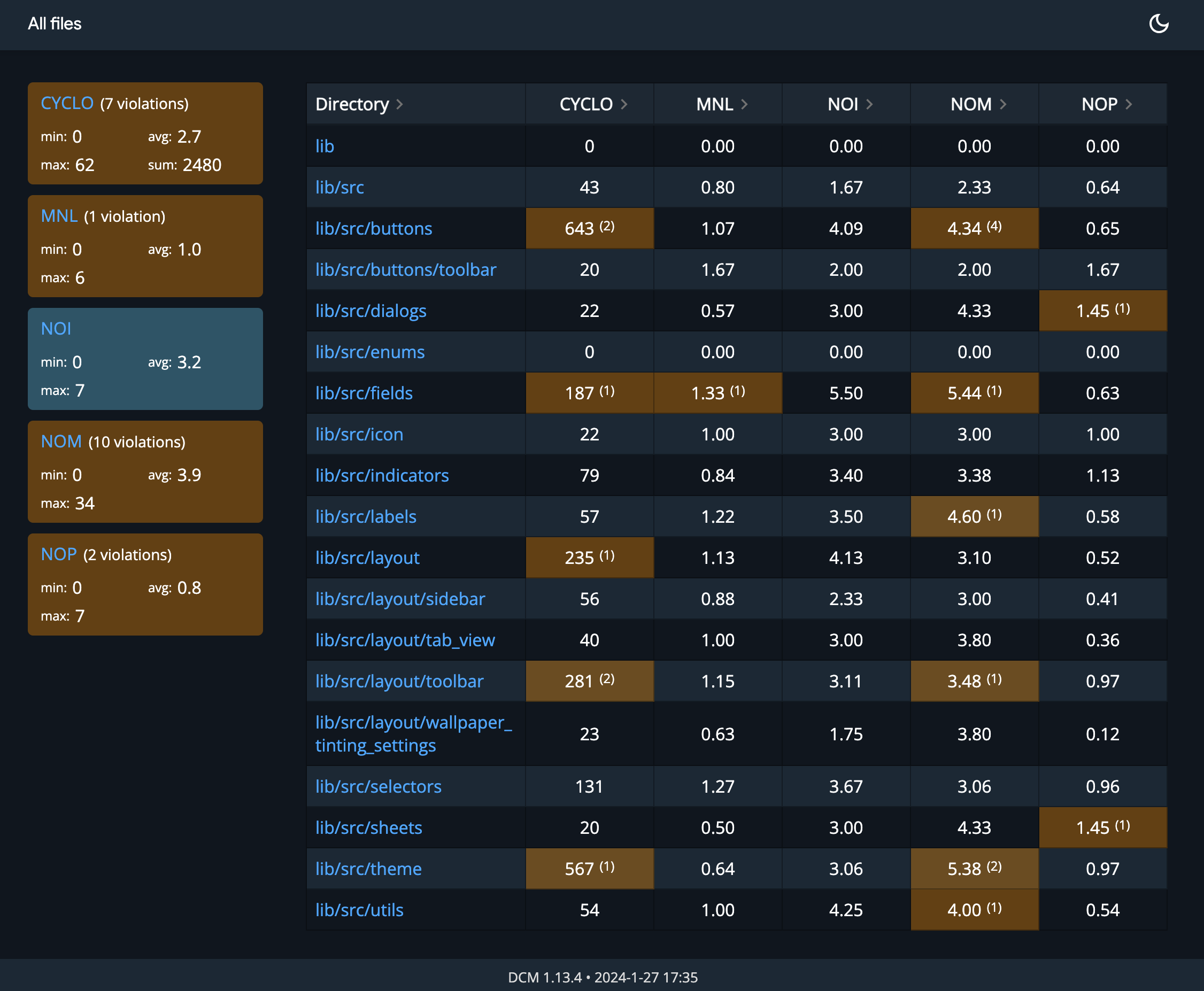

Advanced Code Metrics: Go beyond simple lints with metrics like Cyclomatic Complexity, Lines of Code, and others to objectively measure code health and identify areas for refactoring.

-

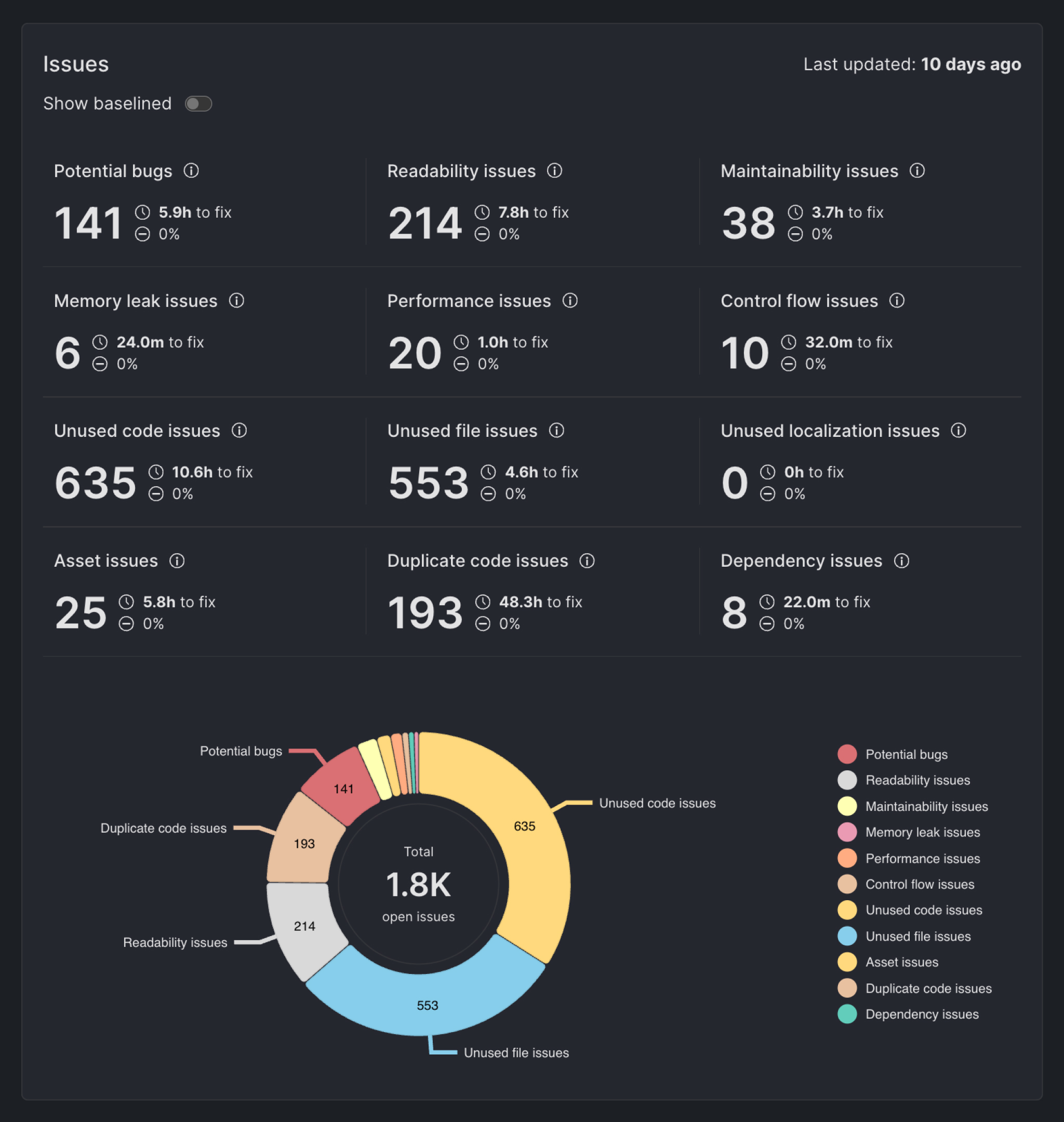

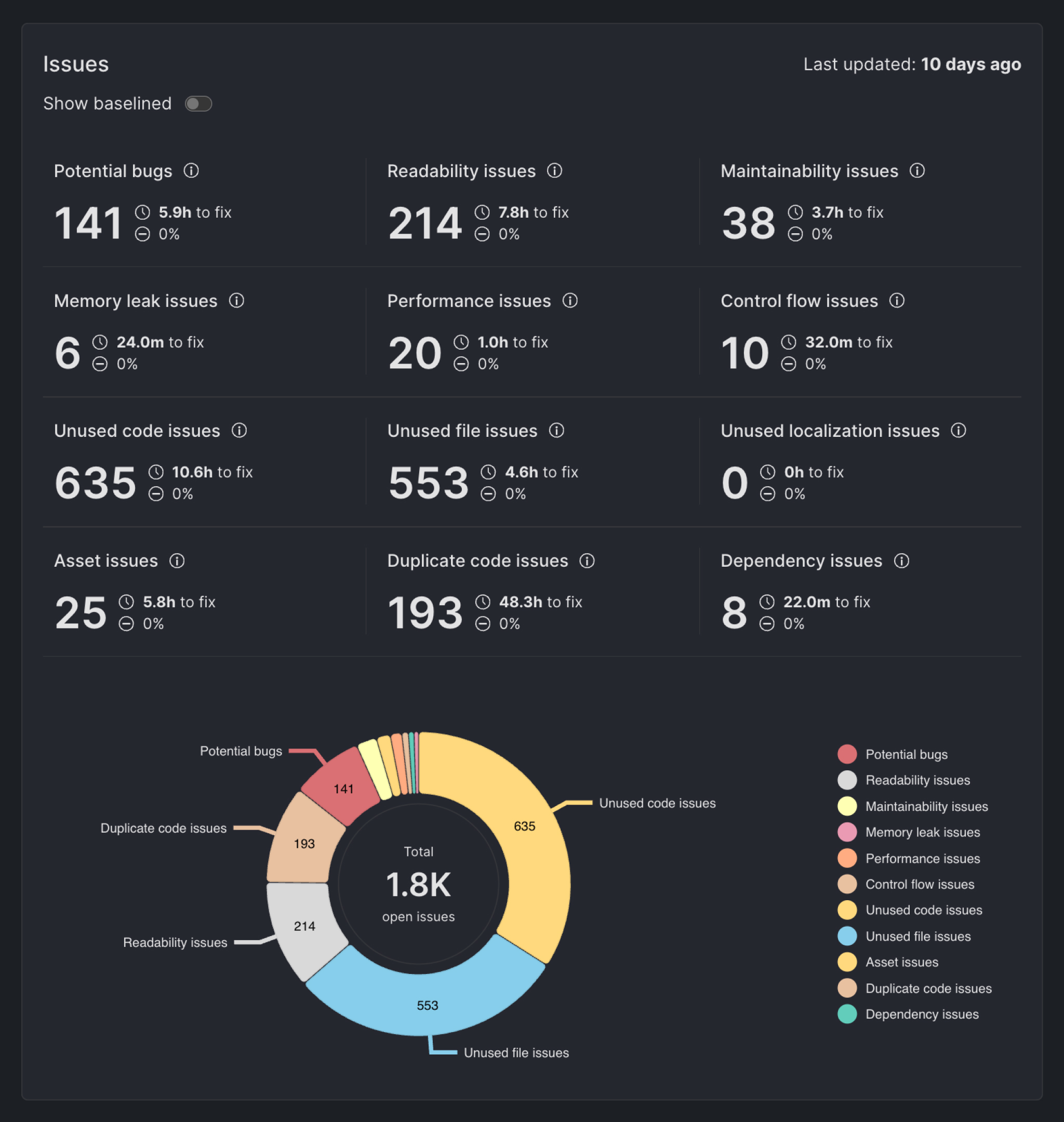

Monitor Your Code Quality Trends: With DCM Dashboard, quickly access the latest state of all open issues across all your projects with your organization and observe changes over time to easily spot unexpected changes and act quickly to resolve them.

- More Features: That's not all, DCM has loads of other features including assets quality check, health commands including finding unused code and files, integrating with AI assisted tooling to ensure high quality code generation via MCP server and more to explore and more to come!

In short, dcm.dev allows your team to stand on the shoulders of static analysis experts, letting you focus your valuable time and energy on what matters most: building incredible applications.

DCM is a code quality tool that helps your team move faster by reducing the time spent on code reviews, finding tricky bugs, identifying complex code, and unifying code style.

Setting Up Flutter Static Analysis (Flutter lint rules)

Setting up static analysis (linting) in Flutter is straightforward, especially with the official lint rule sets provided by Dart and Flutter. Recent versions of Flutter come with a default set of lint rules out-of-the-box. If you created your project with Flutter 2.5 (stable) or newer, you likely already have static analysis enabled by default.

You can verify this by checking your pubspec.yaml for flutter_lints:

pubspec.yaml

dev_dependencies:
  flutter_test:
    sdk: flutter
  flutter_lints: ^6.0.0

and looking for an analysis_options.yaml file in the project root (next to pubspec.yaml).

...
├── android
├── assets
├── build
├── ios
├── lib
├── linux
├── macos
├── pubspec.lock
├── pubspec.yaml
├── analysis_options.yaml
├── test
├── web
└── windows

If your project is older or missing these, follow these steps to enable the latest Flutter lints:

- In your project root, run the command:

flutter pub add --dev flutter_lints

- Create a file named analysis_options.yaml at the root of your project (in the same directory as your pubspec.yaml). In this file, include the recommended Flutter lint rules by adding a single line:

include: package:flutter_lints/flutter.yaml

- After adding the file, run flutter pub get (if you added the dependency manually) to ensure the package is installed. The analyzer will automatically start using these rules in your IDE. You can also run the analyzer manually (see next section) to verify everything is set up correctly.

Below is an example analysis_options.yaml content for a Flutter project. This is similar to what Flutter’s template provides by default.

analysis_options.yaml

include: package:flutter_lints/flutter.yaml

linter:
  rules:

Running the Analyzer and Interpreting Results

With the setup complete, the analysis can be run in two primary ways, both of which yield the same results.

From the Command Line

To perform a static analysis of the entire project from the terminal, run the following command from the project's root directory:

flutter analyze

This command invokes the Dart analyzer, which will scan all Dart files in the project according to the rules defined in analysis_options.yaml.

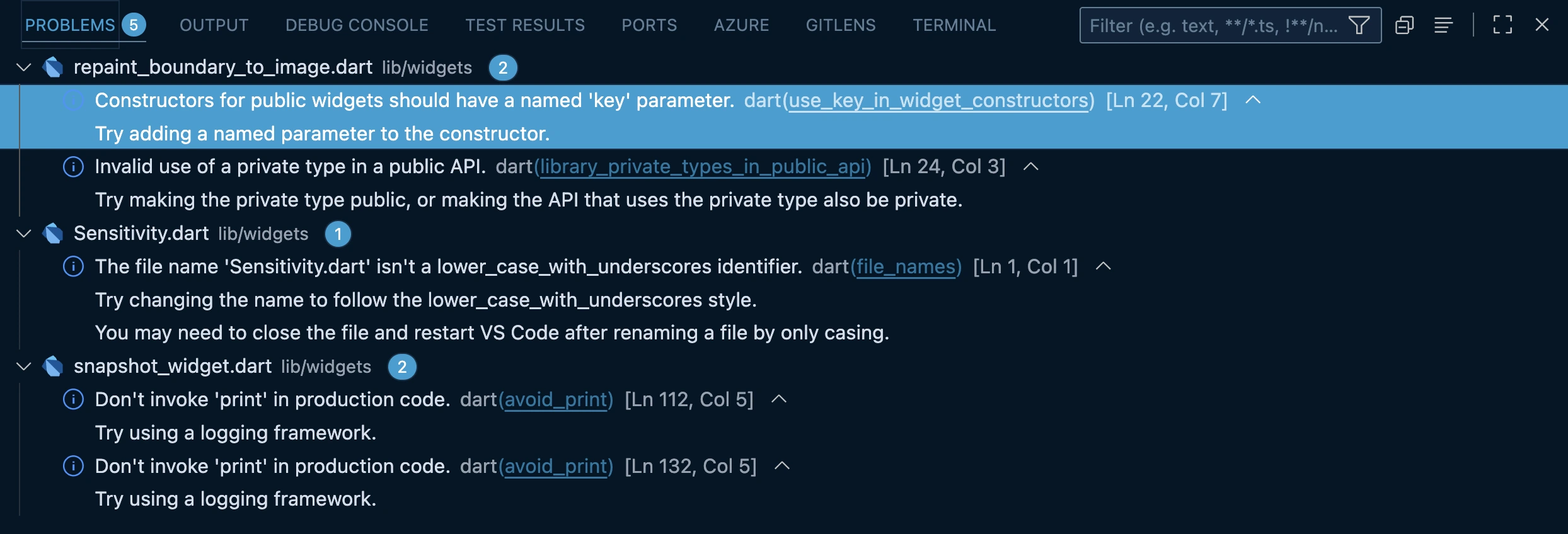

For example, if you had a stray semicolon after an if statement, the analyzer might output a warning like this:

Analyzing flutter_test_cases...

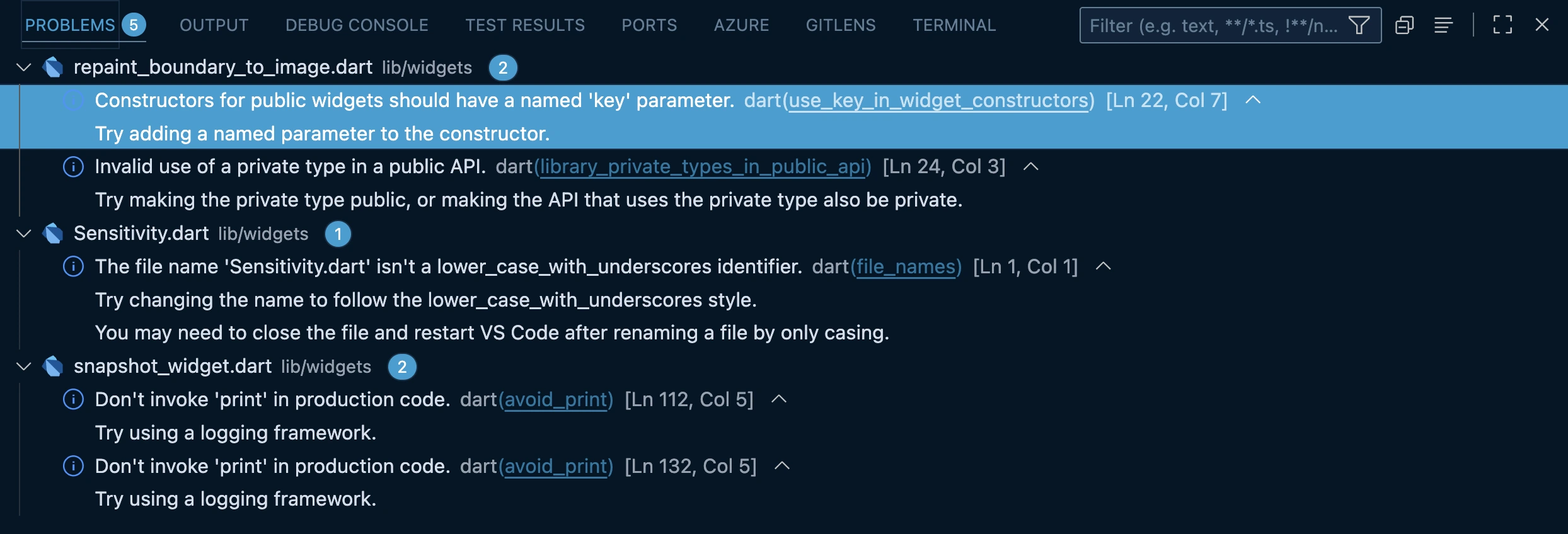

info • Constructors for public widgets should have a named 'key' parameter •

lib/widgets/repaint_boundary_to_image.dart:22:7 • use_key_in_widget_constructors

info • Invalid use of a private type in a public API • lib/widgets/repaint_boundary_to_image.dart:24:3

• library_private_types_in_public_api

info • Don't invoke 'print' in production code • lib/widgets/snapshot_widget.dart:112:5 • avoid_print

info • Don't invoke 'print' in production code • lib/widgets/snapshot_widget.dart:132:5 • avoid_print

4 issues found. (ran in 1.5s)

In this example, it flags an unnecessary semicolon and cites the empty_statements rule. Similarly, if you use a print statement and the avoid_print lint is enabled, you would see a message warning:

info • Don't invoke 'print' in production code • lib/widgets/snapshot_widget.dart:132:5 • avoid_print

Each issue includes the file, line number, and the name of the lint rule (so you can look up details if needed).
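Because every issue line follows the same "severity • message • file:line:column • rule" shape, it is easy to post-process. The sketch below is one possible way to split such a line into its parts, for example to group issues by rule in a custom report. AnalyzerIssue and parseIssue are hypothetical names invented for this example, not part of Flutter or the Dart SDK, and the parser assumes relative paths without extra colons.

```dart
/// A single issue parsed from one line of analyzer output.
/// (Hypothetical helper type, not part of any Flutter or Dart API.)
class AnalyzerIssue {
  const AnalyzerIssue({
    required this.severity,
    required this.message,
    required this.file,
    required this.line,
    required this.column,
    required this.rule,
  });

  final String severity;
  final String message;
  final String file;
  final int line;
  final int column;
  final String rule;
}

/// Parses a line shaped like `severity • message • file:line:column • rule`.
/// Returns null for lines that do not match, such as the summary line.
AnalyzerIssue? parseIssue(String outputLine) {
  final parts = outputLine.split('•').map((part) => part.trim()).toList();
  if (parts.length != 4) return null;

  // Assumes a relative path with no colons of its own.
  final location = parts[2].split(':');
  if (location.length != 3) return null;

  final line = int.tryParse(location[1]);
  final column = int.tryParse(location[2]);
  if (line == null || column == null) return null;

  return AnalyzerIssue(
    severity: parts[0],
    message: parts[1],
    file: location[0],
    line: line,
    column: column,
    rule: parts[3],
  );
}

void main() {
  const sample = "info • Don't invoke 'print' in production code • "
      'lib/widgets/snapshot_widget.dart:132:5 • avoid_print';
  final issue = parseIssue(sample);
  if (issue != null) {
    print('${issue.rule} at ${issue.file}:${issue.line}');
  }
}
```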

Running flutter analyze regularly (or having your IDE’s analysis on) is highly recommended. It gives you quick feedback on code quality and potential errors. If issues are found, you can fix them as you go. In fact, some lint warnings have automated fixes. You can preview and then apply them with:

dart fix --dry-run
dart fix --apply

The first command lists the proposed changes without touching any files; the second applies the trivial fixes suggested by the analyzer. This can update your code (for example, adding missing const keywords or removing unused imports) according to the linter’s recommendations.
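To make the effect of an automated fix concrete, here is a minimal before-and-after sketch. It assumes the prefer_const_constructors lint is enabled in your configuration; the Point class and describe function are invented for this example. The "before" lines are shown as comments so the file runs as written.

```dart
class Point {
  const Point(this.x, this.y);

  final int x;
  final int y;
}

String describe(Point p) => '(${p.x}, ${p.y})';

void main() {
  // Before the fix, the file might have contained:
  //   import 'dart:io';               // reported as unused_import
  //   print(describe(Point(0, 0)));   // reported by prefer_const_constructors
  //
  // After `dart fix --apply`, the unused import is removed and the
  // constructor call gains a const keyword:
  print(describe(const Point(0, 0))); // prints (0, 0)
}
```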

Getting Started with DCM

You might want to go beyond defaults and start with DCM as explained earlier. Getting started with DCM is super simple!

- Get a license that works for you and your team. You can start for free or request a trial for your team.

- Install DCM for your operating system.

- Set up your IDE, whether it is VS Code or IntelliJ.

- Activate your DCM license: dcm activate --license-key=YOUR_KEY

- Finally, integrate DCM by starting with our recommended set of rules, or enable any of the 450+ rules, run advanced code health commands, enable metrics, integrate with your AI-assisted tooling, and much more.

analysis_options.yaml

dcm:
  extends:
    - recommended

Now go ahead and run DCM commands.

Available commands:
  analyze                     Analyze Dart code for lint rule violations.
  analyze-assets              Analyze image assets for incorrect formats, names, exceeding size, and missing high-resolution images.
  analyze-structure           Analyze Dart project structure.
  analyze-widgets             Analyze Flutter widgets for quality, usages, and duplication.
  calculate-metrics           Collect code metrics for Dart code.
  check-code-duplication      Check for duplicate functions, methods, and test cases.
  check-dependencies          Check for missing, under-promoted, over-promoted, and unused dependencies.
  check-exports-completeness  Check for exports completeness in *.dart files.
  check-parameters            Check for various issues with function, method and constructor parameters.
  check-unused-code           Check for unused code in *.dart files.
  check-unused-files          Check for unused *.dart files.
  check-unused-l10n           Check for unused localization in *.dart files.
  fix                         Apply fixes for fixable analysis issues.
  format                      Format *.dart files.
  init                        Set up DCM.
  run                         Run multiple passed DCM commands at once.

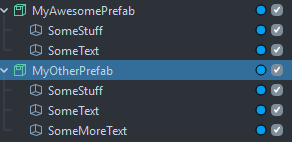

Inside the IDE

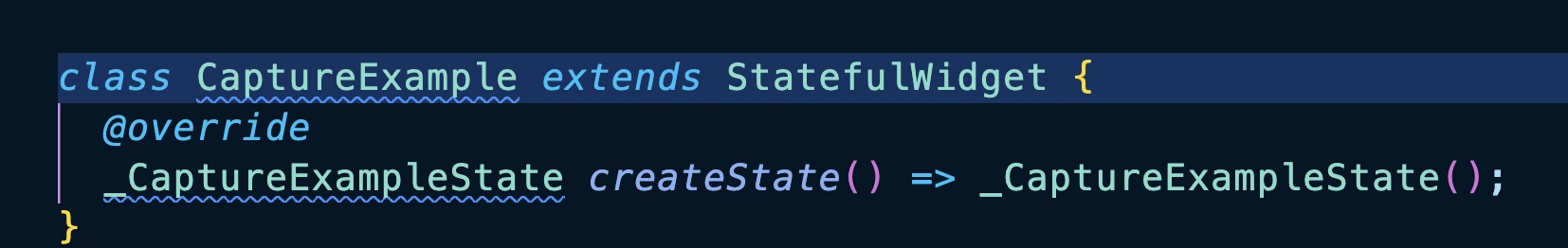

The true power of the analyzer is its real-time integration with IDEs. As code is written in VS Code or Android Studio, the analyzer runs continuously in the background. Any violations of the rules in analysis_options.yaml will appear almost instantly:

- Inline Highlighting: The specific line of code with the issue will be underlined. Hovering over the underlined code will display a tooltip with the same error message seen on the command line.

- Problems/Dart Analysis Tab: A dedicated panel in the IDE (e.g., the "Problems" tab in VS Code) will aggregate all issues across the entire project, allowing for easy navigation to each problem area.

From Defaults to Custom Configuration

While the flutter_lints package provides an excellent default, professional development often requires a more tailored approach. Customizing the analysis_options.yaml file allows a team to enforce stricter checks, adopt specific coding conventions, and ultimately take full ownership of their code quality standards.

This file becomes more than just a configuration; it evolves into a living document that codifies a team's development philosophy, defining "what good code looks like" for their specific project.

Let's see what we can do!

Enforcing Maximum Type Safety

Dart's type system is powerful, but the analyzer can be configured to be even stricter, catching potential runtime errors during development. This is done using the language key under the analyzer entry in analysis_options.yaml.

analysis_options.yaml

analyzer:
  language:
    strict-casts: true
    strict-inference: true
    strict-raw-types: true

- strict-casts: true: This flag ensures that the analyzer reports implicit casts from dynamic to a more specific type, which could otherwise fail at runtime. For example, if the dynamic result of jsonDecode is passed directly to a parameter of type List<String>, this flag will raise an issue because the cast fails when the decoded value is not actually a List<String>.

- strict-inference: true: This forces the type inference engine to be more conservative. It will report an issue if it cannot determine a precise type and would otherwise default to dynamic. This encourages developers to be more explicit with their type annotations, reducing ambiguity.

- strict-raw-types: true: This flag ensures that when a generic class is used, a type argument is always provided. For example, it would flag List myList and encourage the developer to specify the type, such as List<int> or List<dynamic>, making the code's intent explicit.
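The following sketch shows one line that each strict mode would report, next to a version that passes. The flagged lines are kept as comments so the file runs; totalLength and the sample data are made up for this example.

```dart
import 'dart:convert';

/// Adds up the lengths of all strings in [lines].
int totalLength(List<String> lines) =>
    lines.fold(0, (sum, line) => sum + line.length);

void main() {
  const jsonText = '["a", "bcd"]';

  // strict-casts reports: totalLength(jsonDecode(jsonText));
  // because the dynamic result would be cast to List<String> implicitly.
  final decoded = jsonDecode(jsonText) as List<dynamic>;
  final lines = decoded.cast<String>();

  // strict-raw-types reports: List names = ['x'];
  final List<String> names = ['x'];

  // strict-inference reports: final counts = {};
  final counts = <String, int>{'lines': lines.length};

  print(totalLength(lines)); // prints 4
  print(names.length + counts.length); // prints 2
}
```

In each case the fix is the same idea: state the type you mean instead of letting it fall back to dynamic.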

Customizing Flutter Linter Rules

Beyond the top-level analyzer settings, individual linter rules can be managed under the linter key. This allows for fine-grained control over the project's coding style and conventions.

analysis_options.yaml

include: package:flutter_lints/flutter.yaml

linter:
  rules:
    avoid_print: false
    require_trailing_commas: true
    avoid_positional_boolean_parameters: true
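To see what these three settings mean in practice, here is a short sketch of code written to satisfy the configuration above. The formatPrice function is invented for this example.

```dart
/// With avoid_positional_boolean_parameters enabled, the signature
///   String formatPrice(double amount, bool withCurrency)
/// is flagged, because a call such as formatPrice(9.5, true) does not
/// say what `true` means. A named parameter avoids the lint.
String formatPrice(double amount, {required bool withCurrency}) {
  final text = amount.toStringAsFixed(2);
  return withCurrency ? '\$$text' : text;
}

void main() {
  // require_trailing_commas asks for a trailing comma when an argument
  // list is split across several lines, as after `withCurrency: true,`.
  final label = formatPrice(
    9.5,
    withCurrency: true,
  );

  // avoid_print is set to false in the configuration above, so this
  // call is not reported.
  print(label); // prints $9.50
}
```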

Managing Code Exclusions and Severity

Sometimes, a specific rule must be violated in a controlled manner, or a rule's importance needs to be elevated. The analyzer provides mechanisms for both scenarios.

Suppressing Flutter Rules

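There are two ways to exclude code from a specific analysis rule: add an // ignore: <rule_name> comment on or directly above the offending line to silence it once, or add an // ignore_for_file: <rule_name> comment to silence the rule for the whole file.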
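A minimal sketch of both suppression comments in one file follows. The describe function is made up for this example, and the rule names assume those lints are enabled in your analysis_options.yaml.

```dart
// ignore_for_file: avoid_positional_boolean_parameters
// The comment above silences one rule for this entire file.

String describe(String name, bool active) =>
    active ? '$name is active' : '$name is inactive';

void main() {
  // The comment below silences avoid_print for the next line only.
  // ignore: avoid_print
  print(describe('sync', true)); // prints: sync is active
}
```

Line-level ignores keep the exception visible next to the code it applies to, which is usually easier to review than a file-wide one.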

Changing Lint Rule Severity

It is also possible to change the severity of a rule. For example, a team might decide that a specific style violation is so important that it should be treated as an error, not just a warning. This is configured under the analyzer.errors key.

analysis_options.yaml

analyzer:
  errors:
    missing_required_param: error
    prefer_const_constructors: warning
    lines_longer_than_80_chars: info

Exclude files or directories

Sometimes you might want to ignore generated code or specific files (say, your .g.dart files or build/ directory) from analysis to avoid false warnings. You can add an exclude list under the analyzer section:

analysis_options.yaml

analyzer:
  exclude:
    - build/**
    - "**/*.g.dart"
    - lib/generated_plugin_registrant.dart

This level of control allows teams to fine-tune the analyzer's behavior to match their project's specific quality gates and priorities.

DCM Rule and Metric Configuration

Going beyond the defaults with an advanced lint tool for Flutter like DCM also lets you configure a wide range of rules and metrics to match your specific needs.

Here is just one example from those 450+ rules, avoid-banned-imports:

analysis_options.yaml

dcm:
  rules:
    - avoid-banned-imports:
        entries:
          - paths: ['some/folder/.*\.dart', 'another/folder/.*\.dart']
            deny: ['package:flutter/material.dart']
            message: 'Do not import Flutter Material Design library, we should not depend on it!'
            severity: error
          - paths: ['core/.*\.dart']
            deny: ['package:flutter_bloc/flutter_bloc.dart']
            message: 'State management should be not used inside "core" folder.'

Integrating Static Analysis into Your Workflow

Once the ruleset is defined in analysis_options.yaml, the next step is to integrate static analysis deeply into the development workflow. This involves providing more context to the analyzer through code annotations and automating checks to ensure that quality standards are consistently upheld by the entire team.

The Power of Annotations

The meta package provides a set of special annotations that allow developers to communicate their intentions directly to the analysis tools. These annotations provide hints that the analyzer cannot deduce on its own, enabling it to provide more accurate and helpful warnings.

To use them, first add the package as a dependency:

flutter pub add meta

Key annotations include:

- @immutable: When applied to a class, this annotation declares that the class and all of its subtypes must be immutable, meaning every instance field is final. The analyzer then flags the annotated class, or any class that extends, implements, or mixes it in, if it declares a non-final field. This is essential for Flutter widgets to ensure their properties cannot change after construction, which is a core principle of the framework's declarative UI.

- @mustCallSuper: When a method in a subclass overrides a method from a superclass that is annotated with @mustCallSuper, the analyzer will issue a warning if the subclass's method does not include a call to the superclass's method (e.g., super.dispose()). This is critical for preventing resource leaks in the State lifecycle of a StatefulWidget.
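Both annotations can be tried in plain Dart, without any widgets. The sketch below assumes the meta package has been added as a dependency; Point, Resource, and LoggingResource are hypothetical classes invented for this example, and the names in backticks are the analyzer's diagnostic codes.

```dart
import 'package:meta/meta.dart';

@immutable
class Point {
  const Point(this.x, this.y);

  final int x;
  final int y;

  // Adding a non-final field here, such as `int z = 0;`, would be
  // reported as `must_be_immutable`.
}

class Resource {
  bool isOpen = true;

  @mustCallSuper
  void dispose() {
    isOpen = false;
  }
}

class LoggingResource extends Resource {
  @override
  void dispose() {
    print('closing resource');
    // Removing this call would be reported as `must_call_super`.
    super.dispose();
  }
}

void main() {
  final resource = LoggingResource();
  resource.dispose();
  print(resource.isOpen); // prints false
}
```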

Read more about annotations in the Flutter meta package documentation.

Preparing for Publication: The pana Score

For developers who intend to publish packages to the official Dart package repository, pub.dev, static analysis plays a direct and visible role in how the package is perceived by the community. When a package is published, an automated tool called pana (Package ANAlysis) runs a series of checks to generate a quality score, which is prominently displayed on the package's page.

A key category in this evaluation is "Pass static analysis," which executes dart analyze (or flutter analyze) on the package's code. A clean analysis report is essential for achieving a high score. This score acts as a powerful social signal; it tells other developers that the package author is disciplined, adheres to community best practices, and has produced code that is likely to be reliable and well-maintained.

Therefore, mastering static analysis is not just about internal code quality; it is a critical component of building a positive public reputation within the Flutter ecosystem.

Flutter Lint Rules and Automation with Continuous Integration (CI/CD)

The ultimate safety net for maintaining code quality is to automate the analysis process using a Continuous Integration (CI) pipeline. The goal is to create an automated, impartial gatekeeper that prevents code violating the established analysis rules from ever being merged into the project's main branch.

For teams using platforms like GitHub, this can be achieved with a GitHub Actions workflow. The following is a simple, copy-paste-ready example of a workflow file (.github/workflows/analyze.yml) that runs flutter analyze on every push and pull request to the main branch:

name: Flutter Analyze

on:
  push:
    branches: [main]
  pull_request:
    branches: [main]

jobs:
  analyze:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: subosito/flutter-action@v2
        with:
          channel: 'stable'
      - run: flutter pub get
      - run: flutter analyze

You can replicate this workflow in the CI tool of your choice.

DCM also works seamlessly with major CI/CD platforms like GitHub Actions, GitLab CI/CD, Azure DevOps, Bitbucket Pipelines and Codemagic.

More importantly, DCM provides flexible output formats for CI pipelines: console, JSON, Checkstyle, Code Climate, GitLab, GitHub, so you can integrate into your existing build dashboards or pipelines easily.

Check out more details on our DCM CI/CD Integrations.

Conclusion

By properly configuring package:flutter_lints and customizing your analysis_options.yaml file, you create a robust development environment that catches issues early and guides your team toward Flutter best practices.

Remember that linting is not about rigid enforcement but about creating a consistent, readable, and maintainable codebase that your entire team can work with effectively. Choose rules that make sense for your project and team, and don't hesitate to adjust them as your project evolves.