This post continues our series (previous post) on agentic AI research methodology. Building on our previous discussion on AI system design, we now shift focus to an evaluation-first perspective.

Tamara Gaidar, Data Scientist, Defender for Cloud Research

Fady Copty, Principal Researcher, Defender for Cloud Research

TL;DR:

Evaluation-First Approach to Agentic AI Systems

This blog post advocates for evaluation as the core value of any AI product. As generic models grow more capable, their alignment with specific business goals remains a challenge - making robust evaluation essential for trust, safety, and impact.

This post presents a comprehensive framework for evaluating agentic AI systems, starting from business goals and responsible AI principles and moving to detailed performance assessments. It emphasizes using synthetic and real-world data, diverse evaluation methods, and coverage metrics to build a repeatable, risk-aware process that highlights system-specific value.

Why evaluate at all?

While issues like hallucinations in AI systems are widely recognized, we propose a broader and more strategic perspective:

Evaluation is not just a safeguard - it is the core value proposition of any AI product.

As foundation models grow increasingly capable, their ability to self-assess against domain-specific business objectives remains limited. This gap places the burden of evaluation on system designers. Robust evaluation ensures alignment with customer needs, mitigates operational and reputational risks, and supports informed decision-making. In high-stakes domains, the absence of rigorous output validation has already led to notable failures - underscoring the importance of treating evaluation as a first-class concern in agentic AI development.

Evaluating an AI system involves two foundational steps:

- Developing an evaluation plan that translates business objectives into measurable criteria for decision-making.

- Executing the plan using appropriate evaluation methods and metrics tailored to the system’s architecture and use cases.

The following sections detail each step, offering practical guidance for building robust, risk-aware evaluation frameworks in agentic AI systems.

Evaluation Plan Development

The purpose of an evaluation plan is to systematically translate business objectives into measurable criteria that guide decision-making throughout the AI system’s lifecycle. Begin by clearly defining the system’s intended business value, identifying its core functionalities, and specifying evaluation targets aligned with those goals. A well-constructed plan should enable stakeholders to make informed decisions based on empirical evidence. It must encompass end-to-end system evaluation, expected and unexpected usage patterns, quality benchmarks, and considerations for security, privacy, and responsible AI. Additionally, the plan should extend to individual sub-components, incorporating evaluation of their performance and the dependencies between them to ensure coherent and reliable system behavior.

Example - Evaluation of a Financial Report Summarization Agent

To illustrate the evaluation-first approach, consider the example from the previous post: an AI system designed to generate a two-page executive summary from a financial annual report. The system was composed of three agents: one splits the report into chapters, one extracts information from chapters and tables, and one summarizes the findings. The evaluation plan for this system should operate at two levels: end-to-end system evaluation and agent-level evaluation.

End-to-End Evaluation

At the system level, the goal is to assess the agent’s ability to accurately and efficiently transform a full financial report into a concise, readable summary. The business purpose is to accelerate financial analysis and decision-making by enabling stakeholders - such as executives, analysts, and investors - to extract key insights without reading the entire document. Key objectives include improving analyst productivity, enhancing accessibility for non-experts, and reducing time-to-decision.

To fulfill this purpose, the system must support several core functionalities:

- Natural Language Understanding: Extracting financial metrics, trends, and qualitative insights.

- Summarization Engine: Producing a structured summary that includes an executive overview, key financial metrics (e.g., revenue, EBITDA), notable risks, and forward-looking statements.

The evaluation targets should include:

- Accuracy: Fidelity of financial figures and strategic insights.

- Readability: Clarity and structure of the summary for the intended audience.

- Coverage: Inclusion of all critical report elements.

- Efficiency: Time saved compared to manual summarization.

- User Satisfaction: Perceived usefulness and quality by end users.

- Robustness: Performance across diverse report formats and styles.

These targets inform a set of evaluation items that directly support business decision-making. For example, high accuracy and readability in risk sections are essential for reducing time-to-decision and must meet stringent thresholds to be considered acceptable. The plan should also account for edge cases, unexpected inputs, and responsible AI concerns such as fairness, privacy, and security.
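One way to make such targets actionable is to record each evaluation item as data, with an explicit metric and acceptance threshold. The sketch below is illustrative only: the `EvaluationItem` class, the metric names, and the threshold values are assumptions, not part of the original system, and real thresholds would come from stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvaluationItem:
    """One measurable criterion derived from a business objective."""
    target: str        # evaluation target, e.g. "Accuracy"
    description: str   # what is being checked
    metric: str        # hypothetical metric name
    threshold: float   # minimum acceptable score in [0, 1] (illustrative)


# Illustrative plan; names and numbers are assumptions.
PLAN = [
    EvaluationItem("Accuracy", "Fidelity of figures in risk sections", "figure_match_rate", 0.98),
    EvaluationItem("Readability", "Clarity for executives", "judge_readability", 0.80),
    EvaluationItem("Coverage", "Inclusion of critical report elements", "element_recall", 0.90),
]


def failing_items(plan, measured):
    """Return items whose measured score is missing or below the threshold."""
    return [item for item in plan if measured.get(item.metric, 0.0) < item.threshold]


# Scores from a hypothetical evaluation run; "element_recall" was never measured.
measured = {"figure_match_rate": 0.99, "judge_readability": 0.75}
for item in failing_items(PLAN, measured):
    print(f"{item.target}: below threshold {item.threshold}")
```

Treating an unmeasured metric as a failure is a deliberate choice here: an item that was never tested should block a decision just as a low score would.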

Agent-Level Evaluation

Suppose the system is composed of three specialized agents:

- Chapter Analysis

- Tables Analysis

- Summarization

Each agent requires its own evaluation plan. For instance, the chapter analysis agent should be tested across various chapter types, unexpected input formats, and content quality scenarios. Similarly, the tables analysis agent must be evaluated for its ability to extract structured data accurately, and the summarization agent for coherence and factual consistency.

Evaluating Agent Dependencies

Finally, the evaluation must address inter-agent dependencies. In this example, the summarization agent relies on outputs from the chapter and tables analysis agents. The plan should include dependency checks such as local fact verification - ensuring that the summarization agent correctly cites and integrates information from upstream agents. This ensures that the system functions cohesively and maintains integrity across its modular components.
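A local fact verification check can start very simply, for example by confirming that every numeric figure in the summary also appears in some upstream agent output. The following is a minimal sketch under that assumption; the function names and the sample texts are hypothetical, and a production check would also need to handle units, rounding, and paraphrased figures.

```python
import re

# Matches integers, decimals, thousands separators, and an optional percent sign.
NUMBER = re.compile(r"\d[\d,]*(?:\.\d+)?%?")


def extract_figures(text):
    """Return the set of numeric tokens in text, with commas removed."""
    return {m.group().replace(",", "") for m in NUMBER.finditer(text)}


def unsupported_figures(summary, upstream_outputs):
    """Figures that appear in the summary but in none of the upstream outputs."""
    supported = set()
    for output in upstream_outputs:
        supported |= extract_figures(output)
    return extract_figures(summary) - supported


# Hypothetical outputs of the chapter and tables analysis agents.
upstream = ["Revenue: 4,200 million (up 12%)", "EBITDA margin 31.5%"]
summary = "Revenue rose 12% to 4,200 million; EBITDA margin reached 35.1%."

print(unsupported_figures(summary, upstream))  # the transposed margin is flagged
```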

Executing the Evaluation Plan

Once the evaluation plan is defined, the next step is to execute it using appropriate methods and metrics. While traditional techniques such as code reviews and manual testing remain valuable, we focus here on simulation-based evaluation - a practical and scalable approach that compares system outputs against expected results. For each item in the evaluation plan, this process involves:

- Defining representative input samples and corresponding expected outputs

- Selecting simulation methods tailored to each agent under evaluation

- Measuring and analyzing results using quantitative and qualitative metrics

This structured approach enables consistent, repeatable evaluation across both individual agents and the full system workflow.

Defining Samples and Expected Outputs

A robust evaluation begins with a representative set of input samples and corresponding expected outputs. Ideally, these should reflect real-world business scenarios to ensure relevance and reliability. While a comprehensive evaluation may require hundreds or even thousands of real-life examples, early-stage testing can begin with a smaller, curated set - such as 30 synthetic input-output pairs generated via GenAI and validated by domain experts.

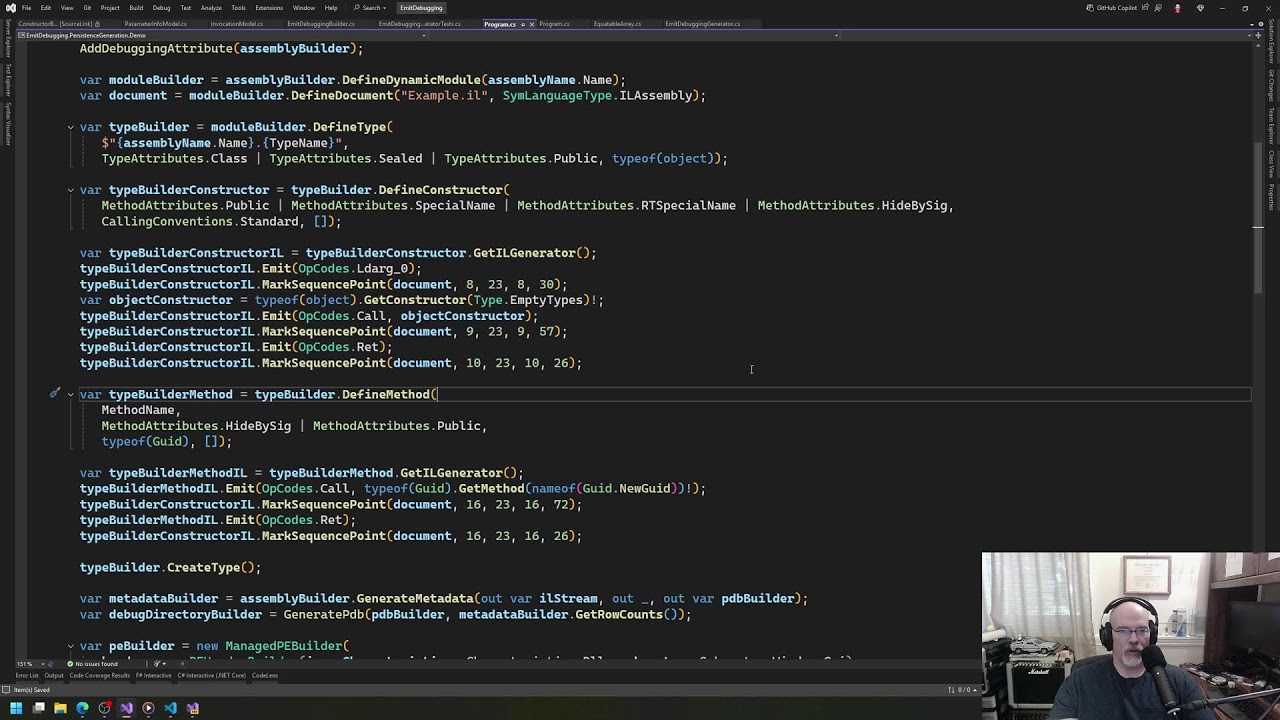

Simulation-Based Evaluation Methods

Early-stage evaluations can leverage lightweight tools such as Python scripts, LLM frameworks (e.g., LangChain), or platform-specific playgrounds (e.g., Azure OpenAI). As the system matures, more robust infrastructure is required to support production-grade testing. It is essential to design tests with reusability in mind - avoiding hardcoded samples and outputs - to ensure continuity across development stages and deployment environments.
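As a rough illustration of designing for reuse, the sketch below keeps samples in a JSON Lines format and passes the system under test and the scoring function in as parameters, so the same harness can be pointed at a different agent or data set later. The field names (`id`, `input`, `expected`), `toy_system`, and the inline sample lines are all assumptions made for the example.

```python
import json
from typing import Callable, Iterable


def load_samples(lines: Iterable[str]):
    """Parse JSON Lines records with 'id', 'input', and 'expected' fields."""
    return [json.loads(line) for line in lines if line.strip()]


def run_evaluation(samples, system: Callable[[str], str], score: Callable[[str, str], float]):
    """Run the system on every sample and score its output against the expected one."""
    return {s["id"]: score(system(s["input"]), s["expected"]) for s in samples}


# In practice these lines would be read from a versioned samples file.
sample_lines = [
    '{"id": "s1", "input": "Revenue was 10.", "expected": "revenue: 10"}',
    '{"id": "s2", "input": "Revenue was 20.", "expected": "revenue: 20"}',
]


def toy_system(text):
    """Stand-in for the agent under test; always returns the same answer."""
    return "revenue: 10"


def exact_match(actual, expected):
    """Score 1.0 when the outputs are identical after trimming whitespace."""
    return 1.0 if actual.strip() == expected.strip() else 0.0


results = run_evaluation(load_samples(sample_lines), toy_system, exact_match)
print(results)
```

Because neither the samples nor the scorer are hardcoded into `run_evaluation`, moving from a playground to a production pipeline only changes what is passed in.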

Measuring Evaluation Outcomes

Evaluation results should be assessed in two primary dimensions:

- Output Quality: Comparing actual system outputs against expected results.

- Coverage: Ensuring all items in the evaluation plan are adequately tested.

Comparing Outputs

Agentic AI systems often generate unstructured text, making direct comparisons challenging. To address this, we recommend a combination of:

- LLM-as-a-Judge: Using large language models to evaluate outputs based on predefined criteria.

- Domain Expert Review: Leveraging human expertise for nuanced assessments.

- Similarity Metrics: Applying lexical and semantic similarity techniques to quantify alignment with reference outputs.

Using LLMs as Evaluation Judges

Large Language Models (LLMs) are emerging as a powerful tool for evaluating AI system outputs, offering a scalable alternative to manual review. Their ability to emulate domain-expert reasoning enables fast, cost-effective assessments across a wide range of criteria - including correctness, coherence, groundedness, relevance, fluency, hallucination detection, sensitivity, and even code readability. When properly prompted and anchored to reliable ground truth, LLMs can deliver high-quality classification and scoring performance.

For example, consider the following prompt used to evaluate the alignment between a security recommendation and its remediation steps:

“Below you will find a description of a security recommendation and relevant remediation steps. Evaluate whether the remediation steps adequately address the recommendation. Use a score from 1 to 5:

- 1: Does not solve at all

- 2: Poor solution

- 3: Fair solution

- 4: Good solution

- 5: Exact solution

Security recommendation: {recommendation}

Remediation steps: {steps}”
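A prompt like this is typically wrapped in a small function that fills in the template and parses the score from the reply. The sketch below assumes the model is reachable through some `call_llm(prompt) -> str` callable, which is a placeholder rather than a real API; the "Answer with the score only" line is also an addition made here to simplify parsing.

```python
import re
from typing import Callable

JUDGE_PROMPT = (
    "Below you will find a description of a security recommendation and relevant "
    "remediation steps. Evaluate whether the remediation steps adequately address "
    "the recommendation. Use a score from 1 to 5:\n"
    "1: Does not solve at all\n"
    "2: Poor solution\n"
    "3: Fair solution\n"
    "4: Good solution\n"
    "5: Exact solution\n"
    "Answer with the score only.\n\n"  # assumption: not in the original prompt
    "Security recommendation: {recommendation}\n"
    "Remediation steps: {steps}"
)


def parse_score(reply: str) -> int:
    """Extract the first standalone digit 1-5 from the judge's reply."""
    match = re.search(r"\b([1-5])\b", reply)
    if match is None:
        raise ValueError(f"no score between 1 and 5 found in reply: {reply!r}")
    return int(match.group(1))


def judge(recommendation: str, steps: str, call_llm: Callable[[str], str]) -> int:
    """Fill in the prompt, call the (injected) model, and return the parsed score."""
    prompt = JUDGE_PROMPT.format(recommendation=recommendation, steps=steps)
    return parse_score(call_llm(prompt))


# Stub standing in for a real model call, so the example runs offline.
def fake_llm(prompt: str) -> str:
    return "Score: 4"


print(judge("Enable MFA for admin accounts", "Turn on MFA in the identity settings", fake_llm))
```

Failing loudly on an unparseable reply, rather than defaulting to a score, keeps malformed judge outputs from silently skewing the results.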

While LLM-based evaluation offers significant advantages, it is not without limitations. Performance is highly sensitive to prompt design and the specificity of evaluation criteria. Subjective metrics - such as “usefulness” or “helpfulness” - can lead to inconsistent judgments depending on domain context or user expertise. Additionally, LLMs may exhibit biases, such as favoring their own generated responses, preferring longer answers, or being influenced by the order of presented options.

Although LLMs can be used independently to assess outputs, we strongly recommend using them in comparison mode - evaluating actual outputs against expected ones - to improve reliability and reduce ambiguity. Regardless of method, all LLM-based evaluations should be validated against real-world data and expert feedback to ensure robustness and trustworthiness.
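Validation against expert feedback can begin with a simple agreement rate between judge scores and expert scores on the same samples. The sketch below is one possible form of that check; the scores are made-up illustrative values, and more formal statistics (such as chance-corrected agreement) may be preferable on larger sets.

```python
def agreement(judge_scores, expert_scores, tolerance=0):
    """Fraction of samples where judge and expert scores differ by at most `tolerance`."""
    if len(judge_scores) != len(expert_scores) or not judge_scores:
        raise ValueError("score lists must be non-empty and of equal length")
    hits = sum(abs(j - e) <= tolerance for j, e in zip(judge_scores, expert_scores))
    return hits / len(judge_scores)


# Illustrative 1-5 scores for five samples; not real data.
judge_scores = [5, 4, 2, 3, 1]
expert_scores = [5, 3, 2, 5, 1]

print(agreement(judge_scores, expert_scores))               # exact agreement
print(agreement(judge_scores, expert_scores, tolerance=1))  # within one point
```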

Domain Expert Evaluation

Engaging domain experts to assess AI output remains one of the most reliable methods for evaluating quality, especially in high-stakes or specialized contexts. Experts can provide nuanced judgments on correctness, relevance, and usefulness that automated methods may miss. However, this approach is inherently limited in scalability, repeatability, and cost-efficiency. It is also susceptible to human biases - such as cultural context, subjective interpretation, and inconsistency across reviewers - which must be accounted for when interpreting results.

Similarity Techniques

Similarity techniques offer a scalable alternative by comparing AI-generated outputs against reference data using quantitative metrics. These methods assess how closely the system’s output aligns with expected results, using measures such as exact match, lexical overlap, and semantic similarity. While less nuanced than expert review, similarity metrics are useful for benchmarking performance across large datasets and can be automated for continuous evaluation. They are particularly effective when ground truth data is well-defined and structured.
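The measures mentioned above can be sketched with the standard library alone. In this example, exact match and Jaccard overlap are lexical measures, while cosine similarity over word counts is used only as a simple stand-in for semantic similarity, which in practice is usually computed over embedding vectors. The tokenization rule and sample sentences are assumptions.

```python
import math
import re
from collections import Counter


def tokens(text):
    """Lowercase alphanumeric tokens; punctuation is ignored."""
    return re.findall(r"[a-z0-9]+", text.lower())


def exact_match(output, reference):
    """True when both texts have the same token sequence."""
    return tokens(output) == tokens(reference)


def jaccard(output, reference):
    """Lexical overlap: shared unique tokens divided by all unique tokens."""
    a, b = set(tokens(output)), set(tokens(reference))
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cosine(output, reference):
    """Cosine similarity between word-count vectors."""
    a, b = Counter(tokens(output)), Counter(tokens(reference))
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


reference = "Revenue grew 12% year over year"
output = "Revenue grew 12% over the year"

print(exact_match(output, reference))
print(round(jaccard(output, reference), 3))
print(round(cosine(output, reference), 3))
```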

Coverage Evaluation in Agentic AI Systems

A foundational metric in any evaluation framework is coverage - ensuring that all items defined in the evaluation plan are adequately tested. However, simple checklist-style coverage is insufficient, as each item may require nuanced assessment across different dimensions of functionality, safety, and robustness.

To formalize this, we introduce two metrics inspired by software engineering practices:

- Prompt-Coverage: Assesses how well a single prompt invocation addresses both its functional objectives (e.g., “summarize financial risk”) and non-functional constraints (e.g., “avoid speculative language” or “ensure privacy compliance”). This metric should reflect the complexity embedded in the prompt and its alignment with business-critical expectations.

- Agentic-Workflow Coverage: Measures the completeness of evaluation across the logical and operational dependencies within an agentic workflow. This includes interactions between agents, tools, and tasks - analogous to branch coverage in software testing. It ensures that all integration points and edge cases are exercised.
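Both metrics can be approximated as the fraction of required items that at least one test exercises. The sketch below applies that idea to prompt requirements and to workflow edges; it is one possible reading of the metrics rather than a formal definition, and the requirement labels, agent names, the `report` input node, and the test traces are hypothetical.

```python
def coverage(required, exercised):
    """Return (ratio, missing) for required items versus items that tests exercised."""
    required = set(required)
    if not required:
        raise ValueError("the evaluation plan lists no required items")
    covered = required & set(exercised)
    return len(covered) / len(required), required - covered


# Prompt-coverage: functional and non-functional requirements of one prompt.
prompt_requirements = {
    "summarize financial risk",
    "avoid speculative language",
    "ensure privacy compliance",
}
tested_requirements = {"summarize financial risk", "avoid speculative language"}

# Agentic-workflow coverage: dependencies between agents, modeled as edges.
workflow_edges = {
    ("report", "chapter_analysis"),
    ("report", "tables_analysis"),
    ("chapter_analysis", "summarization"),
    ("tables_analysis", "summarization"),
}
# Each test trace records the set of edges it exercised.
traces = [
    {("report", "chapter_analysis"), ("chapter_analysis", "summarization")},
    {("report", "tables_analysis")},
]
exercised_edges = set().union(*traces)

prompt_ratio, prompt_gaps = coverage(prompt_requirements, tested_requirements)
workflow_ratio, workflow_gaps = coverage(workflow_edges, exercised_edges)
print(prompt_ratio, prompt_gaps)
print(workflow_ratio, workflow_gaps)
```

Returning the missing items alongside the ratio makes the gaps themselves visible, which is what risk-based prioritization needs.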

We recommend aiming for the highest possible coverage across evaluation dimensions. Coverage gaps should be prioritized based on their potential risk and business impact, and revisited regularly as prompts and workflows evolve to ensure continued alignment and robustness.

Closing Thoughts

As agentic AI systems become increasingly central to business-critical workflows, evaluation must evolve from a post-hoc activity to a foundational design principle. By adopting an evaluation-first mindset - grounded in structured planning, simulation-based testing, and comprehensive coverage - you not only mitigate risk but also unlock the full strategic value of your AI solution. Whether you're building internal tools or customer-facing products, a robust evaluation framework ensures your system is trustworthy, aligned with business goals, and differentiated from generic models.