SharedTree is a data structure that allows users to collaboratively edit hierarchical data, such as documents, spreadsheets, or outlines. SharedTree is part of the Fluid Framework, which is a platform for building distributed applications that enable real-time collaboration and data synchronization.

The basic unit of a SharedTree is a node. SharedTree supports four types of nodes:

- Object nodes contain named fields that can hold any type of node. Object nodes are like JSON or JavaScript objects.

- Map nodes are a collection of key-value pairs, where the keys are strings, and the values can be any type of node. Map nodes are like object nodes, but they allow more flexibility in adding, removing, and updating keys and values.

- Array nodes are a collection of nodes that are ordered by index. Array nodes are like JSON arrays or JavaScript arrays.

- Leaf nodes are nodes that have no children and only a single value as their content. The value can be a primitive type, such as a string, a number, a boolean, or null. Leaf nodes are like JSON values or JavaScript primitives.

What are merge semantics?

Merge semantics define how SharedTree reconciles concurrent edits – most importantly, those that may conflict. Concurrent edits are changes that are made by different users or processes at the same time, or in an overlapping time window. More specifically, two changes are concurrent if the clients making each change have not received the other’s change. For example, if Alice and Bob are both editing the same tree, and Alice adds a new node while Bob removes an existing node, these are concurrent edits.

Concurrent edits can lead to situations where two or more edits change the same part of the tree. For example, if Alice and Bob both try to edit the same node, or if Alice tries to add a child to a node that Bob removed. Merge semantics determine how these situations are handled.

How edits are sequenced

Sequencing impacts merge semantics by determining the order in which edits are applied to the tree. Sequencing is determined by the Fluid relay service, which assigns a sequence number to each edit based on the order in which it receives them. The sequence number determines the logical order of edits for all clients.

When edits occur concurrently, each editor has no knowledge of the other editor’s concurrent edit. Once concurrent edits have been sequenced and the clients have received that sequence, the SharedTree merge semantics will determine the correct state of the tree based on the assigned sequence.

Handling concurrent edits

Reconciling concurrent edits is trivial when they affect independent parts of the tree. However, it’s possible for some concurrent edits to affect overlapping parts of the tree. This leads to a situation where there may be multiple reasonable outcomes.

For example, if Alice and Bob concurrently change the color of the same item such that Alice would change it from yellow to red and Bob would change it from yellow to blue, then one could imagine multiple possible outcomes:

- change the color to red

- change the color to blue

- keep the color yellow

Different merge semantics may lead to different outcomes. In this case, the item will either be red or blue depending on how the changes are sequenced.

SharedTree never models edits (or documents) as being “conflicted”, even in the scenario just described. In fact, currently SharedTree never surfaces a conflict where one of two states or one of two edits must be selected as the winner or manually reconciled. Instead of treating edits as conflicted, SharedTree always applies each edit one after the other in sequencing order as long as the edit is valid. For the example above, this means that it is the last edit to be sequenced that determines the final color for the item.

This approach works because SharedTree’s edits work to capture precise intentions, which enable SharedTree to resolve potential conflicts either by accommodating all concurrent intentions, or by picking one to override the others in a deterministic way.

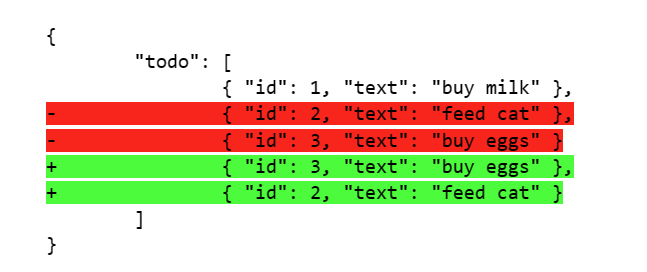

For example, consider the following array, managed by a collaborative TODO application:

Alice might reorder the items so that purchases are grouped together. The resulting state would be as follows:

Concurrently, Bob may update item #2 to call out the cat by name. The resulting state would be as follows:

SharedTree understands that Alice’s intent is to move item 2 to the end of the array and that Bob’s intent is to change the text of item 2 and that these two changes are both valid and mutually compatible.

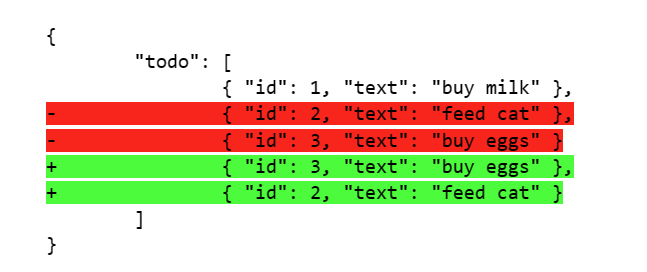

Now consider how a system like Git sees each change. Here is the diff for reordering the items:

Here is the diff for updating the text property on item #2:

Unlike SharedTree, Git only understands the changes through diffing, which makes it unable to perceive the fact that the intentions behind the changes are not in conflict and can both be accommodated. While this makes Git very flexible, it is forced to rely on human intervention in all but trivial merges.

Moving is not copying

SharedTree allows an item to be moved from one location to another in the tree. Edits made to the item before it is moved will still apply even if they end up (because of concurrency) being applied after the item moves. This works because the move doesn’t involve a new copy of the moved item at the destination and the deletion of the original at the source. It is a true move of the item.

Consider the example above: Alice moves the to do item from one position to another, while Bob concurrently edits the text of the item. If the move were just a copy, then, if Alice’s move were to be sequenced first, Bob’s edit would not apply to the copy at the destination. By contrast, SharedTree’s move semantics ensure that Bob’s edit will be visible on the item at the destination no matter the sequencing order.

Removal is movement

SharedTree allows items to be removed from the tree. This occurs when an element is removed from an array node, when a key is deleted from a map node, or when the field on an object is overwritten or cleared.

Consider the following scenario: Alice removes a whole array of to do items, while Bob concurrently moves an item to a different array. SharedTree’s removal semantics ensure that Bob’s move will still apply, regardless of whether it ends up being sequenced before or after Alice removed the list where the item came from.

If that weren’t the case, then there would be a race between Alice’s and Bob’s edits, where Bob’s edit would not apply if Alice’s edit were sequenced first, and Bob would lose the item he moved.

In the case where an item is changed as it is removed, those modifications may end up being invisible. However, they will be preserved, so that if the removal is undone the changes will be present.

Last write wins

It’s possible for concurrent edits to represent fundamentally incompatible user intentions. Whenever that happens, the edit that is sequenced last will win.

Example 1: Alice and Bob concurrently change the background color of the same item such that Alice changes it from yellow to red, and Bob changes it from yellow to blue. If the edits are sequenced such that Alice’s edit is applied first and Bob’s edit is applied second, then the background color of the item will change from yellow to red and then from red to blue. If the edits are sequenced in the opposite order, the item’s background color will change from yellow to blue and then from blue to red.

Example 2: Alice and Bob concurrently move the same item such that Alice moves it from location X to location A, and Bob moves it from location X to location B. If the edits are sequenced such that Alice’s edit is applied first and Bob’s edit is applied second, then the item will first be moved from X to A and then from A to B. If the edits are sequenced in the opposite order, then the note will first be moved from X to B and then from B to A. Note that, because we treat removal as movement, this is true even when removals are involved: if the removal is sequenced last, then the node will be moved and then removed. If the move is sequenced last, then the node will be removed and then moved.

Constraints

There are some base conditions that SharedTree uses to ensure that a change is valid – these primarily focus on ensuring that the tree is always compliant with its schema after each change. SharedTree is also designed to allow developers to apply additional constraints to a change to help developers preserve their own application’s invariants. This feature is currently still being developed and we are always listening to feedback on what constraints would be helpful. For example, a developer might want to prevent the removal of an item if the item was concurrently changed or moved. If so, they could use a constraint that tests for these cases and drops the removal if the node has been changed or moved.

To learn more about SharedTree merge semantics see: https://aka.ms/fluid/tree/merge_semantics

The post Fluid Framework: How SharedTree merges changes appeared first on Microsoft 365 Developer Blog.