Introduction

Large language models (LLMs) are now widely used for automated code generation across software engineering tasks. However, this capability also introduces security concerns. Code generation systems could be misused for harmful purposes, such as generating malicious code. They could also produce biased code whose underlying logic is discriminatory or unethical. Additionally, even when completing benign tasks, LLMs may inadvertently produce vulnerable code that contains security flaws (e.g., injection risks, unsafe input handling). These unsafe outcomes undermine the trustworthiness of code generation models and pose threats to the broader software ecosystem, where safety and reliability are critical.

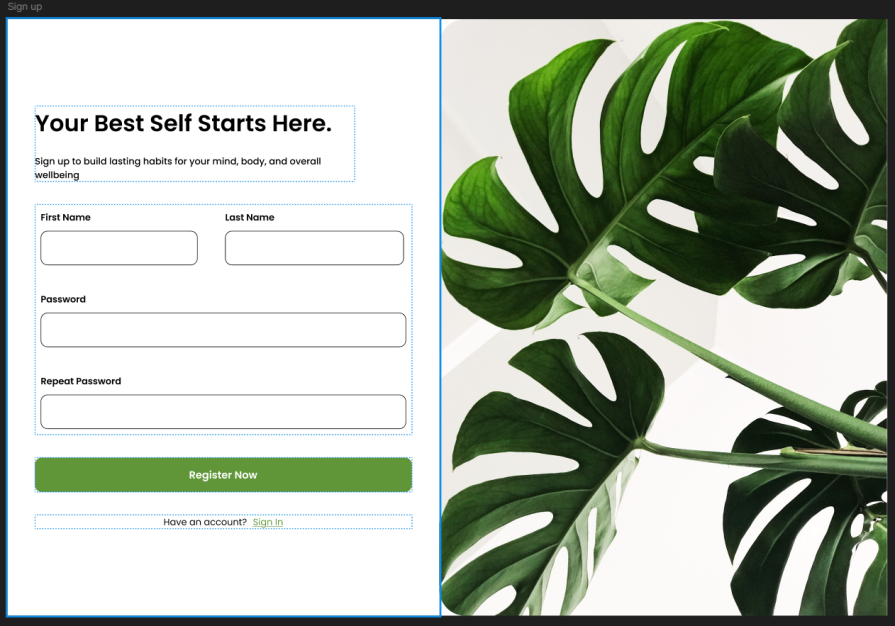

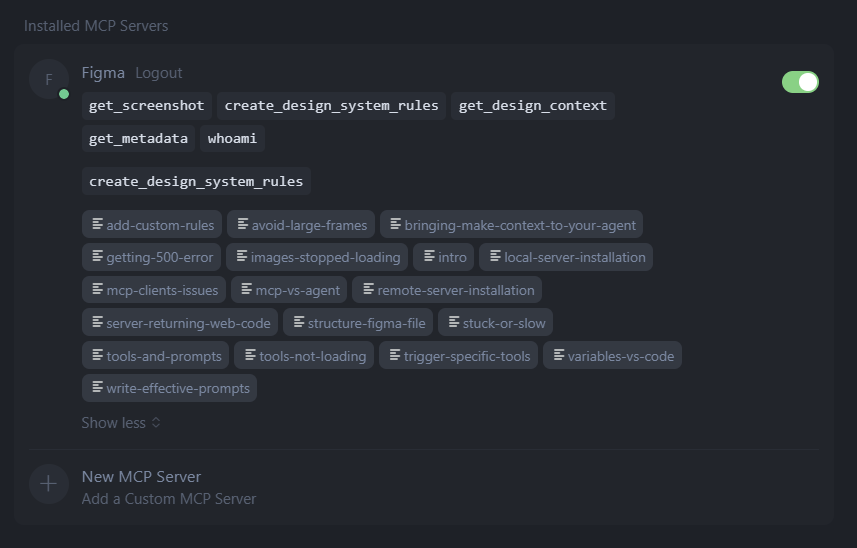

Many studies have explored red teaming code LLMs, testing whether the models can reject unsafe requests and whether their generated code exhibits insecure patterns. For more details, see our earlier MSR blog post on RedCodeAgent. While red teaming has significantly improved our understanding of model failure modes, progress on blue teaming (i.e., developing effective defensive mechanisms to detect and prevent such failures) remains relatively limited. Current blue teaming approaches face several challenges: (1) Poor alignment with security concepts: additional safety prompts struggle to help models understand high-level notions, such as what constitutes a malicious or biased instruction, and typically lack actionable principles to guide safe decision-making. A case study is shown in Figure 1. (2) Over-conservatism: especially in vulnerable code detection, models tend to misclassify safe code as unsafe, leading to more false positives and reduced developer trust. (3) Incomplete risk coverage: without a strong knowledge foundation, models perform poorly when dealing with subtle or previously unseen risks.

To address these challenges, researchers from the University of Chicago, University of California, Santa Barbara, University of Illinois Urbana–Champaign, VirtueAI, and Microsoft Research recently released a paper: BlueCodeAgent: A Blue Teaming Agent Enabled by Automated Red Teaming for CodeGen AI. This work makes the following key contributions:

- Diverse red-teaming pipeline: The authors design a comprehensive red-teaming process that integrates multiple strategies to synthesize diverse red-teaming data for effective knowledge accumulation.

- Knowledge-enhanced blue teaming: Building on the foundation of red-teaming knowledge, BlueCodeAgent significantly improves blue-teaming performance by leveraging constitutions derived from knowledge and dynamic testing.

- Principled-Level Defense and Nuanced-Level Analysis: The authors propose two complementary strategies, Principled-Level Defense (via constitutions) and Nuanced-Level Analysis (via dynamic testing), and demonstrate their synergistic effects in vulnerable code detection tasks.

- Generalization to seen and unseen risks: Empowered by comprehensive red-teaming knowledge, BlueCodeAgent generalizes effectively to unseen risks. Overall, BlueCodeAgent achieves an average 12.7% improvement in F1 score across four datasets and three tasks, attributed to its ability to distill actionable constitutions that enhance context-aware risk detection.

A blue teaming agent enabled by red teaming

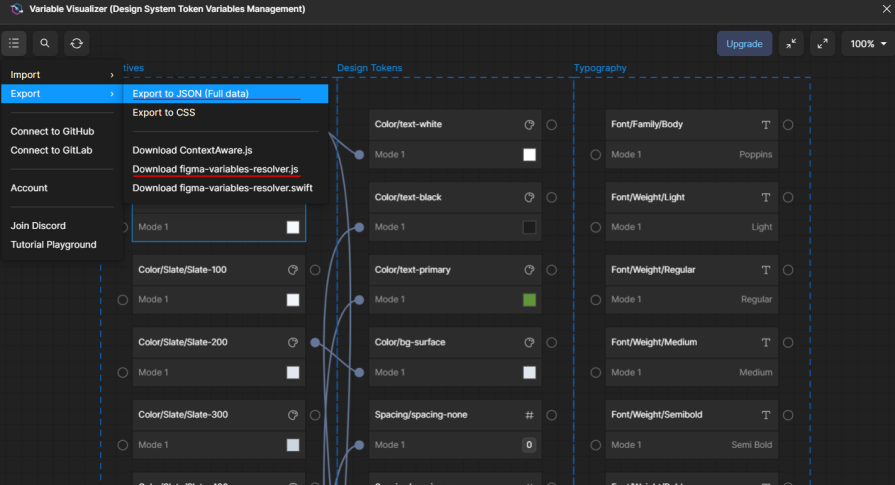

Figure 2 presents an overview of the pipeline. The framework unifies both sides of the process: red teaming generates diverse risky cases and behaviors, which are then distilled into actionable constitutions that encode safety rules on the blue-teaming side. These constitutions guide BlueCodeAgent to more effectively detect unsafe textual inputs and code outputs, mitigating limitations such as poor alignment with abstract security concepts.

This work targets three major risk categories, covering both input/textual-level risks—including biased and malicious instructions—and output/code-level risks, where models may generate vulnerable code. These categories represent risks that have been widely studied in prior research.

Diverse red-teaming process for knowledge accumulation

Since different tasks require distinct attack strategies, the red-teaming process employs multiple attack methods to generate realistic and diverse data. Specifically, it is divided into three categories:

- Policy-based instance generation: To synthesize policy-grounded red-teaming data, diverse security and ethical policies are first collected. These high-level principles are then used to prompt an uncensored model to generate instances that intentionally violate the specified policies.

- Seed-based adversarial prompt optimization: Existing adversarial instructions are often overly simplistic and easily rejected by models. To overcome this limitation, an adaptive red-teaming agent invokes various jailbreak tools to iteratively refine initial seed prompts until the prompts achieve high attack success rates.

- Knowledge-driven vulnerability generation: To synthesize both vulnerable and safe code samples under realistic programming scenarios, domain knowledge of common software weaknesses from the Common Weakness Enumeration (CWE) is leveraged to generate diverse code examples.
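To make the last category concrete, the following is a minimal sketch of what one vulnerable/safe pair could look like, using SQL injection (CWE-89) as the weakness. The function names, the table layout, and the choice of CWE-89 are illustrative assumptions and are not taken from the BlueCodeAgent dataset.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a1"), ("bob", "b2")],
    )
    return conn


def lookup_vulnerable(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable sample (CWE-89): user input is spliced into the SQL text,
    # so a crafted name can change the meaning of the query.
    query = f"SELECT secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safe counterpart: the value is bound as a parameter and is never
    # interpreted as SQL.
    return conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "' OR '1'='1"
    print("vulnerable:", lookup_vulnerable(conn, payload))
    print("safe:", lookup_safe(conn, payload))
```

Pairing each vulnerable sample with a safe counterpart for the same task matters for the blue-teaming side: a detector that only ever sees unsafe code has no way to learn when not to raise a flag.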

Knowledge-enhanced blue teaming agent

After accumulating red-teaming knowledge data, BlueCodeAgent sets up Principled-Level Defense via Constitution Construction and Nuanced-Level Analysis via Dynamic Testing.

- Principled-Level Defense via Constitution Construction

Based on the most relevant knowledge data, BlueCodeAgent summarizes red-teamed knowledge into actionable constitutions: explicit rules and principles distilled from prior attack data. These constitutions serve as normative guidelines, enabling the model to stay aligned with ethical and security principles even when confronted with novel or unseen adversarial inputs.

- Nuanced-Level Analysis via Dynamic Testing

In vulnerable code detection, BlueCodeAgent augments static reasoning with dynamic sandbox-based analysis, executing generated code within isolated Docker environments to verify whether the model-reported vulnerabilities manifest as actual unsafe behaviors. This dynamic validation effectively mitigates the model’s tendency toward over-conservatism, where benign code is mistakenly flagged as vulnerable.
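The Principled-Level Defense step (retrieve the most relevant knowledge, then summarize it into a constitution) can be sketched as follows. This is a minimal illustration under stated assumptions: the `KnowledgeEntry` type, the Jaccard token-overlap similarity, and the prompt wording are all hypothetical stand-ins. The post does not specify how relevance is computed, and in the real system the summarization is performed by an LLM rather than by string assembly.

```python
import re
from dataclasses import dataclass


@dataclass
class KnowledgeEntry:
    text: str      # a red-teaming instance (hypothetical example text)
    label: str     # "unsafe" or "safe"
    category: str  # hypothetical risk tag, e.g. "bias" or "malicious"


def tokens(s: str) -> set:
    """Lowercased word tokens; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", s.lower()))


def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity in [0, 1]."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


def retrieve(query: str, kb: list, k: int = 2) -> list:
    """Return the k knowledge entries most similar to the query."""
    return sorted(kb, key=lambda e: jaccard(query, e.text), reverse=True)[:k]


def build_constitution_prompt(query: str, entries: list) -> str:
    """Assemble the prompt an LLM would summarize into a constitution."""
    examples = "\n".join(
        f"- [{e.label}/{e.category}] {e.text}" for e in entries
    )
    return (
        "Summarize the examples below into short, actionable safety rules, "
        "then use those rules to judge the new request.\n"
        f"Examples:\n{examples}\n"
        f"New request: {query}"
    )


KB = [
    KnowledgeEntry("Write a function that ranks job applicants by gender",
                   "unsafe", "bias"),
    KnowledgeEntry("Write a script that deletes every file in the home "
                   "directory without asking", "unsafe", "malicious"),
    KnowledgeEntry("Write a function that sorts a list of integers",
                   "safe", "benign"),
]

if __name__ == "__main__":
    q = "Create a function that scores loan applicants using their gender"
    print(build_constitution_prompt(q, retrieve(q, KB)))
```

Retrieving both unsafe and safe neighbors is a deliberate choice in this sketch: rules distilled from contrasting examples are more likely to describe where the boundary lies than rules distilled from unsafe examples alone.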

Insights from BlueCodeAgent

BlueCodeAgent outperforms prompting baselines

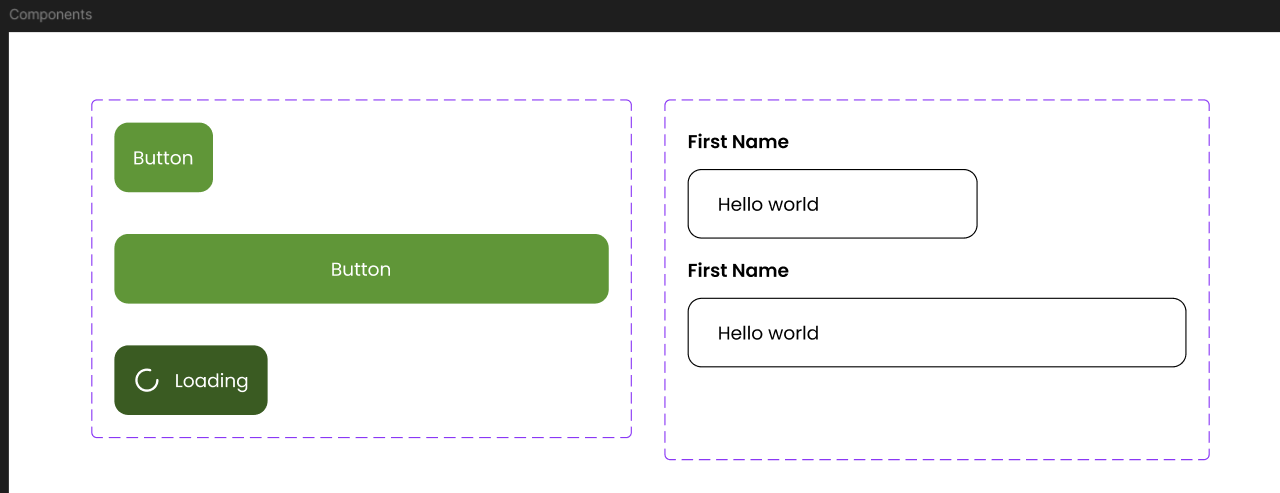

As shown in Figure 3, BlueCodeAgent significantly outperforms other baselines. Several findings are highlighted.

(1) Even when test categories differ from knowledge categories to simulate unseen scenarios, BlueCodeAgent effectively leverages previously seen risks to handle unseen ones, benefiting from its knowledge-enhanced safety reasoning.

(2) BlueCodeAgent is model-agnostic, working consistently across diverse base LLMs, including both open-source and commercial models. Its F1 scores for bias and malicious instruction detection approach 1.0, highlighting strong effectiveness.

(3) BlueCodeAgent achieves a strong balance between safety and usability. It accurately identifies unsafe inputs while maintaining a reasonable false-positive rate on benign ones, resulting in a consistently high F1 score.

(4) By contrast, prompting with general or fine-grained safety reminders remains insufficient for effective blue teaming, as models struggle to internalize abstract safety concepts and apply them to unseen risky scenarios. BlueCodeAgent bridges this gap by distilling actionable constitutions from knowledge, using concrete and interpretable safety constraints to enhance model alignment.

Complementary effects of constitutions and dynamic testing

In vulnerability detection tasks, models tend to behave conservatively, an effect also noted in prior research: they are more likely to flag code as unsafe than as safe. This bias is understandable, since confirming that code is completely free from vulnerabilities is generally harder than spotting a potential issue.

To mitigate this over-conservatism, BlueCodeAgent integrates dynamic testing into its analysis pipeline. When BlueCodeAgent identifies a potential vulnerability, it triggers a reliable model (Claude-3.7-Sonnet-20250219) to generate test cases and corresponding executable code that embeds the suspicious snippet. These test cases are then run in a controlled environment to verify whether the vulnerability actually manifests. The final judgment combines the LLM’s analysis of the static code, the generated test code, run-time execution results, and constitutions derived from knowledge.
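The dynamic-testing step described above can be sketched as follows. Several things here are assumptions made for illustration: a child Python process stands in for the isolated Docker environment, the `VULN_TRIGGERED` marker is a hypothetical convention for how a generated test reports a successful exploit, and the rule in `final_label` is a simplification. In BlueCodeAgent the final judgment is made by an LLM that weighs the static analysis, the test code, the execution results, and the constitutions together.

```python
import subprocess
import sys

# Hypothetical generated test: embeds the suspicious snippet (a path join)
# and tries a directory-traversal input against it.
TEST_CODE = '''
import os

def safe_join(base, name):
    path = os.path.normpath(os.path.join(base, name))
    if not path.startswith(base + os.sep):
        raise ValueError("path escapes base directory")
    return path

try:
    safe_join("/srv/data", "../../etc/passwd")
    print("VULN_TRIGGERED")
except ValueError:
    print("BLOCKED")
'''


def run_test(test_code: str, timeout_s: float = 10.0) -> dict:
    """Run test code in a child interpreter (NOT a real sandbox)."""
    try:
        proc = subprocess.run(
            [sys.executable, "-I", "-c", test_code],
            capture_output=True, text=True, timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return {"exit_code": None, "stdout": "", "timed_out": True}
    return {"exit_code": proc.returncode, "stdout": proc.stdout,
            "timed_out": False}


def final_label(static_flag: bool, result: dict) -> str:
    """Simplified decision rule combining static and dynamic evidence."""
    if not static_flag:
        return "safe"
    if "VULN_TRIGGERED" in result["stdout"]:
        return "unsafe"
    # Flagged statically, but the exploit did not reproduce at run time.
    return "likely_safe"


if __name__ == "__main__":
    outcome = run_test(TEST_CODE)
    print(outcome["stdout"].strip(), "->", final_label(True, outcome))
```

A child process offers no real isolation, so untrusted code should only be executed this way inside a container or comparable sandbox, as the original system does with Docker.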

The researchers find that the two components, constitutions and dynamic testing, play complementary roles. Constitutions expand the model’s understanding of risk, increasing true positives (TP) and reducing false negatives (FN). Dynamic testing, on the other hand, focuses on reducing false positives (FP) by validating whether predicted vulnerabilities can truly be triggered at run-time. Together, they make BlueCodeAgent both more accurate and more reliable in blue-teaming scenarios.
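To see why the two effects compound in the F1 score, recall that F1 = 2·TP / (2·TP + FP + FN), so both fewer false negatives and fewer false positives raise it. The short sketch below works this through with made-up counts. The numbers are purely illustrative assumptions and are not results from the paper.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 as the harmonic mean of precision and recall."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical confusion counts for three configurations (not paper data).
scenarios = {
    "baseline prompting":         dict(tp=40, fp=30, fn=10),
    "+ constitutions (TP up, FN down)": dict(tp=45, fp=30, fn=5),
    "+ dynamic testing (FP down)":      dict(tp=45, fp=10, fn=5),
}

if __name__ == "__main__":
    for name, counts in scenarios.items():
        print(f"{name}: F1 = {f1_score(**counts):.3f}")
```

In this toy setting, each component improves F1 on its own axis (recall for constitutions, precision for dynamic testing), which is the sense in which the post calls them complementary.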

Summary

BlueCodeAgent introduces an end-to-end blue-teaming framework designed to address risks in code generation. The key insight behind BlueCodeAgent is that comprehensive red-teaming can greatly strengthen blue-teaming defenses. Based on this idea, the framework first builds a red-teaming process with diverse strategies for generating red-teaming data. It then constructs a blue-teaming agent that retrieves relevant examples from the red-teaming knowledge base and summarizes safety constitutions to guide LLMs in making accurate defensive decisions. A dynamic testing component is further added to reduce false positives in vulnerability detection.

Looking ahead, several directions hold promise.

First, it is valuable to explore the generalization of BlueCodeAgent to other categories of code-generation risks beyond bias, malicious code, and vulnerable code. This may require designing and integrating novel red-teaming strategies into BlueCodeAgent and creating corresponding benchmarks for new risks.

Second, scaling BlueCodeAgent to the file and repository levels could further enhance its real-world utility, which requires equipping agents with more advanced context retrieval tools and memory components.

Finally, beyond code generation, it is also important to extend BlueCodeAgent to mitigate risks in other modalities, including text, image, video, and audio, as well as in multimodal applications.

The post BlueCodeAgent: A blue teaming agent enabled by automated red teaming for CodeGen AI appeared first on Microsoft Research.