This blog post covers how to build a chat history implementation using the Azure Cosmos DB for NoSQL Go SDK and langchaingo. If you are new to the Go SDK, the sample chatbot application presented in the blog serves as a practical introduction, covering basic operations such as read and upsert. It also demonstrates using the Azure Cosmos DB Linux-based emulator (in preview at the time of writing) for integration tests with Testcontainers for Go.

Go developers looking to build AI applications can use langchaingo, a framework for building applications powered by large language models (LLMs). It provides pluggable APIs for components such as vector stores, embeddings, document loading, chains (for composing multiple operations), chat history, and more.

Before diving in, let’s take a step back to understand the basics.

What is chat history, and why is it important for modern AI applications?

A common requirement for conversational AI applications is to be able to store and retrieve messages exchanged as part of conversations. This is often referred to as “chat history”. If you have used applications like ChatGPT (which also uses Azure Cosmos DB by the way!), you may be familiar with this concept. When a user logs in, they can start chatting and the messages exchanged as part of the conversation are saved. When they log in again, they can see their previous conversations and can continue from where they left off.

Chat history is obviously important for application end users, but let’s not forget about LLMs! As smart as LLMs might seem, they cannot recall past interactions due to lack of built-in memory (at least for now). Using chat history bridges this gap by providing previous conversations as additional context, enabling LLMs to generate more relevant and high-quality responses. This enhances the natural flow of conversations and significantly improves the user experience.

A simple example illustrates this: Suppose you ask an LLM via an API, "Tell me about Azure Cosmos DB" and it responds with a lengthy paragraph. If you then make another API call saying, "Break this down into bullet points for easier reading", the LLM might get confused because it lacks context from the previous interaction. However, if you include the earlier message as part of the context in the second API call, the LLM is more likely to provide an accurate response (though not guaranteed, as LLM outputs are inherently non-deterministic).
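To make "including the earlier message as context" concrete, here is a minimal, stdlib-only sketch: the request sent to the LLM is simply the stored history followed by the new user message. The Message type and buildRequest function are hypothetical illustrations; langchaingo has its own message types and does this for you when chat history is attached to a chain.

```go
package main

import "fmt"

// Message is a hypothetical, simplified chat message (not a langchaingo type).
type Message struct {
	Role    string // "human" or "ai"
	Content string
}

// buildRequest returns the messages to send to the LLM: every prior turn from
// the chat history, followed by the new user message. It allocates a new slice
// so the caller's history is never modified.
func buildRequest(history []Message, userInput string) []Message {
	req := make([]Message, 0, len(history)+1)
	req = append(req, history...)
	return append(req, Message{Role: "human", Content: userInput})
}

func main() {
	history := []Message{
		{Role: "human", Content: "Tell me about Azure Cosmos DB"},
		{Role: "ai", Content: "Azure Cosmos DB is a fully managed NoSQL and vector database..."},
	}
	// Without the history, the LLM would not know what "this" refers to.
	for _, m := range buildRequest(history, "Break this down into bullet points for easier reading") {
		fmt.Printf("%s: %s\n", m.Role, m.Content)
	}
}
```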

How to run the chatbot

As mentioned earlier, the sample application is a useful way to explore langchaingo, the Azure Cosmos DB chat history implementation, and the Go SDK.

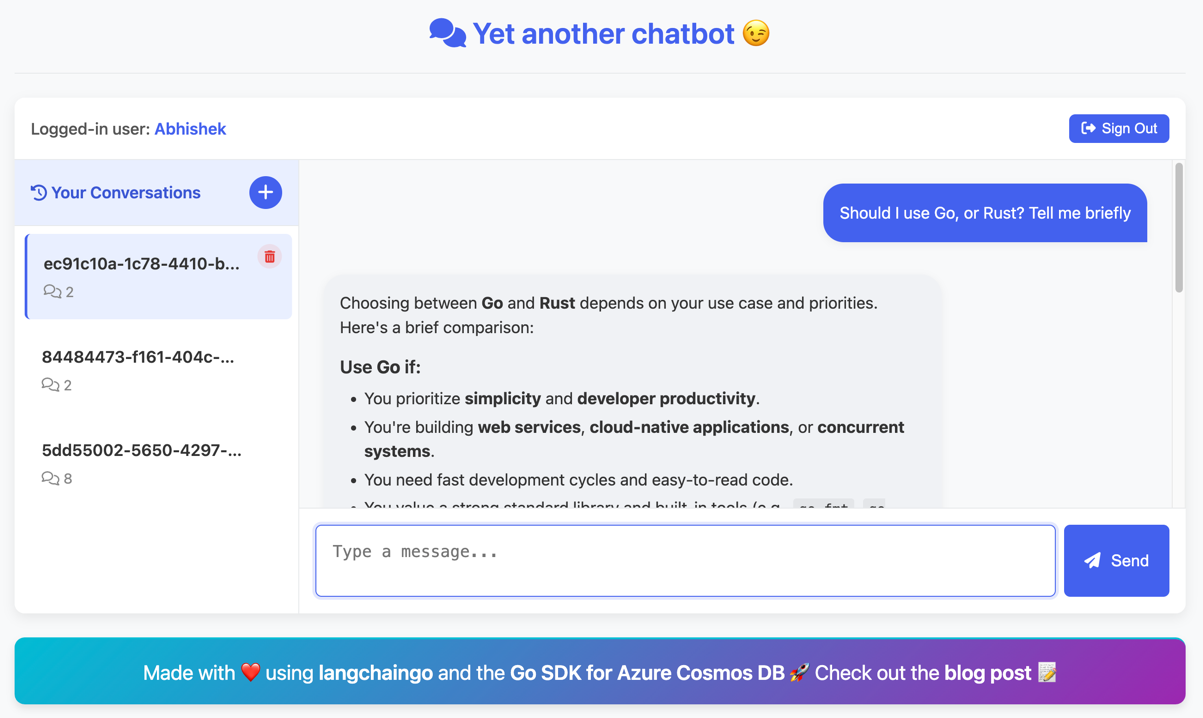

Before exploring the implementation details, it's a good idea to see the application in action. Refer to the README in the GitHub repository for instructions on how to configure, run, and start conversing with the chatbot.

Application overview

The chat application follows a straightforward domain model: users can initiate multiple conversations, and each conversation can contain multiple messages. Built in Go, the application includes both backend and frontend components.
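A minimal sketch of this domain model in Go. User, Conversation, and Message are hypothetical types chosen for illustration, not the sample's actual types:

```go
package main

import (
	"fmt"
	"time"
)

// Message is a single turn in a conversation.
type Message struct {
	Role      string // for example "human" or "ai"
	Content   string
	Timestamp time.Time
}

// Conversation groups the messages exchanged in one chat session.
type Conversation struct {
	ID       string
	Messages []Message
}

// User owns many conversations. Pointers are stored so that a *Conversation
// returned by StartConversation stays valid after later appends.
type User struct {
	ID            string
	Conversations []*Conversation
}

// StartConversation creates a new, empty conversation for the user.
func (u *User) StartConversation(id string) *Conversation {
	c := &Conversation{ID: id}
	u.Conversations = append(u.Conversations, c)
	return c
}

// AddMessage appends a timestamped message to the conversation.
func (c *Conversation) AddMessage(role, content string) {
	c.Messages = append(c.Messages, Message{Role: role, Content: content, Timestamp: time.Now().UTC()})
}

func main() {
	u := &User{ID: "user-1"}
	c := u.StartConversation("conv-1")
	c.AddMessage("human", "Tell me about Azure Cosmos DB")
	c.AddMessage("ai", "Azure Cosmos DB is a fully managed NoSQL database...")
	u.StartConversation("conv-2")
	fmt.Println(len(u.Conversations), len(c.Messages)) // 2 2
}
```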

- Backend: It has multiple sub-parts:

  - The Azure Cosmos DB chat history implementation.

  - Core operations like starting a chat, sending/receiving messages, and retrieving conversation history are exposed via a REST API.

  - The REST API leverages a langchaingo chain to handle user messages. The chain automatically incorporates chat history to ensure past conversations are sent to the LLM. langchaingo handles all orchestration (LLM invocation, chat history inclusion, and more) without requiring manual implementation.

- Frontend: It is built using JavaScript, HTML, and CSS. It is packaged as part of the Go web server (using the embed package), and invokes the backend REST APIs in response to user interactions.
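To illustrate the REST layer, here is a minimal net/http sketch of a single chat endpoint. The /api/chat route and the userId, sessionId, and message fields are assumptions and may not match the sample's actual API; the handler echoes the message instead of invoking a langchaingo chain, and callChat is a hypothetical helper that exercises the route in-process.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// chatRequest is a hypothetical request body; field names are assumptions.
type chatRequest struct {
	UserID    string `json:"userId"`
	SessionID string `json:"sessionId"`
	Message   string `json:"message"`
}

type chatResponse struct {
	Reply string `json:"reply"`
}

// handleChat decodes a user message and returns a JSON reply. A real handler
// would run the langchaingo chain here; this sketch only echoes the input.
func handleChat(w http.ResponseWriter, r *http.Request) {
	if r.Method != http.MethodPost {
		http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
		return
	}
	var req chatRequest
	if err := json.NewDecoder(r.Body).Decode(&req); err != nil {
		http.Error(w, "invalid request body", http.StatusBadRequest)
		return
	}
	w.Header().Set("Content-Type", "application/json")
	_ = json.NewEncoder(w).Encode(chatResponse{Reply: "echo: " + req.Message})
}

// newMux registers the hypothetical API route. In a real server, the embedded
// frontend files would be served from the same mux.
func newMux() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/api/chat", handleChat)
	return mux
}

// callChat POSTs body to /api/chat in-process (no network) and returns the
// status code and raw response body.
func callChat(body string) (int, string) {
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodPost, "/api/chat", strings.NewReader(body))
	newMux().ServeHTTP(rec, req)
	return rec.Code, rec.Body.String()
}

func main() {
	code, body := callChat(`{"userId":"user-1","sessionId":"conv-1","message":"hi"}`)
	fmt.Println(code, strings.TrimSpace(body)) // 200 {"reply":"echo: hi"}
}
```

To serve this for real, you would pass newMux() to http.ListenAndServe instead of calling it in-process.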

Chat history implementation using Azure Cosmos DB

langchaingo is a pluggable framework, including its chat history (or memory) component. To integrate Azure Cosmos DB, you need to implement the schema.ChatMessageHistory interface, which provides methods to manage the chat history:

- AddMessage to add messages to a conversation (or start a new one).

- Messages to retrieve all messages for a conversation.

- Clear to delete all messages in a conversation.

While you can directly instantiate a CosmosDBChatMessageHistory instance and use these methods, the recommended approach is to integrate it into your langchaingo application. Below is an example of using Azure Cosmos DB chat history with an LLMChain:

```go
// Inside an HTTP handler: cosmosClient, databaseName, containerName, req, w,
// sendErrorResponse, promptsTemplate, and llm are defined elsewhere in the sample.

// Create a chat history instance scoped to this user's conversation
cosmosChatHistory, err := cosmosdb.NewCosmosDBChatMessageHistory(cosmosClient, databaseName, containerName, req.SessionID, req.UserID)
if err != nil {
	log.Printf("Error creating chat history: %v", err)
	sendErrorResponse(w, "Failed to create chat session", http.StatusInternalServerError)
	return
}

// Create a memory backed by the chat history; the memory key is the input
// variable under which the history is exposed to the prompt
chatMemory := memory.NewConversationBuffer(
	memory.WithMemoryKey("chat_history"),
	memory.WithChatHistory(cosmosChatHistory),
)

// Create an LLM chain that loads and saves chat history through the memory
chain := chains.LLMChain{
	Prompt:       promptsTemplate,
	LLM:          llm,
	Memory:       chatMemory,
	OutputParser: outputparser.NewSimple(),
	OutputKey:    "text",
}
```

From an Azure Cosmos DB point of view, note that the implementation in this example is just one of many possible options. It uses the user ID as the partition key and the conversation ID (sometimes also referred to as the session ID) as the unique key (the id of an Azure Cosmos DB item).

This allows an application to:

- Get all the messages for a conversation – This is a point read using the unique id (conversation ID) and partition key (user ID).

- Add a new message to a conversation – It uses an upsert operation (instead of create) to avoid the need for a read before write.

- Delete a specific conversation – It uses the delete operation to remove a conversation (and all its messages).

Although the langchaingo interface does not expose it, when integrating this into an application you can also issue a separate query to get all the conversations for a user. This is also efficient, since it's scoped to a single partition.

Simplify testing with Azure Cosmos DB emulator and testcontainers

The sample application includes basic test cases for both the Azure Cosmos DB chat history and the main application. It is worth highlighting the use of testcontainers-go to run the Azure Cosmos DB Linux-based emulator Docker container.

This is great for integration tests since the database is available locally and the tests run much faster (let's not forget the cost savings as well!). The icing on the cake is that you do not need to manage the Docker container lifecycle manually. This is taken care of as part of the test suite, thanks to the testcontainers-go API, which makes it convenient to start the container before the tests run and terminate it once they complete.

You can refer to the test cases in the sample application for more details. Here is a snippet of how testcontainers-go is used:

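```go
import (
	"context"
	"fmt"
	"time"

	"github.com/docker/go-connections/nat"
	"github.com/testcontainers/testcontainers-go"
	"github.com/testcontainers/testcontainers-go/wait"
)

// setupCosmosEmulator starts the Azure Cosmos DB Linux-based emulator in a
// Docker container. emulatorImage and emulatorPort are defined elsewhere in
// the sample's test code.
func setupCosmosEmulator(ctx context.Context) (testcontainers.Container, error) {
	req := testcontainers.ContainerRequest{
		Image:        emulatorImage,
		ExposedPorts: []string{emulatorPort + ":8081", "1234:1234"},
		// Block until the emulator's port accepts connections
		WaitingFor: wait.ForListeningPort(nat.Port(emulatorPort)),
	}

	container, err := testcontainers.GenericContainer(ctx, testcontainers.GenericContainerRequest{
		ContainerRequest: req,
		Started:          true,
	})
	if err != nil {
		return nil, fmt.Errorf("failed to start container: %w", err)
	}

	// Give the emulator a bit more time to fully initialize
	time.Sleep(5 * time.Second)

	return container, nil
}
```

Wrapping Up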

Being able to store chat history is an important part of conversational AI apps. It can serve as a great complement to existing techniques such as RAG (Retrieval Augmented Generation). Do try out the chatbot application and let us know what you think!

While the implementation in the sample application is relatively simple, how you model the chat history data depends on your requirements. One such scenario is presented in this excellent blog post on How Microsoft Copilot scales to millions of users with Azure Cosmos DB.

Some of your requirements might include:

- Storing metadata, such as reactions (in addition to messages)

- Showing top N recent messages

- Considering chat history data retention period (using TTL)

- Incorporating additional analytics (on user interactions) based on the chat history data, and more.

Irrespective of the implementation, always make sure to incorporate best practices for data modeling. Refer to How to model and partition data on Azure Cosmos DB using a real-world example for guidelines.

Leave a review

Tell us about your Azure Cosmos DB experience! Leave a review on PeerSpot and we’ll gift you $50. Get started here.

About Azure Cosmos DB

Azure Cosmos DB is a fully managed and serverless NoSQL and vector database for modern app development, including AI applications. With its SLA-backed speed and availability as well as instant dynamic scalability, it is ideal for real-time NoSQL and MongoDB applications that require high performance and distributed computing over massive volumes of NoSQL and vector data.

Try Azure Cosmos DB for free here. To stay in the loop on Azure Cosmos DB updates, follow us on X, YouTube, and LinkedIn.

The post Implementing Chat History for AI Applications Using Azure Cosmos DB Go SDK appeared first on Azure Cosmos DB Blog.