Introduction

As generative AI's adoption rapidly expands across various industries, integrating it into products, services, and operations becomes increasingly commonplace. However, it's crucial to address the environmental implications of such advancements, including their energy consumption, carbon footprint, water usage, and electronic waste, throughout the generative AI lifecycle. This lifecycle, often referred to as large language model operations (LLMOps), encompasses everything from model development and training to deployment and ongoing maintenance, all of which demand diligent resource optimisation.

This guide aims to extend Azure’s Well-Architected Framework (WAF) for sustainable workloads to the specific challenges and opportunities presented by generative AI. We'll explore essential decision points, such as selecting the right models, optimising fine-tuning processes, leveraging Retrieval Augmented Generation (RAG), and mastering prompt engineering, all through a lens of environmental sustainability. By providing these targeted suggestions and best practices, we equip practitioners with the knowledge to implement generative AI not only effectively, but responsibly.

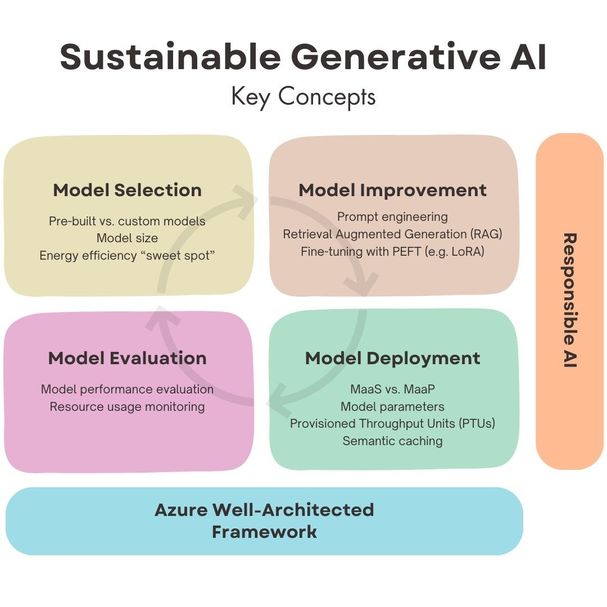

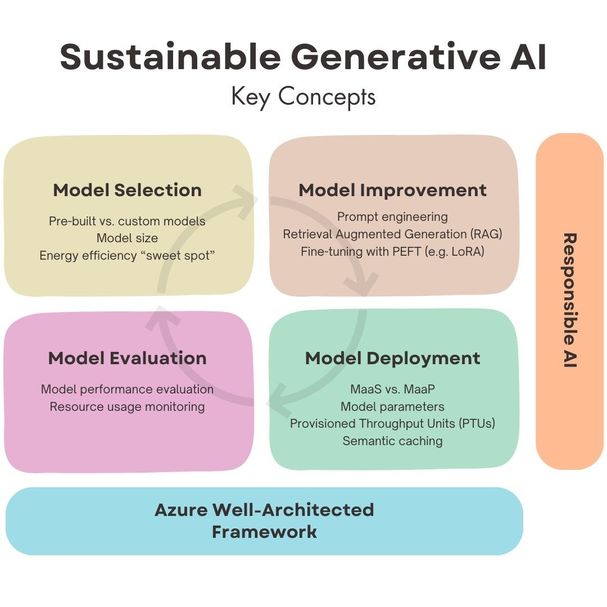

Image Description: A diagram titled "Sustainable Generative AI: Key Concepts" divided into four quadrants. Each quadrant contains bullet points summarising the key aspects of sustainable AI discussed in this article.

Select the foundation model

Choosing the right base model is crucial to optimising energy efficiency and sustainability within your AI initiatives. Consider this framework as a guide for informed decision-making:

Pre-built vs. Custom Models

When embarking on a generative AI project, one of the first decisions you'll face is whether to use a pre-built model or train a custom model from scratch. While custom models can be tailored to your specific needs, the process of training them requires significant computational resources and energy, leading to a substantial carbon footprint. For example, training an LLM the size of GPT-3 is estimated to consume nearly 1,300 megawatt hours (MWh) of electricity. In contrast, initiating projects with pre-built models can conserve vast amounts of resources, making it an inherently more sustainable approach.

Azure AI Studio's comprehensive model catalogue is an invaluable resource for evaluating and selecting pre-built models based on your specific requirements, such as task relevance, domain specificity, and linguistic compatibility. The catalogue provides benchmarks covering common metrics like accuracy, coherence, and fluency, enabling informed comparisons across models. Additionally, you can test select models before deployment to ensure they meet your needs.

Choosing a pre-built model doesn't limit your ability to customise it to your unique scenarios. Techniques like fine-tuning and retrieval augmented generation (RAG) allow you to adapt pre-built models to your specific domain or task without the need for resource-intensive training from scratch. This enables you to achieve highly tailored results while still benefiting from the sustainability advantages of using pre-built models, striking a balance between customisation and environmental impact.

Model Size

A model's parameter count correlates strongly with both its performance and its resource demands. Before defaulting to the largest available models, consider whether more compact alternatives, such as Microsoft’s Phi-2, Mistral AI’s Mixtral 8x7B, or similarly sized models, could suffice for your needs. The efficiency "sweet spot", the point beyond which performance gains no longer justify the increased size and energy consumption, is critical for sustainable AI deployment. Opting for smaller, fine-tuneable models (known as small language models, or SLMs) can result in substantial energy savings without compromising effectiveness.

| Model Selection | Considerations | Sustainability Impact |
| --- | --- | --- |
| Pre-built Models | Leverage existing models and customise with fine-tuning, RAG and prompt engineering | Reduces training-related emissions |
| Custom Models | Tailor models to specific needs and customise further if needed | Higher carbon footprint due to training |
| Model Size | Larger models offer better output performance but require more resources | Balancing performance and efficiency is crucial |

Improve the model’s performance

Improving your AI model's performance involves strategic prompt engineering, grounding the model in relevant data, and potentially fine-tuning for specific applications. Consider these approaches:

Prompt Engineering

The art of prompt engineering lies in crafting inputs that elicit the most effective and efficient responses from your model, serving as a foundational step in customising its output to your needs. Beyond following the detailed guidelines from the likes of Microsoft and OpenAI, understanding the core principles of prompt construction, such as clarity, context, and specificity, can drastically improve model performance. Well-tuned prompts not only lead to better output quality but also contribute to sustainability by reducing the number of tokens required and the overall compute resources consumed. By getting the desired output in fewer input-output cycles, you inherently reduce the carbon emitted per interaction. Orchestration frameworks like prompt flow and Semantic Kernel facilitate experimentation and refinement, adding version control and reusable templates that help make prompts more effective.
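As a rough illustration of a reusable prompt template, the sketch below uses only the Python standard library. The template wording, the `build_prompt` and `estimate_tokens` helpers, and the four-characters-per-token heuristic are all assumptions for illustration. They are not part of prompt flow or Semantic Kernel, and a real tokenizer will give different counts.

```python
from string import Template

# Hypothetical reusable template: it states the role, the task, and the
# expected output length explicitly (clarity, context, specificity).
SUMMARY_PROMPT = Template(
    "You are a support assistant for $product.\n"
    "Summarise the customer message below in at most $max_sentences sentences.\n"
    "Message: $message"
)


def build_prompt(product: str, message: str, max_sentences: int = 2) -> str:
    """Fill the template so every request uses the same concise wording."""
    return SUMMARY_PROMPT.substitute(
        product=product, message=message, max_sentences=max_sentences
    )


def estimate_tokens(text: str) -> int:
    """Very rough estimate: about four characters per token for English text."""
    return max(1, len(text) // 4)


prompt = build_prompt("Contoso Router", "My device keeps dropping Wi-Fi.")
print(prompt)
print("Estimated tokens:", estimate_tokens(prompt))
```

Tracking an estimate like this across prompt versions gives a simple signal of whether a revision is making requests longer or shorter.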

Retrieval Augmented Generation (RAG)

Integrating RAG with your models taps into existing datasets, leveraging organisational knowledge without the resources required for model training or extensive fine-tuning. This approach underscores the importance of how and where data is stored and accessed, since its effectiveness and carbon efficiency are highly dependent on the quality and relevance of the retrieved data. End-to-end solutions like Microsoft Fabric facilitate comprehensive data management, while Azure AI Search makes information retrieval more efficient through hybrid search, which combines vector and keyword search techniques. In addition, frameworks like prompt flow and Semantic Kernel enable you to build RAG solutions with Azure AI Studio.
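To make the idea of hybrid search more concrete, here is a minimal sketch of Reciprocal Rank Fusion (RRF), a common way to merge a keyword ranking and a vector ranking into one list. The document IDs are made up and the constant `k=60` is a conventional default. This illustrates the technique and is not the service's actual implementation.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into a single ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked highly by more than one retriever rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for a stable result.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword query and a vector query.
keyword_results = ["doc_a", "doc_b", "doc_c"]
vector_results = ["doc_b", "doc_d", "doc_a"]

print(reciprocal_rank_fusion([keyword_results, vector_results]))
# ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

Passing only the top few fused results to the model keeps the prompt short, which is where the relevance of retrieved data translates into lower token usage.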

Fine-tuning

For domain-specific adjustments or to address knowledge gaps in pre-trained models, fine-tuning is a tailored approach. Although it involves additional computation, fine-tuning can be a more sustainable option than training a model from scratch or repeatedly passing large amounts of context via prompts and organisational data for each query. Azure OpenAI uses PEFT (parameter-efficient fine-tuning) techniques such as LoRA (low-rank adaptation), which require far fewer computational resources than full fine-tuning. Not all models support fine-tuning, so consider this in your base model selection.
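The saving from LoRA can be seen with simple arithmetic. Instead of updating a full weight matrix of shape d_out x d_in, LoRA trains two small matrices of shapes d_out x r and r x d_in. The layer size and rank below are illustrative assumptions, not the settings of any particular Azure OpenAI model.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_update_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA update B (d_out x r) times A (r x d_in)."""
    return rank * (d_out + d_in)


# Illustrative sizes only: one 4096 x 4096 projection with rank 8.
full = full_update_params(4096, 4096)
lora = lora_update_params(4096, 4096, rank=8)

print(full, lora, full // lora)  # 16777216 65536 256
```

For this single layer the low-rank update trains 256 times fewer parameters. The actual energy saving depends on the model, the rank, and which layers are adapted.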

| Model Improvement | Considerations | Sustainability Impact |
| --- | --- | --- |
| Prompt Engineering | Optimise prompts for more relevant output | Low carbon impact vs. fine-tuning, but consistently long prompts may reduce efficiency |
| Retrieval Augmented Generation (RAG) | Leverages existing data to ground model | Low carbon impact vs. fine-tuning, depending on relevance of retrieved data |
| Fine-tuning (with PEFT) | Adapt to specific domains or tasks not encapsulated in base model | Carbon impact depends on model usage and lifecycle, recommended over full fine-tuning |

Deploy the model

Azure AI Studio simplifies model deployment, offering various pathways depending on your chosen model. Embracing Microsoft's management of the underlying infrastructure often leads to greater efficiency and reduced responsibility on your part.

MaaS vs. MaaP

Model-as-a-Service (MaaS) provides a seamless API experience for deploying models like Llama 3 and Mistral Large, eliminating the need for direct compute management. With MaaS, you deploy a pay-as-you-go endpoint to your environment, while Azure handles all other operational aspects. This approach is often favoured for its energy efficiency, as Azure optimises the underlying infrastructure, potentially leading to a more sustainable use of resources. MaaS can be thought of as a SaaS-like experience applied to foundation models, providing a convenient and efficient way to leverage pre-trained models without the overhead of managing the infrastructure yourself.

On the other hand, Model-as-a-Platform (MaaP) caters to a broader range of models, including those not available through MaaS. When opting for MaaP, you create a real-time endpoint and take on the responsibility of managing the underlying infrastructure. This approach can be seen as a PaaS offering for models, combining the ease of deployment with the flexibility to customise the compute resources. However, choosing MaaP requires careful consideration of the sustainability trade-offs outlined in the WAF, as you have more control over the infrastructure setup. It's essential to strike a balance between customisation and resource efficiency to ensure a sustainable deployment.

Model Parameters

Tailoring your model's deployment involves adjusting various parameters, such as temperature, top p, frequency penalty, presence penalty, and max response, to align with the expected output. Understanding and adjusting these parameters can significantly enhance model efficiency. For example, capping max response limits how many tokens are generated per request. By optimising responses to reduce the need for extensive context or fine-tuning, you lower memory use and, consequently, energy consumption.
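As a sketch of how these settings might be grouped and checked before a request is sent, the helper below builds a plain dictionary using the parameter names from the OpenAI-style chat completions API, where `max_tokens` corresponds to max response. The helper name and default values are illustrative assumptions rather than recommendations.

```python
def build_request_options(
    max_tokens: int = 150,
    temperature: float = 0.2,
    top_p: float = 0.95,
    frequency_penalty: float = 0.0,
    presence_penalty: float = 0.0,
) -> dict:
    """Validate generation settings and return them as request options."""
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    for name, value in (
        ("frequency_penalty", frequency_penalty),
        ("presence_penalty", presence_penalty),
    ):
        if not -2.0 <= value <= 2.0:
            raise ValueError(f"{name} must be between -2 and 2")
    return {
        "max_tokens": max_tokens,  # caps the length of each response
        "temperature": temperature,
        "top_p": top_p,
        "frequency_penalty": frequency_penalty,
        "presence_penalty": presence_penalty,
    }


# A short, low-variance configuration for a summarisation-style task.
print(build_request_options(max_tokens=100, temperature=0.0))
```

Keeping the options in one validated place makes it easier to review the response cap for each use case instead of relying on defaults.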

Provisioned Throughput Units (PTUs)

Provisioned Throughput Units (PTUs) are designed to improve model latency and ensure consistent performance, and they serve a dual purpose for sustainability. Firstly, by allocating dedicated capacity, PTUs mitigate the risk of API timeouts, a common source of inefficiency that can lead to unnecessary repeat requests by the end application. This conserves computational resources. Secondly, PTUs grant Microsoft valuable insight into anticipated demand, facilitating more effective data centre capacity planning.

Semantic Caching

Implementing caching mechanisms for frequently used prompts and completions can significantly reduce the computational resources and energy consumption of your generative AI workloads. Consider using in-memory caching services like Azure Cache for Redis for high-speed access and persistent storage solutions like Azure Cosmos DB for longer-term storage. Ensure the relevance of cached results through appropriate invalidation strategies. By incorporating caching into your model deployment strategy, you can minimise the environmental impact of your deployments while improving efficiency and response times.
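The following is a minimal in-memory sketch of the idea, assuming prompts have already been converted to embedding vectors by some embedding model. The `SemanticCache` class, the similarity threshold, and the time-to-live invalidation are hypothetical choices for illustration. A production setup would keep the entries in a service such as Azure Cache for Redis rather than a Python list.

```python
import math
import time


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Return a stored completion when a new prompt is similar enough."""

    def __init__(self, threshold=0.9, ttl_seconds=3600.0, clock=time.monotonic):
        self.threshold = threshold
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._entries = []  # (embedding, completion, stored_at)

    def put(self, embedding, completion):
        self._entries.append((list(embedding), completion, self._clock()))

    def get(self, embedding):
        now = self._clock()
        # Invalidation: drop entries older than the time-to-live.
        self._entries = [e for e in self._entries if now - e[2] < self.ttl_seconds]
        best, best_score = None, self.threshold
        for stored, completion, _ in self._entries:
            score = cosine_similarity(embedding, stored)
            if score >= best_score:
                best, best_score = completion, score
        return best  # None means a cache miss, so call the model


cache = SemanticCache(threshold=0.9)
cache.put([1.0, 0.0], "Cached answer about password resets")
print(cache.get([0.99, 0.05]))  # similar prompt: served from the cache
print(cache.get([0.0, 1.0]))    # unrelated prompt: None
```

The threshold is the main trade-off. Setting it too low returns stale or irrelevant answers, while setting it too high sends nearly identical prompts back to the model.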

| Model Deployment | Considerations | Sustainability Impact |
| --- | --- | --- |
| MaaS | Serverless deployment, managed infrastructure | Lower carbon intensity due to optimised infrastructure |
| MaaP | Flexible deployment, self-managed infrastructure | Higher carbon intensity, requires careful resource management |
| PTUs | Dedicated capacity for consistent performance | Improves efficiency by avoiding API timeouts and redundant requests |
| Semantic Caching | Store and reuse frequently accessed data | Reduces redundant computations, improves efficiency |

Evaluate the model’s performance

Model Evaluation

As base models evolve and user needs shift, regular assessment of model performance becomes essential. Azure AI Studio facilitates this through its suite of evaluation tools, enabling both manual and automated comparison of actual outputs against expected ones across various metrics, including groundedness, fluency, relevancy, and F1 score. Importantly, assessing performance also means scrutinising your model for risk and safety concerns, such as the presence of self-harm, hateful, and unfair content, to ensure compliance with an ethical AI framework.
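To show what a comparison of actual against expected output can look like, here is a sketch of one common definition of F1 score for text: the harmonic mean of word-level precision and recall between a generated answer and a reference answer. The lowercase whitespace tokenisation is an assumption, and the metric in Azure AI Studio may normalise text differently.

```python
from collections import Counter


def f1_score(prediction: str, reference: str) -> float:
    """Word-overlap F1 between a generated answer and a reference answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Two empty strings match; one empty string cannot match anything.
        return float(pred_tokens == ref_tokens)
    # Count words shared by both texts, respecting repeated words.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(f1_score("the cat sat", "the cat sat"))  # 1.0
print(f1_score("blue sky", "green grass"))     # 0.0
```

Running a metric like this over a fixed evaluation set after each prompt or model change gives a repeatable check that an efficiency gain has not come at the cost of quality.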

Model Performance

Model deployment strategy—whether via MaaS or MaaP—affects how you should monitor resource usage within your Azure environment. Key metrics like CPU, GPU, memory utilisation, and network performance are vital indicators of your infrastructure's health and efficiency. Tools like Azure Monitor and Azure carbon optimisation offer comprehensive insights, helping you check that your resources are allocated optimally. Consult the Azure Well-Architected Framework for detailed strategies on balancing performance enhancements with cost and energy efficiency, such as deploying to low-carbon regions, ensuring your AI implementations remain both optimal and sustainable.

A Note on Responsible AI

While sustainability is the main focus of this guide, it's important to also consider the broader context of responsible AI. Microsoft's Responsible AI Standard provides valuable guidance on principles like fairness, transparency, and accountability. Technical safeguards, such as Azure AI Content Safety, play a role in mitigating risks but should be part of a comprehensive approach that includes fostering a culture of responsibility, conducting ethical reviews, and combining technical, ethical, and cultural considerations. By taking a holistic approach, we can work towards the responsible development and deployment of generative AI while addressing potential challenges and promoting its ethical use.

Conclusion

As we explore the potential of generative AI, it’s clear that its use cases will continue to grow quickly. This makes it crucial to keep the environmental impact of our AI workloads in mind.

In this guide, we’ve outlined some key practices to help prioritise the environmental aspect throughout the lifecycle. With the field of generative AI changing rapidly, make sure to stay up to date with its latest developments and keep learning.

Contributions

Special thanks to the UK GPS team who reviewed this article before it was published, in particular Michael Gillett, George Tubb, Lu Calcagno, Sony John, and Chris Marchal.