Peter Wang sees clear parallels between how enterprises adopted open source software years ago and what many of these organizations are doing today as they embrace AI. Just as they did in response to Windows and other vendor-driven software back then, companies today want to control their own destiny when it comes to AI, and they are again turning to open source.

“That’s very much the same thing we saw with open source software, where people said, ‘This is great. I can hack on stuff, but I need to know what the bits are, and I’m enterprise-consuming open source,’” Wang, co-founder and chief AI and innovation officer at Python and R distribution vendor Anaconda, told The New Stack. “There are all sorts of companies and vendors and products they use to manage that. They want the innovation that comes from the open source ecosystem, but they have to do it on their own terms.”

Peter Wang

The Austin, Texas-based company wants to help them do that with the Anaconda AI Platform, a new offering designed to give enterprises all the tools and capabilities they need to build, deploy and secure production-level AI systems in an open source environment.

“It basically builds on the learnings that we’ve had from decades in the open source world at bridging the open source and enterprise needs,” Wang said. “It provides a secure distribution. It’s a trusted platform where people can go and get the models they want. They can have a private model repository where the IT organization or the enterprise itself gets to govern and say these are the models that you can go and use. It’s actually very much what we did for Python. We’ve made it easier to use, we made it consistent, we made it enterprise-ready and we made it secure.”

Open Source AI Is on an Upward Trend

Innovation around AI continues at a rapid pace. A little more than two years separate the launch of OpenAI’s ChatGPT chatbot, which brought generative AI (GenAI) into the mainstream, from the arrival of agentic AI and reasoning AI, which bring even more automation and autonomy to the technology.

Anaconda’s platform is designed to give enterprises the tools to adopt these innovations while protecting the proprietary data that AI models increasingly rely on. It also arrives at a time when companies are turning their attention to open source.

According to a report released last month by global consultancy McKinsey and Co., more than half of the 700-plus tech leaders and senior developers surveyed said their companies use open source AI tools alongside proprietary technologies from companies such as OpenAI, Google and Anthropic. The report also found that organizations that put a high priority on AI are more than 40% more likely to use open source AI models and tools.

In particular, “developers … increasingly view experience with open source AI as an important part of their overall job satisfaction,” the report’s authors wrote. “While open source solutions come with concerns about security and time to value, more than three-quarters of survey respondents expect to increase their use of open source AI in the years ahead.”

Bringing AI In-House

Wang said the Anaconda AI Platform was built for those people. Companies, whether long-established enterprises or startups, are increasingly looking to run AI in self-hosted or on-premises environments, and that comes with a range of challenges. Not only does the technology need to be easy to use, it also needs to be secure. The AI models that businesses are building rely on proprietary data, which makes security critical.

It’s a key difference between enterprises’ adoption of open source software years ago and their adoption of open source AI today, a concept that is still hazily defined but increasingly popular.

“The one thing that really jams the enterprise brain around this is that enterprises, even with open source software, generally didn’t consume open source data sets,” Wang said.

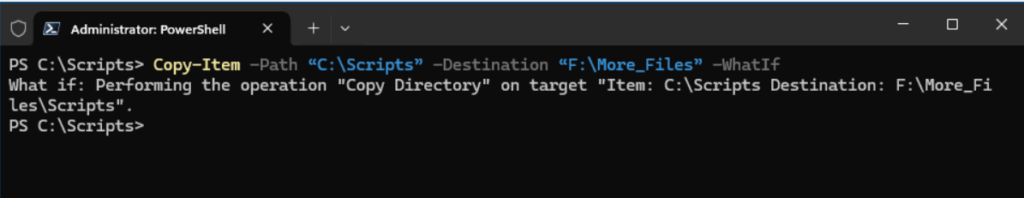

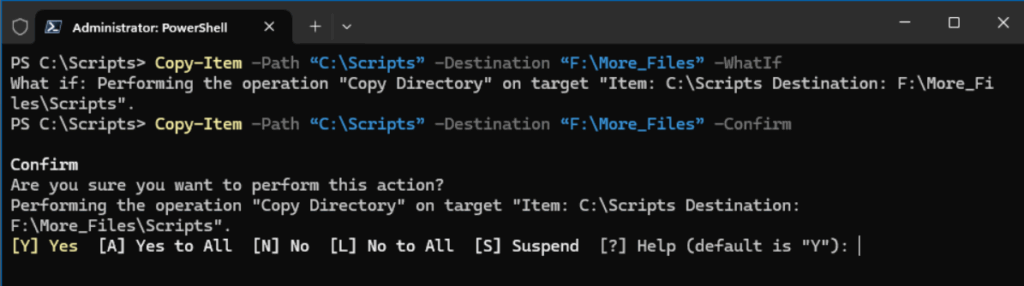

AI models are a fusion of data and code, he said, which makes them attractive targets for threat groups running complex, long-term, multistage attacks that often leverage AI themselves. Features within the Anaconda AI Platform, including Unified CLI Authentication and Enterprise Single Sign-On, are designed to address those security concerns.

Other features in the platform include the Quick Start Environment, which delivers preconfigured, security-vetted environments for Python, finance, and AI and machine learning (ML) development. Error tracking and logging capabilities provide real-time monitoring of workflows, helping developers detect and resolve issues more quickly. There are also governance features to help ensure compliance with regulations such as the EU’s General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA) in the United States.

Anaconda Dashboard.

Serving the Underserved

Through the AI platform, Anaconda is looking to do for AI developers what it did for people adopting Python for the first time, Wang said. That effort wasn’t only about offering cool software; it was about recognizing that a demographic of users, such as domain professionals and numerical computing experts, was underserved and adopting the programming language in a grassroots way.

It took years for IT teams to see this as more than just shadow IT and to understand that a point-and-click business intelligence tool did nothing for those who needed to run quantitative analysis jobs, he said.

“There is still a sort of conceit or arrogance of IT saying, ‘We’re the technology organization,’” he said. “‘We have the MLOps and the DevOps and sysadmins. We have the software developers, we have the DBAs, and all of you in the lines of business, leave this to the adults.’”

Python gave those underserved groups the ability to run their own high-end data analytics and ML jobs, empowering end-user programming and lines of business, and Python will do the same for AI. Programmers can simply tell large language models (LLMs) to look at business data in a particular database, write a query to pull the data out and then write Python code to transform it. But the models can also write the wrong code or pull the wrong data.
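The workflow Wang describes, in which a model writes a query and then Python code to transform the results, can be sketched with the standard library’s `sqlite3` module. This is a minimal, hypothetical illustration: the `sales` table, the `GENERATED_SQL` string (standing in for model output) and the `run_and_transform` helper are all invented here, and the column check is only a crude guard against the wrong-data problem, not a feature of any Anaconda product.

```python
import sqlite3

# Hypothetical stand-in for SQL an LLM might generate. In practice this string
# would come from the model, which is why the result is checked before use.
GENERATED_SQL = "SELECT region, amount FROM sales WHERE year = 2024 ORDER BY region"


def run_and_transform(conn: sqlite3.Connection, sql: str) -> dict[str, float]:
    """Run a (model-generated) query, verify its shape, then total amounts by region."""
    cur = conn.execute(sql)
    columns = [d[0] for d in cur.description]
    # Crude guard against the model pulling the wrong data: require the
    # exact columns the transformation below expects.
    if columns != ["region", "amount"]:
        raise ValueError(f"unexpected columns from generated query: {columns}")
    totals: dict[str, float] = {}
    for region, amount in cur.fetchall():
        totals[region] = totals.get(region, 0.0) + amount
    return totals


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL, year INTEGER)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [("east", 100.0, 2024), ("west", 50.0, 2024), ("east", 25.0, 2023)],
    )
    print(run_and_transform(conn, GENERATED_SQL))  # {'east': 100.0, 'west': 50.0}
```

A shape check like this catches only one class of mistake; a query that returns the right columns from the wrong rows would still pass, which is part of why human review of generated code and data still matters.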

The Need for Security

That’s where the danger lies, particularly given the large number of people using the technology. Because it’s so new and innovation is happening so quickly, these users can often create new technologies and deploy software with no real idea of what they’re doing, Wang said.

“So not only is the thing they’re playing with more dangerous, but the sheer number of people that are going to be doing this is way more,” he said. “That’s why with our AI platform, we’re hoping to take the learnings we had from onboarding a set of non-developer programmers in the data science and machine learning world and say, ‘All these other non-developer vibe coders — that’s literally everyone in the business — how do we help some of the adults in the room actually manage their experience, give them working agents with well-defined sandboxes and dev containers and these other kinds of things, a valid set of libraries that the LLM agent is allowed to use?’”
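One way to approximate “a valid set of libraries that the LLM agent is allowed to use” is to statically check agent-generated code against an allowlist before running it. The sketch below is a hypothetical illustration using Python’s standard `ast` module; `ALLOWED_LIBRARIES` and `disallowed_imports` are invented names, not part of the Anaconda AI Platform.

```python
import ast

# Hypothetical allowlist an IT team might approve for agent-generated code.
# Illustrative assumption only; not an Anaconda platform feature or API.
ALLOWED_LIBRARIES = {"pandas", "numpy", "sqlite3", "json", "math"}


def disallowed_imports(source: str, allowed: set[str] = ALLOWED_LIBRARIES) -> set[str]:
    """Return top-level module names imported by `source` that are not allowed."""
    tree = ast.parse(source)
    found: set[str] = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            # `import a.b as c` counts as importing the top-level package "a".
            for alias in node.names:
                found.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # Relative imports (`from . import x`) are treated as local and skipped.
            if node.module and node.level == 0:
                found.add(node.module.split(".")[0])
    return found - allowed


if __name__ == "__main__":
    agent_code = "import pandas as pd\nimport requests\nfrom os import path\n"
    print(sorted(disallowed_imports(agent_code)))  # ['os', 'requests']
```

A static check like this misses dynamic imports such as `importlib.import_module`, which is one reason the sandboxes and dev containers Wang mentions would still be needed as an additional layer.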

Anaconda ToolBox in Notebooks.

Python’s Not Going Anywhere

Wang also pushed back on the notion that Java is on its way to dethroning Python as the programming language of choice for AI. Simon Ritter, deputy CTO at Java platform developer Azul Systems, told The New Stack earlier this year that Java could cut into Python’s lead within the next 18 months to three years, based on a survey of Java developers.

Wang noted that, increasingly, code will be written more by machines than by people, and AI companies are already touting that. Anthropic lead engineer Boris Cherny said that 80% of the code the company uses is written by its Claude AI model, and Microsoft CEO Satya Nadella said that 20–30% of the code in the company’s repositories was written by AI.

However, people will still need to look at AI-written code, deploy it and tweak it themselves, Wang said. Given that, it will matter more for a language to be easy for as many people as possible to read than for it to have particular characteristics for writing or executing code.

“Python is easy to write, easy to learn,” he said. “That’s a great differentiating feature. … It’s pretty concise, even though people make fun of it sometimes as a teaching language: ‘It’s slow, it’s a scripting language, not a real systems language.’ The thing is, if you want to express some numerical ideas, if you want to express data transformations, Python is pretty darn concise compared to doing a bunch of for loops over strings in C++ or Java.”
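As a small, hypothetical illustration of Wang’s point about expressing data transformations concisely, the function below groups invented salary records by department and averages them using only the standard library. The data, field names and `average_salary_by_dept` helper are assumptions made for this example.

```python
from statistics import mean

# Hypothetical CSV-style records; names and fields are invented for illustration.
RAW = """name,dept,salary
alice,eng,120000
bob,sales,80000
carol,eng,135000
dan,sales,70000"""


def average_salary_by_dept(raw: str) -> dict[str, float]:
    """Parse the records and return the mean salary per department, sorted by name."""
    # Skip the header row, then split each remaining line into its fields.
    rows = [line.split(",") for line in raw.splitlines()[1:]]
    groups: dict[str, list[float]] = {}
    for _, dept, salary in rows:
        groups.setdefault(dept, []).append(float(salary))
    # One comprehension expresses "for each department, take the average".
    return {dept: mean(values) for dept, values in sorted(groups.items())}


if __name__ == "__main__":
    print(average_salary_by_dept(RAW))  # {'eng': 127500.0, 'sales': 75000.0}
```

With pandas installed, the same grouping is commonly written as `df.groupby("dept")["salary"].mean()`; the standard-library version simply keeps the sketch dependency-free.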

That, he said, will make it a key programming language for AI.

“The conciseness of Python, the adaptability of it, the readability, all of these things I think factor into what will make it the most widely read language, if not the most widely written,” he said. “It’ll also be very widely written, but I think it’ll certainly be the most widely read.”

The post Python’s Open Source DNA Powers Anaconda’s New AI Platform appeared first on The New Stack.