Prompt engineering is the practice of designing effective inputs to guide AI systems toward more accurate, useful, and context-aware outputs. It is increasingly applied in areas such as business automation, creative work, research, and education, offering clear benefits in efficiency and accessibility. At the same time, challenges such as scalability, bias, and the trial-and-error nature of prompting underscore the need for structured approaches and best practices. Looking ahead, advancements in automation, ethical frameworks, and industry-specific tools will shape the future of prompt engineering, making it a critical skill for AI-driven innovation.

What is prompt engineering?

Prompt engineering acts as a bridge between humans and machines. To be more precise, prompt engineering is the process of creating and refining the instructions given to an AI model to improve the accuracy and relevance of its responses. Because systems like ChatGPT and Claude generate outputs based on the way prompts are phrased, even small changes in wording, structure, or context can have a significant impact on results.

By designing high-quality prompts, users can help AI models produce outputs that align with specific goals, whether that’s generating content, automating business tasks, or solving technical problems.

What is prompt engineering used for?

Prompt engineering supports a wide range of tasks, including:

- Content creation: Writing articles, marketing copy, or social media posts for a specific audience.

- Software development: Assisting with code generation, debugging, and explaining complex programming concepts in natural language.

- Customer support: Helping chatbots and virtual assistants provide accurate, empathetic, and context-aware responses.

- Education and training: Creating study guides, practice problems, or simplified explanations for learners at different levels.

- Research and analysis: Summarizing documents, highlighting key insights, or comparing data from multiple sources.

- Business operations: Drafting emails, creating reports, or automating repetitive tasks.

Benefits of prompt engineering

A good prompt can make the difference between a vague, useless AI response and a clear, actionable one. Some specific benefits of prompt engineering include:

- Improved accuracy: Well-crafted prompts reduce misunderstandings and guide AI toward outputs that align closely with user intent.

- Efficiency and productivity: Clear instructions reduce the need for repeated edits or regenerations, saving time and effort.

- Versatility across domains: From business operations to creative writing, prompt engineering enables AI to be applied to a wide variety of uses.

- Accessibility for non-experts: Users without coding or data science backgrounds can still achieve high-quality results through carefully designed prompts.

- Consistency in outputs: Standardized prompts facilitate repeatable results, particularly in enterprise or team settings.

- Improved creativity: By framing prompts in innovative ways, users can encourage AI to generate fresh ideas and perspectives.

Ultimately, prompt engineering helps make AI a more predictable and dependable partner for problem solving and creative endeavors.

Challenges of prompt engineering

Understanding the challenges associated with prompt engineering is key to using prompts effectively and responsibly. Here are some potential issues you may encounter:

- Trial and error required: Crafting effective prompts often involves multiple iterations before achieving the desired outcome.

- Model limitations: Even with well-structured prompts, AI models may still produce errors, hallucinations, or irrelevant results.

- Scalability issues: Designing consistent, high-quality prompts for enterprise-level use cases can be time consuming and difficult to maintain.

- Context window constraints: AI models can only process a limited amount of text at once, which caps how much background material, conversation history, or source data a single prompt can include.

- Bias and fairness risks: Poorly phrased prompts may unintentionally reinforce stereotypes, misinformation, or harmful content.

- Overreliance on prompts: Users may depend too heavily on clever prompting instead of combining it with other strategies like fine-tuning or retrieval-augmented generation (RAG).

By recognizing these challenges, prompt engineers can strike a balance between experimentation and structured best practices to make AI outputs more ethical and reliable.
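To make the context window constraint concrete, here is a minimal sketch of trimming background material to a fixed budget before it goes into a prompt. It assumes a crude word count as a stand-in for a real tokenizer (actual token counts vary by model), and the names `estimate_tokens` and `fit_to_budget` are hypothetical helpers, not part of any library.

```python
def estimate_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: count whitespace-separated words."""
    return len(text.split())


def fit_to_budget(chunks: list[str], max_tokens: int) -> list[str]:
    """Keep chunks in their original order until the budget would be exceeded."""
    kept: list[str] = []
    used = 0
    for chunk in chunks:
        cost = estimate_tokens(chunk)
        if used + cost > max_tokens:
            break  # stop at the first chunk that does not fit
        kept.append(chunk)
        used += cost
    return kept


notes = [
    "The project is a mobile app redesign.",
    "Core features are 80% complete.",
    "The team is two weeks behind schedule.",
]
selected = fit_to_budget(notes, max_tokens=12)
print(selected)
```

In practice you would likely rank chunks by relevance before trimming, so that the most useful material is the part that survives the cut.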

Prompt engineering techniques

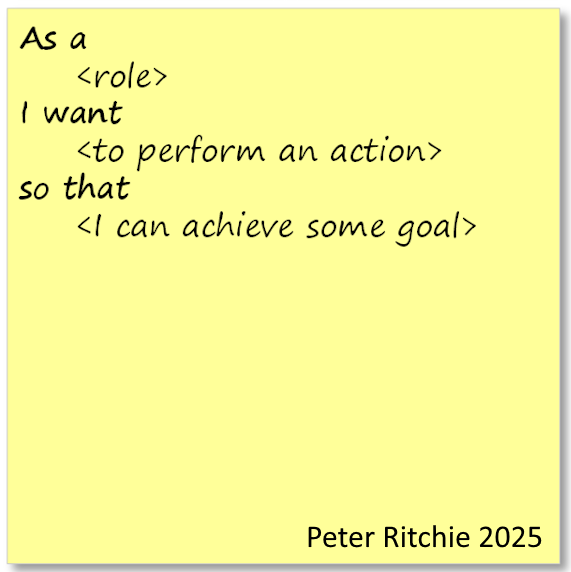

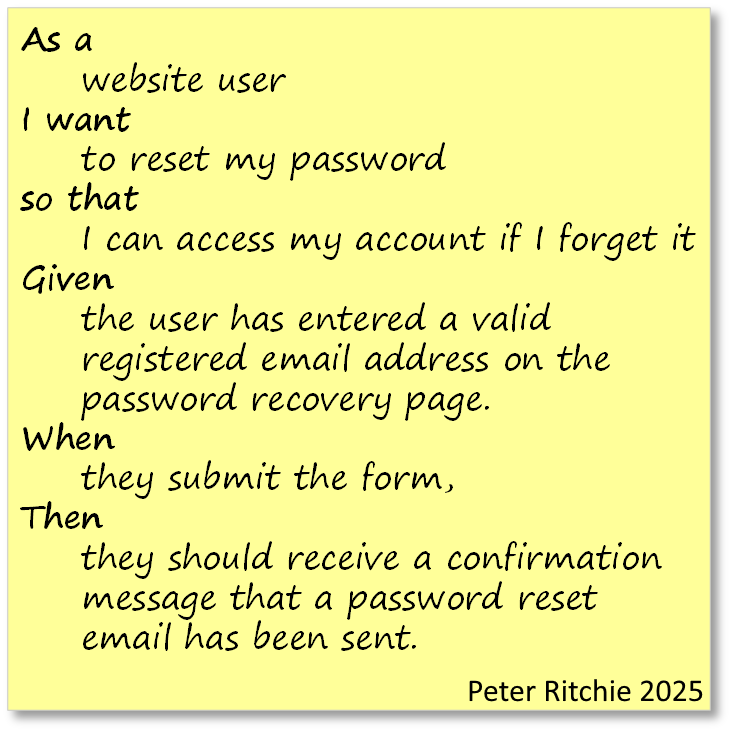

Prompt engineering is more than just asking questions; it’s also about the way you structure prompts. Some commonly used techniques include:

- Zero-shot prompting: Asking the model to perform a task without providing examples, relying only on clear instructions.

- Few-shot prompting: Including a handful of examples in the prompt to show the model the desired style, format, or logic.

- Role prompting: Assigning the AI a persona or perspective (e.g., “You are a CMO”) to influence tone and expertise.

- Chain-of-thought prompting: Encouraging the AI to explain reasoning step by step to improve accuracy in complex problem-solving.

- Instruction-based prompting: Using explicit, structured commands such as “List three advantages and disadvantages of…” or “Summarize the key takeaways using bullet points.”

- Context-rich prompting: Supplying additional background information, constraints, or data so the AI can tailor responses more precisely.

- Iterative refinement: Adjusting and rephrasing prompts based on initial outputs until the AI consistently produces the desired result.
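Several of the techniques above differ only in how the prompt text is assembled. The sketch below builds zero-shot, few-shot, role, and chain-of-thought variants as plain strings, without calling any model. The function names, the `Text:`/`Label:` layout, and the sample reviews are illustrative assumptions rather than a standard format.

```python
def zero_shot(instruction: str, text: str) -> str:
    """Zero-shot: a clear instruction and the input, with no examples."""
    return f"{instruction}\n\nText: {text}\nLabel:"


def few_shot(instruction: str, examples: list[tuple[str, str]], text: str) -> str:
    """Few-shot: the same instruction plus labeled examples of the pattern."""
    shots = "\n\n".join(f"Text: {t}\nLabel: {label}" for t, label in examples)
    return f"{instruction}\n\n{shots}\n\nText: {text}\nLabel:"


def with_role(role: str, prompt: str) -> str:
    """Role prompting: prepend a persona to any prompt."""
    return f"You are {role}.\n\n{prompt}"


def with_reasoning(prompt: str) -> str:
    """Chain-of-thought: ask for step-by-step reasoning before the answer."""
    return f"{prompt}\n\nExplain your reasoning step by step, then give the final answer."


instruction = "Classify the customer review as positive or negative."
examples = [
    ("Arrived quickly and works well.", "positive"),
    ("Stopped working after two days.", "negative"),
]
review = "The setup guide was confusing."

print(zero_shot(instruction, review))
print(few_shot(instruction, examples, review))
print(with_role("a CMO", with_reasoning("Which marketing channel should we prioritize?")))
```

Because each technique is a small function, they compose: a role can wrap a few-shot prompt, and a reasoning request can be appended to either.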

Prompt engineering best practices

High-quality results from AI models depend on clear, detailed prompts. The following best practices can help:

Start with clear objectives

Before writing, define your goal. Are you in search of ideas, facts, or solutions? A vague prompt, such as “Tell me about marketing,” produces generic results, while a more detailed prompt, like “Explain three digital marketing strategies for B2B SaaS companies with under 50 employees,” yields a more focused, usable output.

Be specific about format

AI performs better with clear instructions, so it’s crucial to include details like length, tone, and format. Instead of requesting “A social media post about productivity,” you should ask for “A LinkedIn post under 150 words sharing three time-management tips for remote workers in a professional but conversational tone.”
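One way to keep format details from being forgotten is to treat them as required fields. The following sketch assumes a hypothetical `PromptSpec` class (not from any library) that refuses to build a prompt unless platform, length, and tone are supplied, and then renders the LinkedIn request described above.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Required format details for a short social media post."""
    task: str
    platform: str
    max_words: int
    tone: str

    def render(self) -> str:
        return (
            f"Write a {self.platform} post {self.task}. "
            f"Keep it under {self.max_words} words and use a {self.tone} tone."
        )


spec = PromptSpec(
    task="sharing three time-management tips for remote workers",
    platform="LinkedIn",
    max_words=150,
    tone="professional but conversational",
)
print(spec.render())
```

Leaving out any field raises a `TypeError` when the object is created, which surfaces an underspecified prompt before it ever reaches the model.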

Use examples to guide

Providing examples improves results. This technique, known as “few-shot prompting,” shows the AI the structure, style, and level of detail you expect, so it can follow the same pattern.

Example structure:

I need product descriptions like this:

[Product Name] - [One-sentence benefit] [Two key features] [Price point]

Example: "Noise-Canceling Headphones - Block out distractions and focus on what matters. Features active noise cancellation and 30-hour battery life. Starting at $199."

Now write descriptions for: [your products]

Break complex tasks into steps

Large requests can lead to scattered results. Break tasks into steps or outline a process. Instead of: “Create a complete marketing plan for my startup,” try: “Help me create a marketing plan by identifying my target audience, suggesting three marketing channels, and outlining a content calendar.”
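The step-by-step approach can also be run as a sequence of separate prompts, where each step receives the previous answer. This is a minimal sketch: `ask` is an assumed placeholder for whatever function sends a prompt to your model, `fake_model` is a stub so the example runs offline, and the step wording is adapted from the marketing plan example above.

```python
from typing import Callable

# Each template may use {brief} (the original request) or {previous} (the last reply).
STEPS = [
    "Identify the target audience for this startup: {brief}",
    "Given this audience:\n{previous}\n\nSuggest three marketing channels.",
    "Given these channels:\n{previous}\n\nOutline a content calendar.",
]


def run_steps(brief: str, ask: Callable[[str], str]) -> list[str]:
    """Send the steps in order, feeding each reply into the next prompt."""
    outputs: list[str] = []
    previous = ""
    for template in STEPS:
        prompt = template.format(brief=brief, previous=previous)
        previous = ask(prompt)
        outputs.append(previous)
    return outputs


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call: echoes the first line of the prompt.
    return f"[model reply to: {prompt.splitlines()[0]}]"


for reply in run_steps("a B2B invoicing app", fake_model):
    print(reply)
```

Keeping each step's output separate also makes it easier to spot which stage went wrong when the final result is off.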

Iterate and refine

The first response isn’t always perfect, which is why it’s helpful to request follow-ups to refine the AI model’s output. Some examples of how you might do this include:

- “Make this more conversational.”

- “Add examples to point #2.”

- “Shorten to 100 words but maintain the key message.”
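Follow-ups like these work because the model sees the earlier draft along with the new request. The sketch below keeps a running conversation as a list of messages. The `role`/`content` dictionary layout is a common convention in chat interfaces but is an assumption here, and `fake_model` is a stub standing in for a real model call.

```python
from typing import Callable

Message = dict[str, str]


def refine(
    history: list[Message],
    follow_up: str,
    ask: Callable[[list[Message]], str],
) -> list[Message]:
    """Append a follow-up request and the model's reply to the conversation."""
    updated = history + [{"role": "user", "content": follow_up}]
    reply = ask(updated)
    return updated + [{"role": "assistant", "content": reply}]


def fake_model(messages: list[Message]) -> str:
    # Stand-in for a real model call: reports which request it is answering.
    return f"[draft revised per: {messages[-1]['content']}]"


history: list[Message] = [
    {"role": "user", "content": "Write a LinkedIn post about productivity."},
    {"role": "assistant", "content": "[first draft]"},
]
for request in [
    "Make this more conversational.",
    "Shorten to 100 words but maintain the key message.",
]:
    history = refine(history, request, fake_model)

print(len(history))
```

Each call returns a new list rather than mutating the old one, so earlier versions of the conversation remain available if a refinement makes things worse.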

Provide context

Provide the AI model with background information to deliver better-tailored responses. You can do this by taking the basic prompt, “Write a project update email,” and adding context like: “Write a project update email for our mobile app redesign. We’re two weeks behind schedule due to technical challenges; however, core features are 80% complete. The audience is our executive team.”

Experiment with approaches

Don’t stop at the first working prompt. Try different phrasings or structures to see whether they produce better results. For example, use a role-playing prompt like “Act as a marketing expert and analyze…” or ask for the same explanation pitched at a beginner and then at an expert.
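Experimentation is easier to judge when variants are compared side by side. Here is a rough sketch of that idea: `compare_variants` is a hypothetical helper that runs each prompt through a model function and ranks the outputs with a scoring function you supply. Both `fake_model` and the word-count score are deliberately simplistic stand-ins; a real comparison would more likely use human review or a task-specific metric.

```python
from typing import Callable


def compare_variants(
    variants: dict[str, str],
    ask: Callable[[str], str],
    score: Callable[[str], float],
) -> list[tuple[str, float]]:
    """Run every prompt variant and rank the outputs, best score first."""
    results = [(name, score(ask(prompt))) for name, prompt in variants.items()]
    return sorted(results, key=lambda item: item[1], reverse=True)


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call: longer prompts get longer replies.
    return " ".join(["word"] * len(prompt.split()))


def closeness_to_ten_words(output: str) -> float:
    # Toy score: 0 is best, more negative means further from ten words.
    return -float(abs(len(output.split()) - 10))


variants = {
    "plain": "Analyze our launch plan.",
    "role": "Act as a marketing expert and analyze our launch plan.",
}
ranking = compare_variants(variants, fake_model, closeness_to_ten_words)
print(ranking)
```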

Prompt engineering examples

Here are a few more examples of well-structured prompts:

- Technical documentation: Instead of simply asking, “Write API documentation,” a refined prompt might specify the target audience, preferred format, necessary sections, and examples, resulting in professional-grade output.

- Code review: A basic “Review this code” prompt can be enhanced by asking the AI model to “Identify security vulnerabilities, ensure compliance with coding standards, and suggest performance improvements.”

- Data analysis: A generic “Analyze this data” prompt becomes more effective when it includes specific business goals, key metrics, and visualization preferences.

- Customer service: A strong customer service prompt includes tone guidelines, company policies, and clear escalation paths to ensure consistent, professional interactions.

Prompt engineering tools

It’s important to select tools that align with your workflow and project objectives, and scale up as your needs evolve. Here’s a quick overview of some options available to you:

Experimentation

OpenAI Playground: Provides a user-friendly interface for testing and refining prompts. You can tweak variables such as temperature, frequency penalty, and max tokens, making it ideal for both beginners and advanced users who want to see how different settings affect AI responses.

Google AI Studio: Allows you to experiment with different prompt templates and provides built-in evaluation metrics, making it easy to compare outputs and choose the most effective approach for your specific use case.
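Of the settings mentioned above, temperature is the one most often misunderstood. It is commonly described as dividing the model's raw scores (logits) before they are converted to probabilities, so lower values concentrate probability on the top choice and higher values spread it out. The sketch below illustrates that general idea with made-up scores. It is not any vendor's implementation.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running this shows the top candidate's share shrinking as the temperature rises, which is why low settings suit factual tasks and higher ones suit brainstorming.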

Tracking and organization

PromptLayer: Acts as a logging and analytics layer between your applications and language models. It saves all prompts and responses, allowing you to analyze which prompting strategies work best and build a searchable prompt library for your team.

Prompt Genius: This browser extension enables you to save, categorize, and access your most effective prompts instantly. It’s especially useful for users who work with prompts regularly and want an easy way to organize and retrieve them as needed.
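The core idea behind tracking tools, recording every prompt with its response so the history can be searched later, can be sketched in a few lines. The `PromptLog` class below is a hypothetical in-memory illustration and does not reflect the API of PromptLayer or any other product. `fake_model` is a stub standing in for a real model call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PromptLog:
    """In-memory record of prompts and responses."""
    records: list[dict[str, str]] = field(default_factory=list)

    def call(self, ask: Callable[[str], str], prompt: str, tag: str = "") -> str:
        """Send the prompt, store the exchange, and return the response."""
        response = ask(prompt)
        self.records.append({"tag": tag, "prompt": prompt, "response": response})
        return response

    def search(self, keyword: str) -> list[dict[str, str]]:
        """Return logged exchanges whose prompt contains the keyword."""
        needle = keyword.lower()
        return [r for r in self.records if needle in r["prompt"].lower()]


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call.
    return f"[reply to: {prompt}]"


log = PromptLog()
log.call(fake_model, "Write a project update email.", tag="email")
log.call(fake_model, "Summarize this report in three bullet points.", tag="summary")
print(log.search("email"))
```

A team version would write to shared storage instead of a list, but the wrapper pattern stays the same: route every model call through one place so nothing goes unrecorded.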

Advanced development

LangChain: A developer framework for building applications with language models. It supports prompt templating, chaining of multiple model calls, and integrating memory, making it a powerful framework for creating complex AI workflows.

PromptPerfect: Automatically improves and optimizes your prompts by suggesting edits based on best practices. This tool is most useful when you want to improve prompt clarity or effectiveness without spending excessive time on trial and error.

Prompt libraries

PromptHero: Hosts a curated gallery of top-performing prompts for different models and tasks. You can browse prompts by use case and model type, making it a good source of inspiration and potential starting points.

Awesome ChatGPT Prompts: A widely used, open-source collection on GitHub featuring a large variety of creative and practical prompt ideas contributed by the community. It’s updated regularly and covers everything from productivity tasks to language learning.

Automation

Zapier’s AI integrations: Connects AI-powered prompts to your everyday business tools and processes, allowing you to automate tasks like email generation, data summaries, or responding to customer queries.

Make: Formerly Integromat, this automation tool allows users to build complex workflows integrating prompt-based actions with branching logic. It’s well suited for advanced users looking to automate multi-step processes using AI.

The future of prompt engineering

More intuitive AI interactions

Prompt engineering is shifting from a niche discipline to a vital skill across many professions. As AI models become smarter, prompts will get shorter and more natural, with systems understanding intent and context more easily.

Automated tools and everyday integration

New tools will help refine and optimize prompts automatically, enabling anyone, regardless of experience, to achieve quality results. In the future, much of this optimization will be built directly into everyday software, simplifying user experience.

Growing collaboration and industry-specific tools

Teams will increasingly share prompt libraries and refine prompts together, while specialized tools for different industries will make prompt design faster and more targeted to particular needs.

Greater emphasis on ethical practices

With more powerful AI comes greater focus on responsible use, which means reducing bias, improving transparency, and following ethical standards in prompt development.

Expanding accessibility

Most importantly, prompt engineering will become more accessible to non-experts, empowering more people to use AI effectively. This ongoing shift will make working with AI easier, more responsible, and open to everyone.

Key takeaways and additional resources

Prompt engineering makes the most of AI systems by transforming them into reliable partners for creativity and problem solving. Understanding the techniques, tools, and best practices covered in this blog post will allow you to adapt and grow alongside advancing AI capabilities and effectively leverage them for personal or professional use.

You can review the key takeaways and resources listed below for a quick summary of what was discussed and to continue exploring concepts related to AI advancements.

Key takeaways

- Prompt engineering refines AI instructions to improve response accuracy and align outputs with specific goals.

- Methods like zero-shot, few-shot, and role-based prompting improve results by introducing structure to AI prompts.

- Prompt engineering is versatile, supporting tasks like content creation, coding, customer support, and research.

- Clear prompts save time, improve accuracy, make AI accessible to non-experts, and support more consistent outputs.

- Issues such as trial and error, model limitations, and ethical concerns necessitate careful experimentation and adherence to best practices.

- Platforms like OpenAI Playground and LangChain simplify prompt creation, while automation tools streamline workflows.

- The field is evolving toward intuitive AI, automated optimization, and greater accessibility for all users.

Additional resources

FAQs

What is a prompt in AI? A prompt is the text, instruction, or query provided to an AI system that guides its response. Prompts can range from a simple question to thorough instructions for generating code.

What is a prompt engineer? A prompt engineer is someone who designs effective prompts for AI models to improve output quality and usability.

Does prompt engineering require coding? Not necessarily. While coding can facilitate the development of advanced applications, many prompt engineering techniques can be applied using natural language alone.

Why is prompt engineering important? It helps make AI outputs more accurate, reliable, and aligned with human goals, which reduces the time spent editing or correcting results.

What does prompt engineering entail? It involves crafting, testing, and refining instructions to optimize AI model responses across different use cases.

How does prompt engineering differ across various AI models? Because different models interpret prompts in slightly different ways, prompt structures may require adjustments depending on the system used.

How is prompt engineering different from fine-tuning? Prompt engineering works by adjusting inputs, while fine-tuning changes the model itself with additional training data.

What are some ethical concerns for prompt engineering? Concerns include reinforcing bias, generating misinformation, or misusing prompts for harmful purposes. Responsible prompt design helps mitigate these risks.

The post What is Prompt Engineering? Techniques, Examples, and Tools appeared first on The Couchbase Blog.
