I started testing AI 3D model generation sites in late 2024, and in just a year the technology has come a long way. Here are just a few of the AI models out there.

The post Generating 3D Model Figures with AI appeared first on Make: DIY Projects and Ideas for Makers.

I’m off to Arizona for a couple of days to watch Spring Training baseball with my son. And to hang out with my brother and friends. Back here on Monday!

[blog] You can’t stream the energy: A developer’s guide to Google Cloud Next ’26 in Vegas. If you’re procrastinating, stop it. I saw the numbers this week and the event is close to selling out. Get yourself to the premium dev and AI event of the year.

[blog] Vibe Coding to Production. Even now, you’re probably not pushing production apps from your IDE. Ankur connects his AI-built app to GitHub, Cloud Build, and Cloud Run.

[blog] Does AI Make Us Smarter or Dumber? Yes? We’re losing some “primitive” abilities but unlocking new superpowers.

[article] OpenAI launches GPT-5.4 with Pro and Thinking versions. Plenty of new models this week, including fresh ones from OpenAI.

[blog] Look What You Made Us Patch: 2025 Zero-Days in Review. The zero-day landscape was different last year. Even more enterprise tech attacks, with browser-based exploitation dropping.

[blog] How Google Does It: Applying SRE to cybersecurity. SRE applies to security too, of course. I like these details of how we think about it.

[article] The Pulse: Cloudflare rewrites Next.js as AI rewrites commercial open source. I guess we can just rewrite stuff now? It’s not difficult to regenerate entire projects, build compatibility layers, etc.

[blog] Can coding agents relicense open source through a “clean room” implementation of code? Continuing that thought, how legal is it? That question is being tested here as debate arises over a rebuild and relicense.

[blog] GitOps architecture, patterns and anti-patterns. Are you following good practices, or anti-patterns? A lot of specifics here.

[article] Cursor is rolling out a new kind of agentic coding tool. Looks cool. Always-on fleets of agents will be a commonplace thing in twelve months. Maybe six.

[blog] Practical Guide to Evaluating and Testing Agent Skills. You know how to build the skill, but can you test the skill? It’s really the most important part, the testing. Anybody can just build them. Yes, I’m ripping off a Seinfeld bit.

Jenkins has always been defined by its extensibility. With more than 1,800 available plugins, there’s rarely a CI/CD problem without a plugin that addresses it. That same extensibility, however, is also the most common source of instability, security exposure, and operational overhead in Jenkins environments.

This guide explains how Jenkins plugins work under the hood, what tends to go wrong, and how to build a governance process that keeps things manageable, whether you’re running Jenkins at small scale or across a large enterprise.

Each Jenkins plugin runs in its own classloader, which theoretically isolates it from other plugins. In practice, this isolation is incomplete. Plugins interact through shared APIs, and when those APIs drift between versions, conflicts emerge that can cause runtime errors, mysterious crashes, or subtle breakage that’s difficult to trace.

Plugins are also tied to a minimum Jenkins core version. A plugin requiring Jenkins 2.3 or later will refuse to install on older LTS releases, which means core upgrades often drive plugin upgrade timing, not the other way around. This creates a cascading dependency problem that grows harder to manage as your plugin count increases.

Most plugin installs and upgrades also require a Jenkins restart. At small scale this is manageable. At enterprise scale, with dozens of plugins and continuous delivery requirements, it becomes a significant uptime planning concern.

The most frequent failure mode: upgrading Plugin A causes it to require a new version of Plugin B, which breaks Plugin C that depended on the older version of Plugin B. This is not an edge case: it’s a predictable consequence of how plugin dependencies are resolved in Jenkins.

A well-known example is the Git plugin upgrade path. Upgrading the Git plugin sometimes forces a new SCM API version, which breaks older branch source plugin versions. The Kubernetes plugin is another common offender, occasionally requiring a newer Jenkins core version than your current LTS supports.

When two plugins try to load different versions of the same underlying library, Jenkins’s classloader isolation breaks down. The resulting errors (NoSuchMethodError, ClassNotFoundException, and similar exceptions) often appear as mysterious runtime crashes with no obvious connection to a recent plugin change.

Diagnosing them requires understanding which plugins share which transitive dependencies.
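One way to see which plugins share which transitive dependencies is to walk a dependency map and invert it. The sketch below is a minimal illustration in plain Python: the plugin names and the `DIRECT_DEPS` map are hypothetical, and in practice the map would have to be exported from your own Jenkins instance.

```python
from collections import defaultdict

# Hypothetical direct-dependency map: plugin -> plugins it depends on.
DIRECT_DEPS = {
    "plugin-a": ["plugin-b"],
    "plugin-c": ["plugin-b", "lib-x"],
    "plugin-b": ["lib-x"],
    "lib-x": [],
}


def transitive_deps(plugin, graph):
    """Return every plugin reachable from `plugin`, excluding itself."""
    seen = set()
    stack = list(graph.get(plugin, []))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(graph.get(dep, []))
    seen.discard(plugin)  # guard against cycles leading back to the start
    return seen


def shared_deps(graph):
    """Map each dependency to the plugins that pull it in, keeping shared ones only."""
    users = defaultdict(set)
    for plugin in graph:
        for dep in transitive_deps(plugin, graph):
            users[dep].add(plugin)
    return {dep: sorted(names) for dep, names in users.items() if len(names) > 1}
```

Any dependency that appears in the result of `shared_deps(DIRECT_DEPS)` is a place where a version change in one plugin can surface as an error in another.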

Plugin maintainers sometimes abandon their projects. When that happens, known CVEs can remain unpatched indefinitely, while the plugin continues to be installed, trusted, and automatically updated by pipelines.

By the time a CVE appears in Jenkins’s security advisory feed, affected environments have typically already been exposed for some time.

We covered the broader security implications of this pattern in detail in our article “What Are The Security Risks of CI/CD Plugin Architectures?”

Jenkins records that a plugin was installed, but not who installed it, why, or who approved it. Without external logging pipelines or custom auditing plugins, meeting compliance requirements for audit trails around CI/CD configuration becomes difficult. This is increasingly relevant as regulatory frameworks pay more attention to build and delivery infrastructure.

This audit gap is closely related to a broader problem: configuration drift. When plugin changes and other CI/CD configuration changes aren’t traceable, environments gradually diverge from their documented state.

If you’re dealing with this specifically, our guide on how to manage configuration drift in your Jenkins environment covers how to baseline, codify, and monitor your configuration to maintain auditability.

Understanding the license obligations of a plugin requires reviewing not just the plugin itself but all of its dependencies. For organizations with strict compliance policies – particularly around copyleft licenses – this can be time-consuming and easy to get wrong.

Can you reliably test a plugin change before it reaches production? This is one of the more honest challenges in Jenkins operations: not really, at least not reliably.

The standard approach is a sandbox Jenkins instance – typically running in Docker or a lightweight Kubernetes distribution – that mirrors the production environment.

The problem is that maintaining a sandbox that truly mirrors production is itself a significant operational burden. Most organizations that attempt it find the sandbox gradually drifting from production, which means a plugin that works in the sandbox can still break in production.

This isn’t a criticism specific to Jenkins; it’s a genuine constraint of complex, stateful CI/CD environments. But it does mean that plugin changes carry more inherent risk than most other configuration changes in your infrastructure.

The goal of plugin governance is to make plugin decisions deliberately rather than reactively. Here’s a practical framework.

Before evaluating any plugin, ask whether the functionality can be achieved without one. Built-in pipeline steps, shared libraries, or external services often cover the same ground. Every plugin you don’t install is one fewer dependency to manage, one fewer attack surface to monitor, and one fewer restart to plan.

Consider automatically disqualifying plugins that meet any of these conditions:

These criteria won’t catch everything, but they eliminate the highest-risk candidates before anyone spends time on deeper evaluation.

A plugin is only as secure as its weakest dependency. When you evaluate a plugin, map its full dependency tree, including transitive dependencies, before making a decision. Note the minimum Jenkins core version required by each node in the graph. This gives you an “upgrade blast radius”: how many components would need to change if this plugin requires a future core update.

Drawing this graph manually is tedious but valuable. It makes the true cost of plugin adoption visible before you commit.
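The “upgrade blast radius” can also be computed once the graph is written down. The following is a rough sketch, assuming you have already collected each plugin’s dependencies and minimum core version into two dictionaries; the plugin names and version numbers are purely illustrative.

```python
# Hypothetical data: dependencies and minimum Jenkins core version per plugin.
DEPS = {"candidate": ["helper", "api"], "helper": ["api"], "api": []}
MIN_CORE = {"candidate": "2.401.3", "helper": "2.426.1", "api": "2.387.3"}


def parse_version(text):
    """Turn '2.426.3' into (2, 426, 3) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def blast_radius(plugin, deps, min_core):
    """Return (sorted nodes in the dependency tree, highest minimum core version)."""
    nodes, stack = set(), [plugin]
    while stack:
        current = stack.pop()
        if current not in nodes:
            nodes.add(current)
            stack.extend(deps.get(current, []))
    required = max((min_core[node] for node in nodes), key=parse_version)
    return sorted(nodes), required
```

Calling `blast_radius("candidate", DEPS, MIN_CORE)` returns the three affected components and the core version the whole tree needs. Versions are compared as integer tuples because plain string comparison would rank "2.9" above "2.10".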

Decide who has authority to approve plugin installations and who is responsible for their ongoing maintenance. In practice this usually means senior developers, DevOps engineers, or designated Jenkins administrators.

Plugin requesters should be required to document: why the plugin is needed, what alternatives were considered, what its dependencies are, and how to roll back if something goes wrong.

This process sounds heavy, but it prevents the accumulation of orphaned plugins (installed for a one-off experiment and never removed), which is how most Jenkins installations develop their worst technical debt.

Once a plugin is installed, pin its version. Automatic updates might seem convenient, but in a complex dependency graph, an unreviewed update to one plugin can trigger a cascade of compatibility issues. Version pinning gives you control over when and how updates are applied, and makes rollback straightforward.
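A pinning policy is easier to keep if it is checked automatically. As a minimal sketch, assume the plugin list is kept as plain text with one `plugin-id:version` entry per line (a layout similar to the plugin list files commonly used with Jenkins tooling). The entries and version numbers below are illustrative only.

```python
def unpinned_plugins(lines):
    """Return plugin IDs that have no explicit version or use 'latest'."""
    unpinned = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        plugin_id, _, version = line.partition(":")
        if version.strip() in ("", "latest"):
            unpinned.append(plugin_id.strip())
    return unpinned


# Illustrative entries, not real recommendations.
EXAMPLE = """\
# plugins for the build controller
git:5.2.1
workflow-aggregator:latest
credentials
"""
```

Running `unpinned_plugins(EXAMPLE.splitlines())` flags the two entries that would float to whatever version is current at install time. A check like this could run in code review before a plugin list change is merged.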

Jenkins installations accumulate plugins over time. Periodically audit your installed plugins and remove any that are no longer in active use, along with their dependencies (if not shared by other plugins). A smaller plugin footprint means fewer security exposures, fewer required restarts, and less maintenance overhead.
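The “if not shared by other plugins” condition is the part that is easy to get wrong by hand. The sketch below shows one way to compute removal candidates, assuming you already know which plugins are installed, which are actively used, and how they depend on each other. All names are hypothetical.

```python
def removable_plugins(installed, in_use, deps):
    """Plugins that are neither in use nor required by an in-use plugin."""
    keep, stack = set(), list(in_use)
    while stack:
        plugin = stack.pop()
        if plugin not in keep:
            keep.add(plugin)
            stack.extend(deps.get(plugin, []))
    return sorted(set(installed) - keep)


# Hypothetical inventory for illustration.
INSTALLED = {"pipeline", "git", "scm-api", "old-experiment", "old-helper"}
IN_USE = {"pipeline", "git"}
DEPS = {"git": ["scm-api"], "old-experiment": ["old-helper", "scm-api"]}
```

Here `scm-api` is kept even though the unused `old-experiment` plugin depends on it, because the in-use `git` plugin needs it too.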

Before installing any plugin, work through these checks:

None of these checks guarantees safety, but skipping them significantly increases your exposure.

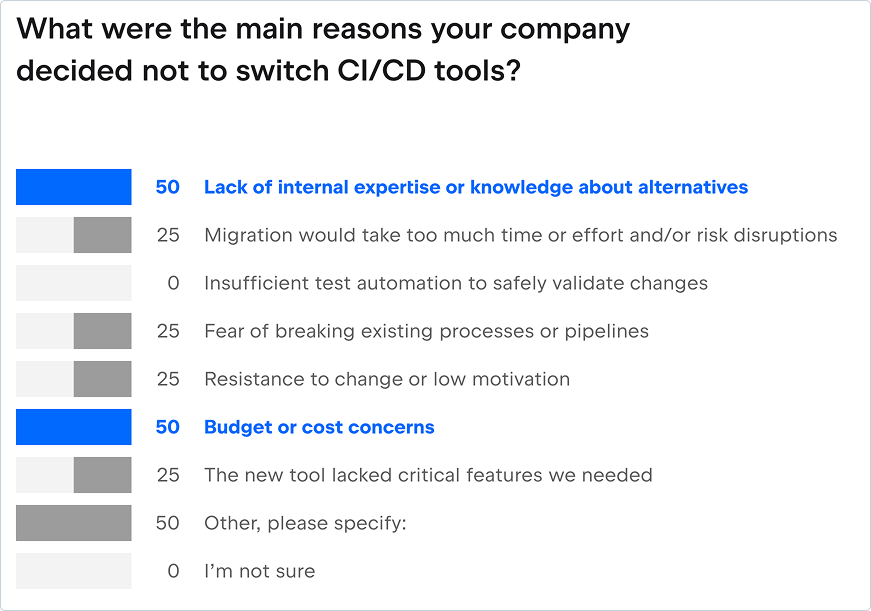

There’s no universal threshold, but organizations typically hit a wall when they’re spending more time managing plugins than using them. Specific signals include:

At this point the question isn’t how to manage plugins better. It’s whether the plugin model itself is the right fit for your environment.

For many teams, Jenkins is still the right choice. It is mature, highly capable, and has a large community of practitioners who know how to operate it well.

Organizations that run Jenkins successfully at scale tend to treat plugin governance as a first-class operational discipline from the start, rather than retrofitting it after problems emerge.

The teams that struggle most with Jenkins plugins are typically those that installed plugins freely in early stages and are now managing the accumulated technical debt of a large, undocumented dependency graph.

If you’re starting fresh, a disciplined default-deny approach, only installing plugins when there’s no viable alternative, dramatically reduces the long-term management burden.

If you’re inheriting a complex existing installation, the priority is a full plugin audit: what’s installed, what’s actually used, what’s maintained, and what can be removed.

Integrated CI/CD platforms bundle core functionality natively rather than relying on community plugins for essential features. This changes the maintenance model: instead of tracking dozens of independent plugin release cycles, you have a single vendor responsible for updates, compatibility, and security patches.

The trade-off is flexibility. Jenkins’s plugin ecosystem covers an enormous range of integrations and use cases. Integrated platforms may not support every integration you need, and migration from a complex Jenkins installation is a significant undertaking that shouldn’t be underestimated.

The right time to evaluate alternatives is when Jenkins’s plugin overhead is measurably affecting delivery velocity or security posture, not because a vendor comparison suggests you should.

Further reading on the TeamCity blog:

Recently our sister company Marra built an agentic “teammate” for user onboarding using Microsoft Power Platform, exploring the new AI features available. They asked Scott Logic to do the same using Microsoft Agent Framework. Luckily, I was on the small team that got involved.

The project was ultimately to compare the two teams’ experiences, find the advantages and challenges of each, and explore agentic technology. This post focuses on our work with Microsoft Agent Framework rather than on the comparison.

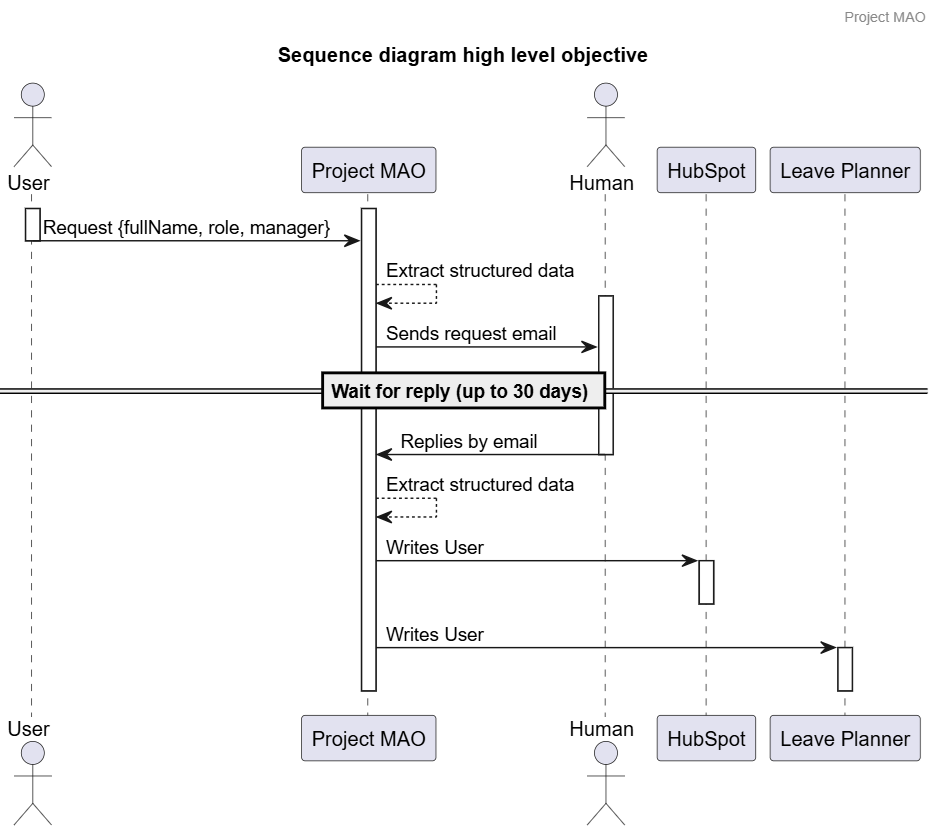

We were tasked with building an agentic system for user onboarding with a human in the loop. Onboarded users should be saved on two downstream systems: HubSpot and LeavePlanner (a SharePoint-hosted Excel workbook).

The objective was to communicate as you would with a “teammate”. This was a time-boxed exercise (three weeks to investigate, build, and present).

Microsoft Agent Framework was up to the challenge. We built a minimal multi-agent solution with a single entry point. We hosted our agentic workflow in Azure as a container, not quite on Microsoft Foundry as we would have liked, but next time we might get there.

Our solution interaction was by email. We used an Azure Function to poll the “agent” mailbox, pick up relevant emails and extract the content to send to our agent. Our agent could also send email for communication, and perform the user onboarding tasks using custom tools.

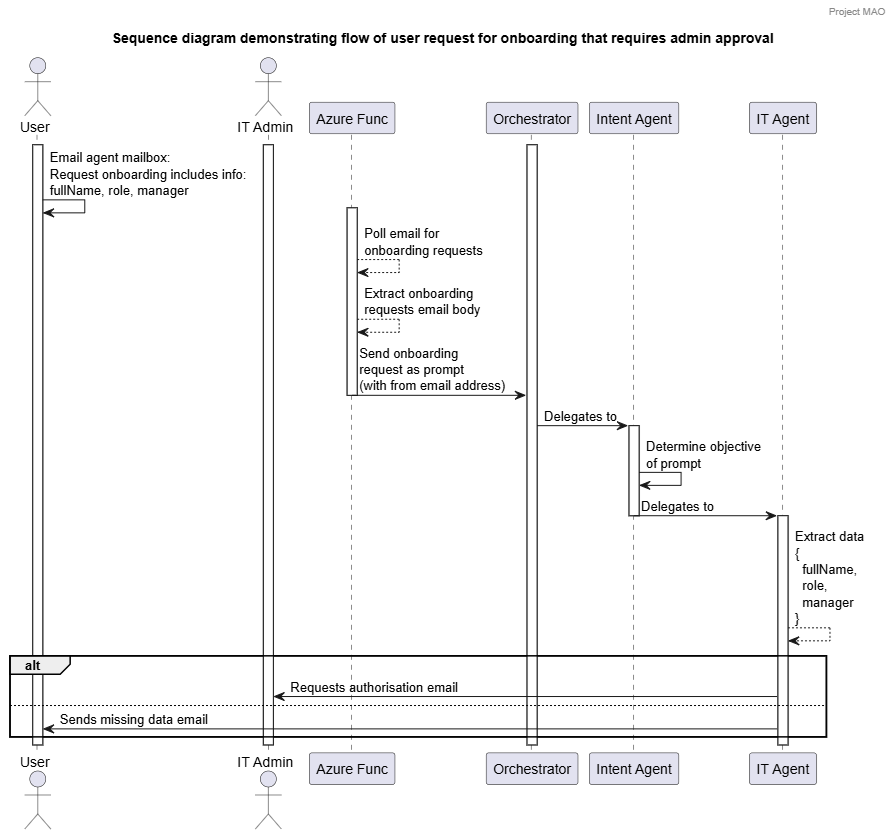

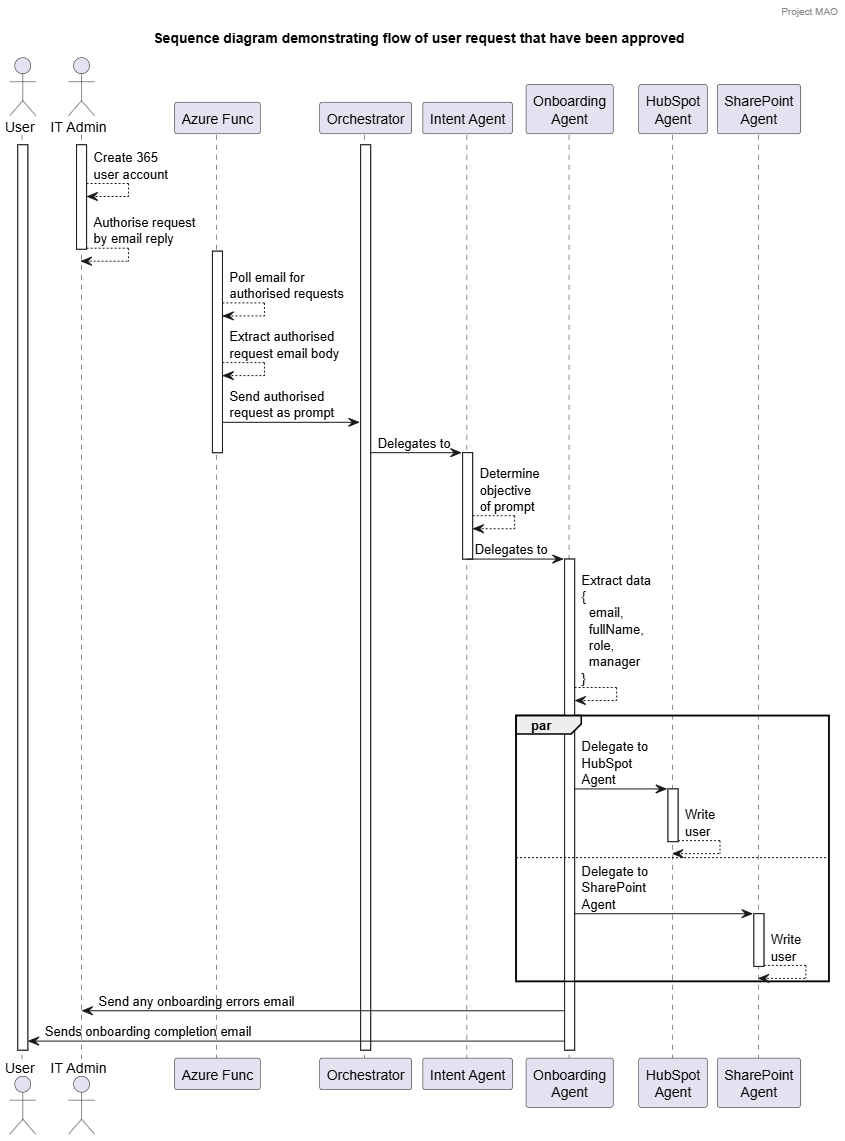

To understand what we’ve built I have a couple more sequence diagrams:

A traditional system could have been written to do this without the use of an LLM. The exercise was to use an existing Microsoft based communication system as a teammate. The LLM gave us easy data extraction from free text in an email string, then tools enabled us to write custom actions we could expect the LLM to execute.

The solution discussed here was built entirely as an exploratory proof of concept; we would have needed to do a fair amount of work for a production-hardened system.

Our team brought relevant experience from previous LLM-powered projects and other agentic solutions.

On initial investigation, Microsoft Agent Framework was very interesting. We could quickly define a deterministic workflow and agents handing off to each other in a pretty modular way.

This gave us a quick start to get moving with code samples from the GitHub repo and some documentation.

Microsoft Agent Framework and Microsoft Foundry were our primary focus. The framework was relatively new and brought together the earlier Semantic Kernel and AutoGen frameworks.

We could have many small agents working together to perform a bigger task by handing off to each other. Keeping each task small and dedicated meant we could keep the system and user prompts super short and to the point. The smaller context per agent gave us a much higher chance of getting exactly what we wanted from our LLM.

We quickly configured the Microsoft Foundry GPT-5 nano model and started planning our onboarding agent.

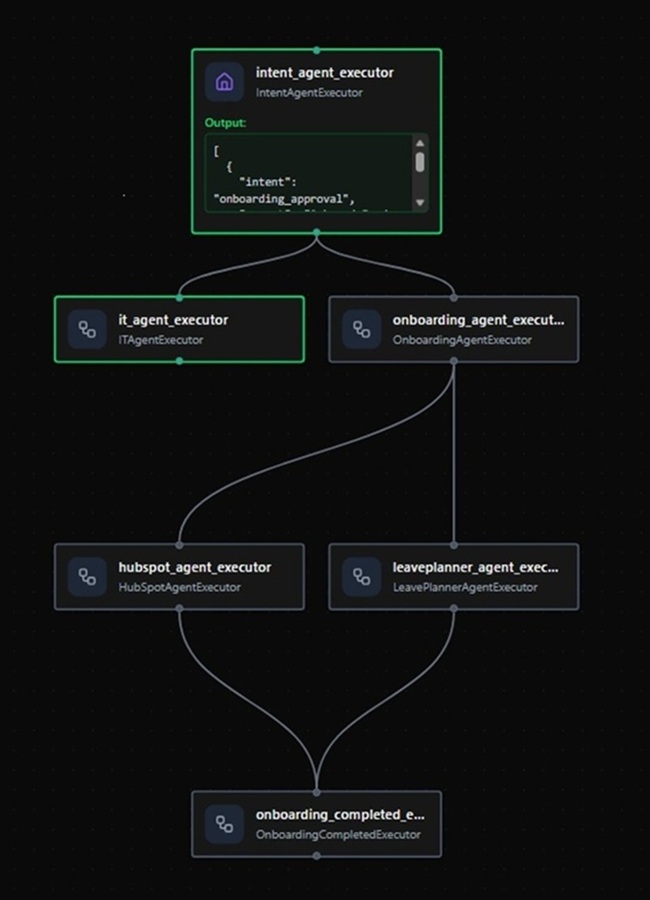

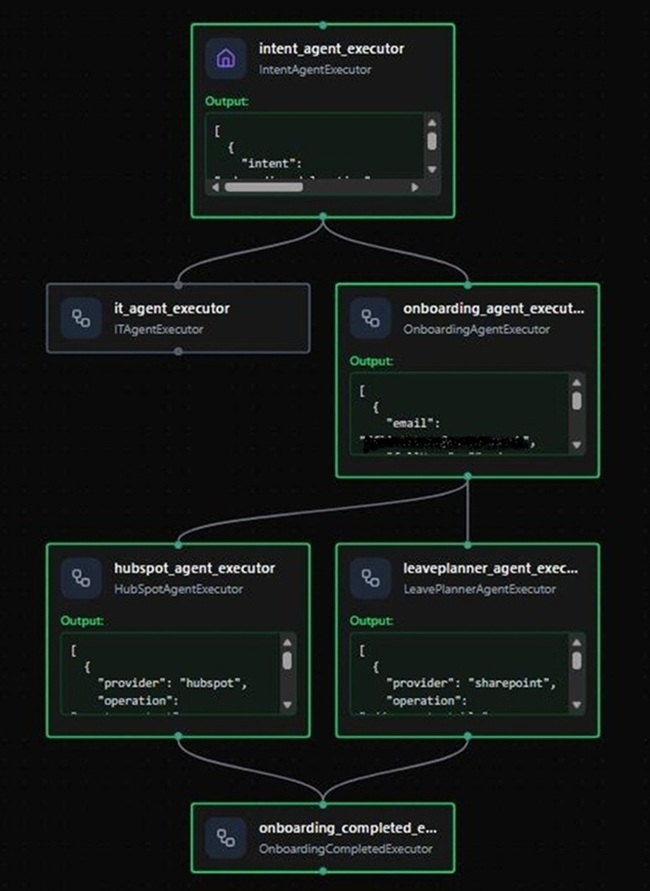

We used DevUI (included with Microsoft Agent Framework), which was a nice addition for quickly visualising our agentic workflow and testing as we built. It was also very useful when demonstrating functionality to stakeholders; see the screen grabs of workflows.

Microsoft Agent Framework DevUI - Example of the approval steps:

Microsoft Agent Framework DevUI - Example of the onboard steps:

We needed to include our agent as a “teammate”. Our initial options were email with Graph API (polling or subscription), Microsoft Teams, or even email Adaptive Cards for Outlook Actionable Messages. After considerable team debate and research, we opted for Graph API with email polling due to its simpler setup and faster configuration.

We opted to have the email polling done by an Azure Function, which submitted the body as a prompt to the agent, rather than have the agent read the mailbox directly. So the flow was that the user sent an email to their “teammate”, and this was forwarded to the agent, which could reply by email as necessary.

The human-in-the-loop point, where the agent requested the 365 account creation before proceeding to onboard the user, gave us a safeguard so that accounts were not simply created automatically.

Perhaps the most interesting part was what our agents actually did. We had various agents implementing different techniques and tools which lent themselves well to our scenario.

The Intent Agent simply determined the intent of the request. Our scenario had two paths: a request from a user sent by email to go to IT for approval, or a request to go to the onboarding agent.

The agent figured this out based on its system prompt (a short instruction and a few brief examples) and the email request body. The email body was a simple sentence, not CSV content or any specific format we wanted the user to remember. The next step was to delegate to either the IT Agent or the Onboarding Agent.
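The routing step can be pictured as a classifier followed by a dispatch table. The sketch below is plain Python and does not use the Microsoft Agent Framework API. The `keyword_classifier` function is a crude, hypothetical stand-in for the LLM call, and the handler names are invented for illustration.

```python
from typing import Callable, Dict


def keyword_classifier(email_body: str) -> str:
    """Stand-in for the LLM call: a crude keyword rule, for illustration only."""
    return "onboarding" if "@" in email_body else "it_approval"


def route(email_body: str,
          classify: Callable[[str], str],
          handlers: Dict[str, Callable[[str], str]]) -> str:
    """Classify the email body and delegate to the matching handler."""
    intent = classify(email_body)
    if intent not in handlers:
        raise ValueError(f"unrecognised intent: {intent!r}")
    return handlers[intent](email_body)


# Hypothetical handlers standing in for the IT Agent and the Onboarding Agent.
HANDLERS = {
    "it_approval": lambda body: f"IT Agent: {body}",
    "onboarding": lambda body: f"Onboarding Agent: {body}",
}
```

Because the classifier is passed in as a function, the dispatch logic can be tested without calling a model, and adding a new scenario means adding one handler entry.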

We sent the actual request to the agent using an Azure Function that extracted the email body text. This could have been done by the agent and was likely something we would have investigated given extra time.

The potential for future growth here is pretty big, as we could add many more scenarios for the agent to handle, such as changing a job role or removing a user. If it gets too much, we could simply plug in a couple of other agents as necessary.

This agent handed the request off to a human. The onboarding flow required that the 365 user account was created by a human, so a request for IT to do this would be sent by email. This enabled the human operator to interact with the agent by replying to the email with the new user’s 365 email address.

The IT Agent performed entity extraction to get the user’s name, job role and line manager.

The IT Agent had a custom send email tool (using Microsoft Graph API - authenticated with Entra ID). The tool sent an email request for onboarding to the human administrator to complete the request. Alternatively, if the entity extraction determined data was missing, an explanation was sent to the user.
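The decision after entity extraction comes down to checking whether the required fields are present. The sketch below assumes the extraction result has already been parsed into a simple record. The `OnboardingRequest` type and the action names are hypothetical, and the real email sending through Graph API is left out.

```python
from dataclasses import dataclass, fields
from typing import List, Optional, Tuple


@dataclass
class OnboardingRequest:
    """Fields the IT Agent tries to extract from the email body."""
    name: Optional[str] = None
    job_role: Optional[str] = None
    line_manager: Optional[str] = None


def missing_fields(request: OnboardingRequest) -> List[str]:
    """Names of the fields that were not extracted (None or empty)."""
    return [f.name for f in fields(request) if not getattr(request, f.name)]


def next_action(request: OnboardingRequest) -> Tuple[str, str]:
    """Choose between asking the user for more detail and emailing the administrator."""
    missing = missing_fields(request)
    if missing:
        return ("reply_to_user", "Missing details: " + ", ".join(missing))
    return ("email_it_admin", f"Please create a 365 account for {request.name}")
```

Keeping this check outside the prompt makes the branch deterministic: the model extracts, and ordinary code decides what happens next.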

This was the second time around. The administrator had now created a new business user account and provided the 365 email/username to our Intent Agent. The admin had replied to the email (which the Azure Function was sending back to the agent). This time the Intent Agent had determined this and handed over to the Onboarding Agent.

The Onboarding Agent ran entity extraction and, once it had successfully extracted the email, the user’s name, job role and line manager, started onboarding.

Here we handed off to multiple agents at once: the HubSpot agent and the SharePoint agent (for the LeavePlanner shared Excel workbook).

Future growth here might be that the user administrator could decide to exclude some systems to onboard to or have different systems based on user role.
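Handing off to several agents at once is a fan-out pattern. The sketch below illustrates it with plain `asyncio` rather than the Microsoft Agent Framework API. Both agent functions are hypothetical stubs, and one of them fails on purpose to show how errors can be collected instead of aborting the whole run.

```python
import asyncio


async def hubspot_agent(user):
    """Stub for the HubSpot agent; pretends the API call succeeded."""
    return {"system": "HubSpot", "ok": True}


async def leave_planner_agent(user):
    """Stub for the LeavePlanner agent; simulates a failure."""
    raise RuntimeError("workbook locked")


async def fan_out(user, agents):
    """Run every downstream agent concurrently and collect results or errors."""
    results = await asyncio.gather(
        *(agent(user) for agent in agents), return_exceptions=True
    )
    collected = []
    for agent, result in zip(agents, results):
        if isinstance(result, Exception):
            collected.append({"system": agent.__name__, "ok": False, "error": str(result)})
        else:
            collected.append(result)
    return collected
```

Calling `asyncio.run(fan_out({"name": "Sam Lee"}, [hubspot_agent, leave_planner_agent]))` yields one entry per system in the same order as the agent list, which makes it straightforward to exclude or add systems per user role later.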

HubSpot was the first external system we onboarded the user to. The agent simply called the API to create the user. To do this we built a custom tool for the operation that we provided to the agent.

Our tool had some logic for error handling but we’d see future growth where the agent could have more functionality. Search, update and delete (securely, of course) tools could be available in a more production hardened solution.

The end result then continued to the Completion Agent.

The task here was simple: insert a record for the new user in the fictional LeavePlanner holiday system, which was represented by an Excel workbook on SharePoint. The agent had a single tool to insert the row in the workbook.

Of course, this could get considerably more complicated and could offer more tools such as update, delete and select.

The end result then continued to the Completion Agent.

Here we wanted to see green on DevUI when using example prompts. This was less of an agent and more a point to connect the workflow back to a resolution. It checked the results from inbound agents, sent success emails for the systems the user had been onboarded to, and reported any issues encountered to the IT department.
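The completion step is essentially an aggregation over the results of the inbound agents. As a rough sketch, assume each inbound agent reports a small dictionary with `system`, `ok` and an optional `error`. That result shape and the recipient labels are assumptions made for illustration, not the format we actually used.

```python
def summarise(results):
    """Turn per-system results into (recipient, message) pairs to be emailed."""
    succeeded = [r["system"] for r in results if r["ok"]]
    failed = [(r["system"], r.get("error", "unknown error")) for r in results if not r["ok"]]
    messages = []
    if succeeded:
        messages.append(("success_notice", "Onboarded to: " + ", ".join(succeeded)))
    if failed:
        details = "; ".join(f"{name}: {error}" for name, error in failed)
        messages.append(("it_department", "Onboarding issues: " + details))
    return messages
```

With one success and one failure, this produces a success notice for the system that worked and a separate issue report for IT, so a partial failure is never silently dropped.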

Overall this was a fast-moving, interesting project that gave us a better understanding of where agentic systems add value and of the parts that make up an agentic approach. I’ve reflected on previous LLM-powered systems I worked on, and how dedicated agents could give a more robust solution than a large single prompt.

Microsoft Agent Framework is definitely going to be a point of interest in any upcoming LLM-powered solutions our team build.

As for the email “teammate” interaction we investigated: do people want to communicate with an AI-powered “teammate” rather than having another system to use that submits requests through a traditional form? I can definitely see this being preferable in various situations.

And do I want an agentic “teammate” to do the things I have to do as part of life that I consider boring? Yes. I already do: I use Copilot daily to make work quicker and easier.