Docker Desktop 4.43 just rolled out a set of powerful updates that simplify how developers run, manage, and secure AI models and MCP tools.

Model Runner now includes better model management, expanded OpenAI API compatibility, and fine-grained controls over runtime behavior. The improved MCP Catalog makes it easier to discover and use MCP servers, and now supports submitting your own MCP servers! Meanwhile, the MCP Toolkit streamlines integration with VS Code and GitHub, including built-in OAuth support for secure authentication. Gordon, Docker’s AI agent, now supports multi-threaded conversations with faster, more accurate responses. And with the new Compose Bridge, you can convert local compose.yaml files into Kubernetes configuration in a single command.

Together, these updates streamline the process of building agentic AI apps and offer a preview of Docker’s ongoing efforts to make it easier to move from local development to production.

New model management commands and expanded OpenAI API support in Model Runner

This release includes improvements to the user interface of the Docker Model Runner, the inference APIs, and the inference engine under the hood.

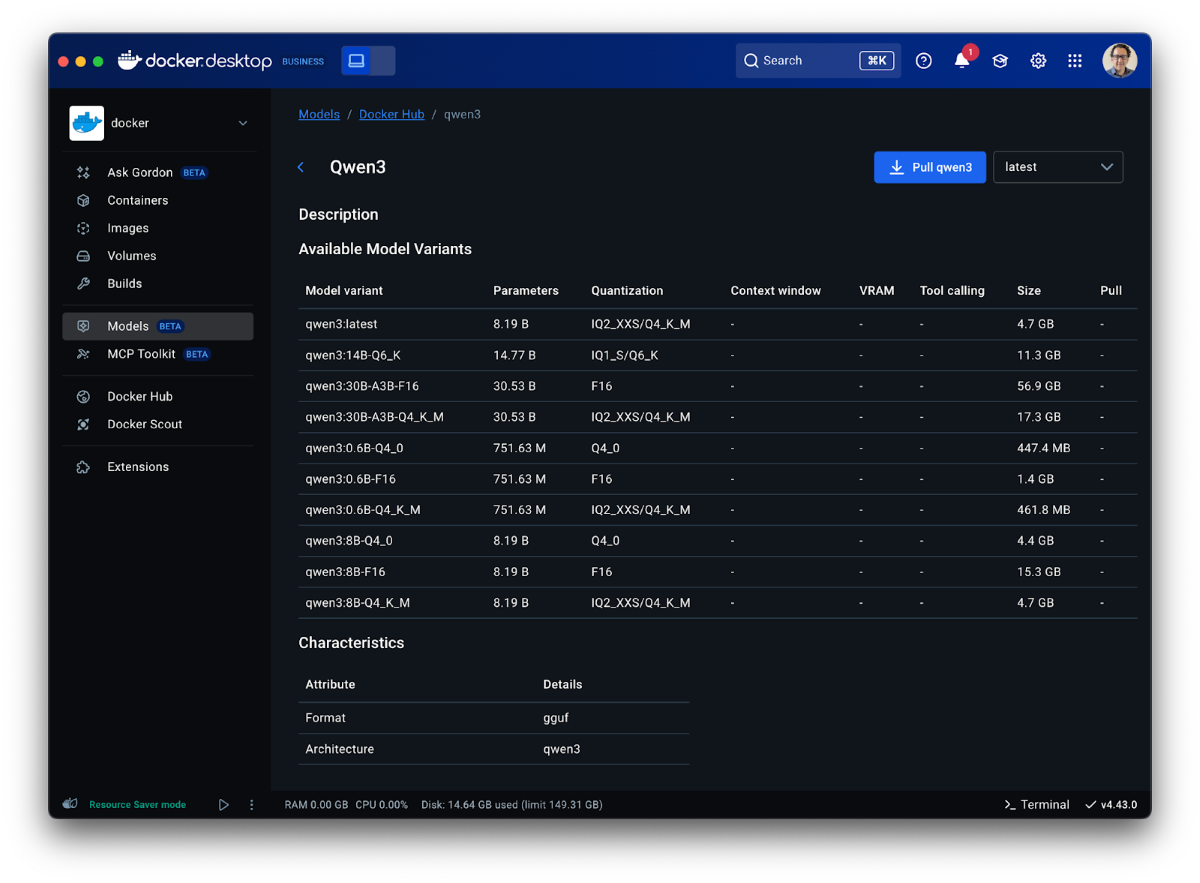

Starting with the user interface, developers can now inspect models (including those already pulled from Docker Hub and those available remotely in the AI catalog) via model cards available directly in Docker Desktop. Below is a screenshot of what the model cards look like:

Figure 1: View model cards directly in Docker Desktop to get an instant overview of all variants in the model family and their key features.

In addition to the GUI changes, the docker model command adds three new subcommands to help developers inspect, monitor, and manage models more effectively:

- docker model ps: Show which models are currently loaded into memory

- docker model df: Check disk usage for models and inference engines

- docker model unload: Manually unload a model from memory (before its idle timeout)

For WSL2 users who enable Docker Desktop integration, all docker model commands are now also available from within their WSL2 distros, so they can work with models without leaving their Linux-based workflow.

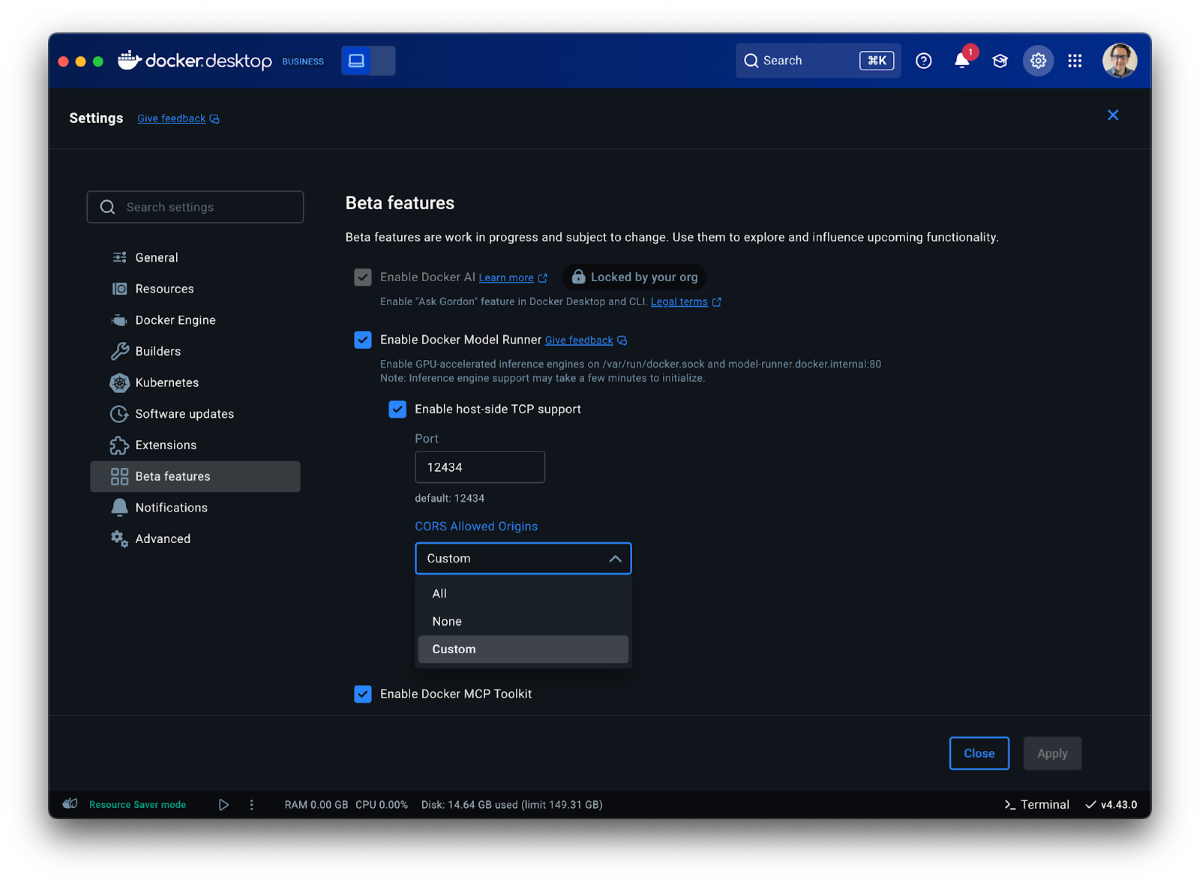

On the API side, Model Runner now offers additional OpenAI API compatibility and configurability. Specifically, tool calling now works with streaming enabled ({"stream": true}), making agents built on Docker Model Runner more dynamic and responsive. Model Runner’s API endpoints also now support OPTIONS requests for better compatibility with existing tooling. Finally, developers can configure allowed CORS origins in the Model Runner settings pane, giving them better compatibility and more control over security.

Figure 2: CORS Allowed Origins are now configurable in Docker Model Runner settings, giving developers greater flexibility and control.
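To make the streaming change concrete, here is a minimal Python sketch of the client side, assuming Model Runner emits tool calls in the standard OpenAI chat-completions streaming format. It builds a request body with tools and streaming enabled, then stitches streamed tool-call deltas back into complete calls. The function names are hypothetical, the request would be POSTed to Model Runner's OpenAI-compatible chat completions endpoint (the exact URL depends on how you expose Model Runner), and the sample chunks are hand-written for illustration, not captured from Model Runner.

```python
import json


def build_request(model: str, prompt: str, tools: list) -> bytes:
    """Serialize an OpenAI-style chat completion request with tools and streaming on."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tools": tools,
        "stream": True,  # serialized as the JSON boolean true, not the string "true"
    }
    return json.dumps(body).encode("utf-8")


def accumulate_tool_calls(chunks: list) -> list:
    """Merge streamed tool-call deltas (OpenAI streaming format) into complete calls.

    Each chunk's choices[0].delta may carry a tool_calls list whose entries are
    keyed by `index`. The call id and function name typically arrive in the first
    delta for an index, while the JSON `arguments` string arrives in fragments.
    """
    calls = {}
    for chunk in chunks:
        delta = chunk["choices"][0].get("delta") or {}
        for part in delta.get("tool_calls") or []:
            call = calls.setdefault(part["index"], {"id": None, "name": None, "arguments": ""})
            if part.get("id"):
                call["id"] = part["id"]
            fn = part.get("function") or {}
            if fn.get("name"):
                call["name"] = fn["name"]
            call["arguments"] += fn.get("arguments") or ""
    # Only parse the arguments once every fragment has been concatenated.
    return [
        {"id": c["id"], "name": c["name"], "arguments": json.loads(c["arguments"] or "{}")}
        for _, c in sorted(calls.items())
    ]


# Hand-written sample chunks: one tool call whose arguments arrive in two pieces.
chunks = [
    {"choices": [{"delta": {"tool_calls": [{"index": 0, "id": "call_1", "type": "function",
                                            "function": {"name": "get_weather", "arguments": ""}}]}}]},
    {"choices": [{"delta": {"tool_calls": [{"index": 0, "function": {"arguments": "{\"city\": "}}]}}]},
    {"choices": [{"delta": {"tool_calls": [{"index": 0, "function": {"arguments": "\"Paris\"}"}}]}}]},
    {"choices": [{"delta": {}, "finish_reason": "tool_calls"}]},
]
print(accumulate_tool_calls(chunks))
# [{'id': 'call_1', 'name': 'get_weather', 'arguments': {'city': 'Paris'}}]
```

Accumulating by `index` rather than by arrival order matters when a model requests several tools at once, because their fragments can interleave.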

For developers who need fine-grained control over model behavior, we’re also introducing the ability to set a model’s context size and even the runtime flags for the inference engine via Docker Compose, for example:

```yaml
services:
  mymodel:
    provider:
      type: model
      options:
        model: ai/gemma3
        context-size: 8192
        runtime-flags: "--no-prefill-assistant"
```

In this example, the optional context-size and runtime-flags parameters control the behavior of the underlying inference engine. Here the associated runtime is the default, llama.cpp, so runtime-flags takes llama.cpp flags; see the llama.cpp documentation for the full list. Certain flags may override the stable default configuration that we ship with Docker Desktop, but we want users to have full control over the inference backend. Note also that a particular model architecture may limit the maximum context size; you can find each model's maximum context length on its model card on Docker Hub.
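A quick pre-flight check can catch a context-size that exceeds what the model supports. The Python sketch below is a hypothetical helper (not part of Docker Compose or Model Runner) that validates the options mapping from the Compose example against a maximum context length you supply from the model card, and splits runtime-flags into individual arguments, assuming shell-style tokenization.

```python
import shlex


def check_model_options(options: dict, max_context: int) -> list:
    """Sanity-check a Compose model `options` mapping before deploying it.

    `max_context` is the model's maximum context length, which you would read
    off the model card; this sketch takes it as a parameter instead of looking it up.
    Returns the runtime flags split into individual arguments.
    """
    if "model" not in options:
        raise ValueError("options.model is required")
    size = options.get("context-size")
    if size is not None:
        if isinstance(size, bool) or not isinstance(size, int) or size <= 0:
            raise ValueError("context-size must be a positive integer")
        if size > max_context:
            raise ValueError(f"context-size {size} exceeds the model maximum of {max_context}")
    # Assumption: the flag string is tokenized shell-style, one argument per token.
    return shlex.split(options.get("runtime-flags", ""))


opts = {"model": "ai/gemma3", "context-size": 8192, "runtime-flags": "--no-prefill-assistant"}
# 32768 is an illustrative limit; check the model card for the real value.
print(check_model_options(opts, max_context=32768))
# ['--no-prefill-assistant']
```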

Under the hood, we’ve focused on improving stability and usability. We now have better error reporting in the event that an inference process crashes, along with more aggressive eviction of crashed engine processes. We’ve also enhanced the Docker CE Model Runner experience with better handling of concurrent usage and more robust support for model providers in Compose on Docker CE.

MCP Catalog & Toolkit: Secure, containerized AI tools at scale

New and redesigned MCP Catalog

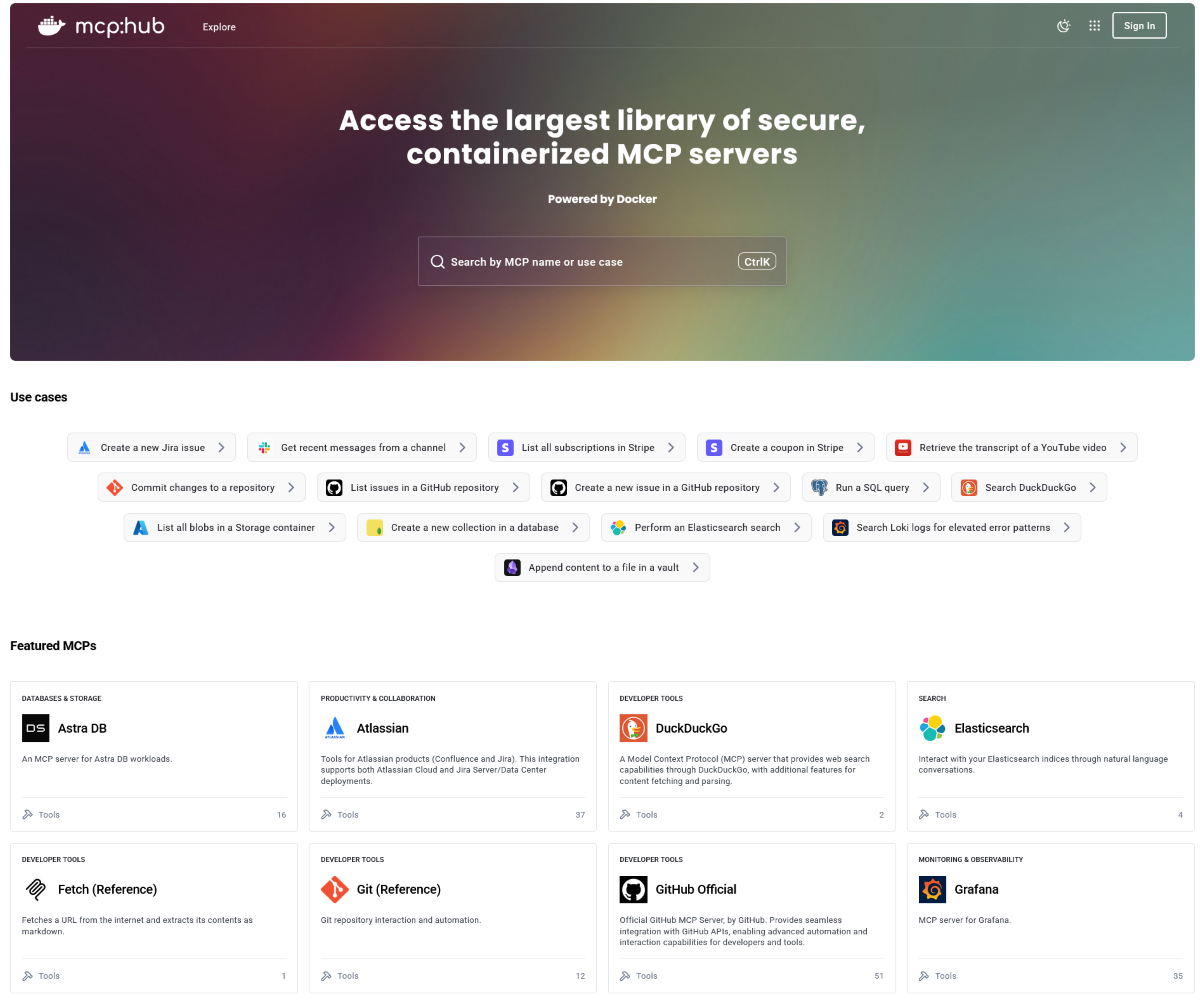

Docker’s MCP Catalog now features an improved experience, making it easier to search, discover, and identify the right MCP servers for your workflows. You can still access the catalog through Docker Hub or directly from the MCP Toolkit in Docker Desktop, and now, it’s also available via a dedicated web link for even faster access.

Figure 3: Quickly find the right MCP server for your agentic app and use the new Catalog to browse by specific use cases.

The MCP Catalog currently includes over 100 verified, containerized tools, with hundreds more on the way. Unlike traditional npx or uvx workflows that execute code directly on your host, every MCP server in the catalog runs inside an isolated Docker container. Each one includes cryptographic signatures, a Software Bill of Materials (SBOM), and provenance attestations.

This approach eliminates the risks of running unverified code directly on your host and ensures consistent, reproducible environments across platforms. Whether you need database connectors, API integrations, or development tools, the MCP Catalog provides a trusted, scalable foundation for AI-powered development workflows, and it moves the entire ecosystem away from risky execution patterns toward production-ready, containerized solutions.
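The isolation idea can be illustrated with ordinary docker run flags. The Python sketch below only assembles a command line; it is not how the MCP Toolkit actually launches servers, and the image name example/mcp-server and the API_TOKEN variable are placeholders. It shows how a stdio-based MCP server can run in a throwaway, locked-down container instead of executing directly on the host the way an npx or uvx launch would.

```python
from collections.abc import Sequence


def isolated_run_argv(image: str, env_names: Sequence[str] = (), allow_network: bool = True) -> list:
    """Build a `docker run` command line that starts a stdio MCP server in a container.

    Unlike an `npx`/`uvx` launch, the server's code never runs directly on the
    host; the flags below further restrict what the container can do.
    """
    argv = [
        "docker", "run",
        "--rm",                                  # delete the container when the server exits
        "-i",                                    # keep stdin open: stdio MCP servers talk over stdin/stdout
        "--read-only",                           # mount the container's root filesystem read-only
        "--cap-drop", "ALL",                     # drop all Linux capabilities
        "--security-opt", "no-new-privileges",   # block privilege escalation (e.g. via setuid binaries)
    ]
    if not allow_network:
        argv += ["--network", "none"]            # for servers that need no outbound access
    for name in env_names:
        argv += ["-e", name]                     # pass by name; docker copies the value from the caller's env
    argv.append(image)
    return argv


# Hypothetical image name; substitute a server from the catalog.
print(" ".join(isolated_run_argv("example/mcp-server", env_names=["API_TOKEN"])))
```

Servers that write to disk may fail under --read-only; giving them a scratch area with --tmpfs /tmp is one way to keep the rest of the filesystem locked.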

Submit your MCP Server to the Docker MCP Catalog

We’re launching a new submission process that gives developers flexible ways to contribute. When you submit a server, you can choose between two options: Docker-Built and Community-Built servers.

Docker-Built Servers

When you see “Built by Docker,” you’re getting our complete security treatment. We control the entire build pipeline, providing cryptographic signatures, SBOMs, provenance attestations, and continuous vulnerability scanning.

Community-Built Servers

These servers are packaged as Docker images by their developers. While we don’t control their build process, they still benefit from container isolation, which is a massive security improvement over direct execution.

Docker-built servers demonstrate the gold standard for security, while community-built servers ensure we can scale rapidly to meet developer demand. Developers can change their mind after submitting a community-built server and opt to resubmit it as a Docker-built server.

Get your MCP server featured in the Docker MCP Catalog today and reach over 20 million developers. Learn more about our new MCP Catalog in our announcement blog, and get best practices for building, running, and testing MCP servers. Join us in building the largest library of secure, containerized MCP servers!

MCP Toolkit adds OAuth support and streamlined integration with GitHub and VS Code

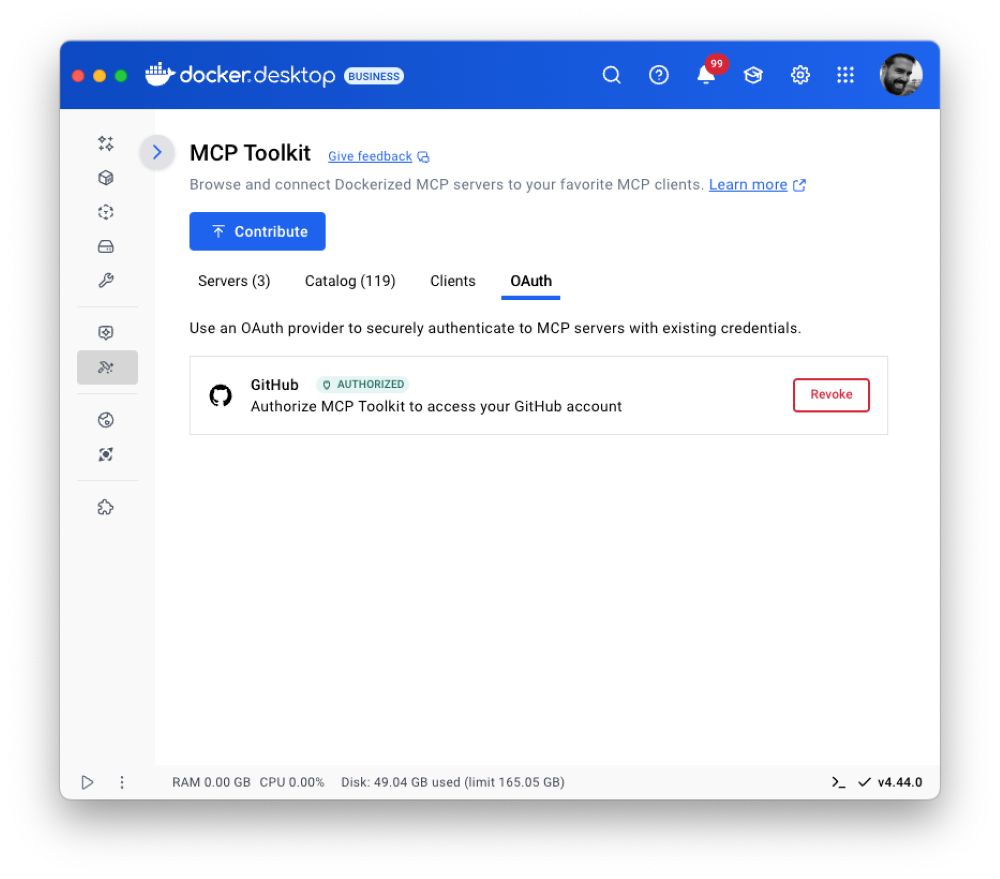

Many MCP servers receive their credentials as plaintext environment variables, which exposes sensitive data and increases the risk of leaks. The MCP Toolkit eliminates that risk with secure credential storage, allowing clients to authenticate with MCP servers and third-party services without hardcoding secrets. We’re taking it a step further with OAuth support, starting with the most widely used developer tool, GitHub. This makes it even easier to integrate secure authentication into your development workflow.
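The MCP Toolkit's own credential store isn't described here, but the general alternative to plaintext environment variables is easy to show. The Python sketch below reads a credential from a file that Docker Compose mounts as a secret (by default under /run/secrets/<name>). The function name and the github_token secret name are hypothetical, and this is not the Toolkit's mechanism.

```python
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> str:
    """Read a credential from a file mounted into the container.

    Docker Compose mounts secrets under /run/secrets/<name> by default, so the
    value is not part of the process environment or the container's
    environment configuration shown by `docker inspect`.
    """
    path = Path(secrets_dir) / name
    if not path.is_file():
        # Fail loudly instead of silently falling back to an environment variable.
        raise FileNotFoundError(f"secret {name!r} not mounted at {path}")
    return path.read_text(encoding="utf-8").strip()


# Example (inside a container with a secret named github_token):
# token = read_secret("github_token")
```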

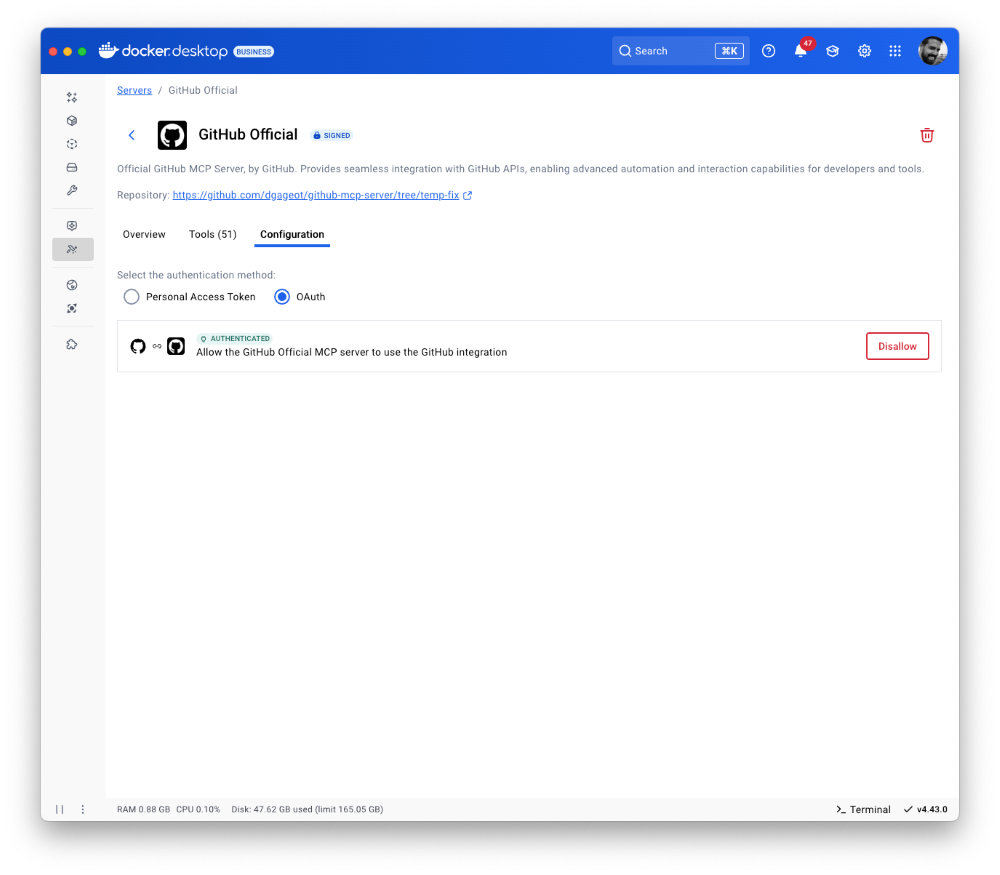

Figure 4: OAuth is now supported for the GitHub MCP server.

To set up your GitHub MCP server, go to the OAuth tab, connect your GitHub account, enable the server, and authorize OAuth for secure authentication.

Figure 5: Go to the configurations tab of the GitHub MCP server to enable OAuth for secure authentication.

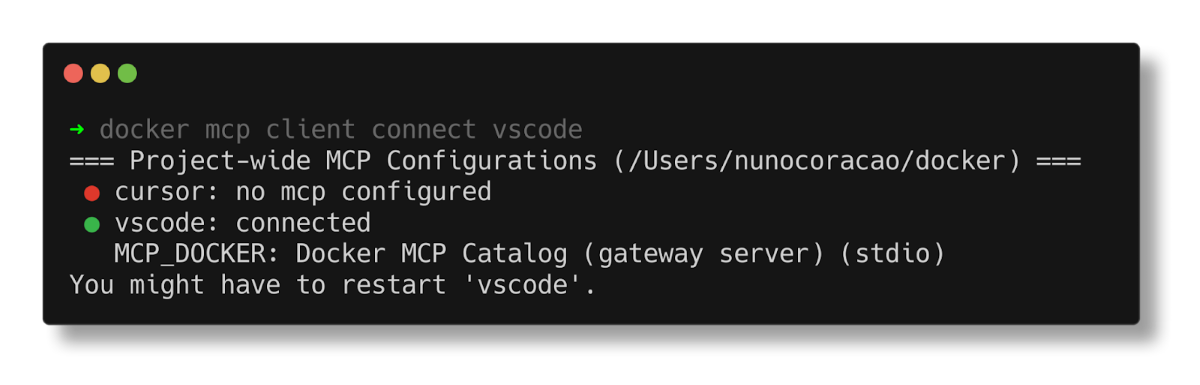

The MCP Toolkit allows you to connect MCP servers to any MCP client, with one-click connection to popular clients such as Claude and Cursor. We are also making it easier for developers to connect to VS Code with the docker mcp client connect vscode command. When run in your project’s root folder, it creates an mcp.json configuration file in your .vscode folder.

Figure 6: Connect to VS Code via MCP commands in the CLI.

You can also configure the MCP Toolkit as a global MCP server available to VS Code by adding the following config to your user settings. Check out the documentation for more details. Once connected, you can use GitHub Copilot in agent mode with full access to your repositories, issues, and pull requests.

```json
"mcp": {
  "servers": {
    "MCP_DOCKER": {
      "command": "docker",
      "args": [
        "mcp",
        "gateway",
        "run"
      ],
      "type": "stdio"
    }
  }
}
```
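If you manage settings programmatically, the snippet above should be merged into your existing settings rather than pasted over them. The Python sketch below (a hypothetical helper, not part of the Docker CLI) does that on an already-parsed settings dict. A real settings.json may contain comments, which Python's json module can't parse, so reading and writing the file is left out.

```python
import copy

# The MCP_DOCKER entry from the settings snippet above.
MCP_DOCKER = {"command": "docker", "args": ["mcp", "gateway", "run"], "type": "stdio"}


def add_docker_gateway(settings: dict) -> dict:
    """Return a copy of VS Code user settings with the MCP_DOCKER server added.

    Existing settings and any other MCP servers are preserved; an existing
    MCP_DOCKER entry is replaced.
    """
    merged = copy.deepcopy(settings)
    servers = merged.setdefault("mcp", {}).setdefault("servers", {})
    servers["MCP_DOCKER"] = copy.deepcopy(MCP_DOCKER)
    return merged


before = {
    "editor.fontSize": 14,
    "mcp": {"servers": {"other": {"type": "stdio", "command": "other-cmd"}}},
}
after = add_docker_gateway(before)
print(sorted(after["mcp"]["servers"]))  # ['MCP_DOCKER', 'other']
```

Returning a copy rather than mutating the input keeps the original settings intact if the caller decides not to write the result back.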

Gordon gets smarter: Multi-threaded conversations and 5x faster performance

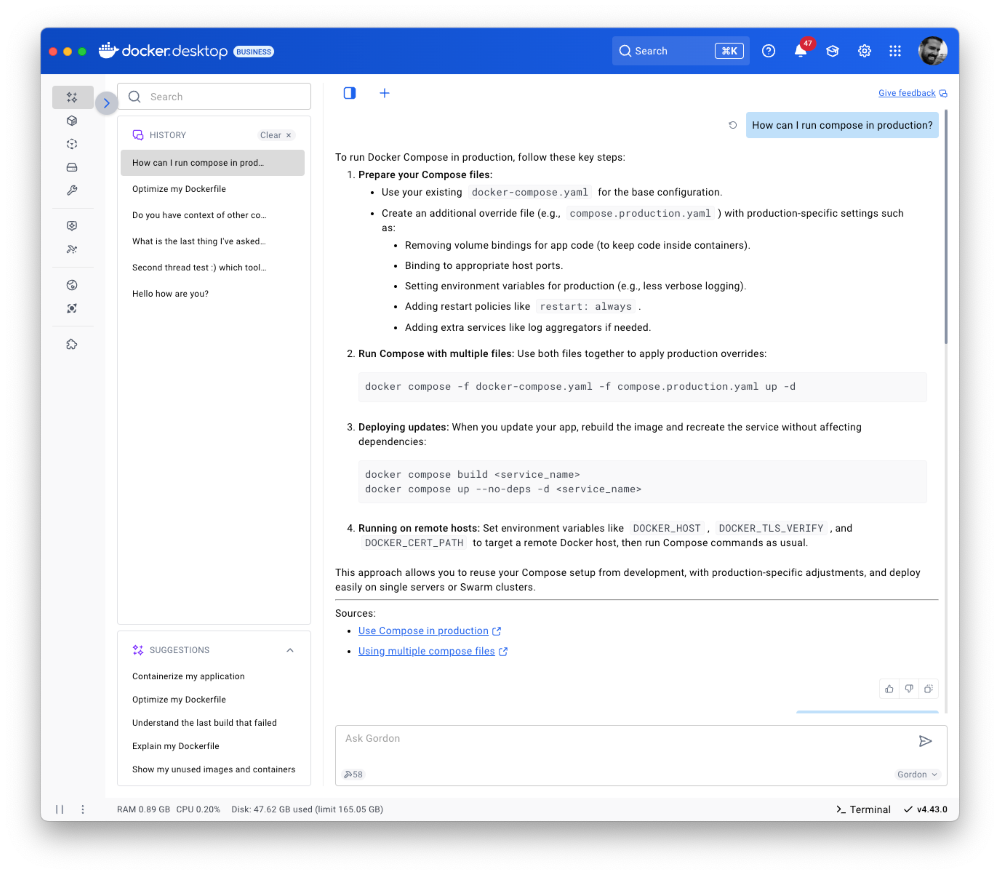

Docker’s AI agent, Gordon, just got a major upgrade: multi-threaded conversation support. You can now run multiple distinct conversations in parallel and switch between topics like debugging a container issue in one thread and refining a Docker Compose setup in another, without losing context. Gordon keeps each thread organized, so you can pick up any conversation exactly where you left off.

Gordon’s new multi-threaded capabilities work hand-in-hand with MCP tools, creating a powerful boost for your development workflow. Use Gordon alongside your favorite MCP tools to get contextual help while keeping conversations organized by task. No more losing focus to context switching!

Figure 7: Gordon’s new multi-threaded support cuts down on context switching and boosts productivity.

We’ve also rolled out major performance upgrades: Gordon now responds 5x faster and delivers more accurate, context-aware answers. With improved understanding of Docker-specific commands, configurations, and troubleshooting scenarios, Gordon is smarter and more helpful than ever!

Compose Bridge: Seamlessly go from local Compose to Kubernetes

We know that developers love Docker Compose for managing local environments: it’s simple and easy to understand. We’re excited to introduce Compose Bridge to Docker Desktop. This powerful new feature helps you transform your local compose.yaml into Kubernetes configuration with a single command.

Translate Compose to Kubernetes in seconds

Compose Bridge gives you a streamlined, flexible way to bring your Compose application to Kubernetes. With smart defaults and options for customization, it’s designed to support both simple setups and complex microservice architectures.

All it takes is:

```shell
docker compose bridge convert
```

And just like that, Compose Bridge generates the following Kubernetes resources from your Compose file:

- A Namespace to isolate your deployment

- A ConfigMap for every Compose config entry

- Deployments for running and scaling your services

- Services for exposed and published ports—including LoadBalancer services for host access

- Secrets for any secrets in your Compose file (encoded for local use)

- NetworkPolicies that reflect your Compose network topology

- PersistentVolumeClaims using Docker Desktop’s hostpath storage

This approach replicates your local dev environment in Kubernetes quickly and accurately, so you can test in production-like conditions, faster.
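As a mental model of that mapping, the Python sketch below walks a Compose file (already parsed into a dict) and lists the Kubernetes objects the bullets above describe: one Namespace per project, one Deployment per service, and so on. It is not Compose Bridge's implementation, and the object names it produces are assumptions. The secret_data helper shows why the generated Secrets suit local use only, assuming "encoded" refers to the base64 encoding Kubernetes uses for Secret data, which is an encoding rather than encryption.

```python
import base64


def plan_resources(compose: dict) -> list:
    """List the Kubernetes objects the bullet list maps a Compose file to.

    Illustrative only: names like "<service>-published" are made up for this
    sketch and need not match Compose Bridge's real output.
    """
    plan = [f"Namespace/{compose.get('name', 'default')}"]
    plan += [f"ConfigMap/{n}" for n in compose.get("configs", {})]
    for name, svc in compose.get("services", {}).items():
        plan.append(f"Deployment/{name}")
        if svc.get("expose"):
            plan.append(f"Service/{name}")                           # in-cluster access to exposed ports
        if svc.get("ports"):
            plan.append(f"Service/{name}-published (LoadBalancer)")  # host access to published ports
    plan += [f"Secret/{n}" for n in compose.get("secrets", {})]
    plan += [f"NetworkPolicy/{n}" for n in compose.get("networks", {})]
    plan += [f"PersistentVolumeClaim/{n}" for n in compose.get("volumes", {})]
    return plan


def secret_data(value: str) -> str:
    """Kubernetes Secret `data` values are base64-encoded: reversible, not encrypted."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


compose = {
    "name": "shop",
    "services": {"web": {"ports": ["8080:80"]}, "db": {"expose": ["5432"]}},
    "secrets": {"db_password": {"file": "./db_password.txt"}},
    "volumes": {"dbdata": {}},
}
for item in plan_resources(compose):
    print(item)
print(secret_data("s3cret"))  # czNjcmV0
```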

Built-in flexibility and upcoming enhancements

Need something more customized? Compose Bridge supports advanced transformation options so you can tweak how services are mapped or tailor the resulting configuration to your infrastructure.

And we’re not stopping here—upcoming releases will allow Compose Bridge to generate Kubernetes config based on your existing cluster setup, helping teams align development with production without rewriting manifests from scratch.

Get started

You can start using Compose Bridge today:

- Download or update Docker Desktop

- Open your terminal and run:

  ```shell
  docker compose bridge convert
  ```

- Review the documentation to explore customization options

Conclusion

Docker Desktop 4.43 introduces practical updates for developers building at the intersection of AI and cloud-native apps. Whether you’re running local models, finding and running secure MCP servers, using Gordon for multi-threaded AI assistance, or converting Compose files to Kubernetes, this release cuts down on complexity so you can focus on shipping. From agentic AI projects to scaling workflows from local to production, you’ll get more control, smoother integration, and fewer manual steps throughout.
