There are at least three reasons why the open source community is paying attention to DocumentDB. The first is that it combines the might of two popular databases: MongoDB (DocumentDB is essentially an open source version of MongoDB) and PostgreSQL. A PostgreSQL extension makes MongoDB’s document functionality available to Postgres; a gateway translates MongoDB’s API to PostgreSQL’s API.

Secondly, the schemaless document store is completely free and accessible through the MIT license. The database utilizes the core of Microsoft Azure Cosmos DB for MongoDB, which has been deployed in numerous production settings over the years. Microsoft donated DocumentDB to the Linux Foundation in August. A DocumentDB Kubernetes Operator, enabling the solution to run in the cloud, at the edge, or on premises, was announced at KubeCon + CloudNativeCon NA in November.

Thirdly, DocumentDB reinforces a number of vital use cases for generative models, intelligent agents and multiagent instances. These applications entail using the database for session history for agents, conversational history for chatbots and semantic caching for vector stores.

According to Karthik Ranganathan, CEO of Yugabyte, which is on the steering committee for the DocumentDB project, these and other uses of the document store benefit greatly from its schema-free design. “Mongo gives this core database functionality, what the engine can do,” Ranganathan said. “And then there’s these languages on top that give the developer the flexibility to model these things.”

Free From Schema Restrictions

The coupling of MongoDB’s technology with PostgreSQL’s is noteworthy because it combines the relational capabilities of the latter, which Ranganathan termed “semi-schematic,” with the schema freedom of the former. Supporting the agent-based and generative model use cases described above without schema limitations is key to getting the most value from these applications. With DocumentDB, users get this advantage at the foundational database layer.

“As everything is going agentic, it’s important to give this capability in the places where you’d be building those applications, as opposed to having a separate way of doing it,” Ranganathan said. For example, an engineer constructing a user profile for an application benefits from the lack of schema: they can add fields for a mobile number, office number, fax number and anything else they think of while coding. “You don’t want a strict schema for that,” Ranganathan said. “You want to just build those fields on the fly.”
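
The profile example can be sketched with plain Python dictionaries standing in for documents. This is only an illustration of schema-free records, not DocumentDB's API; the field names and the `phone_numbers` helper are hypothetical.

```python
# Two user-profile documents in the same collection; neither follows a fixed schema.
profiles = [
    {"_id": 1, "name": "Ada", "mobile": "555-0100"},
    {"_id": 2, "name": "Grace", "mobile": "555-0101", "office": "555-0102", "fax": "555-0103"},
]

PHONE_FIELDS = ("mobile", "office", "fax")  # hypothetical field names


def phone_numbers(profile):
    """Return whichever phone fields this particular document happens to carry."""
    return {key: profile[key] for key in PHONE_FIELDS if key in profile}


# A new field can be added on the fly without touching the other documents.
profiles[0]["pager"] = "555-0104"
```

With a strict relational schema, the extra `pager` field would first need a column migration; here only the one document changes.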

Multiagent Deployments

The lack of schema and general adaptability of the document format are particularly useful for situations in which agents are collaborating. For these applications, DocumentDB can provide session history for the various actions and interactions taking place between agents and resources, and among the agents themselves.

“It’s super, super important for any agent, or any sequence of operations that you work with an agent to accomplish, for the agent to remember what it did,” Ranganathan said. Each of the operations agents perform individually or collectively can be stored in DocumentDB to serve as the memory for agents.

Without such a framework, agents would be constantly restarting their tasks. According to German Eichberger, principal software engineering manager at Microsoft, DocumentDB’s viability for this use case extends beyond memory. “As things progress, we’ll have multiple agents working together on transactions,” Eichberger said. “And they will not agree on something, so they’ll have rollbacks. We feel that doing this in a document will be better because they can all work on the same document and when they are happy, commit it.” Such utility is not dissimilar to the way humans work in Google Docs.
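
The workflow Eichberger describes (several agents editing one document, rolling back on disagreement, committing on agreement) might look roughly like the following in-memory sketch. The `SharedDocument` class and the agent names are hypothetical and do not reflect DocumentDB's transaction API.

```python
import copy


class SharedDocument:
    """A toy in-memory document with a working draft (hypothetical, for illustration)."""

    def __init__(self, data):
        self.committed = copy.deepcopy(data)
        self.draft = copy.deepcopy(data)

    def update(self, agent, field, value):
        # Every agent edits the same draft and records who changed which field.
        self.draft[field] = value
        self.draft.setdefault("history", []).append((agent, field))

    def rollback(self):
        # The agents disagreed: discard the draft and return to the last commit.
        self.draft = copy.deepcopy(self.committed)

    def commit(self):
        # The agents agreed: the draft becomes the new committed state.
        self.committed = copy.deepcopy(self.draft)


doc = SharedDocument({"order": 42, "status": "open"})
doc.update("pricing-agent", "total", 99)
doc.rollback()  # disagreement: the draft change is dropped
doc.update("pricing-agent", "total", 89)
doc.update("shipping-agent", "status", "ready")
doc.commit()  # agreement: both changes are kept
```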

Chatbots and Semantic Caching

There are multiple ways in which DocumentDB underpins other applications of generative models, including Retrieval-Augmented Generation (RAG), vector database deployments and chatbots. For these use cases, the document store can also supply a centralized form of memory for bots discoursing with employees or customers. That way, developers of these systems can avoid situations in which, “If you forget everything we just talked about and just answer the next question, it’s completely out of context and meaningless,” Ranganathan remarked.

DocumentDB can also provide a semantic caching layer that preserves the underlying meaning of jargon, pronouns and other facets of episodic memory so intelligent bots can quickly retrieve this information for timelier, more savvy responses. With DocumentDB, such semantic understanding and memory capabilities are baked into the primary resource engineers rely on — the database.
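
As a rough illustration of the idea behind semantic caching, the sketch below returns a stored answer when a new query's embedding is close enough to a cached one. It is a minimal in-memory example under stated assumptions: the three-number embeddings, the 0.9 threshold, and the `SemanticCache` class are invented for illustration, and a real deployment would use a model-generated embedding and the database's own vector search.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.9):
        self.threshold = threshold  # assumed cut-off for "same meaning"
        self.entries = []  # list of (embedding, answer) pairs

    def put(self, embedding, answer):
        self.entries.append((embedding, answer))

    def get(self, embedding):
        # Find the most similar cached entry, then accept it only above the threshold.
        best = max(self.entries, key=lambda e: cosine(e[0], embedding), default=None)
        if best is not None and cosine(best[0], embedding) >= self.threshold:
            return best[1]
        return None


cache = SemanticCache()
cache.put([1.0, 0.0, 0.1], "Our refund window is 30 days.")
hit = cache.get([0.9, 0.1, 0.1])   # a differently worded question with a similar embedding
miss = cache.get([0.0, 1.0, 0.0])  # an unrelated question
```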

“The history of what we talked about, that becomes extremely important,” Ranganathan said. “There’s different ways to solve it, but it must be in the context of the developer ecosystem. So, rather than give one way to solve it and ask everyone to integrate it that way, just give the way the person expects to build the AI application.”

What Developers Expect

With DocumentDB, developers get the overall flexibility to build applications the way they’d like. The document store is available through PostgreSQL, which is highly extensible and supports an array of workloads, including those involving vector databases and other frameworks for implementing generative models.

Moreover, they’re not constrained by any schema limitations, which spurs creativity and a developer-centric means of building applications. Lastly, it provides a reliable mechanism for agents to collaborate with each other, retain the history of what actions were done to perform a task and come to a consensus before completing it.

The fact that DocumentDB is free, and at the disposal of the open source community for these applications of intelligent agents and more, could further the scope of these deployments. “With AI, the growth is going to be exponential, but you’re not going to get there in one hop,” Ranganathan said. “You’ll get there in a series of rapid iterations. The mathematical way to represent it, it’s like 1.1 to the power of 365. This is a 10% improvement every day, which is like 10 raised to the 17th power, a huge number.”
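
The compounding in the quote can be checked directly. This small sketch is not from the interview; it simply evaluates 1.1 to the power of 365, which comes out near 1.3 × 10^15, somewhat below the power quoted but still an enormous number.

```python
import math

daily_gain = 1.10  # a 10% improvement per day
days = 365

growth = daily_gain ** days
exponent = math.log10(growth)  # the power of ten this corresponds to

print(f"{growth:.2e} (about 10^{exponent:.1f})")
```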

DocumentDB may not be solely responsible for such advancements in statistical AI, but it may have contributed to the day’s improvement in this technology.

The post What DocumentDB Means for Open Source appeared first on The New Stack.

Trust is the most important consideration when you connect AI assistants to real tools. While MCP containerization provides strong isolation and limits the blast radius of malfunctioning or compromised servers, we’re continuously strengthening trust and security across the Docker MCP solutions to further reduce exposure to malicious code. As the MCP ecosystem scales from hundreds to tens of thousands of servers (and beyond), we need stronger mechanisms to prove what code is running, how it was built, and why it’s trusted.

To strengthen trust across the entire MCP lifecycle, from submission to maintenance to daily use, we’ve introduced three key enhancements:

- Commit Pinning: Every Docker-built MCP server in the Docker MCP Registry (the source of truth for the MCP Catalog) is now tied to a specific Git commit, making each release precisely attributable and verifiable.

- Automated, AI-Audited Updates: A new update workflow keeps submitted MCP servers current, while agentic reviews of incoming changes make vigilance scalable and traceable.

- Publisher Trust Levels: We’ve introduced clearer trust indicators in the MCP Catalog, so developers can easily distinguish between official, verified servers and community-contributed entries.

These updates raise the bar on transparency and security for everyone building with and using MCP at scale with Docker.

Commit pins for local MCP servers

Local MCP servers in the Docker MCP Registry are now tied to a specific Git commit with source.commit. That commit hash is a cryptographic fingerprint for the exact revision of the server code that we build and publish. Without this pinning, a reference like latest or a branch name would build whatever happens to be at that reference right now, making builds non-deterministic and vulnerable to supply chain attacks if an upstream repository is compromised. Even Git tags aren’t really immutable since they can be deleted and recreated to point to another commit. By contrast, commit hashes are cryptographically linked to the content they address, making the outcome of an audit of that commit a persistent result.

To make things easier, we’ve updated our authoring tools (like the handy MCP Registry Wizard) to automatically add this commit pin when creating a new server entry, and we now enforce the presence of a commit pin in our CI pipeline (missing or malformed pins will fail validation). This enforcement is deliberate: it’s impossible to accidentally publish a server without establishing clear provenance for the code being distributed. We also propagate the pin into the MCP server image metadata via the org.opencontainers.image.revision label for traceability.
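
A validation step of this kind could look roughly like the sketch below. It is not the registry's actual CI code: the `validate_commit_pin` helper is hypothetical, and the rule that a pin must be a full 40-character lowercase hex hash is an assumption.

```python
import re

# Assumption: a valid pin is a full SHA-1 commit hash (40 lowercase hex characters).
FULL_SHA1 = re.compile(r"[0-9a-f]{40}")


def validate_commit_pin(entry):
    """Return a list of problems with entry['source']['commit']; an empty list means valid."""
    commit = entry.get("source", {}).get("commit")
    if commit is None:
        return ["source.commit is missing"]
    if not isinstance(commit, str) or not FULL_SHA1.fullmatch(commit):
        return ["source.commit must be a full 40-character lowercase hex commit hash"]
    return []


pinned = {"source": {"project": "https://github.com/awslabs/mcp",
                     "commit": "7bace1f81455088b6690a44e99cabb602259ddf7"}}
floating = {"source": {"project": "https://github.com/awslabs/mcp", "commit": "latest"}}
```

Rejecting branch names and tags at this stage is what keeps a floating reference from ever reaching a build.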

Here’s an example of what this looks like in the registry:

# servers/aws-cdk-mcp-server/server.yaml
name: aws-cdk-mcp-server
image: mcp/aws-cdk-mcp-server
type: server
meta:
  category: devops
  tags:
    - aws-cdk-mcp-server
    - devops
about:
  title: AWS CDK
  description: AWS Cloud Development Kit (CDK) best practices, infrastructure as code patterns, and security compliance with CDK Nag.
  icon: https://avatars.githubusercontent.com/u/3299148?v=4
source:
  project: https://github.com/awslabs/mcp
  commit: 7bace1f81455088b6690a44e99cabb602259ddf7
  directory: src/cdk-mcp-server

And here’s an example of how you can verify the commit pin for a published MCP server image:

$ docker image inspect mcp/aws-core-mcp-server:latest \
    --format '{{index .Config.Labels "org.opencontainers.image.revision"}}'
7bace1f81455088b6690a44e99cabb602259ddf7

In fact, if you have the cosign and jq commands available, you can perform additional verifications:

$ COSIGN_REPOSITORY=mcp/signatures cosign verify mcp/aws-cdk-mcp-server --key https://raw.githubusercontent.com/docker/keyring/refs/heads/main/public/mcp/latest.pub | jq -r ' .[].optional["org.opencontainers.image.revision"] '

Verification for index.docker.io/mcp/aws-cdk-mcp-server:latest --
The following checks were performed on each of these signatures:
  - The cosign claims were validated
  - Existence of the claims in the transparency log was verified offline
  - The signatures were verified against the specified public key
7bace1f81455088b6690a44e99cabb602259ddf7

Keeping in sync

Once a server is in the registry, we don’t want maintainers to hand‑edit pins every time they merge something into their upstream repos (they have better things to do with their time). A new automated workflow therefore scans upstreams nightly, bumps source.commit when there’s a newer revision, and opens an auditable PR in the registry to track the incoming upstream changes. This gives you the security benefits of pinning (immutable references to reviewed code) without the maintenance toil. Updates still flow through pull requests, so you get a review gate and approval trail showing exactly what new code is entering your supply chain. The update workflow operates on a per-server basis, with each server update getting its own branch and pull request.
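
The per-server update logic can be sketched as follows. This is an illustrative outline only, not the registry's workflow: the `plan_pin_bumps` function and the `update/<server>` branch naming are hypothetical, the sketch treats any upstream head that differs from the pin as newer, and the commit hashes are borrowed from examples elsewhere in this post.

```python
def plan_pin_bumps(servers, upstream_heads):
    """Return one proposed update per server whose upstream head differs from its pin."""
    plans = []
    for name, entry in sorted(servers.items()):
        current = entry["source"]["commit"]
        head = upstream_heads.get(name)
        if head and head != current:
            plans.append({
                "server": name,
                "branch": f"update/{name}",  # hypothetical naming: one branch and PR per server
                "old_commit": current,
                "new_commit": head,
            })
    return plans


servers = {
    "aws-cdk-mcp-server": {"source": {"commit": "7bace1f81455088b6690a44e99cabb602259ddf7"}},
    "stripe": {"source": {"commit": "f495421c400748b65a05751806cb20293c764233"}},
}
upstream_heads = {
    "aws-cdk-mcp-server": "7bace1f81455088b6690a44e99cabb602259ddf7",  # unchanged upstream
    "stripe": "4eb0089a690cb60c7a30c159bd879ce5c04dd2b8",              # new upstream commits
}
plans = plan_pin_bumps(servers, upstream_heads)
```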

This raises the question, though: how do we know that the incoming changes are safe?

AI in the review loop, humans in charge

Every proposed commit pin bump (and any new local server) will now be subject to an agentic AI security review of the incoming upstream changes. The reviewers (Claude Code and OpenAI Codex) analyze MCP server behavior, flagging risky or malicious code, adding structured reports to the PR, and offering standardized labels such as security-risk:high or security-blocked. Humans remain in the loop for final judgment, but the agents are relentless and scalable.

The challenge: untrusted code means untrusted agents

When you run AI agents in CI to analyze untrusted code, you face a fundamental problem: the agents themselves become attack vectors. They’re susceptible to prompt injection through carefully crafted code comments, file names, or repository structure. A malicious PR could attempt to manipulate the reviewing agent into approving dangerous changes, exfiltrating secrets, or modifying the review process itself.

We can’t trust the code under review, but we also can’t fully trust the agents reviewing it.

Isolated agents

Our Compose-based security reviewer architecture addresses this trust problem by treating the AI agents as untrusted components. The agents run inside heavily isolated Docker containers with tightly controlled inputs and outputs:

- The code being audited is mounted read-only: The agent can analyze code but never modify it. The code it audits is only a temporary copy of the upstream repository, and read-only access additionally means the agent can’t, for example, modify a script that might accidentally be executed outside the container.

- The agent can only write to an isolated output directory: Once the output is written, the CLI wrapper for the agent extracts only specific files (a Markdown report and a text file of labels, both with fixed names), so any malicious scripts or other files written to that directory are deleted.

- The agent lacks direct Internet access: The reviewer container cannot reach external services.

- CI secrets and API credentials never enter the reviewer container: Instead, a lightweight reverse proxy on a separate Docker network accepts requests from the reviewer, injects inference provider API keys on outbound requests, and shields those keys from the containerized code under review.

All of this is encapsulated in a Docker Compose stack and wrapped by a convenient CLI that allows running the agent both locally and in CI.
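
The output-extraction step described in the list above can be sketched like this. It is a minimal illustration rather than Docker's wrapper: the file names `report.md` and `labels.txt` and the `extract_outputs` helper are hypothetical stand-ins for the fixed names the post mentions.

```python
import shutil
import tempfile
from pathlib import Path

ALLOWED_OUTPUTS = ("report.md", "labels.txt")  # hypothetical fixed file names


def extract_outputs(output_dir, dest_dir):
    """Copy only the expected regular files out, then delete the whole output directory."""
    output_dir, dest_dir = Path(output_dir), Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    extracted = []
    for name in ALLOWED_OUTPUTS:
        candidate = output_dir / name
        # Skip symlinks so a fixed name cannot be pointed at some other file.
        if candidate.is_file() and not candidate.is_symlink():
            shutil.copyfile(candidate, dest_dir / name)
            extracted.append(name)
    shutil.rmtree(output_dir)  # anything else the agent wrote is discarded
    return extracted


with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp) / "out"
    out.mkdir()
    (out / "report.md").write_text("# Security Review Report\n")
    (out / "labels.txt").write_text("security-risk:high\n")
    (out / "evil.sh").write_text("echo pwned\n")  # unexpected file, never copied
    kept = extract_outputs(out, Path(tmp) / "kept")
    survivors = sorted(p.name for p in (Path(tmp) / "kept").iterdir())
```

Using an allowlist of names, rather than a blocklist of suspicious files, means the wrapper never has to guess what a malicious output might look like.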

Most importantly, this architecture ensures that even if a malicious PR successfully manipulates the agent through prompt injection, the damage is contained: the agent cannot access secrets, cannot modify code, and cannot communicate with external attackers.

CI integration and GitHub Checks

The review workflow is triggered automatically when a PR is opened or updated. For external PRs, however, we require a manual trigger so that malicious PRs can’t exhaust our inference API credits. The reviews surface directly as GitHub status checks, and each server under review receives dedicated status checks for the analyses performed.

The resulting check status maps to the associated risk level determined by the agent: critical findings result in a failed check that blocks merging, high and medium findings produce neutral warnings, while low and info findings pass. We’re still tuning these criteria (since we’ve asked the agents to be extra pedantic) and currently reviewing the reports manually, but eventually we’ll have the heuristics tuned to a point where we can auto-approve and merge most updated PRs. In the meantime, these reports serve as a scalable “canary in the coal mine”, alerting Docker MCP Registry maintainers to incoming upstream risks — both malicious and accidental.
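
The mapping from risk level to check result described above can be written down as a small lookup. This is a sketch, not the registry's code: the conclusion strings follow the values GitHub's Checks API accepts (`failure`, `neutral`, `success`), while the `check_conclusion` helper is hypothetical.

```python
# Risk level reported by the agent -> conclusion of the GitHub check.
CHECK_CONCLUSION = {
    "critical": "failure",  # blocks merging
    "high": "neutral",      # warning only
    "medium": "neutral",    # warning only
    "low": "success",
    "info": "success",
}


def check_conclusion(risk_level):
    """Translate a risk level such as 'MEDIUM' into a check conclusion."""
    return CHECK_CONCLUSION[risk_level.lower()]
```

An unknown risk level raises `KeyError` here, which errs on the side of failing loudly rather than silently passing a check.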

It’s worth noting that the agent code in the MCP Registry repository is just an example (but a functional one available under an MIT License). The actual security review agent that we run lives in a private repository with additional isolation, but it follows the same architecture.

Reports and risk labels

Here’s an example of a report our automated reviewers produced:

# Security Review Report

## Scope Summary

- **Review Mode:** Differential

- **Repository:** /workspace/input/repository (stripe)

- **Head Commit:** 4eb0089a690cb60c7a30c159bd879ce5c04dd2b8

- **Base Commit:** f495421c400748b65a05751806cb20293c764233

- **Commit Range:** f495421c400748b65a05751806cb20293c764233...4eb0089a690cb60c7a30c159bd879ce5c04dd2b8

- **Overall Risk Level:** MEDIUM

## Executive Summary

This differential review covers 23 commits introducing significant changes to the Stripe Agent Toolkit repository, including: folder restructuring (moving tools to a tools/ directory), removal of evaluation code, addition of new LLM metering and provider packages, security dependency updates, and GitHub Actions workflow permission hardening.

...

The reviewers can produce both differential analyses (looking at the changes brought in by a specific set of upstream commits) and full analyses (looking at entire codebases). We intend to run differential analyses on PRs and full analyses on a regular schedule.

Why behavioral analysis matters

Traditional scanners remain essential, but they tend to focus on things like dependencies with CVEs, syntactical errors (such as a missing break in a switch statement), or memory safety issues (such as dereferencing an uninitialized pointer). MCP requires us to also examine the code’s behavior. Consider the recent malicious postmark-mcp package impersonation: a one‑line backdoor quietly BCC’d outgoing emails to an attacker. Events like this reinforce why our registry couples provenance with behavior‑aware reviews before updates ship.

Real-world results

In our scans so far, we’ve already found several real-world issues in upstream projects (stay tuned for a follow-up blog post), both in MCP servers and with a similar agent in our Docker Hardened Images pipeline. We’re happy to say that we haven’t run across anything malicious so far, just logic errors with security implications, but the granularity and subtlety of issues that these agents can identify is impressive.

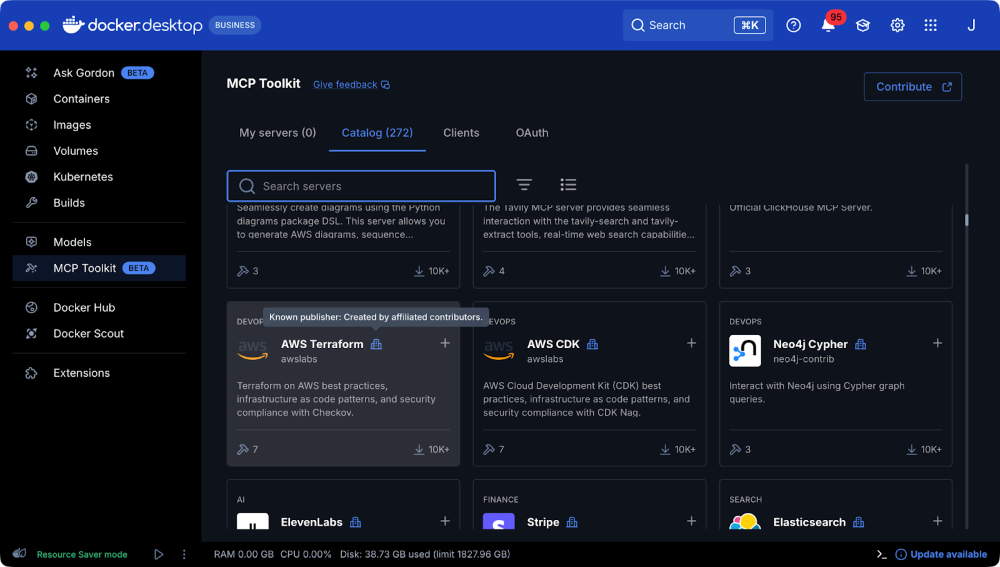

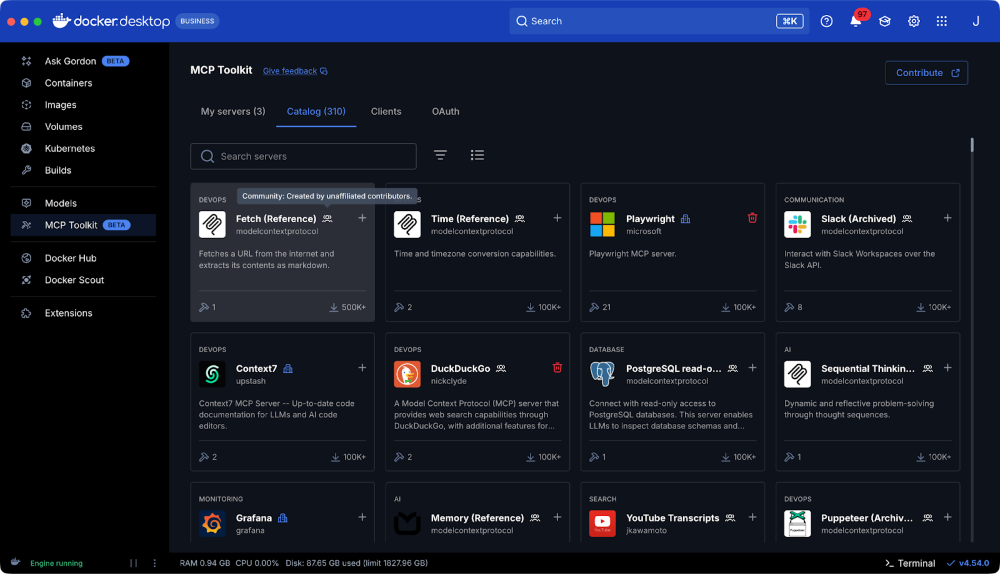

Trust levels in the Docker MCP Catalog

In addition to the aforementioned technical changes, we’ve also introduced publisher trust levels in the Docker MCP Catalog, exposing them in both the Docker MCP Toolkit in Docker Desktop and on Docker MCP Hub. Each server will now have an associated icon indicating whether the server is from a “known publisher” or maintained by the community. In both cases, we’ll still subject the code to review, but these indicators should provide additional context on the origin of the MCP server.

Figure 1: Here’s an example of an MCP server, the AWS Terraform MCP published by a known, trusted publisher

Figure 2: The Fetch MCP server, an example of an MCP community server

What does this mean for the community?

Publishers now benefit from a steady stream of upstream improvements, backed by a documented, auditable trail of code changes. Commit pins make each release precisely attributable, while the nightly updater keeps the catalog current with no extra effort from publishers or maintainers. AI-powered reviewers scale our vigilance, freeing up human reviewers to focus on the edge cases that matter most.

At the same time, developers using MCP servers get clarity about a server’s publisher, making it easier to distinguish between official, community, and third-party contributions. These enhancements strengthen trust and security for everyone contributing to or relying on MCP servers in the Docker ecosystem.

Submit your MCP servers to Docker by following the submission guidance here!

Learn more

- Explore the MCP Catalog: Discover containerized, security-hardened MCP servers.

- Get started with the MCP Toolkit: Run MCP servers easily and securely.

- Find documentation for Docker MCP Catalog and Toolkit.

I've written about Advent of Code in the past, but that was 5 years ago, so this warrants a new post, and there's an extra opportunity, I think.

The code calendar

Advent of Code is a daily coding challenge. Each day comprises two parts based around the same problem, with increasing complexity. There's a wonderful little story that's woven through the days, usually relating to Christmas and rogue elves that you can help.

I use it as a yearly chance to try to bend the jq language into shapes it definitely wasn't intended for. It's another way of clearing out some of the cobwebs that gather in my head (these are my attempts so far).

If you're new to software development or to a particular language, this is a great way of having real problems to solve where there's an absolute answer. There's also a wealth of solutions posted up on the Reddit channel if you (or I) get stuck and need inspiration.

Changes to the programme

As of this year, there are only 12 challenge days (previously it was 24 - one for each day until Christmas Eve). For me this is welcome, partly because I'd usually break by day 16, but also, I'm not sure I want to spend hours tinkering on a hard problem on Christmas Eve (having never gotten past 16, I hadn't yet!).

The global leaderboard has also been removed. I personally never made it anywhere near the leaderboard, but some people would obsess over it. Sadly, with the rise and ease of access of AI tools, the time from challenge release to a correct answer being posted quickly dropped to seconds. That's single digit seconds to solve the problem - which really isn't in the spirit at all.

AI was banned/asked to not join in with the leaderboard, but removing the global leaderboard completely takes the pressure off (along with that feeling of "why can't I solve it that fast").

You can still create a leaderboard for your friends or team - which makes sense.

Speaking of AI

Here's where my final suggestion might be controversial: why not try to solve using AI?

By that, I mean, if you're in a similar camp as me and have been sceptical of AI and a little wary, this might be a good opportunity to dip your toe in. I don't mean to paste in the challenge and have AI spit out the answer - that doesn't help anyone.

It could be seen as a chance to practise controlling the context and the problem space with AI doing the work. Perhaps testing different models from different providers, or trying out local LLMs to see if they can do the work (so perhaps we could be a little more in control of the power usage).

As for me, I'm having to actively disable Copilot in VS Code when solving Advent of Code, because it's so desperate to help me!

Originally published on Remy Sharp's b:log

Vite 8 Beta: The Rolldown-powered Vite

December 3, 2025

TL;DR: The first beta of Vite 8, powered by Rolldown, is now available. Vite 8 ships significantly faster production builds and unlocks future improvement possibilities. You can try the new release by upgrading vite to version 8.0.0-beta.0 and reading the migration guide.

We're excited to release the first beta of Vite 8. This release unifies the underlying toolchain and brings more consistent behavior, alongside significant build performance improvements. Vite now uses Rolldown as its bundler, replacing the previous combination of esbuild and Rollup.

A new bundler for the web

Vite previously relied on two bundlers to meet differing requirements for development and production builds:

- esbuild for fast compilation during development

- Rollup for bundling, chunking, and optimizing production builds

This approach lets Vite focus on developer experience and orchestration instead of reinventing parsing and bundling. However, maintaining two separate bundling pipelines introduced inconsistencies: separate transformation pipelines, different plugin systems and a growing amount of glue code to keep bundling behavior aligned between development and production.

To solve this, the VoidZero team built Rolldown, a next-generation bundler created with the goal of being used in Vite. It is designed for:

- Performance: Rolldown is written in Rust and operates at native speed. It matches esbuild’s performance level and is 10–30× faster than Rollup.

- Compatibility: Rolldown supports the same plugin API as Rollup and Vite. Most Vite plugins work out of the box with Vite 8.

- More Features: Rolldown unlocks more advanced features for Vite, including full bundle mode, more flexible chunk split control, module-level persistent cache, Module Federation, and more.

Unifying the toolchain

The impact of Vite’s bundler swap goes beyond performance. Bundlers leverage parsers, resolvers, transformers, and minifiers. Rolldown uses Oxc, another project led by VoidZero, for these purposes.

That makes Vite the entry point to an end-to-end toolchain maintained by the same team: The build tool (Vite), the bundler (Rolldown) and the compiler (Oxc).

This alignment ensures behavior consistency across the stack, and allows us to rapidly adopt and align with new language specifications as JavaScript continues to evolve. It also unlocks a wide range of improvements that previously couldn’t be done by Vite alone. For example, we can leverage Oxc’s semantic analysis to perform better tree-shaking in Rolldown.

How Vite migrated to Rolldown

The migration to a Rolldown-powered Vite is a foundational change. Therefore, our team took deliberate steps to implement it without sacrificing stability or ecosystem compatibility.

First, a separate rolldown-vite package was released as a technical preview. This allowed us to work with early adopters without affecting the stable version of Vite. Early adopters benefited from Rolldown’s performance gains while providing valuable feedback. Highlights:

- Linear's production build times were reduced from 46s to 6s

- Mercedes-Benz.io cut their build time down by up to 38%

- Beehiiv reduced their build time by 64%

Next, we set up a test suite for validating key Vite plugins against rolldown-vite. This CI job helped us catch regressions and compatibility issues early, especially for frameworks and meta-frameworks such as SvelteKit, react-router and Storybook.

Lastly, we built a compatibility layer to help migrate developers from Rollup and esbuild options to the corresponding Rolldown options.

As a result, there is a smooth migration path to Vite 8 for everyone.

Migrating to Vite 8 Beta

Since Vite 8 touches the core build behavior, we focused on keeping the configuration API and plugin hooks unchanged. We created a migration guide to help you upgrade.

There are two available upgrade paths:

- Direct Upgrade: Update vite in package.json and run the usual dev and build commands.

- Gradual Migration: Migrate from Vite 7 to the rolldown-vite package, and then to Vite 8. This allows you to identify incompatibilities or issues isolated to Rolldown without other changes to Vite. (Recommended for larger or complex projects)

IMPORTANT

If you are relying on specific Rollup or esbuild options, you might need to make some adjustments to your Vite config. Please refer to the migration guide for detailed instructions and examples. As with all non-stable, major releases, thorough testing is recommended after upgrading to ensure everything works as expected. Please make sure to report any issues.

If you use a framework or tool that uses Vite as a dependency, for example Astro, Nuxt, or Vitest, you have to override the vite dependency in your package.json, which works slightly differently depending on your package manager:

npm:

{
  "overrides": {
    "vite": "8.0.0-beta.0"
  }
}

Yarn:

{
  "resolutions": {
    "vite": "8.0.0-beta.0"
  }
}

pnpm:

{
  "pnpm": {
    "overrides": {
      "vite": "8.0.0-beta.0"
    }
  }
}

Bun:

{
  "overrides": {
    "vite": "8.0.0-beta.0"
  }
}

After adding these overrides, reinstall your dependencies and start your development server or build your project as usual.
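
If you would rather script the change than edit package.json by hand, the override can be added programmatically. The sketch below uses Python purely for illustration; the `add_vite_override` helper is hypothetical, and the mapping of package managers to fields (npm and Bun use `overrides`, Yarn uses `resolutions`, pnpm nests `overrides` under `pnpm`) is our reading of the snippets above, so check your package manager's documentation before relying on it.

```python
import json

# Where each package manager looks for dependency overrides in package.json.
OVERRIDE_PATHS = {
    "npm": ["overrides"],
    "yarn": ["resolutions"],
    "pnpm": ["pnpm", "overrides"],
    "bun": ["overrides"],
}


def add_vite_override(package_json, manager, version="8.0.0-beta.0"):
    """Return a copy of the parsed package.json with the vite override added."""
    result = json.loads(json.dumps(package_json))  # simple deep copy of JSON data
    node = result
    for key in OVERRIDE_PATHS[manager]:
        node = node.setdefault(key, {})
    node["vite"] = version
    return result


original = {"name": "my-app", "devDependencies": {"astro": "^5.0.0"}}
updated = add_vite_override(original, "pnpm")
print(json.dumps(updated, indent=2))
```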

Additional Features in Vite 8

In addition to shipping with Rolldown, Vite 8 comes with:

- Built-in tsconfig paths support: Developers can enable it by setting resolve.tsconfigPaths to true. This feature has a small performance cost and is not enabled by default.

- emitDecoratorMetadata support: Vite 8 now has built-in automatic support for TypeScript's emitDecoratorMetadata option. See the Features page for more details.

Looking Ahead

Speed has always been a defining feature for Vite. The integration with Rolldown and, by extension, Oxc means JavaScript developers benefit from Rust’s speed. Upgrading to Vite 8 should result in performance gains simply from using Rust.

We are also excited to ship Vite’s Full Bundle Mode soon, which drastically improves Vite’s dev server speed for large projects. Preliminary results show 3× faster dev server startup, 40% faster full reloads, and 10× fewer network requests.

Another defining Vite feature is the plugin ecosystem. We want JavaScript developers to continue extending and customizing Vite in JavaScript, the language they’re familiar with, while benefiting from Rust’s performance gains. Our team is collaborating with the VoidZero team to accelerate JavaScript plugin usage in these Rust-based systems.

Upcoming optimizations that are currently experimental:

- Raw AST transfer. Allow JavaScript plugins to access the Rust-produced AST with minimal overhead.

- Native MagicString transforms. Simple custom transforms with logic in JavaScript but computation in Rust.

Connect with us

If you've tried Vite 8 beta, then we'd love to hear your feedback! Please report any issues or share your experience:

- Discord: Join our community server for real-time discussions

- GitHub: Share feedback on GitHub discussions

- Issues: Report issues on the rolldown-vite repository for bugs and regressions

- Wins: Share your improved build times in the rolldown-vite-perf-wins repository

We appreciate all reports and reproduction cases. They help guide us towards the release of a stable 8.0.0.