Advanced Function Calling and Multi-Agent Systems with Small Language Models in Foundry Local

In our previous exploration of function calling with Small Language Models, we demonstrated how to enable local SLMs to interact with external tools using a text-parsing approach with regex patterns. While that method worked, it required manual extraction of function calls from the model's output; functional but fragile.

Today, I'm excited to show you something far more powerful: Foundry Local now supports native OpenAI-compatible function calling with select models. This update transforms how we build agentic AI systems locally, making it remarkably straightforward to create sophisticated multi-agent architectures that rival cloud-based solutions. What once required careful prompt engineering and brittle parsing now works seamlessly through standardized API calls.

We'll build a complete multi-agent quiz application that demonstrates both the elegance of modern function calling and the power of coordinated agent systems. The full source code is available in this GitHub repository, but rather than walking through every line of code, we'll focus on how the pieces work together and what you'll see when you run it.

What's New: Native Function Calling in Foundry Local

In our guide to running Phi-4 locally with Foundry Local, we ran powerful language models on our own machine. The latest version of Foundry Local now supports native function calling for models specifically trained with this capability.

The key difference is architectural. In our weather assistant example, we manually parsed JSON strings from the model's text output using regex patterns and, frankly speaking, spent a lot of time testing and tweaking the system prompt for the umpteenth time 🙄. Now, when you provide tool definitions to supported models, they return structured tool_calls objects that you can directly execute.

Currently, this native function calling capability is available for the Qwen 2.5 family of models in Foundry Local. For this tutorial, we're using the 7B variant, which strikes a great balance between capability and resource requirements.

Quick Setup

Getting started requires just a few steps. First, ensure you have Foundry Local installed. On Windows, use:

winget install Microsoft.FoundryLocal

On macOS, use:

brew install microsoft/foundrylocal/foundrylocal

You'll need version 0.8.117 or later.

Install the Python dependencies in the requirements file, then start your model. The first run will download approximately 4GB:

foundry model run qwen2.5-7b-instruct-cuda-gpu

If you don't have a compatible GPU, use the CPU version instead, or specify any other Qwen 2.5 variant that suits your hardware. To use a different model in the application, change the DEFAULT_MODEL_ALIAS variable in utils/foundry_client.py.
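For orientation, here is a minimal sketch of what a connection helper such as utils/foundry_client.py might look like. It assumes the foundry-local-sdk and openai Python packages are installed; the `connect` function name and the `qwen2.5-7b` alias value are illustrative assumptions, not the repository's actual code.

```python
# Hypothetical connection helper; names and the alias value are assumptions.
DEFAULT_MODEL_ALIAS = "qwen2.5-7b"  # assumed alias; change it to suit your hardware


def connect(alias: str = DEFAULT_MODEL_ALIAS):
    """Return an OpenAI-compatible client and the concrete model id for `alias`."""
    # Imported lazily so this module can be loaded without the SDKs installed.
    from foundry_local import FoundryLocalManager
    from openai import OpenAI

    # The manager starts the Foundry Local service if needed and loads the model.
    manager = FoundryLocalManager(alias)
    client = OpenAI(base_url=manager.endpoint, api_key=manager.api_key)
    model_id = manager.get_model_info(alias).id
    return client, model_id
```

Calling `connect()` would give you a client whose `chat.completions.create` method talks to the local model instead of a cloud endpoint.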

Keep this terminal window open. The model needs to stay running while you develop and test your application.

Understanding the Architecture

Before we dive into running the application, let's understand what we're building. Our quiz system follows a multi-agent architecture where specialized agents handle distinct responsibilities, coordinated by a central orchestrator.

The flow works like this: when you ask the system to generate a quiz about photosynthesis, the orchestrator agent receives your message, understands your intent, and decides which tool to invoke. It doesn't try to generate the quiz itself. Instead, it calls a tool that creates a specialist QuizGeneratorAgent focused solely on producing well-structured quiz questions. A second specialist, the ReviewAgent, later reviews the quiz with you.

The project structure reflects this architecture:

quiz_app/
├── agents/          # Base agent + specialist agents
├── tools/           # Tool functions the orchestrator can call
├── utils/           # Foundry client connection
├── data/
│   ├── quizzes/     # Generated quiz JSON files
│   └── responses/   # User response JSON files
└── main.py          # Application entry point

The orchestrator coordinates three main tools: generate_new_quiz, launch_quiz_interface, and review_quiz_interface. Each tool either creates a specialist agent or launches an interactive interface (Gradio), handling the complexity so the orchestrator can focus on routing and coordination.

How Native Function Calling Works

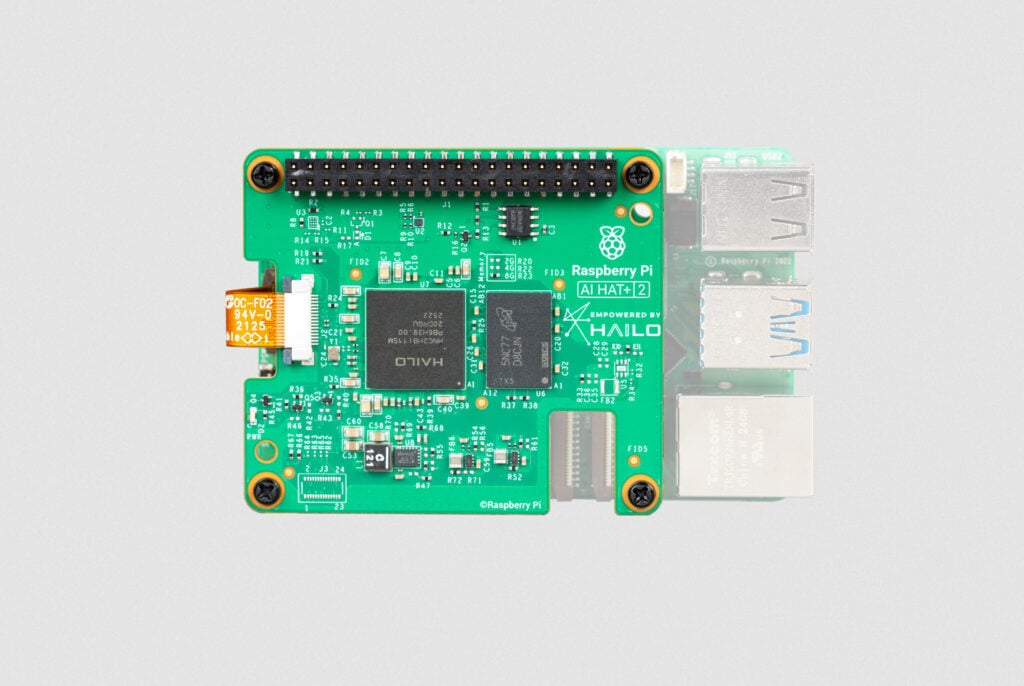

When you initialize the orchestrator agent in main.py, you provide two things: tool schemas that describe your functions to the model, and a mapping of function names to actual Python functions. The schemas follow the OpenAI function calling specification, describing each tool's purpose, parameters, and when it should be used.
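To make those two pieces concrete, here is a rough sketch of a schema list and a name-to-function mapping for one of the tools. The schema layout follows the OpenAI function calling format; the parameter names (`topic`, `num_questions`), the descriptions, and the stub body are assumptions for illustration and may differ from the repository.

```python
import json


# Hypothetical stand-in for the real tool, which would create a
# QuizGeneratorAgent and write a quiz file.
def generate_new_quiz(topic: str, num_questions: int = 5) -> str:
    return json.dumps({"status": "ok", "topic": topic, "num_questions": num_questions})


# Tool schemas in the OpenAI function calling format.
TOOL_SCHEMAS = [
    {
        "type": "function",
        "function": {
            "name": "generate_new_quiz",
            "description": "Generate a new quiz on a topic and save it to disk.",
            "parameters": {
                "type": "object",
                "properties": {
                    "topic": {"type": "string", "description": "Subject of the quiz"},
                    "num_questions": {"type": "integer", "description": "Number of questions"},
                },
                "required": ["topic"],
            },
        },
    }
]

# Maps the name the model emits to the Python callable that implements it.
TOOL_FUNCTIONS = {"generate_new_quiz": generate_new_quiz}
```

The schema list is what gets passed as the `tools` argument of a chat completion request, while the mapping stays on your side and is only used to look up the function the model asked for.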

Here's what happens when you send a message to the orchestrator:

The agent calls the model with your message and the tool schemas. If the model determines a tool is needed, it returns a structured tool_calls attribute containing the function name and arguments as a proper object—not as text to be parsed. Your code executes the tool, creates a message with "role": "tool" containing the result, and sends everything back to the model. The model can then either call another tool or provide its final response.

The critical insight is that the model itself controls this flow. The base agent simply runs a while loop, and each iteration represents the model examining the current state, deciding whether it needs more information, and either proceeding with another tool call or providing its final answer. You're not manually orchestrating when tools get called; the model makes those decisions based on the conversation context.
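The loop described above can be sketched as follows. To keep the example runnable without a model, it uses plain dictionaries in the OpenAI chat message shape and a scripted `fake_chat` function in place of a real completion call; with the OpenAI SDK the reply would be an object with attributes rather than a dict. The names `run_agent` and `fake_chat`, and the `max_turns` safety limit, are assumptions for illustration.

```python
import json
from typing import Callable


def run_agent(chat: Callable[[list], dict], tools: dict, user_message: str,
              max_turns: int = 5) -> str:
    """Keep calling the model until it answers without requesting a tool."""
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_turns):  # bounded version of the agent's while loop
        reply = chat(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply["content"]  # no tool requested: this is the final answer
        for call in tool_calls:
            name = call["function"]["name"]
            # Arguments arrive as a JSON string and must be decoded first.
            args = json.loads(call["function"]["arguments"])
            result = tools[name](**args)
            messages.append(
                {"role": "tool", "tool_call_id": call["id"], "content": str(result)}
            )
    return "Stopped: too many tool calls."


def fake_chat(messages: list) -> dict:
    """Scripted model: request one tool call, then answer using its result."""
    if messages[-1]["role"] == "tool":
        return {"role": "assistant", "content": f"Done: {messages[-1]['content']}"}
    arguments = json.dumps({"topic": "photosynthesis", "num_questions": 5})
    return {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {"name": "generate_new_quiz", "arguments": arguments},
        }],
    }


def generate_new_quiz(topic: str, num_questions: int) -> str:
    return f"saved {num_questions} questions about {topic}"


answer = run_agent(fake_chat, {"generate_new_quiz": generate_new_quiz},
                   "Generate a 5 question quiz about photosynthesis")
print(answer)  # Done: saved 5 questions about photosynthesis
```

Swapping `fake_chat` for a function that calls the local model with the tool schemas attached is the only change needed to turn this into a working agent loop.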

Seeing It In Action

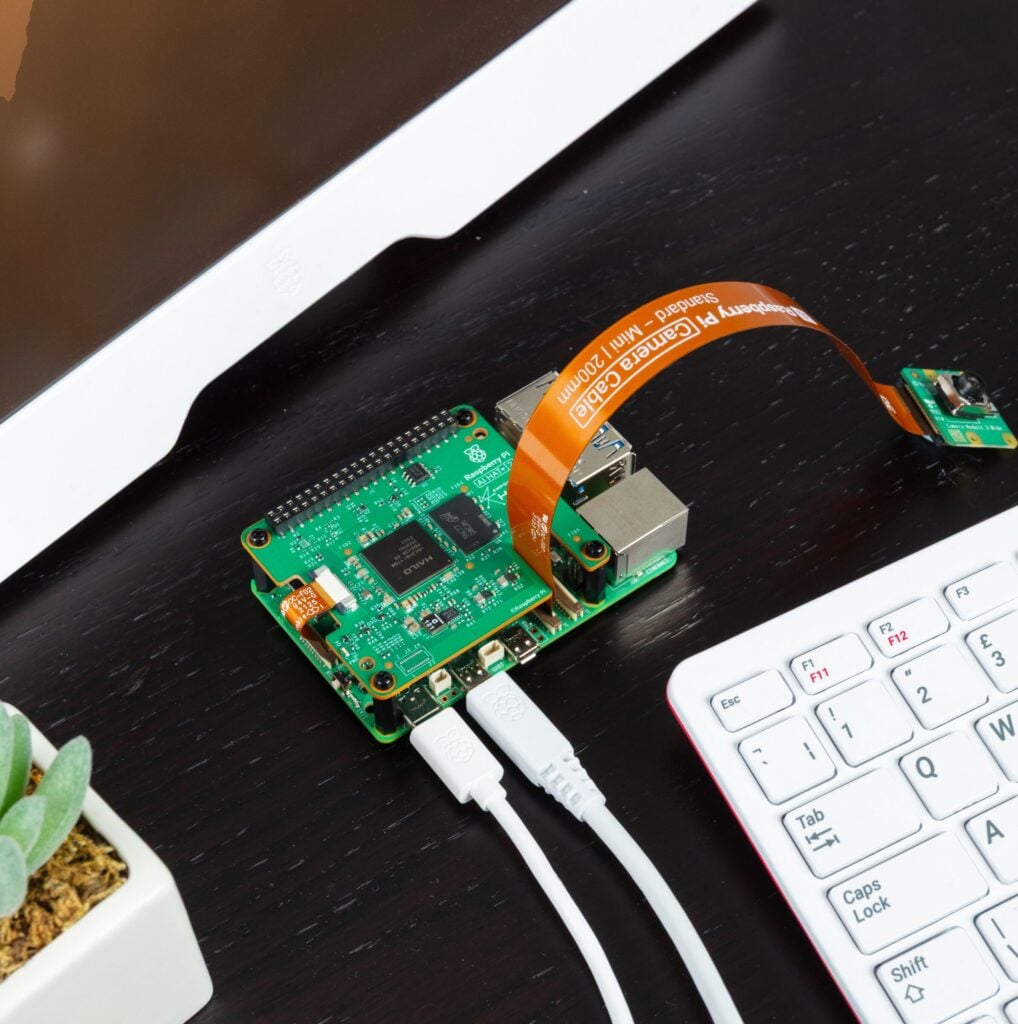

Let's walk through a complete session to see how these pieces work together. When you run python main.py, you'll see the application connect to Foundry Local and display a welcome banner.

Now type a request like "Generate a 5 question quiz about photosynthesis" and watch what happens in your console.

The orchestrator recognized your intent, selected the generate_new_quiz tool, and extracted the topic and number of questions from your natural language request. Behind the scenes, this tool instantiated a QuizGeneratorAgent with a focused system prompt designed specifically for creating quiz JSON. The agent used a low temperature setting to ensure consistent formatting and generated questions that were saved to the data/quizzes folder.
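As a rough illustration of the last step, here is how a tool might validate the specialist's JSON output before saving it. The quiz layout used here (`topic`, `questions`, `options`, `answer`) and the function names are assumptions; the repository may structure its files differently.

```python
import json
import tempfile
from pathlib import Path


def parse_quiz(raw: str, expected_count: int) -> dict:
    """Decode the model's JSON output and check its basic structure."""
    quiz = json.loads(raw)
    questions = quiz.get("questions", [])
    if len(questions) != expected_count:
        raise ValueError(f"expected {expected_count} questions, got {len(questions)}")
    for q in questions:
        if q["answer"] not in q["options"]:
            raise ValueError(f"answer is not one of the options: {q['question']}")
    return quiz


def save_quiz(quiz: dict, folder: Path, name: str) -> Path:
    """Write the quiz as pretty-printed JSON and return the file path."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{name}.json"
    path.write_text(json.dumps(quiz, indent=2), encoding="utf-8")
    return path


# Example model output in the assumed layout.
raw_output = json.dumps({
    "topic": "photosynthesis",
    "questions": [{
        "question": "Which gas do plants absorb during photosynthesis?",
        "options": ["Oxygen", "Carbon dioxide", "Nitrogen"],
        "answer": "Carbon dioxide",
    }],
})

quiz = parse_quiz(raw_output, expected_count=1)
# A temporary directory stands in for the project's data/quizzes folder.
saved = save_quiz(quiz, Path(tempfile.mkdtemp()) / "data" / "quizzes", "photosynthesis")
```

Validating before saving means a malformed generation fails inside the tool, where it can be retried or reported, rather than later in the quiz interface.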

This demonstrates the first layer of the multi-agent architecture: the orchestrator doesn't generate quizzes itself. It recognizes that this task requires specialized knowledge about quiz structure and delegates to an agent built specifically for that purpose.

Now ask to take the quiz by typing "Take the quiz." The orchestrator calls a different tool, and a Gradio server is launched. Click the link to open your quiz questions in a browser window. This tool demonstrates how function calling can trigger complex interactions: it reads the quiz JSON, dynamically builds a user interface with radio buttons for each question, and handles the submission flow.

After you answer the questions and click submit, the interface saves your responses to the data/responses folder and closes the Gradio server. The orchestrator then reports completion.

The system now has two JSON files: one containing the quiz questions with correct answers, and another containing your responses. This separation of concerns is important—the quiz generation phase doesn't need to know about response collection, and the response collection doesn't need to know how quizzes are created. Each component has a single, well-defined responsibility.

Now request a review. The orchestrator calls the third tool, review_quiz_interface.

A new chat interface opens, and here's where the multi-agent architecture really shines. The ReviewAgent is instantiated with full context about both the quiz questions and your answers. Its system prompt includes a formatted view of each question, the correct answer, your answer, and whether you got it right. This means when the interface opens, you immediately see personalized feedback.
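A system prompt like the one described could be assembled along these lines. This is only a sketch: the quiz and response layouts (responses keyed by question number as a string) and the prompt wording are assumptions, not the repository's format.

```python
def build_review_prompt(quiz: dict, responses: dict) -> str:
    """Format every question with the correct and given answers for the tutor."""
    lines = ["You are a friendly tutor reviewing a quiz with a student.", ""]
    correct = 0
    for i, q in enumerate(quiz["questions"], start=1):
        given = responses.get(str(i), "(no answer)")
        is_right = given == q["answer"]
        correct += int(is_right)
        lines += [
            f"Q{i}: {q['question']}",
            f"  Correct answer: {q['answer']}",
            f"  Student answer: {given}",
            f"  Result: {'correct' if is_right else 'incorrect'}",
        ]
    lines += ["", f"Score: {correct}/{len(quiz['questions'])}"]
    return "\n".join(lines)


quiz = {"questions": [
    {"question": "Which gas do plants absorb?", "answer": "Carbon dioxide"},
    {"question": "Where does photosynthesis occur?", "answer": "Chloroplasts"},
]}
responses = {"1": "Carbon dioxide", "2": "Mitochondria"}

prompt = build_review_prompt(quiz, responses)
print(prompt)
```

Because the grading is done in ordinary Python before the agent starts, the model only has to explain the results, not compute them.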

The Multi-Agent Pattern

Multi-agent architectures solve complex problems by coordinating specialized agents rather than building monolithic systems. This pattern is particularly powerful for local SLMs. A coordinator agent routes tasks to specialists, each optimized for narrow domains with focused system prompts and specific temperature settings. You can use a 1.7B model for structured data generation, a 7B model for conversations, and a 4B model for reasoning, all orchestrated by a lightweight coordinator. This is more efficient than requiring one massive model for everything.

Foundry Local's native function calling makes this straightforward. The coordinator reliably invokes tools that instantiate specialists, with structured responses flowing back through proper tool messages. The model manages the coordination loop—deciding when it needs another specialist, when it has enough information, and when to provide a final answer.

In our quiz application, the orchestrator routes user requests but never tries to be an expert in quiz generation, interface design, or tutoring. The QuizGeneratorAgent focuses solely on creating well-structured quiz JSON using constrained prompts and low temperature. The ReviewAgent handles open-ended educational dialogue with embedded quiz context and higher temperature for natural conversation. The tools abstract away file management, interface launching, and agent instantiation; the orchestrator just knows "this tool launches quizzes" without needing implementation details.

This pattern scales well. If you wanted to add a new capability like study guides or flashcards, you could simply create a new tool or specialist. The orchestrator gains the capability as soon as you add the new tool schema, without any changes to its core logic. This same pattern powers production systems with dozens of specialists handling retrieval, reasoning, execution, and monitoring, each excelling in its domain while the coordinator ensures seamless collaboration.
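One way to keep new tools cheap to add is a small registration decorator that records the schema and the function together. This is a sketch of that idea, not how the repository is organised; `register_tool` and `generate_flashcards` are hypothetical names.

```python
from typing import Callable

TOOL_SCHEMAS: list[dict] = []
TOOL_FUNCTIONS: dict[str, Callable] = {}


def register_tool(description: str, parameters: dict):
    """Record a function's schema and callable so the orchestrator can use it."""
    def decorator(func: Callable) -> Callable:
        TOOL_SCHEMAS.append({
            "type": "function",
            "function": {
                "name": func.__name__,
                "description": description,
                "parameters": parameters,
            },
        })
        TOOL_FUNCTIONS[func.__name__] = func
        return func
    return decorator


@register_tool(
    description="Create flashcards for a topic.",
    parameters={
        "type": "object",
        "properties": {
            "topic": {"type": "string"},
            "count": {"type": "integer"},
        },
        "required": ["topic"],
    },
)
def generate_flashcards(topic: str, count: int = 10) -> str:
    # Placeholder body; a real tool would instantiate a specialist agent here.
    return f"created {count} flashcards about {topic}"
```

With this in place, adding a capability means writing one decorated function; the orchestrator's loop and prompt stay untouched.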

Why This Matters

The transition from text-parsing to native function calling enables a fundamentally different approach to building AI applications. With text parsing, you're constantly fighting against the unpredictability of natural language output. A model might decide to explain why it's calling a function before outputting the JSON, or it might format the JSON slightly differently than your regex expects, or it might wrap it in markdown code fences. Native function calling eliminates this entire class of problems. The model is trained to output tool calls as structured data, separate from its conversational responses.
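The failure modes above are easy to reproduce. The following sketch uses a deliberately strict regex of the kind a text-parsing approach might rely on; the pattern, the `parse_call` helper, and the `get_weather` call format are hypothetical and only meant to show how small variations in output break extraction.

```python
import json
import re

# Expects the whole output to be exactly one JSON object in a fixed layout.
STRICT_PATTERN = re.compile(
    r'^\{"function":\s*"(\w+)",\s*"arguments":\s*(\{.*\})\}$', re.DOTALL
)


def parse_call(text: str):
    """Return (function_name, arguments) or None if the text does not match."""
    match = STRICT_PATTERN.match(text.strip())
    if match is None:
        return None
    return match.group(1), json.loads(match.group(2))


fence = "`" * 3  # built at runtime to avoid nesting code fences in this example

clean = '{"function": "get_weather", "arguments": {"city": "Lagos"}}'
chatty = "Sure, I'll check the weather.\n" + clean
fenced = f"{fence}json\n{clean}\n{fence}"

print(parse_call(clean))   # ('get_weather', {'city': 'Lagos'})
print(parse_call(chatty))  # None
print(parse_call(fenced))  # None
```

You can loosen the pattern to handle each case, but every fix invites another edge case, which is exactly the maintenance burden that structured tool_calls remove.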

The multi-agent aspect builds on this foundation. Because function calling is reliable, you can confidently delegate to specialist agents knowing they'll integrate smoothly with the orchestrator. You can chain tool calls—the orchestrator might generate a quiz, then immediately launch the interface to take it, based on a single user request like "Create and give me a quiz about machine learning." The model handles this orchestration intelligently because the tool results flow back as structured data it can reason about.

Running everything locally through Foundry Local adds another dimension of value, and I am genuinely excited about this (hopefully, the Phi models get this functionality soon). You can experiment freely, iterate quickly, and deploy solutions that run entirely on your infrastructure. For educational applications like our quiz system, this means students can interact with the AI tutor as much as they need without cost concerns.

Getting Started With Your Own Multi-Agent System

The complete code for this quiz application is available in the GitHub repository, and I encourage you to clone it and experiment. Try modifying the tool schemas to see how the orchestrator's behavior changes. Add a new specialist agent for a different task. Adjust the system prompts to see how agent personalities and capabilities shift.

Think about the problems you're trying to solve. Could they benefit from having different specialists handling different aspects? A customer service system might have agents for order lookup, refund processing, and product recommendations. A research assistant might have agents for web search, document summarization, and citation formatting. A coding assistant might have agents for code generation, testing, and documentation.

Start small, perhaps with two or three specialist agents for a specific domain. Watch how the orchestrator learns to route between them based on the tool descriptions you provide. You'll quickly see opportunities to add more specialists, refine the existing ones, and build increasingly sophisticated systems that leverage the unique strengths of each agent while presenting a unified, intelligent interface to your users.

In the next entry, we will deploy our quiz app, which will mark the end of our journey through Foundry and SLMs over these past few weeks. I hope you are as excited as I am!

Thanks for reading.